IceCube Maintenance & Operations FY14 Annual Report: Oct 2013 – Sept 2014

Cooperative Agreement: ANT-0937462 August 1, 2014

IceCube Maintenance and Operations

Fiscal Year 2014 Annual Report

October 1, 2013 – September 30, 2014

Submittal Date: August 1, 2014

____________________________________

University of Wisconsin–Madison

This report is submitted in accordance with the reporting requirements set forth in the IceCube Maintenance and Operations Cooperative Agreement, ANT-0937462.

Foreword

This FY2014 Annual Report is submitted as required by the NSF Cooperative Agreement ANT-0937462. This report covers the 12-month period beginning October 1, 2013 and concluding September 30, 2014. The status information provided in the report covers actual common fund contributions received through March 31, 2014 and the full 86-string IceCube detector (IC86) performance through July 1, 2014.

Table of Contents

Foreword

Section I – Financial/Administrative Performance

Section II – Maintenance and Operations Status and Performance

Detector Operations and Maintenance

Computing and Data Management

Data Release

Program Management

Section III – Project Governance and Upcoming Events

Section I – Financial/Administrative Performance

The University of Wisconsin–Madison is maintaining three separate accounts with supporting charge numbers for collecting IceCube M&O funding and reporting related costs: 1) NSF M&O Core account, 2) U.S. Common Fund account, and 3) Non-U.S. Common Fund account.

The second FY2014 installment of $3,450,000 was released to UW–Madison to cover the costs of maintenance and operations during the second half of FY2014. $470,925 was directed to the U.S. Common Fund account, and $2,979,075 was directed to the IceCube M&O Core account (Figure 1).

| FY2014 | Funds Awarded to UW for Oct 1, 2013 – March 31, 2014 | Funds Awarded to UW for Apr 1, 2014 – Sept 30, 2014 |
|---|---|---|
| IceCube M&O Core account | $2,979,075 | $2,979,075 |
| U.S. Common Fund account | $470,925 | $470,925 |
| TOTAL NSF Funds | $3,450,000 | $3,450,000 |

Figure 1: NSF IceCube M&O Funds

Of the IceCube M&O FY2014 Core funds, $1,015,212 were committed to seven U.S. subawardee institutions. The institutions submit invoices to receive reimbursement against their actual IceCube M&O costs. Deliverable commitments made by each subawardee institution are monitored throughout the year. Figure 2 summarizes M&O responsibilities and total FY2014 funds for the seven subawardee institutions.


| Institution | Major Responsibilities | Funds |
|---|---|---|
| Lawrence Berkeley National Laboratory | DAQ maintenance, computing infrastructure | $79,984 |
| Pennsylvania State University | Computing and data management, simulation production | $40,878 |
| University of California at Berkeley | Detector calibration, monitoring coordination | $92,973 |
| University of Delaware, Bartol Institute | IceTop calibration, monitoring and maintenance | $206,796 |
| University of Maryland at College Park | IceTray software framework, online filter, simulation software | $506,616 |
| University of Alabama at Tuscaloosa | Detector calibration, reconstruction and analysis tools | $43,396 |
| Georgia Institute of Technology | TFT coordination | $44,569 |
| Total | | $1,015,212 |

Figure 2: IceCube M&O Subawardee Institutions – FY2014 Major Responsibilities and Funding

IceCube NSF M&O Award Budget, Actual Cost and Forecast

The current IceCube NSF M&O 5-year award was established at the beginning of Federal Fiscal Year 2011, on October 1, 2010. The following table presents the financial status as of June 30, 2014, which is Year 4 of the award, and shows an estimated balance at the end of FY2014.

Total awarded funds to the University of Wisconsin (UW) for supporting IceCube M&O from the beginning of FY2011 through the end of FY2014 are $27,794K. Total actual cost as of June 30, 2014 is $24,590K; open commitments are $1,091K. The current balance as of June 30, 2014 is $2,113K. With a projection of $1,733K for the remaining expenses during the final three months of FY2014, the estimated unspent funds at the end of FY2014 are $380K, which is 5.5% of the FY2014 budget (Figure 3).

| (a) | (b) | (c) | (d) = a – b – c | (e) | (f) = d – e |
|---|---|---|---|---|---|
| Years 1–4 Budget, Oct. ’10 – Sep. ’14 | Actual Cost to Date through June 30, 2014 | Open Commitments on June 30, 2014 | Current Balance on June 30, 2014 | Remaining Projected Expenses through Sept. 2014 | End of FY2014 Forecast Balance on Sept. 30, 2014 |
| $27,794K | $24,590K | $1,091K | $2,113K | $1,733K | $380K |

Figure 3: IceCube NSF M&O Award Budget, Actual Cost and Forecast

The forecast balance at the end of FY2014, the fourth year of the five-year M&O award, is expected to be less than the 10% annual carryover allowed under the M&O Cooperative Agreement.
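The arithmetic behind Figure 3 can be reproduced with a short sketch. The column values are taken from the table; the FY2014 budget of $6,900K is an assumption inferred from the two $3,450K installments in Figure 1, and the variable names are illustrative only.

```python
# Values quoted in the report, in thousands of dollars ($K).
budget_years_1_to_4 = 27_794  # (a) total awarded, FY2011 through FY2014
actual_cost = 24_590          # (b) actual cost through June 30, 2014
open_commitments = 1_091      # (c) open commitments on June 30, 2014
projected_expenses = 1_733    # (e) projected expenses, July to Sept. 2014

# Assumption: FY2014 budget equals the two $3,450K installments of Figure 1.
fy2014_budget = 2 * 3_450

current_balance = budget_years_1_to_4 - actual_cost - open_commitments  # (d)
forecast_balance = current_balance - projected_expenses                 # (f)
carryover_fraction = forecast_balance / fy2014_budget

print(f"Current balance (d):    ${current_balance:,}K")
print(f"Forecast balance (f):   ${forecast_balance:,}K")
print(f"Share of FY2014 budget: {carryover_fraction:.1%}")
```

Under these assumptions the forecast balance is $380K, or about 5.5% of the FY2014 budget, which is below the 10% carryover limit.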

IceCube M&O Common Fund Contributions

The IceCube M&O Common Fund was established to enable collaborating institutions to contribute to the costs of maintaining the computing hardware and software required to manage experimental data prior to processing for analysis.

Each institution contributed to the Common Fund, based on the total number of the institution’s Ph.D. authors, at the established rate of $13,650 per Ph.D. author.

The Collaboration updates the Ph.D. author count twice a year before each collaboration meeting in conjunction with the update to the IceCube Memorandum of Understanding for M&O.

The M&O activities identified as appropriate for support from the Common Fund are those core activities that are agreed to be of common necessity for reliable operation of the IceCube detector and computing infrastructure and are listed in the Maintenance & Operations Plan.

Figure 4 summarizes the planned and actual Common Fund contributions for the period of April 1, 2013–March 31, 2014, based on v14.0 of the IceCube Institutional Memorandum of Understanding, from April 2013. Actual Common Fund FY2014 contributions are $21k less than planned.

| | Ph.D. Authors | Planned Contribution | Actual Received |
|---|---|---|---|
| Total Common Funds | 124 | $1,692,600 | $1,671,395 |
| U.S. Contribution | 69 | $941,850 | $941,850 |
| Non-U.S. Contribution | 55 | $750,750 | $729,545 |

Figure 4: Planned and Actual CF Contributions for the period of April 1, 2013–March 31, 2014
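The planned contributions in Figure 4 follow directly from the author counts and the per-author rate. A minimal sketch of that calculation is given here; the dictionary layout and names are illustrative, while the figures are those stated in this section.

```python
RATE_PER_PHD_AUTHOR = 13_650  # dollars per Ph.D. author, as stated in the report

authors = {"U.S.": 69, "Non-U.S.": 55}
actual_received = {"U.S.": 941_850, "Non-U.S.": 729_545}

# Planned contribution is the author count times the per-author rate.
planned = {group: n * RATE_PER_PHD_AUTHOR for group, n in authors.items()}
shortfall = {group: planned[group] - actual_received[group] for group in authors}

total_planned = sum(planned.values())
total_shortfall = sum(shortfall.values())

for group in authors:
    print(f"{group}: planned ${planned[group]:,}, shortfall ${shortfall[group]:,}")
print(f"Total planned ${total_planned:,}, total shortfall ${total_shortfall:,}")
```

The total shortfall of $21,205 corresponds to the roughly $21k difference noted above and comes entirely from the non-U.S. contributions.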

Section II – Maintenance and Operations Status and Performance

Detector Operations and Maintenance

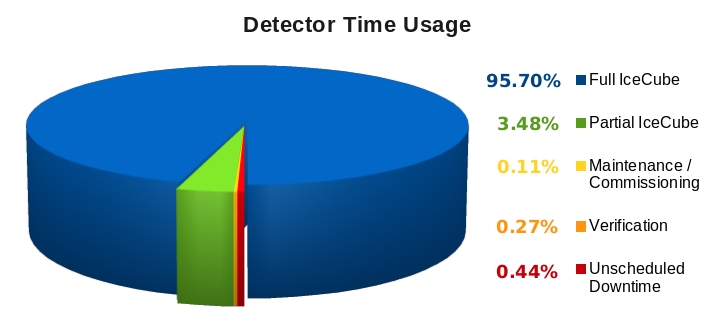

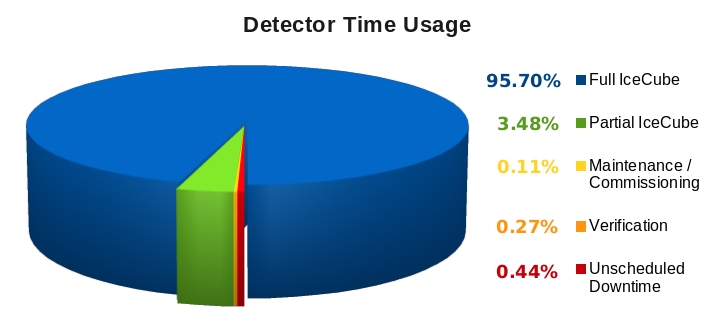

Detector Performance – During the period from September 1, 2013, to July 1, 2014, the full 86-string detector configuration (IC86) operated in standard physics mode 95.7% of the time. Figure 5 shows the cumulative detector time usage over the reporting period. Maintenance, commissioning, and verification required 0.38% of detector time. Careful planning of the hardware upgrades during the polar summer season limited the partial configuration uptime to 3.48% of the total. The unexpected downtime due to failures of 0.44% is comparable to previous periods and reflects the stability of the detector operation.

Figure 5: Cumulative IceCube Detector Time Usage, September 1, 2013 – July 1, 2014
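To give a sense of scale, the percentages in the time-usage breakdown can be converted to days. The sketch below assumes the reporting period is the half-open interval from September 1, 2013 to July 1, 2014; the category labels are paraphrased from the text.

```python
from datetime import date

# Assumption: the end date is exclusive, giving the length of the period in days.
period_days = (date(2014, 7, 1) - date(2013, 9, 1)).days

usage_percent = {
    "standard physics mode": 95.7,
    "partial configuration": 3.48,
    "unexpected downtime": 0.44,
    "maintenance, commissioning, verification": 0.38,
}

usage_days = {mode: period_days * pct / 100 for mode, pct in usage_percent.items()}
total_percent = sum(usage_percent.values())

for mode, days in usage_days.items():
    print(f"{mode}: {days:.1f} days")
print(f"Sum of categories: {total_percent:.2f}%")
```

The four categories account for the whole period, and the unexpected downtime corresponds to a little over one day.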


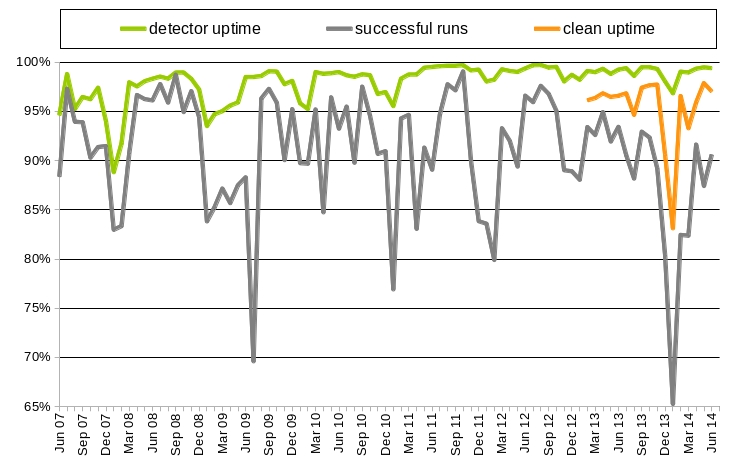

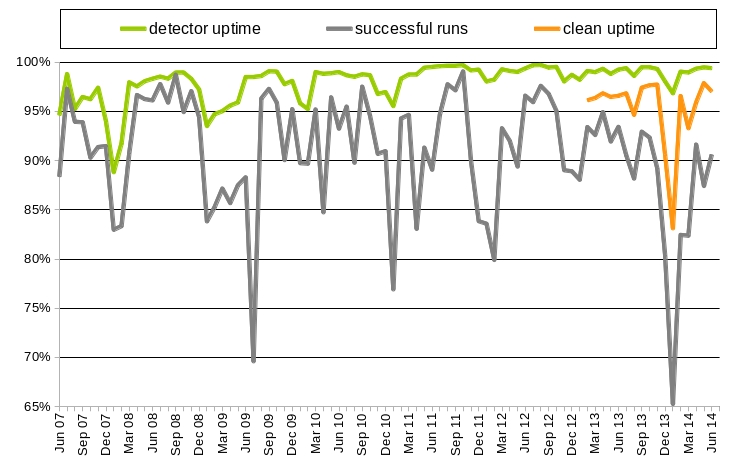

Over the reporting period of September 1, 2013, to July 1, 2014, an average detector uptime of 99.0% was achieved, comparable to previous years, as shown in Figure 6. Successful runs accounted for 85.3% of the total uptime, meaning that the DAQ did not fail within the set 8-hour run duration and the full 86-string detector was used. This run failure rate is higher than normal and reflects some instability after the upgrades this pole season (see discussion below).

However, we have now implemented the ability to track portions of failed runs as good, allowing the recovery of data from all but the last few minutes of multi-hour runs that fail. This feature has provided us a gain of ~10% in clean uptime, bringing the average for this reporting period to 94.7% for standard analysis-ready, full-detector data, as shown in Figure 6.

Figure 6: Total IceCube Detector Uptime and Clean Uptime


About 2% of the loss in clean uptime is due to the failed portions of runs that are not usable for analysis and runs less than 10 minutes long. About 2% of the loss in clean uptime is due to runs not using the full-detector configuration. This occurs when certain components of the detector are excluded from the run configuration during required repairs and maintenance. This South Pole summer season was an exception and saw around 4% loss of clean uptime due to partial-detector runs while hardware components were upgraded. Additionally, the experiment control system and DAQ are moving towards a non-stop run configuration. This will eliminate the approximately 90–120 seconds of downtime between run transitions, gaining roughly 0.5% of uptime.
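The size of the gain expected from non-stop runs can be estimated with a simple sketch. It assumes every run lasts the nominal 8 hours and is followed by exactly one transition gap, which is an idealization: runs that fail early add transitions, so the real loss is somewhat larger.

```python
RUN_DURATION_S = 8 * 3600  # nominal run length stated in the report


def transition_loss(gap_seconds, run_seconds=RUN_DURATION_S):
    """Fraction of wall-clock time lost if each run is followed by one gap."""
    return gap_seconds / (run_seconds + gap_seconds)


low = transition_loss(90)
high = transition_loss(120)
print(f"Loss per run cycle: {low:.2%} to {high:.2%}")
```

For nominal 8-hour runs this gives a loss between 0.3% and 0.4%, the same order as the roughly 0.5% quoted above.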

The IceCube Run Monitoring system, I3Moni, provides a comprehensive set of tools for assessing and reporting data quality. IceCube collaborators participate in performing daily monitoring shift duties by reviewing information presented on the web pages and evaluating and reporting the data quality for each run. The original monolithic monitoring system processes data from various SPS subsystems, packages them together in ROOT files for transfer to the Northern Hemisphere, and reprocesses them in the north for displaying on the monitoring web pages. In a new monitoring system under development (I3Moni 2.0), all detector subsystems will report their data directly to IceCube Live via a ZeroMQ messaging system. Major advantages of this new approach include: higher quality of the monitoring alerts; simplicity and easier maintenance; flexibility, modularity, and scalability; faster data presentation to the end user; and a significant improvement in the overall longevity of the system implementation over the lifetime of the experiment.

The infrastructure for collecting the monitoring data is in place at SPS, and monitoring quantities are now being collected from the two major data acquisition systems, pDAQ and SNDAQ. During a workshop in November 2013, we documented the monitoring quantities in detail to allow contributors to work in parallel on quantity delivery. A follow-up workshop in June 2014 focused on test integration and collection of quantities from the processing and filtering system (PnF), as well as a timeline for the rollout in IceCube Live, starting later in 2014.

Development of IceCube Live, the experiment control and monitoring system, is still quite active, and this reporting period has seen three major releases. The primary features of these releases are:

· Live v2.3 (January 2014): 71 issues and requested features resolved, including a new paging alert that detects rare cases in which multiple DAQ runs fail in a short time but still have produced some physics events, as well as better tracking of the usage of the low-latency ITS Iridium link.

· Live v2.4 (April 2014) and v2.5 (June 2014): 74 issues and feature requests resolved, including compression and encryption of data sent over the Iridium link to maximize throughput and improve security. Latency of time-sensitive alert emails (e.g. supernova alerts) is also greatly reduced by allowing transmission over ITS. A web view of the Optical Follow-up system was added showing recent alerts to other telescopes.

Features planned for the next release include improvements in the chat feature of I3Live to facilitate communications with winter-over operators. Also planned is a feature to forward monitoring messages from systems running at the South Pole to systems in the north, particularly to forward neutrino events with minimal latency for optical follow-up efforts. Data visualization upgrades are also planned. The I3Moni 2.0 system rollout will be achieved in stages, in parallel with the existing system. The uptime for the I3Live experiment control system during the reporting period was 99.976%. This is lower than previous reporting periods due to an unplanned 90-minute downtime associated with the upgrade in June 2014.

The IceCube Data Acquisition System (DAQ) has reached a stable state, and consequently the frequency of software releases has slowed to the rate of 1–2 per year. Nevertheless, the DAQ group continues to develop new features and patch bugs. During the reporting period of October 2013–July 2014, the following accomplishments are noted:

· Delivery of a new release (“Furthermore”) of DAQ on November 1, 2013, that includes performance enhancements in the IceCube trigger system by spreading the multiple trigger algorithms across many execution threads, thus benefiting from the multicore architectures used in the DAQ computing platforms.

· Delivery of the "Great_Dane" release of the DAQ on June 30, 2014. This release delivers I3Moni 2.0 monitoring quantities to IceCube Live; enables DOM message packing to improve the efficiency of communication on the in-ice cables; supports supernova DAQ muon subtraction (see details below); and refines the "replay" feature, which allows for testing/debugging the DAQ using real data and for comparing simulation results with actual DAQ output.

· Development toward the "Hinterland" release of the DAQ. This will be primarily a bugfix release, in preparation for an upgrade of the StringHub component in the following release. The primary new feature planned for this release is production of a monitoring file in HDF5 format for IceTop analysis, to replace a ROOT file produced by an unmaintained program.

The single-board computer (SBC) in the IceCube DOMHubs was also previously identified as a bottleneck in DAQ processing. After the successful upgrade of 10 hubs in the 2012–2013 polar season, the remaining 87 DOMHubs were upgraded in December and January of the 2013–2014 season. The hardware upgrade was achieved ahead of our aggressive schedule. Because most of the detector remained running while sets of 2 to 4 hubs were upgraded, total detector uptime remained high (97% in January).

In addition to the SBC hardware upgrades, most of the South Pole System servers (see the computing section for more details) were upgraded this season, and the operating system on all DAQ, DOMHub, and processing / filtering machines was also upgraded. Critical data-taking servers were upgraded and swapped into the detector with only 3 to 5 minutes of downtime. The significant software upgrades did result in some instability during January 2014, with some DAQ crashes at run transitions and random DOMHub machine lockups (approximately every 3000 hub-hours). These issues impacted the clean uptime for January, but both were resolved in February 2014.
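The per-hub lockup rate can be translated into a detector-wide rate. This is a rough sketch that assumes all 97 upgraded DOMHubs (10 in the 2012–2013 season plus 87 in 2013–2014) were equally affected and that lockups occurred independently; neither assumption is stated in the report.

```python
HUB_HOURS_PER_LOCKUP = 3000  # approximate rate quoted in the report
N_DOMHUBS = 10 + 87          # hubs upgraded in 2012-2013 plus 2013-2014

# With N hubs running in parallel, hub-hours accumulate N times faster
# than wall-clock hours.
hours_between_lockups = HUB_HOURS_PER_LOCKUP / N_DOMHUBS
lockups_per_week = 7 * 24 / hours_between_lockups

print(f"About one lockup every {hours_between_lockups:.0f} hours")
print(f"About {lockups_per_week:.1f} lockups per week across the detector")
```

Under these assumptions, a rate that looks small per hub amounts to a lockup somewhere in the detector roughly every 31 hours, which helps explain the visible effect on clean uptime in January.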

The supernova data acquisition system (SNDAQ) found that 98.9% of the available data from September 1, 2013, through July 1, 2014, met the minimum analysis criteria for run duration and data quality for sending triggers. The trigger downtime was 1.1 days (0.9% of the time interval) from physics runs under 10 minutes in duration. This arose mainly during the pole season, when most of the DOMHub single-board computers were replaced. Supernova candidates in these short runs can be recovered offline should the need arise; in addition, buffered hitspool data are available on request for an even larger fraction of the time.

New SNDAQ releases “Kabuto 1” (Dec. 11, 2013), “Kabuto 2” (Dec. 13, 2013), “Kabuto 3” (April 15, 2014), “Kabuto 4” (June 24, 2014), “Kabuto 5” (July 8, 2014), and “Kabuto 6” (July 11, 2014) were installed, featuring fabric installation support, improved hitspooling communication and monitoring, NIST-based leap second handling as part of a redesigned time class, and the incorporation of muon trigger information, as well as many small improvements and bug fixes. The improvements include full I3Moni 2.0 support, the incorporation of end-of-run summaries and the Iridium transmission of supernova alarms and short datagrams, as well as the possibility to automatically page the winterovers. The supernova alarm latency induced by the Iridium system was decreased from ~10 min to under one minute.

With the Kabuto 6 SNDAQ release and the Great_Dane pDAQ release, information on the number of optical modules hit for cosmic-ray muon triggers is available to SNDAQ. The incorporation of this information and the subsequent online correction of cosmic-muon-induced hits in the significance calculation constitutes a major milestone, as statistical fluctuations in the number of hits from cosmic ray muons are the predominant sources of false alarms. As of this writing, the muon-corrected information is listed in the alarm mails and monitoring web pages. After carefully studying the stability of the system, we will form the triggers from the muon-subtracted results, allowing us to significantly lower the alarm threshold in the future and to avoid the seasonal increase of triggers during the austral summer.
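The idea behind the muon correction can be illustrated with a toy example. This is not the SNDAQ algorithm: it is a minimal sketch that assumes the muon-induced contribution to the detector-wide signal is linear in the number of muon-trigger hits, and it uses synthetic data with arbitrary, hypothetical parameters.

```python
import random
import statistics


def muon_corrected(signal, muon_hits):
    """Remove the part of `signal` that is linearly correlated with `muon_hits`."""
    mean_s = statistics.fmean(signal)
    mean_m = statistics.fmean(muon_hits)
    cov = sum((s - mean_s) * (m - mean_m) for s, m in zip(signal, muon_hits))
    var_m = sum((m - mean_m) ** 2 for m in muon_hits)
    slope = cov / var_m  # least-squares slope of signal versus muon hits
    return [s - slope * (m - mean_m) for s, m in zip(signal, muon_hits)]


# Synthetic data (hypothetical numbers): unit-width intrinsic noise plus a
# contribution proportional to the fluctuation in muon-trigger hits.
rng = random.Random(2014)
muon_hits = [rng.gauss(1000.0, 30.0) for _ in range(5000)]
signal = [rng.gauss(0.0, 1.0) + 0.05 * (m - 1000.0) for m in muon_hits]

corrected = muon_corrected(signal, muon_hits)
raw_spread = statistics.pstdev(signal)
corrected_spread = statistics.pstdev(corrected)
print(f"Spread before correction: {raw_spread:.2f}")
print(f"Spread after correction:  {corrected_spread:.2f}")
```

In this toy model the corrected series has a narrower distribution than the raw one, which is the property that would allow a lower alarm threshold for the same false-alarm rate.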

The supernova data acquisition has reached a stable state. Consequently, the frequency of software releases will slow to the rate of 1–2 per year. Future efforts will include the incorporation of external SNEWS alarms, updates for new versions of external software and the switch to muon-corrected triggers, as discussed above.

The online filtering system performs real-time reconstruction and selection of events collected by the data acquisition system and sends them for transmission north via the data movement system. Version V14-04-01 is currently deployed in support of the IC86-2014 physics run. Efforts for the past year have included system testing and support work for the SPS computing system and operating system upgrade performed during the 2013–2014 pole season, and preparing for and deploying the IC86-2014 physics run filtering system. The hardware and operating system upgrades have resulted in a ~50% increase in the online filtering system capacity, and the software release for the IC86-2014 physics runs deploys an updated filter selection set from the IceCube physics working groups and a new output file format containing the SuperDST records for all events. This new file format is saved to disk as an additional long-term data archive of IceCube data with a smaller storage requirement than the raw waveforms. Additionally, work to finalize the I3Moni 2.0 system is nearing completion; data-quality monitoring values are now available for direct reporting to the IceCube Live system by detector systems. Testing of DAQ stopless run data is being performed before this feature is deployed to the South Pole system.

A weekly calibration call keeps collaborators abreast of issues in both in-ice and offline DOM calibration. Using the single photoelectron (SPE) peak in data to recalibrate the DOM charge response, the mean SPE peak position has been corrected from 1.04 PE to 1.0 PE, with the DOM-to-DOM variation reduced from 2.4% to 0.6%. Efforts continue to improve our knowledge of the absolute sensitivity of the DOMs in the ice, using muon data, laboratory measurements, neutrino events from physics analyses and noise hits in the DOMs. A lab setup has been developed to measure the DOM absolute optical sensitivity as a function of incident polar angle and wavelength, using an optical system which illuminates the DOM uniformly from a given direction with pulsed and steady sources at a variety of wavelengths. Errors introduced by nonlinearities in the system are less than 0.2% over 9 orders of magnitude in brightness, and 10-20 DOMs will be absolutely calibrated using this system by mid-2015. We plan to use the known relative DOM sensitivities to transfer the calibration in the lab to the DOMs in ice.

Figure 7. Optical effective absorption length vs. depth in the SPICE3 ice model (black line), compared to merged dust log data (gray band).

Online verification software is being merged into the upcoming I3Moni 2.0 framework. Software modules have been written that output the SPE distributions, baselines and IceTop charge distributions in the I3Moni 2.0-compliant format, and upload the data to a database, which is then read by the IceCube Live system. Plotting and testing of data in IceCube Live has been verified for SPE charge distributions. We expect to be able to decommission the separate verification system in spring 2015.

Flasher data continues to be analyzed to reduce ice model systematics, with a major focus on the hole ice, low-brightness flasher data, and multi-wavelength flashers. The latest version of the ice model, "SPICE3" (see Figure 7), based on multi-string flasher data collected in 2012, agrees with the flasher data to within 16%, compared to 20–30% in previously published versions of the ice model. Fitting for the individual relative DOM efficiency improves the agreement to within 12%. The SPICE3 ice model will be tested with muon simulation in fall 2014.

Figure 8. Decrease in IceTop filter rate in 2012 and 2013 due to snow accumulation (blue: uncorrected rate; red: rate corrected for barometric pressure).

A procedure for tracking IceTop snow depths is in place and continues to work well. However, despite ongoing snow management during the austral summer season, the growing snow depth on the IceTop tanks continues to raise the energy threshold for cosmic ray air shower detection each year; a decrease in filter rate of 15% per year is shown in Figure 8. Moreover, the snow overburden complicates analyses due to uncertainties in the snow density. During this austral summer season, we plan a series of measurements with portable muon taggers over IceTop tanks to calibrate the snow density. Additionally, we continue to work to integrate IceTop and related environmental monitoring functions into IceCube Live, including stratospheric temperature data used for muon rate corrections.

IC86 Physics Runs – The fourth season of the 86-string physics run, IC86-2014, began on May 6, 2014. Detector settings were updated using the latest yearly DOM calibrations from March 2014, but minimal changes to filter settings were implemented. DAQ trigger settings did not change from IC86-2013.

The last DOM failures (2 DOMs) occurred during a power outage on May 22, 2013. No DOMs have failed during this reporting period, even with the short string power outages required for the DOMHub upgrades. The total number of active DOMs remains 5404 (98.5% of deployed DOMs).

TFT Board – The TFT board is in charge of adjudicating SPS resources according to scientific need, as well as assigning CPU and storage resources at UW for mass offline data processing (a.k.a. Level 2). The IC86-2013 season was the first instance in which L2 processing was handled by the TFT from beginning to end. The result is a dramatic reduction in the latency for processing of L2. For the IC86-2011 season, L2 latency was several months after the end of the data season. For IC86-2013 and IC86-2014, the latency is 2–4 weeks after data-taking.

Working groups within IceCube will submit approximately 20 proposals requesting data processing, satellite bandwidth and data storage, and the use of various IceCube triggers for IC86-2014. Sophisticated online filtering data selection techniques are used on SPS to preserve bandwidth for other science objectives. Over the past three years, new data compression algorithms (SuperDST) have allowed IceCube to send a larger fraction of the triggered events over TDRSS than in previous seasons. The additional data enhances the science of IceCube in the search for neutrino sources toward the Galactic Center.

Starting with IC86-2014, we have begun to implement changes to the methodology for producing online quasi-real-time alerts. Neutrino candidate events at a rate of 3 mHz will be sent via a combination of Iridium satellite and e-mail, so that neutrino coincident multiplets (and thus candidates for astrophysical transient sources) can be rapidly calculated and distributed in the northern hemisphere. This change will enable significant flexibility in the type of fast alerts produced by IceCube.
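For orientation, the quoted candidate rate can be converted into more familiar units. The sketch below uses only the 3 mHz figure from the text and treats it as a constant average rate.

```python
RATE_HZ = 3e-3  # neutrino-candidate rate quoted in the report (3 mHz)

events_per_day = RATE_HZ * 86_400   # seconds per day
mean_spacing_min = 1 / RATE_HZ / 60  # average time between events, in minutes

print(f"About {events_per_day:.0f} events per day")
print(f"About one event every {mean_spacing_min:.1f} minutes on average")
```

At this rate the alert stream amounts to a few hundred events per day.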

The average TDRSS data transfer rate during IC86-2013 was 95 GB/day plus an additional 5 GB/day allocated for use by the detector operations group. Since the IC86-2014 run start in May, the average daily transfer rate is approximately 85 GB/day. IceCube is a heavy user of the available bandwidth, and we will continue to moderate our usage without compromising the physics data.

Operational Communications – Communications with the IceCube winterovers and login access to SPS are critical to IceCube’s high-uptime operations. Several technologies are used for this purpose, including interactive chat, ssh/scp, and the IceCube Teleport System (ITS) using a dedicated Iridium modem in short-burst data mode.

Low-bandwidth text chat is used to communicate with the IceCube winterovers to coordinate operations and diagnose problems. Skype has been successfully used for this purpose for many years in IceCube, but during 2014 ASC notified us of the necessity to stop the use of Skype at the South Pole. A project to restore Jabber as an alternative chat solution is underway. It works with no problems during satellite time, but using it via ASC’s Iridium link is still ongoing work. Due to problems with Iridium communications, NSF has not given permission to add any more traffic to this link, including Jabber.

During May/June 2014, a drop in the quality and speed of the satellite connection to the South Pole for interactive work occurred. After some investigation, this was traced to a low-priority rule for incoming ssh traffic in the ASC traffic shapers. A higher prioritization of ssh traffic from specific hosts in the north to the South Pole access machine was requested, since this is a critical day-to-day operational tool. The new filter was installed at the end of June 2014 and resulted in a noticeable improvement in the quality of the connections.

In June 2014, after an Iridium service outage, IceCube’s primary modem did not come back online; subsequent diagnosis suggests that the firmware is likely corrupted. We have switched to our backup modem and will work with ASC and the vendor to repair the primary modem in the north when possible. We continue to work with ASC and NSF to explore alternate Iridium solutions, such as RUDICS or SIM-less short-burst-data options.

Personnel – The primary contracted IceCube Live developer, John Jacobsen, left the project in January 2014. Matt Newcomb, a DAQ programmer, also left WIPAC in February 2014. The IceCube Live developer position was filled in June 2014 by Colin Burreson, previously the WIPAC webmaster. The DAQ software developer position is open as of July 2014. A software developer position at WIPAC, shared between detector operations and analysis software support, was filled in December 2013 by physicist Jim Braun.

Computing and Data Management

On the 1st and 2nd of April 2014, a meeting of the IceCube Software and Computing Advisory Panel (SCAP) took place at WIPAC. The IceCube SCAP was established in 2009 and is composed of experts in the fields of software development and scientific computing. The SCAP advises the IceCube Spokesperson and Director on computing-related topics. IceCube staff reported about the status of different systems such as online and offline computing facilities, data processing and filtering, simulation production and analysis support. A number of breakout and discussion sessions followed the presentations. The overall evaluation of the panel was positive and highlighted that the impressive progress in the IceCube scientific program was enabled by a very successful software and computing program. A number of areas that could be reinforced were also identified. From a general perspective, the SCAP recommended that more effort should be devoted to developing requirements, predicting impact, and tracing trends in all aspects of the computing enterprise, including human effort, resource consumption and impact on the science mission. A written report with the detailed findings and recommendations was provided following the meeting¹ and is available upon request.

Computing Infrastructure – IceCube computing and storage systems, both at the Pole and in the north, have performed well over the past year. The total disk storage capacity in the data warehouse is 2781 TB (terabytes): 1017 TB for experimental data, 1315 TB for simulation, 270 TB for analysis, and 179 TB for user data.

A disk expansion for the Fiber Channel Storage Area Network (FC SAN) was procured at the end of 2013. The requirement for this new system was to provide highly reliable, high-performance storage to be integrated in the data warehouse Lustre file systems. The current data warehouse architecture relies on these file systems to host critical data that need to be accessed by several users from large computing clusters, which can potentially place a high load on the system. Part of this disk expansion targeted a net increase in storage space for hosting the new data generated by the experiment. Another part targeted the upgrade of some of the disk servers that were reaching the end of their operational life. The chosen solution was a Dell Compellent system composed of two SC8000 RAID controllers and five SC280 dense enclosures, each of them hosting 85 4TB hard drives. The new system was delivered and commissioned in February 2014. Once in production, it will provide a total net capacity of 1152 TB.

A critical part of the Lustre file systems are the Metadata and Object Storage servers (MDS and OSS) that provide the actual high performance file system functionality on top of the bare storage. The MDS and OSS servers in two of the major IceCube file systems (/data/exp and /data/sim) were purchased in 2008-2009 so an upgrade was needed to ensure the scalability and reliability of the system were preserved. Ten Dell PowerEdge R720 servers were purchased in July 2014 as an upgrade of these Lustre infrastructure servers. Each of these servers has two Intel E5-2650v2 eight-core processors and 128 GB of memory. The higher specifications of the new servers will ensure the main file systems in the data warehouse keep delivering the required performance as the amount of data grows.

In July 2014 an additional disk expansion was purchased consisting of six Dell PowerEdge R515 servers and eighteen Dell PowerVault MD1200 arrays. The complete system hosts 288 4TB disk drives, providing a total net capacity of around 900 TB. The architecture of this system is Direct Attached Storage (DAS), which provides substantially larger storage capacity per dollar at the cost of reduced flexibility in the configuration and somewhat lower peak performance. There are portions of the data with lower criticality where the reliability and performance requirements can be relaxed. The goal is to translate the boost in net capacity that a lower-cost DAS system provides into a boost in workflow throughput and therefore science output.
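As a rough cross-check of the capacities quoted for the two disk expansions, the raw capacity can be computed from the drive counts and compared with the stated net capacity. The sketch ignores the details that determine the actual overhead (RAID layout, hot spares, file system formatting, decimal versus binary terabytes), so the resulting fractions are indicative only; the labels are illustrative.

```python
DRIVE_TB = 4  # both expansions use 4 TB drives

# name: (number of drives, net capacity in TB as quoted in the report)
expansions = {
    "FC SAN (Dell Compellent)": (5 * 85, 1152),
    "DAS (R515 + MD1200)": (288, 900),
}

net_fraction = {}
for name, (drives, net_tb) in expansions.items():
    raw_tb = drives * DRIVE_TB
    net_fraction[name] = net_tb / raw_tb
    print(f"{name}: {raw_tb} TB raw, {net_tb} TB net ({net_fraction[name]:.0%})")
```

By this estimate the usable share of raw capacity is about two thirds for the FC SAN system and a little over three quarters for the DAS system.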

A major upgrade of the Lustre software version in the main file systems is planned for this year (from v1.8 to v2.4). The disk expansion described above will provide the needed headroom for safely performing the upgrade and data migration as needed.

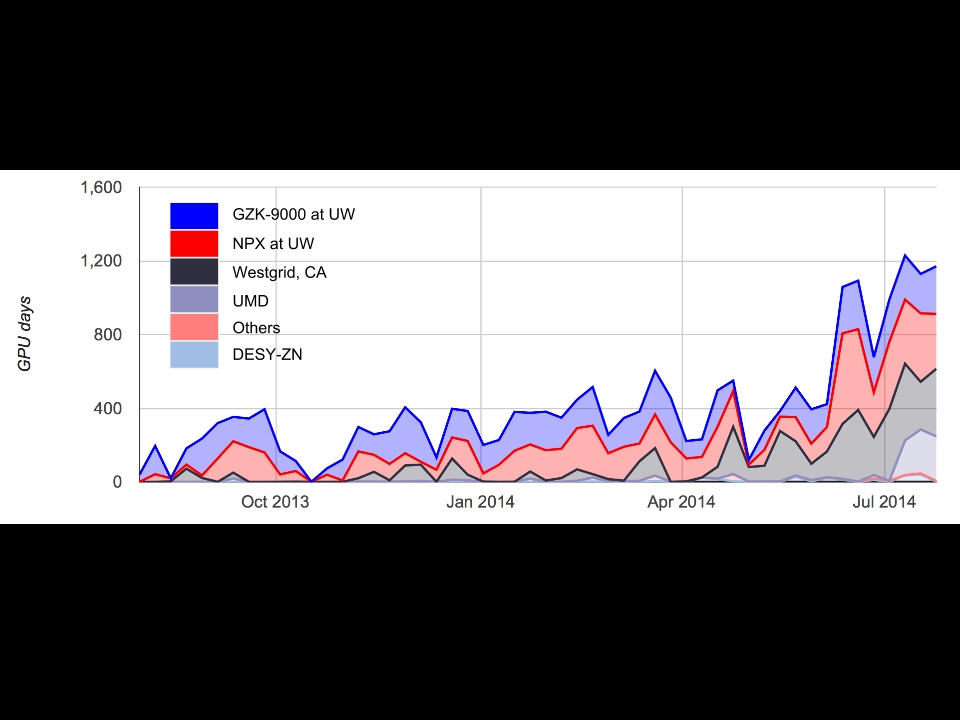

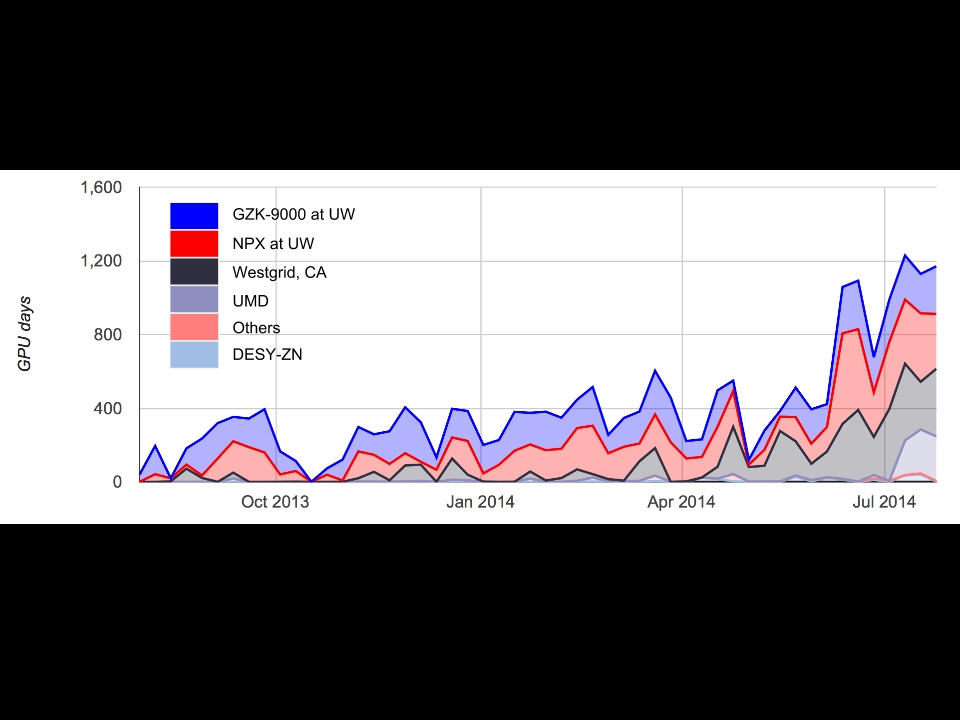

In 2012, the IceCube Collaboration approved the use of direct photon propagation for the mass production of simulation data. This requires the use of GPU (graphics processing unit) hardware to deliver adequate performance. A GPU-based cluster, named GZK-9000, was installed in early 2012, and it has been extensively used for simulation production since then. The GZK-9000 cluster contains 48 NVidia Tesla M2070 GPUs. An extension of the GPU cluster containing 32 NVidia GeForce GTX-690 and 32 AMD ATI Radeon 7970 GPUs was deployed at UW–Madison during August/September 2013. The computing power of this 64-GPU extension is equivalent to four times the old 48-GPU cluster. The new GPU nodes were deployed as additional resources of the main IceCube HTCondor² cluster, named NPX. The goal was to make this collaboration resource accessible to a larger number of users by providing an interface integrated as closely as possible with the existing facilities already being used by most of the collaboration members. A number of other sites in the collaboration are currently deploying GPU clusters to reach the overall simulation production capacity goal. Figure 9 shows the accounting for GPU days used by simulation production jobs in the various available clusters. A substantial increase in the total utilized resources can be seen from the second quarter of 2014, as utilization of the Westgrid cluster in Canada ramped up and the new cluster at UMD came online.

Figure 9: GPU days consumed by IceCube simulation production jobs in the various available GPU clusters.

A series of actions is planned with the goal of facilitating access to and use of Grid resources by collaboration members. With this objective, a CernVM-Filesystem (CVMFS) repository was deployed at UW–Madison hosting the IceCube offline software stack and photonic tables needed for data reconstruction and analysis. CVMFS enables seamless access to the IceCube software from Grid nodes over HTTP. High scalability and performance can be achieved by deploying a chain of standard web caches. The system was made available to all collaboration users in October 2013 and became the default software distribution mechanism at UW–Madison facilities in January 2014. In recent months, some IceCube sites in Europe have deployed and configured CVMFS in their computing clusters, and several others are in the process of doing so. In addition, a replica of the main repository (Stratum1) has been deployed at DESY Zeuthen, which provides load balancing and fault tolerance for this new software distribution infrastructure.

One of the mechanisms the IceCube users have for accessing external resources to run their analysis jobs is an HTCondor submit node at UW Madison (skua.icecube.wisc.edu). This node provides transparent access on the one hand to various shared clusters at UW (High Energy Physics department, Center for High Throughput Computing, etc.) and on the other hand to Grid resources outside the UW campus. The latter is accomplished via an HTCondor glideinWMS

4

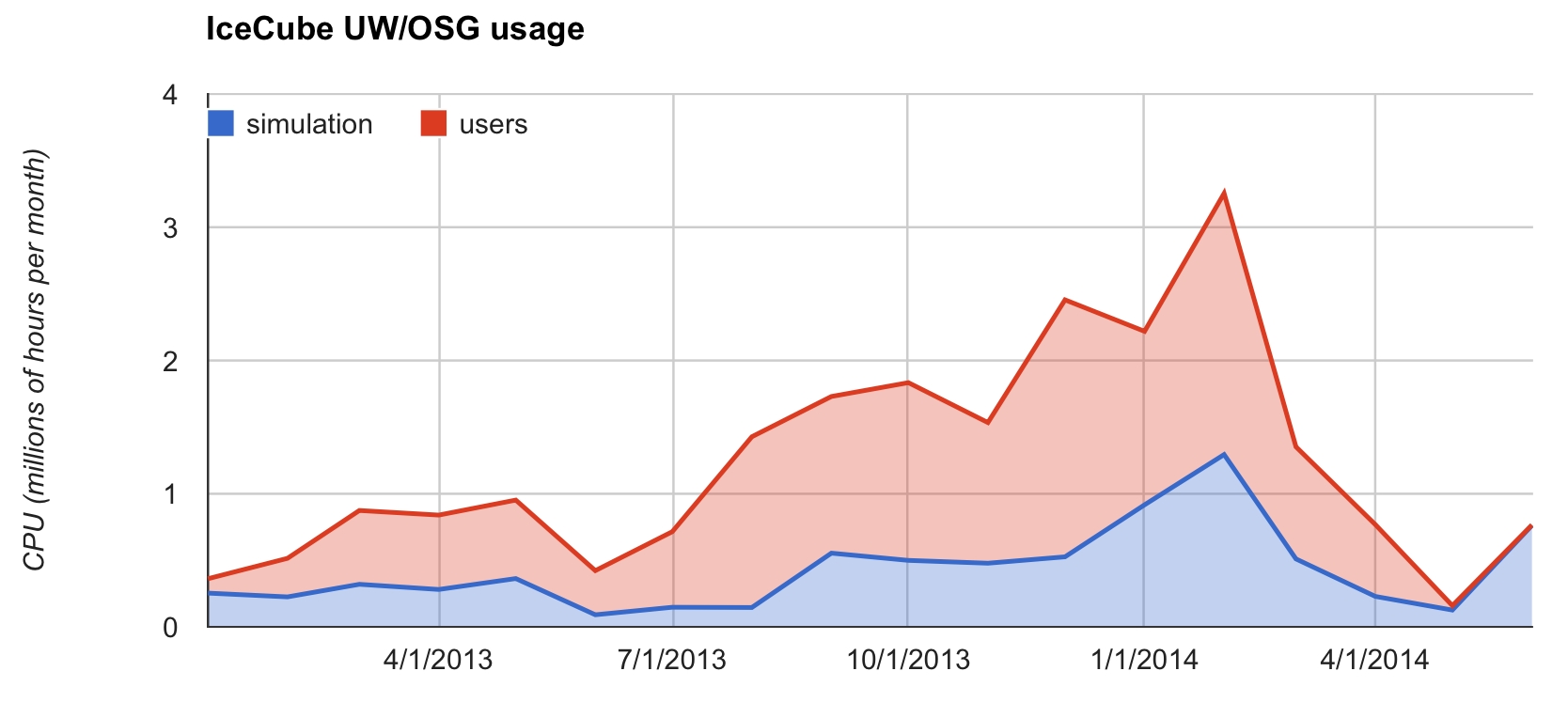

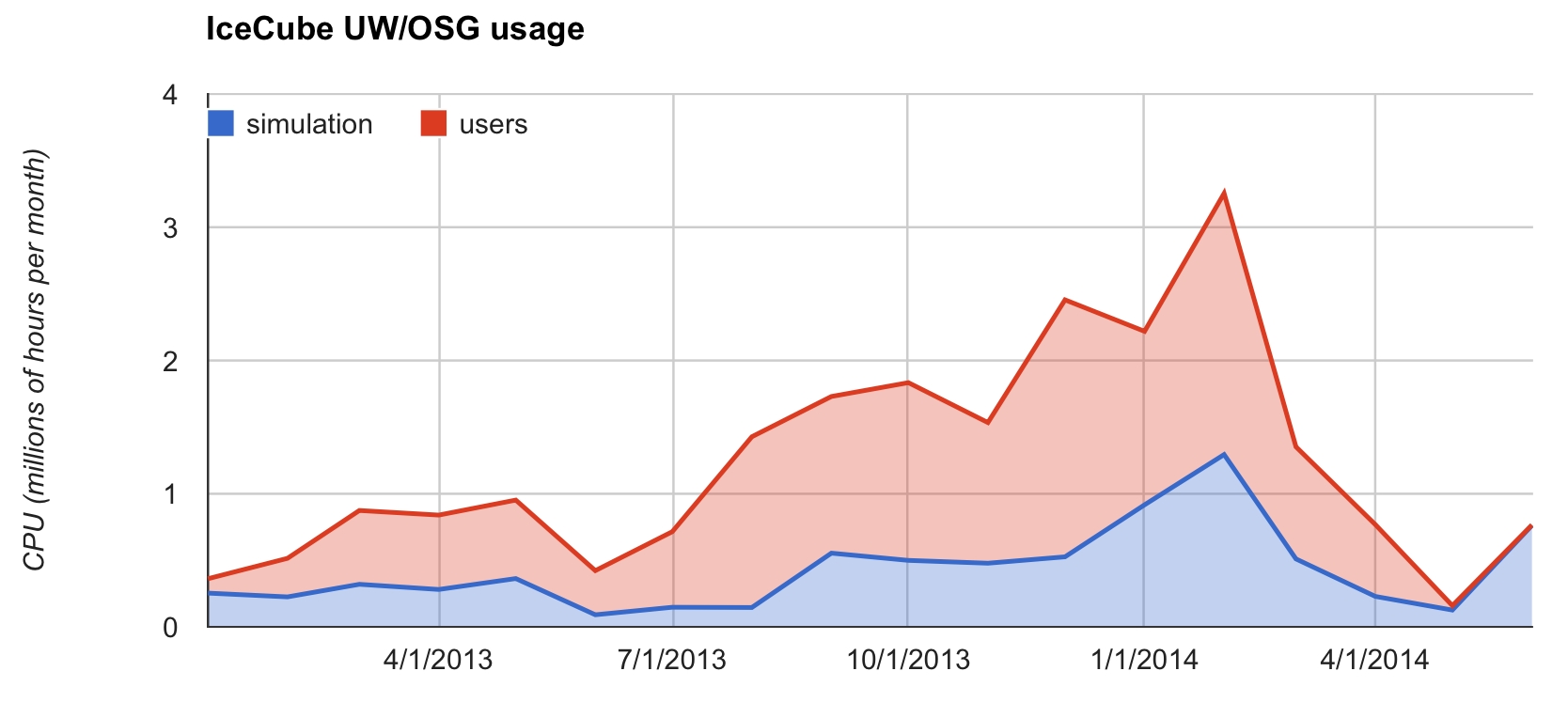

service provided by CHTC at UW. Until May 2014, the Grid resources that IceCube could access via this CHTC glideinWMS were all in the Open Science Grid (OSG). In May, the configuration of this infrastructure was updated to enable access to opportunistic resources at DESY Zeuthen via this same interface. The graph in Figure 10 shows the hours of CPU consumed by IceCube users and simulation production on these opportunistic resources. It is worth noting that 2 million hours is approximately the CPU power that the full internal NPX cluster delivers in one month if run with close to 100% efficiency; i.e., IceCube is sometimes able to more than double the amount of available computing time when adding these external sources.

Figure 10: CPU hours used by IceCube users (red) and simulation production (blue) in the shared UW clusters infrastructure and OSG.
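The comparison with the NPX cluster made above can be turned into a back-of-the-envelope estimate. The sketch below assumes a 30-day month and continuous, fully efficient operation; the resulting core count is an illustrative equivalent, not a figure stated in the report, and `capacity_multiplier` is a hypothetical helper introduced only for this example.

```python
# Figure quoted in the report: approximate CPU hours NPX delivers per month
# when run at close to 100% efficiency.
NPX_CPU_HOURS_PER_MONTH = 2_000_000

# Assumption: a 30-day month of continuous operation.
HOURS_PER_MONTH = 30 * 24

# Number of continuously busy cores that would deliver the same CPU time.
equivalent_cores = NPX_CPU_HOURS_PER_MONTH / HOURS_PER_MONTH


def capacity_multiplier(external_cpu_hours: float) -> float:
    """Return total monthly CPU time relative to NPX alone."""
    return (NPX_CPU_HOURS_PER_MONTH + external_cpu_hours) / NPX_CPU_HOURS_PER_MONTH


print(f"NPX is equivalent to about {equivalent_cores:.0f} fully busy cores")
print(f"multiplier with 2M external hours: {capacity_multiplier(2_000_000):.1f}x")
```

A month in which the opportunistic resources contribute more than 2 million CPU hours therefore more than doubles the computing time available to the collaboration.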

Since June 2014, OSG Connect has been explored as an additional mechanism for IceCube to access opportunistic Grid resources. The goal of this activity is to evaluate whether it gives access to more nodes than the UW OSG gateway and to assess whether the new interface provides additional functionality that could be useful for IceCube users.

A complete upgrade of the South Pole System (SPS) servers was carried out during the 2013-14 austral summer. The upgrade was mainly aimed at resolving performance issues that had been detected in parts of the system when operating under high trigger rate conditions. The previous set of servers was at the end of its planned three-year lifetime, so a complete upgrade ensured that the homogeneity of the system was preserved. Homogeneity is one of the main requirements for the SPS, given that it has to be operated in complete isolation for most of the year. The server upgrade was extremely successful. All of the servers were replaced during December with almost no impact on data taking (DAQ uptime for that month was 98.08%). The server replacement also significantly reduces power usage, since the new servers consume 30-40% less power.
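To put the December uptime figure in perspective, it can be converted into hours of lost data taking. This is a minimal sketch that assumes the 98.08% uptime is measured against the full calendar month; the report does not state how uptime is defined.

```python
# Figure quoted in the report.
DAQ_UPTIME = 0.9808          # DAQ uptime for December 2013

# Assumption: uptime is measured against all hours in the calendar month.
DAYS_IN_DECEMBER = 31
total_hours = DAYS_IN_DECEMBER * 24

# Hours during which the DAQ was not taking data.
downtime_hours = total_hours * (1.0 - DAQ_UPTIME)

print(f"total hours in December: {total_hours}")
print(f"implied DAQ downtime:    {downtime_hours:.1f} hours")
```

Under this assumption the full server replacement cost a little over half a day of data taking.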

Besides the server replacement, the remaining HP UPS systems were also replaced during the 2013-14 season. The current system has 2 fully redundant UPSs per rack, including the DOMhub GPS receivers. This greatly reduces the detector downtime impact that short power outages might cause.

During the 2013-2014 season a prototype system for archiving data to disk instead of tape was deployed at SPS. The plan is to run this system in parallel with the existing tape-based system during 2014, making use of the new JADE software, which notably improves on the scalability and configurability of the current SPADE software. The new system started archiving the new SuperDST data stream as it became available with the start of the IC86-4 run in early May. This prototype provides direct experience with the handling of disks and the procedures necessary to operate a disk-based archive. Based on the experience accumulated so far, the current plan is to retire the tape-based system in the 2014-15 season. A large purchase of 250 4 TB disk drives has been made as part of the Pole season preparation. The drives have been delivered to UW–Madison and have gone through an extensive testing process before being sent to the Pole.

Four external SAS disk enclosures were also purchased in spring 2014. They will provide additional disk slots for the JADE servers, increasing the flexibility of the disk archive system. Three will be installed at the SPS and one at the SPTS.

Also as part of the 2014-2015 preparation, thirty Dataprobe iBootBar IEC 20A managed PDUs have been purchased for deployment in the ICL during the Pole season. These will allow winter-overs to remotely power-cycle unresponsive DOMhubs from the station.

On May 6, 2014, IceCube system administration staff identified a security breach at the UW–Madison facility. Investigation revealed that the intrusion occurred at approximately 2:00 am CDT on May 5, 2014. The source of the breach appeared to be stolen user credentials. The attackers worked interactively on six IceCube systems and left some evidence of their activities. At a high level, this involved escalating to administrative privilege, modifying the ssh daemon and client on the compromised machines, and collecting user passwords for further exploitation. Using one of these stolen passwords, the attackers gained access to the IceCube login machine at the South Pole. As soon as this was confirmed, at 11:21 am CDT on May 6, the winter-overs disconnected the compromised machine. Further analysis of that machine indicated that the attackers did not succeed in obtaining privileged access on it.

UW–Madison IceCube system administration staff took the compromised systems offline for further analysis. The compromised machines were reinstalled, and IceCube users were required to change their account passwords. The data processing and analysis services at UW–Madison were restored on May 9, once the needed cleanup and hardening measures had been taken.

ASC and UW were contacted within 24 hours of the incident. Technical details on the incident and preliminary findings were reported to enable further investigations on their end. After reviewing their systems logs, ASC confirmed that there were no signs of intrusion beyond the IceCube network.

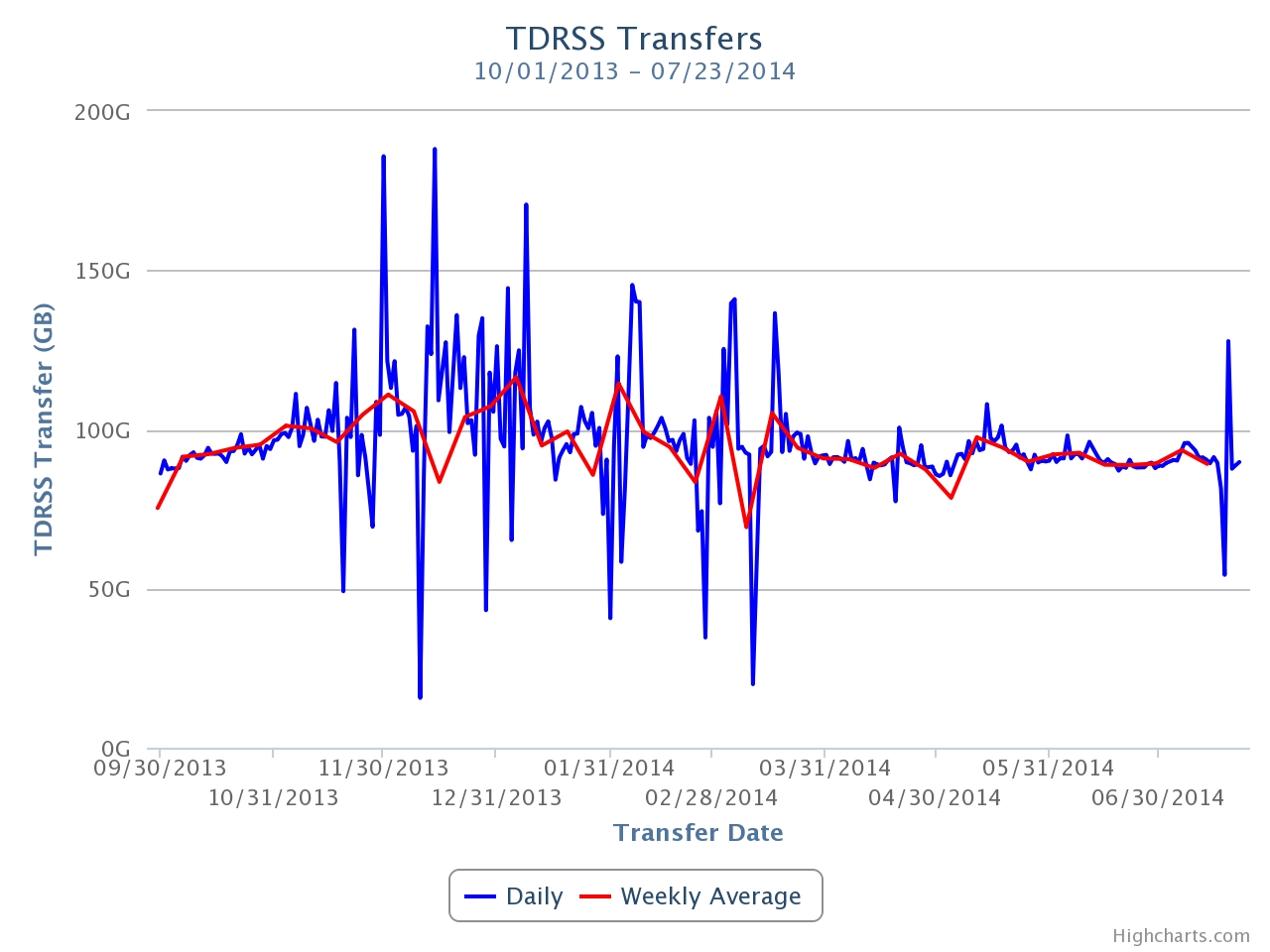

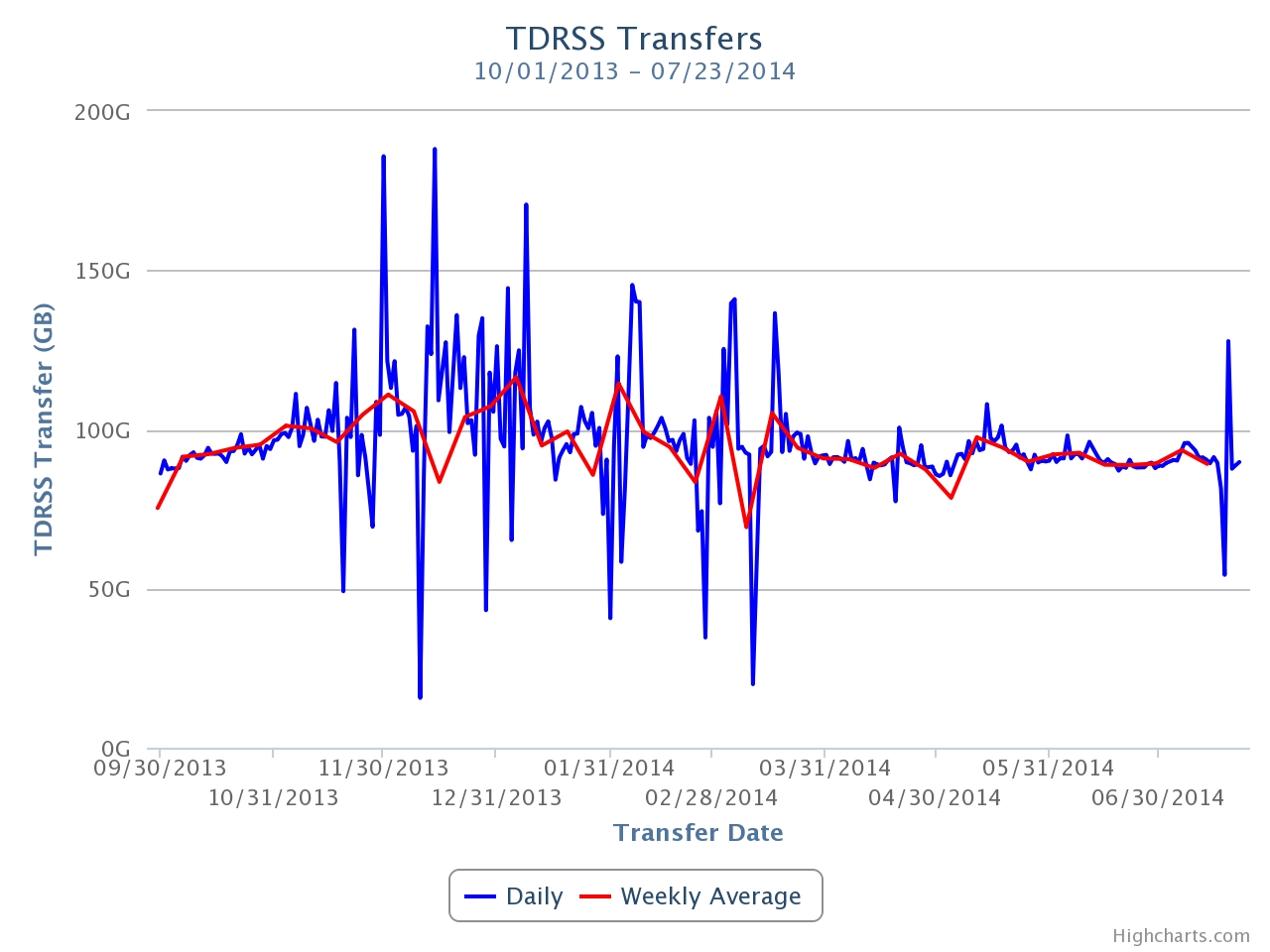

Data Movement – Data movement has performed nominally over the past year. Figure 11 shows the daily satellite transfer rate and weekly average satellite transfer rate in GB/day through July 2014. The IC86 filtered physics data are responsible for 95% of the bandwidth usage.

Figure 11: TDRSS Data Transfer Rates, October 1, 2013–July 24, 2014. The daily transferred volumes are shown in blue and, superimposed in red, the weekly average daily rates are also displayed.

Starting in November 2013, a series of unscheduled software and hardware outages caused unpredictable daily satellite transfer rates. Ongoing maintenance and additional transfer passes kept the average weekly satellite transfer rate close to our nominal rate. Science data transfer goals were met despite the unpredictable daily rates.

Data Archive – The IceCube raw data are archived on LTO4 data tapes. Data archival goals were met despite some outages for maintenance. A total of 261 TB of data were written to LTO tapes between October 1, 2013 and July 24, 2014, averaging 881 GB/day. A total of 28 TB of data were sent over TDRSS, averaging 95 GB/day. As mentioned previously, because the raw data tapes have a limited lifespan and migrating data from them is difficult, alternate strategies for long-term data archiving are being explored.
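The daily averages quoted above follow directly from the totals and the length of the reporting window. The sketch below reproduces them, assuming decimal units (1 TB = 1000 GB) and counting days as the difference between the two dates; both conventions are assumptions, and the results agree with the quoted figures to within rounding.

```python
from datetime import date

# Reporting window and totals quoted in the report.
START = date(2013, 10, 1)
END = date(2014, 7, 24)
TAPE_TB = 261        # written to LTO tapes
SATELLITE_TB = 28    # sent over TDRSS

GB_PER_TB = 1000     # assumption: decimal units

# Length of the window in days (difference between the two dates).
days = (END - START).days

tape_gb_per_day = TAPE_TB * GB_PER_TB / days
satellite_gb_per_day = SATELLITE_TB * GB_PER_TB / days

# Share of the archived volume that is also transferred north by satellite.
satellite_share = SATELLITE_TB / TAPE_TB

print(f"window length:     {days} days")
print(f"tape average:      {tape_gb_per_day:.0f} GB/day")
print(f"satellite average: {satellite_gb_per_day:.0f} GB/day")
print(f"satellite share:   {satellite_share:.1%}")
```

Only about a tenth of the archived volume travels over the satellite link, which is why the raw data archive depends on physical media shipped from the Pole.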

The possibility of partnering with Fermilab for archiving data is being explored. Managing a multi-petabyte tape archive for several years entails a number of very specialized operational procedures. One example is the periodic tape media migration that must be done on an ongoing basis to avoid losing data when tapes decay. The goal of this exploration is to evaluate whether a large facility where this is done routinely at the scale of hundreds of petabytes provides economies of scale that ultimately make the process more efficient and economical. The service being prototyped is an archive only; it is not intended for access by users, which would require much greater resources. Remote ingest and access tests have just started as of July 2014.

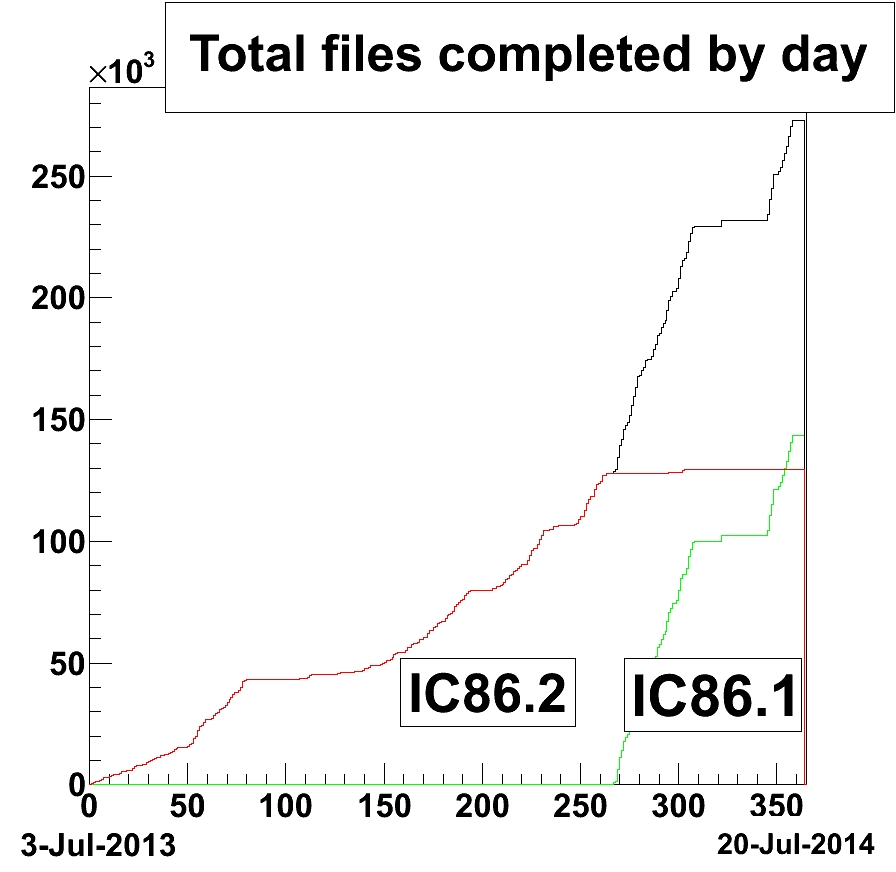

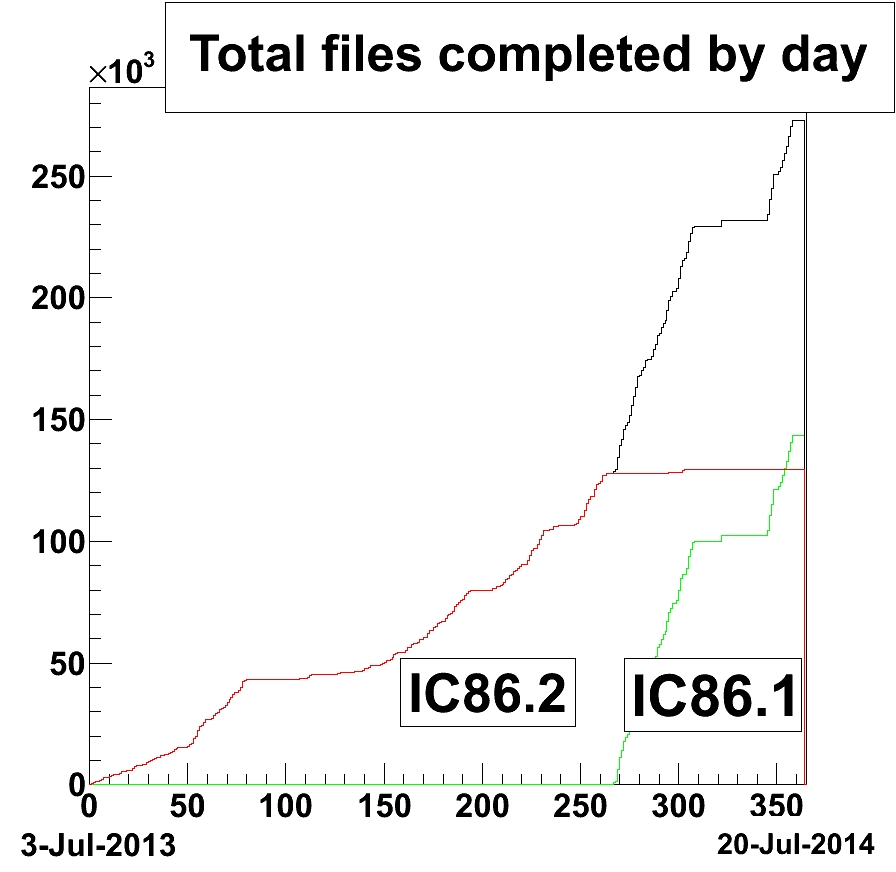

At the end of 2012, the IceCube Collaboration agreed to store the compressed SuperDST as part of the long-term archive of IceCube data. It was decided that this change would be implemented from the IC86-2011 run onwards. A server, a small tape library for output, and a partition of the main tape library for input were dedicated to this data reprocessing task, which as of July 2014 had processed 54% of the IC86-2012 files (222,952 files; all the tapes that had arrived from the Pole) and 78% of the IC86-2011 files (226,283 files). Raw tapes are read to disk and the raw data files are processed into SuperDST. One copy is saved in the data warehouse and another is written (using JADE) to LTO5 tapes. The progress of this reprocessing task over time is displayed in Figure 12. The reprocessing started with the IC86-2012 run because the Supernova working group wanted some of the data. After this, the priority is to process the data that was stored on the older LTO3 tape technology.

Figure 12: Number of files re-processed from the IC86-2011 (green) and IC86-2012 (red) runs to generate the SuperDST archival data products.

Offline Data Filtering – The transition from the IC86-2013 to the IC86-2014 season was made on May 6, 2014. Processing and final validation of the data from the IC86-2013 season was completed within the two-week latency target between data collection and offline processing. The season spanned from May 2, 2013 to May 6, 2014 (369 days). Of the data collected over this period, 362 days have been classified as good for further physics analysis. The difference is accounted for by time spent taking test data during the season and the overhead associated with the transition between detector runs. A total of 615,000 CPU hours on the NPX cluster at UW–Madison was required for the IC86-2013 production. This is lower than the initial estimates, largely because less reprocessing was needed during the season as a result of our improved production validation system. 110 TB of storage was required for both the Pole-filtered and offline Level 2 data. Replication of these data at Deutsches Elektronen-Synchrotron (DESY) has also been completed.
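The season length and the good-data fraction implied by the numbers above can be checked with a short calculation. This is a minimal sketch; the per-day processing cost is a derived average for illustration only and is not a figure given in the report.

```python
from datetime import date

# Figures quoted in the report for the IC86-2013 season.
SEASON_START = date(2013, 5, 2)
SEASON_END = date(2014, 5, 6)
GOOD_DAYS = 362              # days of data classified as good
CPU_HOURS = 615_000          # NPX CPU hours used for offline production

# Calendar length of the season.
season_days = (SEASON_END - SEASON_START).days

# Fraction of the season that yielded analysis-quality data.
good_fraction = GOOD_DAYS / season_days

# Derived average processing cost per day of good data (illustrative).
cpu_hours_per_good_day = CPU_HOURS / GOOD_DAYS

print(f"season length:          {season_days} days")
print(f"good-data fraction:     {good_fraction:.1%}")
print(f"CPU hours per good day: {cpu_hours_per_good_day:.0f}")
```

The seven-day difference between the season length and the good-data total corresponds to the test runs and run-transition overhead mentioned above.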

Following the vetting of processing scripts and validation of results with test data, production of the IC86-2014 offline data started at the end of May 2014. A latency of one to two weeks between data collection and offline processing has been maintained since production started. The production resources required for the current season are estimated to be comparable to those of the previous season. This estimate is based on benchmark results from test data and on the fact that there are minimal changes in processing requirements between the IC86-2013 and IC86-2014 seasons.

There is an ongoing effort for the various physics analysis groups in the collaboration to adopt the tools currently employed by the centralized offline production for their post-Level 2 data processing. In addition to giving access to the well-established automation and monitoring facilities, this has the potential advantages of better coordination and improved resource management and planning. A test case with one of the physics analysis groups is in progress.

Simulation – The current production of IC86 Monte Carlo began in mid-2012. Direct generation of Level 2 simulation data is now used to reduce storage space requirements. A new version of the IceCube simulation metaproject, IceSim 4, was released at the beginning of 2014. The new software includes improvements to low-level DOM simulation, correlated noise generation, Earth modeling, and lepton propagation, as well as the ability to simulate cosmic-shower-induced neutrinos from CORSIKA.

We have progressed toward having 100% of all simulations based on direct photon propagation using GPUs or a hybrid of GPU and spline-photonics for high-energy events. Producing simulations of direct photon propagation using GPUs began with a dedicated pool of computers built for this purpose in addition to the standard CPU-based production. Benchmark performance studies of consumer-class GPU cards have been completed and provided to the collaboration as we scale up the available GPUs for simulation. Several institutions have purchased or are in the process of purchasing additional GPU hardware for simulation production.

The simulation production sites are: CHTC – UW campus (including GZK9000 GPU cluster); Dortmund; DESY-Zeuthen; University of Mainz; EGI – German grid; WestGrid – U. Alberta; SciNet – University of Toronto; SWEGRID – Swedish grid; PSU – Pennsylvania State University; UMD – University of Maryland; IIHE – Brussels; UGent – Ghent; Ruhr-Uni – Bochum; UC Irvine; PDSF/Carver/Dirac – LBNL; OSG – Open Science Grid; and NPX – UW IceCube. There are plans to incorporate additional computing resources in Canada and Denmark, and to expand the pool of GPU resources at several computing centers.

Personnel – As of July 2014, the Simulation Production team has an open position for a software developer to work on the maintenance and development of the data processing and simulation production framework. This position will reinforce Simulation Production operations, as recommended by the SCAP in April 2014.

Data Release

Data Use Policy – IceCube is committed to the goal of releasing data to the scientific community. The following links contain data sets produced by AMANDA/IceCube researchers along with a basic description. Due to challenging demands on event reconstruction, background rejection and systematic effects, data will be released after the main analyses are completed and results are published by the international IceCube Collaboration. The following two links give more information about IceCube data formats and policies.

IceCube Open Data: http://icecube.umd.edu/PublicData/I3OpenDataFormat.html

IceCube Policy on Data Sharing: http://icecube.umd.edu/PublicData/policy/IceCubeDataPolicy.pdf

Datasets (last release on 21 Nov 2013): http://icecube.wisc.edu/science/data

Program Management

Management & Administration – The primary management and administration effort is to ensure that tasks are properly defined and assigned and that the resources needed to perform each task are available when needed. Efforts include monitoring that resources are used efficiently to accomplish the task requirements and achieve IceCube’s scientific objectives.

· The FY2014 M&O Plan was submitted in December 2013.

· The detailed M&O Memorandum of Understanding (MoU) addressing responsibilities of each collaborating institution was revised for the collaboration meeting in Banff, Canada, March 2–8, 2014.

Starting in September 2014, Professor Kael Hanson of the Université Libre de Bruxelles will succeed Jim Yeck as Director of WIPAC and Director of IceCube Maintenance and Operations.

IceCube M&O - FY2014 Milestones Status:

| Milestone | Month |
| --- | --- |
| Revise the Institutional Memorandum of Understanding (MOU v15.0) - Statement of Work and Ph.D. Authors head count for the fall collaboration meeting | October 2013 |
| Report on Scientific Results at the Fall Collaboration Meeting | October 7-12, 2013 |
| Post the revised institutional MoU’s and Annual Common Fund Report and notify IOFG. | December 2013 |
| Submit for NSF approval, a revised IceCube Maintenance and Operations Plan (M&OP) and send the approved plan to non-U.S. IOFG members. | December 2013 |
| Annual South Pole System hardware and software upgrade is complete. | January 2014 |
| Revise the Institutional Memorandum of Understanding (MOU v16.0) - Statement of Work and Ph.D. Authors head count for the spring collaboration Meeting | February 2014 |
| Report on Scientific Results at the Spring Collaboration Meeting | March 3-8, 2014 |
| Submit to NSF a mid-year interim report with a summary of the status and performance of overall M&O activities, including data handling and detector systems. | March 2014 |
| Software & Computing Advisory Panel (SCAP) Review at UW-Madison | April 1-2, 2014 |
| Submit for NSF approval an annual report which will describe progress made and work accomplished based on objectives and milestones in the approved annual M&O Plan. | August 2014 |
| Revise the Institutional Memorandum of Understanding (MOU v17.0) - Statement of Work and Ph.D. Authors head count for the fall collaboration meeting | September 2014 |

Engineering, Science & Technical Support – Ongoing support for the IceCube detector continues with the maintenance and operation of the South Pole Systems, the South Pole Test System, and the Cable Test System. The latter two systems are located at the University of Wisconsin–Madison and enable the development of new detector functionality as well as investigations into various operational issues, such as communication disruptions and electromagnetic interference. Technical support provides for coordination, communication, and assessment of impacts of activities carried out by external groups engaged in experiments or potential experiments at the South Pole.

Education & Outreach (E&O) – The IceCube Collaboration is building on the success of the project, developing partnerships, and advancing new initiatives to reach and sustain audiences and provide them with high-quality experiences. The outside perspective provided by the E&O advisory panel, which met virtually for the second annual review in spring 2014, continues to be a helpful guide. Here we summarize some of the highlights from the past year and describe a few exciting events scheduled for the upcoming year.

At the fall IceCube Collaboration meeting in Munich, education and outreach was covered in a dedicated plenary session that included reports of activities from multiple IceCube institutions and a very enjoyable overview from the 2012-13 winterovers. An evening panel discussion at the Deutsches Museum in Munich, ArtScience—Exploring New Worlds, Realizing the Imagined, drew an audience of nearly 200, with roughly equal participation from the art and science communities. The spring IceCube Collaboration meeting in Banff featured an evening session with the film No Horizon Anymore by Keith Reimink, a cook who wintered over in 2009, along with a flat-screen version of the fulldome video Chasing the Ghost Particle, from the South Pole to the Edge of the Universe.

Chasing the Ghost Particle premiered in its fulldome version on November 21, 2013, at the Milwaukee Public Museum’s planetarium theater to a capacity crowd of over 250 people. The UW–Madison premiere in January 2014 also attracted a capacity crowd of nearly 300 people. Chasing the Ghost Particle has been shown nationally and internationally at more than 30 venues.

The first IceCube masterclass was held on May 21, 2014. Approximately one hundred high school students in total participated at five sites—in the US at Madison, WI, and Newark, DE, and in Europe at two institutions in Brussels, Belgium, and one in Mainz, Germany. The anecdotal reports are very positive from both IceCube collaborators and students who participated. The masterclass will be presented in a talk at the 2014 American Association of Physics Teachers meeting in July 2014. We are actively recruiting more IceCube collaborators to host students for the spring 2015 masterclass.

During this reporting period, four webcasts from the South Pole reached 20 schools and more than 600 people in six countries. In January 2014, we had a joint webcast with CERN that allowed participants in the US and Greece to virtually tour the CMS detector and visit the South Pole. To provide more in-depth information to interested but non-expert audiences, we have created news pieces for the web that summarize IceCube publications, including the paper that appeared on the cover of Science (November 2013, vol. 342). The Science paper attracted a great deal of media attention, which prompted development of dedicated web resources including a photo gallery, videos, and news releases. These and other resources were also used to support activities in conjunction with IceCube being named the Physics World 2013 Breakthrough of the Year.

An NSF IRES proposal has been funded starting in July 2014 to send 18 US IceCube undergraduate students to European IceCube collaborating institutions for 10-week summer research experiences over the next three years. Two students, one from a two-year college, spent 10 weeks in Brussels at the Université libre de Bruxelles in the summer of 2014. This program will provide exciting opportunities for US undergraduates to continue to contribute to the IceCube project.

Section III – Project Governance and Upcoming Events

The detailed M&O institutional responsibilities and Ph.D. author head count are revised twice a year at the time of the IceCube Collaboration meetings. The revision is formally approved as part of the institutional Memorandum of Understanding (MoU) documentation. The MoU was last revised in March 2014 for the Spring collaboration meeting in Banff, Canada (v16.0), and the next revision (v17.0) will be posted in September 2014 at the Fall collaboration meeting in Geneva, Switzerland.

Changes to the IceCube Governance Document reflecting the analysis procedures that have been in place since last summer were approved at the Spring collaboration meeting in Banff, Canada. The composition and term limits of the publication committee were also revised. A Memorandum of Understanding (MoU) was signed with LIGO, Borexino, LVD, and VIRGO for multi-messenger astronomy with supernova neutrinos and gravitational waves.

IceCube Collaborating Institutions

At the March 2014 Spring collaboration meeting, the South Dakota School of Mines and Technology, with Dr. Xinhua Bai as the institutional lead, and Yale University, with Dr. Reina Maruyama as the institutional lead, were approved as full members. The Moscow Engineering Physics Institute (Markus Ackerman for DESY/Dortmund sponsor, A. Petrukhin, R. Kokoulin, A. Bogdanov, S. Khokhlov, E. Kovylyaeva) and Queen Mary University of London (Ty DeYoung for PSU sponsor, Teppei Katori) were approved as associate members.

As of July 2014, the IceCube Collaboration consists of 43 institutions in 12 countries (18 U.S. and 25 Europe and Asia Pacific).

The list of current IceCube collaborating institutions can be found on:

http://icecube.wisc.edu/collaboration/collaborators

IceCube Major Meetings and Events

IceCube Fall Collaboration Meeting – CERN (Geneva), Switzerland September 15–19, 2014

National Academies of Sciences Study on Strategic Vision for USAP October 21, 2014

IceCube Spring Collaboration Meeting – Madison, WI April 28 – May 2, 2015

Acronym List

CVMFS CernVM-Filesystem

DAQ Data Acquisition System

DOM Digital Optical Module

IceCube Live The system that integrates control of all of the detector’s critical subsystems; also “I3Live”

IceTray IceCube core analysis software framework, part of the IceCube core software library

MoU Memorandum of Understanding between UW–Madison and all collaborating institutions

SBC Single-board computer

SNDAQ Supernova Data Acquisition System

SPE Single photoelectron

SPS South Pole System

SuperDST Super Data Storage and Transfer, a highly compressed IceCube data format

TDRSS Tracking and Data Relay Satellite System, a network of communications satellites

TFT Board Trigger Filter and Transmit Board

UPS Uninterruptible Power Supply

WIPAC Wisconsin IceCube Particle Astrophysics Center
