Table of Contents

- Section I – Maintenance and Operations Status and Performance
  - Program Management
  - Detector Operations and Maintenance
  - Computing and Data Management
- Section II – Financial/Administrative Performance
- Section III – Project Governance & Upcoming Events

Section I – Maintenance and Operations Status and Performance

Program Management

Management & Administration – The primary management and administration effort is to ensure that tasks are properly defined and assigned and that the resources needed to perform each task are available when needed. Efforts include monitoring that resources are used efficiently to accomplish the task requirements and achieve IceCube’s scientific objectives.

- The FY2011 Maintenance and Operations Plan (M&OP) was submitted in February 2011 and approved by NSF. The FY2011 Mid-Year Report was submitted in March 2011.
- The detailed M&O Institutional Memorandum of Understanding (MoU) was last revised in April 2011 for the spring collaboration meeting in Madison.
- We continue to manage computing resources to maximize uptime of all computing services and ensure the availability of required distributed services, including storage, processing, database, grid, networking, interactive user access, user support, and quota management.
- The IceCube Research Center (IRC) created separate accounts for collecting and reporting IceCube M&O funding: 1) NSF M&O Core; 2) U.S. Common Fund (CF); and 3) Non U.S. CF. A total of $6,900,000 was provided by NSF to UW-Madison to cover the M&O costs during FY2011, including a total contribution of $928,200 to the U.S. CF. Sub-awards with six U.S. institutions were issued through the established University of Wisconsin-Madison requisition process (details in Section II).

IceCube M&O - FY2011 Milestones Status

| Milestone | Planned | Actual |
|---|---|---|
| Provide NSF and IOFG with the most recent Memorandum of Understanding (MoU) with all U.S. and foreign institutions in the Collaboration. | Sept. 2010 | |
| Develop and submit for NSF approval the IceCube M&O Plan (M&OP) and send this plan to each of the non-U.S. IOFG members for their oversight. | Jan. 2011 | Feb. 23, 2011 (A) |
| Annual South Pole System hardware and software upgrade. | Jan. 2011 | Jan. 2011 (A) |
| Revise the Institutional Memorandum of Understanding Statement of Work and PhD Authors Head Count for the Spring Collaboration Meeting. | Apr. 2011 | Apr. 22, 2011 (A), MoU v10.0 |
| Submit a mid-year report with a summary of the status and performance of overall M&O activities, including data handling and detector systems. | Mar. 2011 | Mar. 31, 2011 (A) |
| Annual Software & Computing Advisory Panel (SCAP) Review. | Apr. 2011 | Apr. 27, 2011 (A) |
| Report on Scientific Results at the Spring Collaboration Meeting. | Apr. 2011 | Apr. 2011 (A) |
| Develop and submit for NSF approval a plan for data sharing and data management that is consistent with guidance from the IOFG and NSF. | Apr. 2011 | Apr. 12, 2011 (A) |
| Annual Scientific Advisory Committee (SAC) Review. | May 2011 | Apr. 27, 2011 (A) |
| Submit to NSF an annual report which will describe progress made and work accomplished based on objectives and milestones in the approved annual M&O Plan. | Sept. 2011 | Aug. 2011 (A) |
| Revise the Institutional Memorandum of Understanding Statement of Work and PhD Authors Head Count for the Fall Collaboration Meeting. | Sept. 2011 | Sept. 2011 |
| Report on Scientific Results at the Fall Collaboration Meeting. | Sept. 2011 | Sept. 2011 |
| Annual Detector Up-Time Self Assessment. | Oct. 2011 | Oct. 2011 |

Engineering, Science & Technical Support – Ongoing support for the IceCube detector continues with the maintenance and operation of the South Pole Systems, the South Pole Test System and the Cable Test System. The latter two systems are located at the University of Wisconsin–Madison and enable the development of new detector functionality as well as investigations into various operational issues such as communication disruptions and electromagnetic interference. Technical support provides for coordination, communication, and assessment of impacts of activities carried out by external groups engaged in experiments or potential experiments at South Pole.

Software Coordination – A review panel for permanent code was assembled for the IceTray-based software projects and to address the long term operational implications of recommendations from the Internal Detector Subsystem Reviews of the on-line software systems. The permanent code reviewers are working to unify the coding standards and apply these standards in a thorough and timely manner. The internal reviews of the on-line systems mark an important transition from a development mode into steady-state maintenance and operations. The reviews highlight the many areas of success as well as identify areas in need of additional coordination and improvement.

Work continues on the core analysis and simulation software to rewrite certain legacy projects and improve documentation and unit test coverage. The event handling in IceTray is being modified to solve two related problems: 1) the increasing complexity of the triggered events due to the size of the detector and the sophistication of the on-line triggers and 2) the increasing event size due to different optimizations of the hit selections used in different analyses.

Education & Outreach (E&O) – The completion of construction of the IceCube array gave rise to a growing number of outreach opportunities during this period. IceCube was able to capitalize on detector completion to launch a media campaign at the end of December 2010, and used completion again as the impetus for public outreach programs during the official inauguration in Madison, Wisconsin, at the end of April 2011.

Activities with the Knowles Science Teaching Fellows, PolarTREC, and the high school prep program Upward Bound continue and are detailed below. Education and outreach activities are presented in terms of pre-season (October 2010), South Pole Season (November 2010-February 2011), detector completion celebration events (March-May 2011), and summer activities (June-September 2011).

E&O: Pre-Season

Francis Halzen, IceCube principal investigator, was interviewed for Science Nation, a science video series commissioned by the NSF Office of Legislative and Public Affairs. The video was featured on their site at the end of October and gives an excellent overview of the project in terms of scientific results and detector construction. The video, which was picked up by several news outlets, can be viewed at:

http://www.nsf.gov/news/special_reports/science_nation/icecube.jsp

Also in October, Professor Albrecht Karle gave the plenary address at the Wisconsin Association of Physics Teachers annual meeting. This meeting was held in conjunction with the Minnesota Association of Physics Teachers and the Wisconsin Society of Physics Students. Prof. Karle brought the audience up-to-date on the IceCube construction project and recent analysis results.

E&O: Pole Season

IceCube construction completion provided a great opportunity to publicize the project. Immediately following deployment of the final digital optical module, a press release was issued simultaneously by the National Science Foundation, the University of Wisconsin-Madison, and Lawrence Berkeley National Lab.

Coverage in major online and printed publications was impressive. Media outlets included Scientific American, CBS News, the Daily Mail, the Times of India, Wired News, Nature.com, msnbc.com, and the Associated Press. Reports of completion were also published in German, Swedish, Portuguese, Spanish and in a number of Australian outlets.

The excitement generated by the completion of IceCube construction led to a number of requests for speakers, interviews and contacts during January. IceCube winterovers communicated via teleconference with an elementary school in Florida and a Belgian television station. Media and outreach contacts came from Science Illustrated Magazine, Virtual Researcher on Call (a non-profit educational outreach program in Canada), the UW alumni association, a local Rotary club, and several elementary schools.

Over 30 media outlets from around the world featured IceCube in January. Here are a few highlights:

IceCube was featured in an audio interview on CBC news with Darren Grant, collaborator at the University of Alberta.

http://www.cbc.ca/technology/story/2011/01/17/antarctica-physics-lab.html

Washington Post:

http://www.washingtonpost.com/wp-dyn/content/article/2011/02/07/AR2011020703606.html

PBS Newshour:

http://www.pbs.org/newshour/rundown/2011/01/what-is-a-neutrino-and-why-should-anyone-but-a-particle-physicist-care.html

The Guardian:

http://www.guardian.co.uk/science/2011/jan/23/neutrino-cosmic-rays-south-pole

IEEE Spectrum:

http://spectrum.ieee.org/aerospace/astrophysics/icecube-the-polar-particle-hunter

During the 2010-2011 South Pole season we were once again able to include a Knowles Science Teaching Fellow (KSTF) as part of the IceTop research team. High school physics teacher Katherine Shirey from Arlington, VA, was selected from candidates who applied through the NSF's PolarTREC program.

As well as playing an active role in building and testing IceTop tanks, Ms. Shirey communicated via a live broadcast and through blogs with her students and other classrooms around the country. Her adventures were featured in the Washington Post and other media outlets.

PolarTREC is an NSF-funded outreach program that pairs polar researchers with teachers. KSTF supports recent science, technology, engineering, and math graduates who have decided to pursue a teaching career.

E&O: Detector Completion

In April of 2011, the IceCube Collaboration meeting was held in Madison, Wisconsin and designed to be both a scientific meeting and celebration of detector completion. IceCube Invites Particle Astrophysics, the Antarctic Science Symposium, and a number of public events were carried out in order to attract different audiences and bring attention to various aspects of the project.

During the week, high school students from the River Falls, Wisconsin Upward Bound program traveled to Madison to visit local classrooms. They made presentations in middle schools about the program and what they have learned.

On the Saturday following the official inauguration ceremony, we hosted a teacher workshop, photo session, interactive video broadcast, and a late-night outreach session titled “IceCube Afterdark”. Upward Bound students assisted with the workshop, with presentations from former KSTF and PolarTREC participants Katherine Shirey and Casey O’Hara. Louise Huffman, education and outreach coordinator for the ANDRILL project, also presented at the teacher workshop.

Before the April events, winterover Freija Descamps participated in a two-week science forum co-produced by the BBC World Service, Public Radio International, and US-based news service WGBH.

An interview with Ms. Descamps was made available as a podcast before the beginning of her two-week forum. While her forum was open, she responded daily to questions from an international audience. The podcast and forum questions are available at:

http://www.world-science.org/forum/life-south-pole-scott-freija-descamps-amundsen-antarctica/

Early April also included IceCube Research Center participation in the annual University of Wisconsin-Madison Science Expeditions, a campus-wide science event. We brought our ice-drilling activity. An estimated 2,000 people attended Science Expeditions this year.

E&O: Summer

The summer months continue to be active with outreach presentations and appearances, but perhaps the most significant educational events take place with summer students. IceCube scientists at UW-Madison sponsor one REU student and fund 10 to 15 undergraduate students at any time during the summer. They collaborate on a variety of IceCube research projects, including data analysis, information technology, communications, and outreach. Madison also hosts high school summer interns from the Information Technology Academy, a four-year program for minority youth.

Upward Bound students and KSTF teachers were reunited this July for their annual summer workshop, a week-long session of exploring science and education. This year the teachers and students focused on developing and testing engaging new activities for IceCube outreach.

IceCube participated this year in UW Madison days at the Wisconsin State Fair in Milwaukee, Wisconsin. With over 500 pounds of ice and 20 volunteers, the booth was busy and popular with families. IceCube volunteers included graduate students, researchers, engineering staff, and computer scientists, many of whom were attending their first outreach event. While we saw hundreds of people throughout the day, the real success came from seeing our volunteers actively engage and encourage people to learn about the project.

Detector Operations and Maintenance

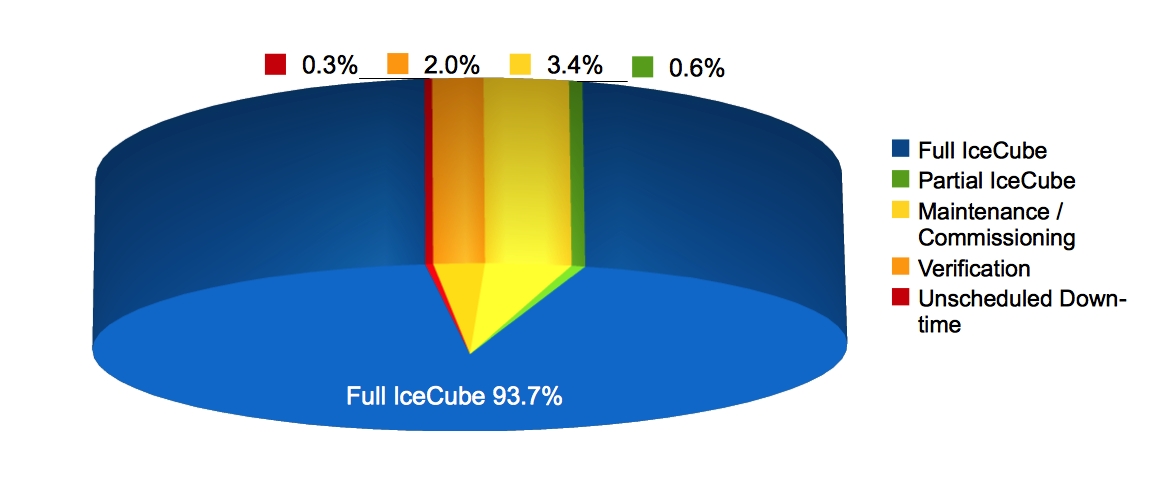

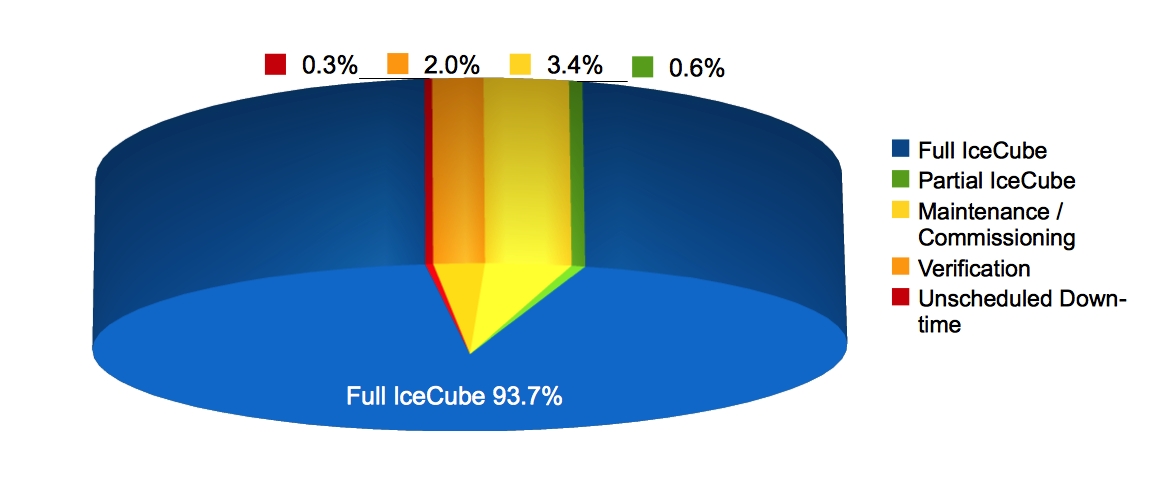

Detector Performance – During the period 1 October 2010 through 12 May 2011 the 79-string detector configuration (IC79) operated in standard physics mode 93% of the time. Standard physics operation was attained 97% of the time during the 86-string configuration from 13 May 2011 through 31 July 2011. Figure 1 shows the cumulative detector time usage over the reporting period, ending 31 July 2011. The trend of increasingly good performance and continual operations continued through the austral summer construction and maintenance season. Seven new strings and eight IceTop stations were commissioned. The initial in-ice verification, calibration, and geometry of the full detector were completed in a mere 66.2 hours of detector time. Data from various verification tools (e.g., dust loggers, in-ice cameras, etc.) to study details of the ice properties used 31.6 hours of detector time. All 52 detector control and processing compute nodes were replaced on the South Pole System (SPS) resulting in a loss of merely 1.5 hours of detector uptime. Unexpected downtime due to routine hardware failures was 0.5%.

Figure 1: Cumulative IceCube Detector Time Usage, 1 October 2010 – 31 July 2011

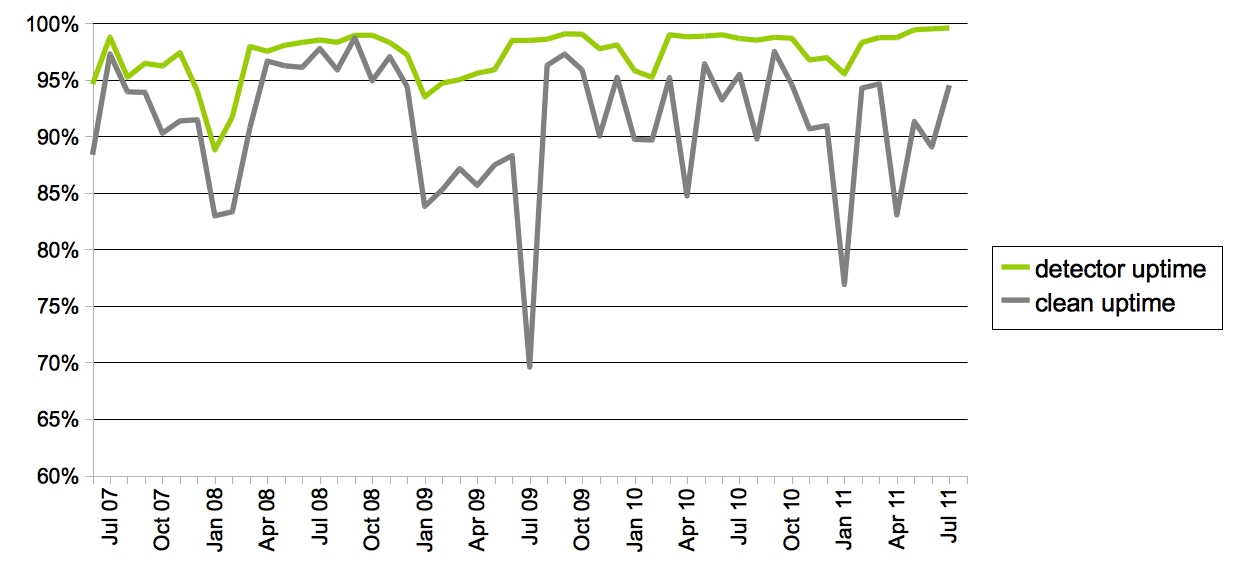

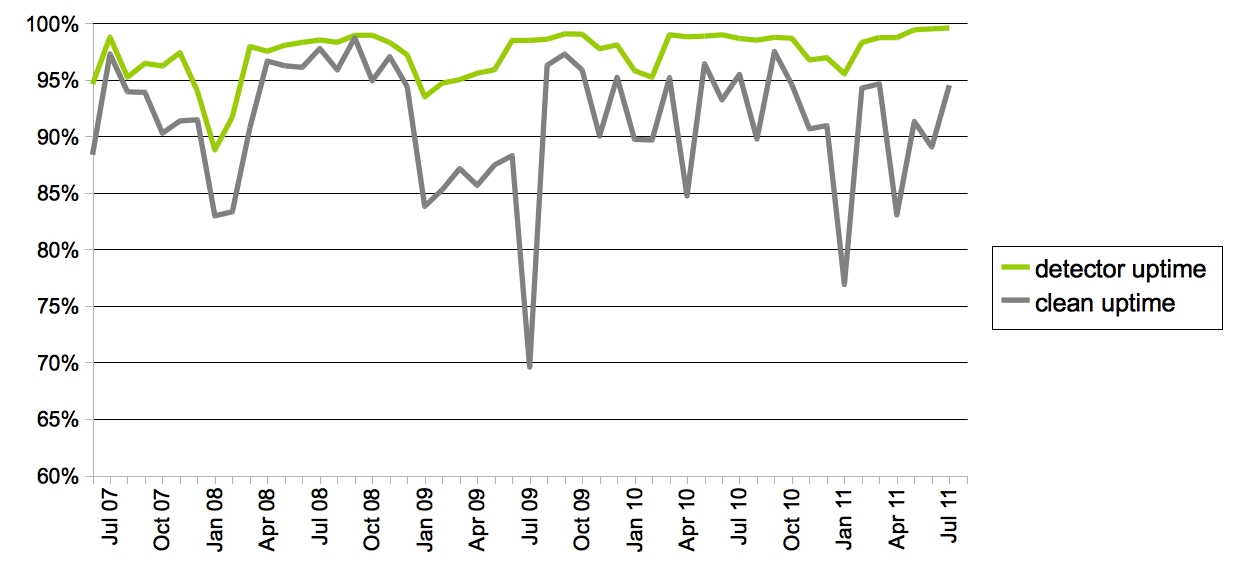

The average detector uptime was 98.3%. Of this, 89.7% of the data was standard analysis, clean uptime. Figure 2 shows the improved stability in the average monthly uptime over the past three years.

Figure 2: Total IceCube Detector Uptime and Clean Uptime

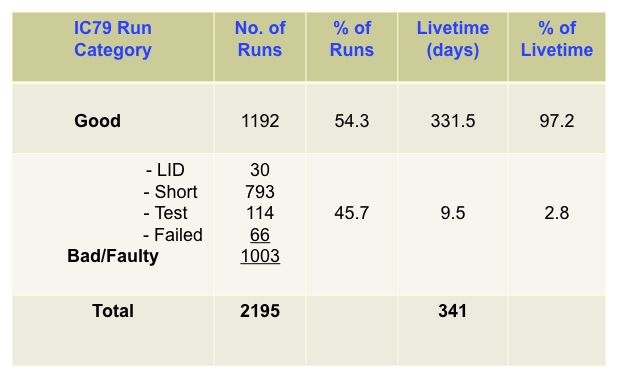

The preliminary good run list for IC79, including May 2010 through April 2011, found 94.3% of the data suitable for physics analysis. The remaining 5.7% primarily consisted of calibration runs using non-standard triggers and in-situ light sources such as the LED flashers whose data are not suitable for normal analysis. However, a portion of the detector is operated with full physics triggers during calibration runs and these data would be analyzed in the event of an extraordinary astrophysical transient event. The data quality group is currently producing an IceCube internal document of the good run list procedures. Studies of the preliminary good run list for IC86 are underway.

IceCube Live experiment control has undergone two major upgrades, V1.5.0 and V1.6.0, to expand the overall utility of the system for detector operators and for the Collaboration at large. The Recent Run display was upgraded to allow for the annotation of individual runs, sets of runs, periods of downtime, and the searching of such comments. The Run Verification and Run Monitoring systems were merged into IceCube Live and wired directly into the Recent Run page; this effort was aided by in-kind contributions. The Good Run List dataset is also supported. The incorporation of these three areas into IceCube Live represents a unification of the Verification, Run Monitoring, and the Good Run List functionality relative to the previous three separate ad-hoc designs. A real time view of the Processing and Filtering (PnF) system with graphical summaries on the Status page and a more comprehensive PnF page showing relevant latencies and rates were added. Performance of many of the Web pages was studied and improved by a factor of ten or more for certain cases. Other improvements included upgrades to the automated testing infrastructure, enhancements to graphs of monitored quantities, better alert coverage, and improved documentation.

A scripted IceCube Live deployment scheme was tested extensively on the SPTS in preparation for a comprehensive upgrade of the servers at the SPS. The upgrade of both IceCube Live machines at Pole was carried out in December with only ten minutes of total downtime. The uptime for IceCube Live over the period of this report was over 99.9%.

The Data Acquisition (DAQ) released three software revisions during the reporting period. Hobees2 was released in December 2010 to streamline the run deployment process and minimize detector downtime. Jupiter was released in May 2011 to improve reliability of the central server, resolve a long-standing bug affecting extremely long trigger requests, and deliver the Slow Monopole trigger. Jupiter2 was released in June 2011, fixing file handle leaks, long DAQ startup delays, and sporadic and minor data losses by enabling the use of 16 MB lookback memory in DOMs.

Supernova DAQ (SNdaq) found that approximately 97% of the available run data from 10 October 2010 through 11 August 2011 met the minimum analysis criteria for run duration and data quality. A modification was made to exclude runs shorter than 10 minutes because they contain insufficient data to identify an actual supernova candidate. Of the 3.4% downtime, 0.7% was from runs under 10 minutes. The shorter runs can be merged by hand with previous and successive data to perform an analysis, should the need arise. The remaining downtime was caused by operational issues that were subsequently mitigated.
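The run-duration cut described above can be illustrated with a short sketch. This is not the SNdaq implementation; the `Run` record, its field names, and the sample durations are hypothetical and only the 10-minute threshold comes from the text.

```python
from dataclasses import dataclass

MIN_RUN_MINUTES = 10.0  # threshold stated in the report


@dataclass
class Run:
    run_id: int          # hypothetical identifier
    duration_min: float  # run length in minutes (hypothetical field)


def split_runs(runs, min_minutes=MIN_RUN_MINUTES):
    """Separate runs that pass the duration cut from short runs.

    Short runs are returned rather than discarded, since the report notes
    they can still be merged by hand with neighbouring data.
    """
    accepted = [r for r in runs if r.duration_min >= min_minutes]
    short = [r for r in runs if r.duration_min < min_minutes]
    return accepted, short


def short_time_fraction(runs, min_minutes=MIN_RUN_MINUTES):
    """Fraction of total run time contributed by runs below the cut."""
    total = sum(r.duration_min for r in runs)
    if total == 0:
        return 0.0
    short = sum(r.duration_min for r in runs if r.duration_min < min_minutes)
    return short / total


# Made-up example durations, for illustration only.
example_runs = [Run(1, 480.0), Run(2, 4.5), Run(3, 10.0), Run(4, 8.0)]
accepted_runs, short_runs = split_runs(example_runs)
print([r.run_id for r in accepted_runs])
print([r.run_id for r in short_runs])
```

A run of exactly 10 minutes is kept here because the text excludes only runs "shorter than 10 minutes".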

The online filtering system performs real-time reconstruction and selection of events collected by the DAQ. Events are selected based on event quality criteria established by the IceCube collaboration physics working groups. Scripted software deployments were implemented and tested in advance of the SPS hardware upgrade deployed at South Pole during the 2010-11 austral summer. Development tasks focused on testing the system to support the upgraded computing hardware and software. Version V11-04-00 was used for the IC86 24 hour test run. Version V11-05-00 was a major upgrade in support of the start of the IC86 physics run. It included a new set of filters from the physics working groups, and new, compressed data formats allowing for the transfer of a wider variety of physics data. Online filtering was tightly integrated into IceCube Live adding alerts, status and filter rates for operator monitoring. The system documentation for winterover operators was updated and improved. The online filtering software is currently running release V11-05-01 which contains improvements for run monitoring and support for the full moon filter.

The appropriate frequency of updating DOM calibration for in-ice and IceTop data taking was studied and widely discussed. In-ice calibration is very stable and the decision was made to calibrate in-ice DOMs once per year, at the start of the physics run. IceTop DOMs are affected by weather and seasonal muon rates and will continue to be calibrated monthly. A weekly calibration phone conference keeps abreast of issues in DOM calibration both in-ice and offline. In addition to offline calibration in IC86, the DOMs are calibrated using baselines derived from the “beacon” hits, which yields a more accurate charge estimate in high-charge waveforms.

Two remote-controlled camera systems were connected to the bottom of string 80 for deployment on December 18. The cameras were operating during the entire descent down the hole while LED lamps and lasers lit up the ice wall structure. The overall structure of the dust layers in the deep ice was clearly seen and a volcanic ash layer was observed at 306 meters, exactly as predicted. The cameras now reside a few meters below the deepest optical module, at 2,453 and 2,458 meters. Short daily observations were taken during the freezing of the hole and continued once per week through March 2011 after which time monthly observations were taken. The observations showed that the frozen hole consists of very transparent ice with some fissures and a central column of ice containing bubbles. The ice bubbles correspond to about 15% of the refrozen ice. The freeze-in period lasted two and a half weeks during which time the pressure in the hole reached 530 bar.

IC86 Physics Run – The IC86 physics run began 13 May 2011. Seven new in-ice strings and 8 IceTop stations were commissioned. The TFT board finalized the physics filter content. On-line filtering improvements included better system stability when many very short runs are taken consecutively.

The spacing of the DOMs within the detector array was verified to within 1 meter using LED flashers combined with deployment data from pressure sensors and the drill. Ninety-eight percent of all IC86 in-ice DOMs are functioning normally. String 1 DOMs showed unusually high data rates after commissioning and no contributing factors were identified during string deployment or commissioning activities. The rates are asymptotically decreasing with time and the string is included in the IC86 run. There are 11 DOMs on String 7, between positions 34 and 46, that are excluded from the IC86 run. Seven DOMs do not power up, two do not trigger, and two have low rates.

TFT Board – The Triggering, Filtering and Transmission (TFT) board is in charge of adjudicating SPS resources according to scientific need. Working groups within IceCube submitted approximately 20 proposals requesting data processing, satellite bandwidth, and the use of various IceCube triggers. More sophisticated online filtering data selection techniques are used on the SPS to preserve bandwidth for other science objectives. As a result of the TFT review process, three new triggers are operating in IC86: the Volume trigger, the Slow Monopole trigger, and a modification of the DeepCore trigger. Multiple filters are also operating in IC86, most of which are improvements over those that have operated in the past. The TFT also approved the testing of a new SuperDST data format that will allow for significant data compression in the future.

Computing and Data Management

Computing Infrastructure – Replacement of the SPS server hardware was completed during the 2010/11 austral summer. The performance and reliability of the new hardware is high and has had a positive impact on data acquisition, processing and filtering. Sufficient processing resources are available to support enhanced event selection algorithms.

The failure rate of the new hard drives was normal (three failures since January), and the RAID (Redundant Array of Independent Disks) configuration protected system uptime. The rate of correctable memory ECC (Error Correction Code) errors is consistent with the rate expected at the South Pole elevation. The only hardware downtime on the new servers was attributed to a memory error on one processor cache, which resulted in a system reboot. The failure of two Cisco network switches contributed to several hours of detector downtime. Methods of improving network fault tolerance and redundancy are being investigated. Other hardware-related downtime includes DOMHub and DOM power supply failures.

The IceCube Laboratory (ICL) fire suppression system installation was finalized by RPSC in November. A test plan was designed in conjunction with RPSC to simulate all possible ICL emergencies while minimizing the effects on the operating detector. The joint planning meshed well and the commissioning of the fire suppression system was a success.

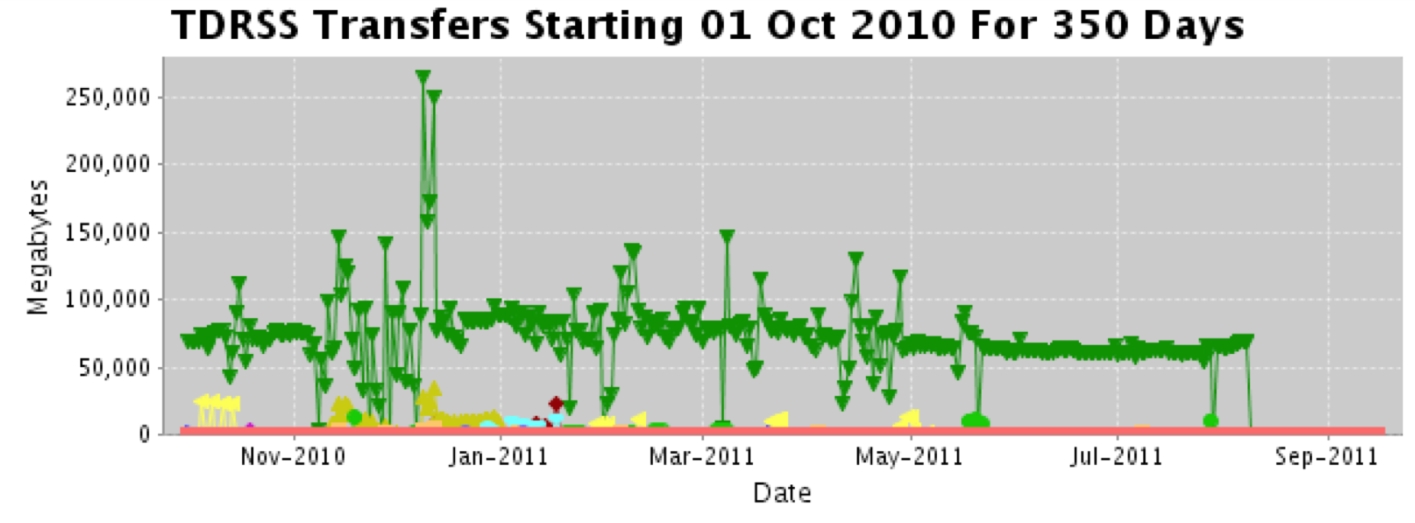

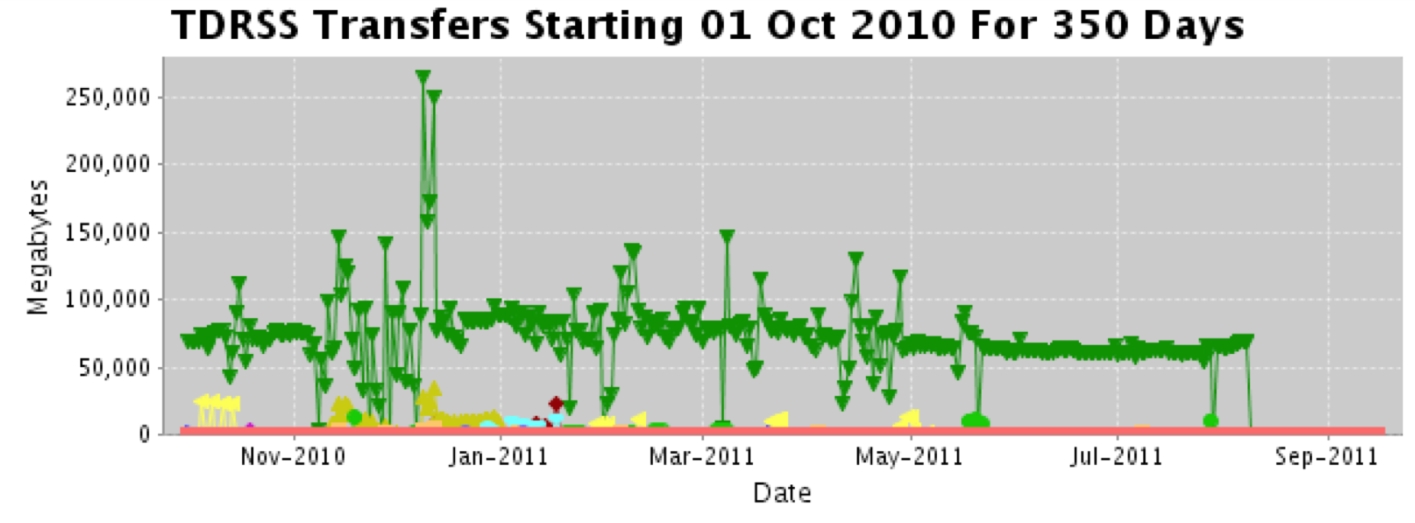

Data Movement – Data movement has performed nominally over the past year. Figure 3 shows the daily satellite transfer rates in MB/day through 8 August 2011. The IC86 filtered physics data, in green, dominates the total bandwidth. The spikes during the austral summer were related to maintenance of the SPTR. The change in the physics run from IC79 to IC86 passed nearly unnoticed because more efficient filtering and data compression algorithms offset much of the IC86 data rate increase. Seasonal fluctuations in the upper atmosphere temperature affect the IceCube data rate, which can be seen in the TDRSS transfer rate. Rates are currently increasing as higher air temperatures increase the rate of high-energy muons from cosmic ray air showers. The transfer rate was nearly 90 GB/day during the austral summer, dropping to 60 GB/day in June and July.

The IceCube data are archived on two sets of duplicate LTO4 data tapes. Two LTO5 tape robotics and superloader drives were installed for testing during the 2010/11 austral summer to improve archive efficiency during future seasons. The LTO5 robotics performed well; however, both tape drives have failed and the testing was suspended in July 2011. Static discharges and low relative humidity are suspected of contributing to the failures. All LTO5 hardware will be retrograded (shipped back from the South Pole) this fall.

New data movement hardware is being prepared on the SPTS for an upgrade at the South Pole during the 2011/12 austral summer. The move to common hardware will make the system consistent with the SPS server infrastructure, simplify sparing, and generally improve supportability.

Data movement goals were met despite some problems with the satellite link in December. A total of 223.7 TB of data were written to LTO tapes averaging 768.7 GB/day. A total of 25.5 TB of data were sent over TDRSS averaging 83.7 GB/day.

Figure 3: TDRSS Daily Data Transfer Rates (Green Represents IceCube Filtered Data)


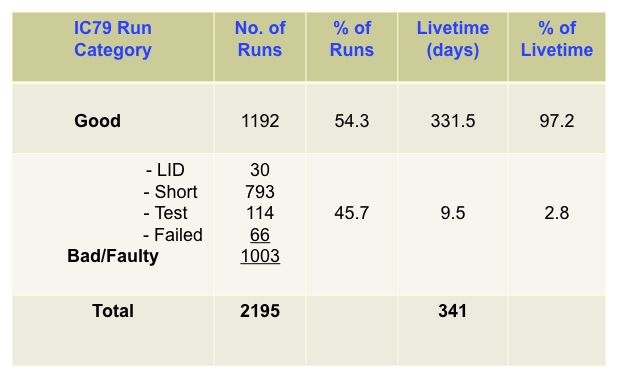

Offline Data Filtering – The IC79 offline filtering script was finalized and processing was completed on July 22, 2011. The two-level processing in previous seasons was combined into one for IC79. For the first time, offline data filtering was done within the IceProd framework currently used for simulation production. In addition to fulfilling the objective of unifying the offline data and simulation filtering software, the various mass production features in IceProd are also harnessed for efficient data filtering. All processing was done on the NPX3 computing cluster and the data through level 2 are stored in the UW–Madison data warehouse. An average of 250 NPX3 CPU cores were used, resulting in 6000 CPU hours per day. The storage requirement for the output level 2 files is 200 GB for one day of processed data, or approximately 6 TB per month. The storage requirements for IC79 data are 24 TB for pole-filtered data and 61 TB for level 2. The figure below shows a summary of the results.

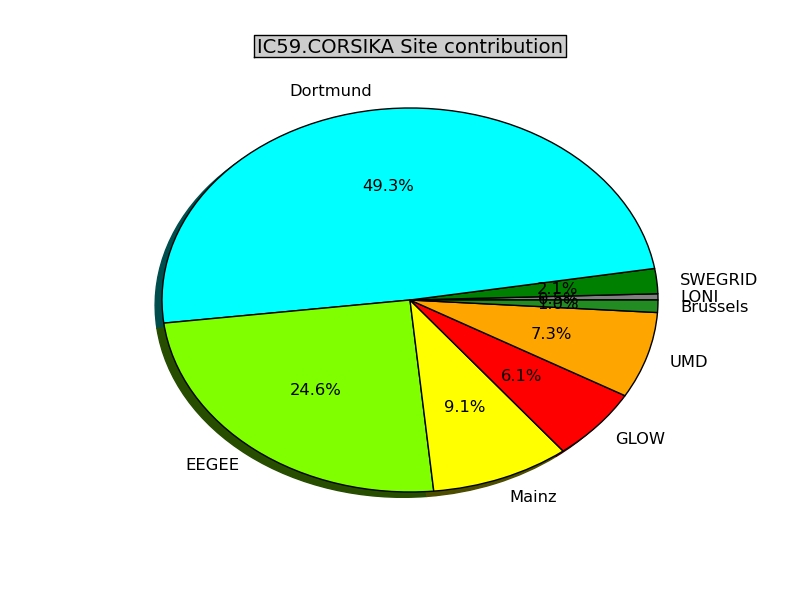

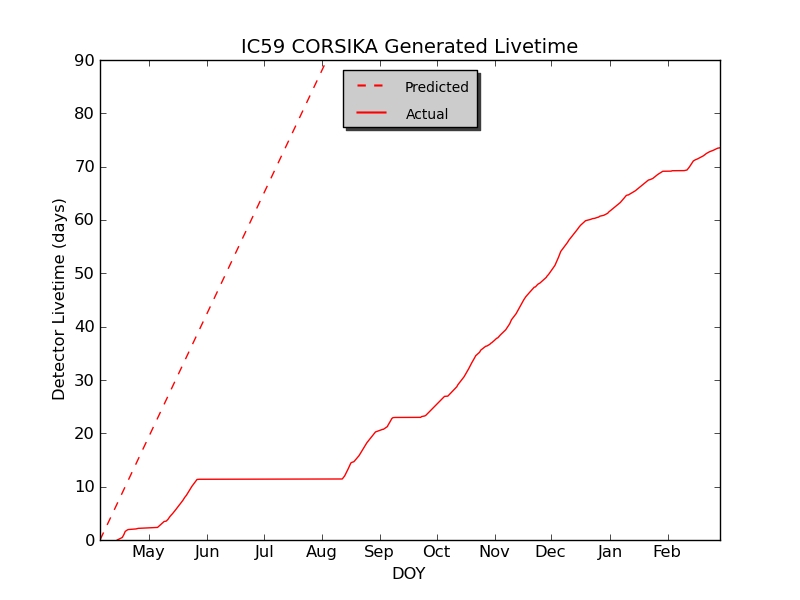

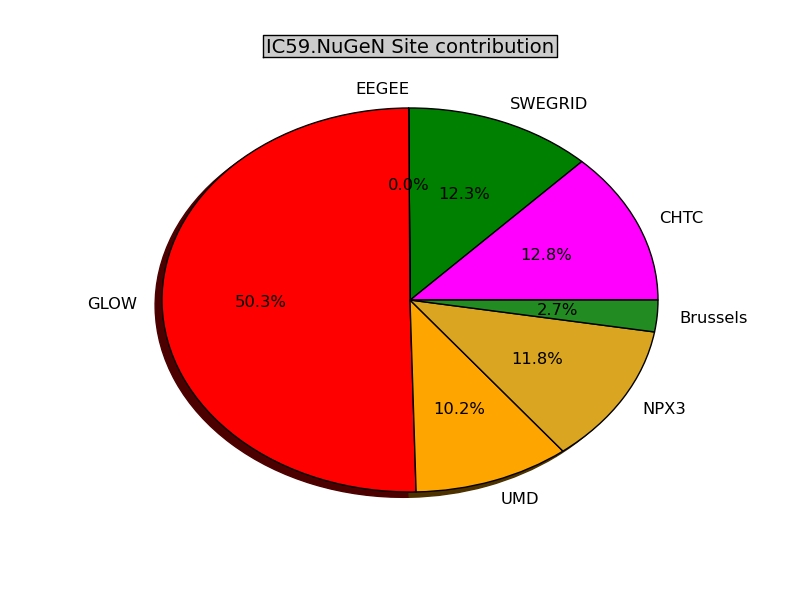

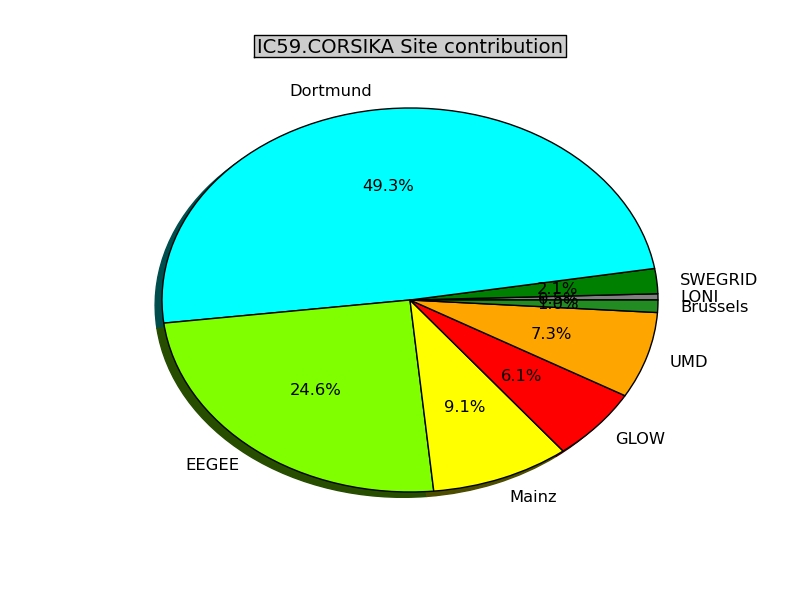

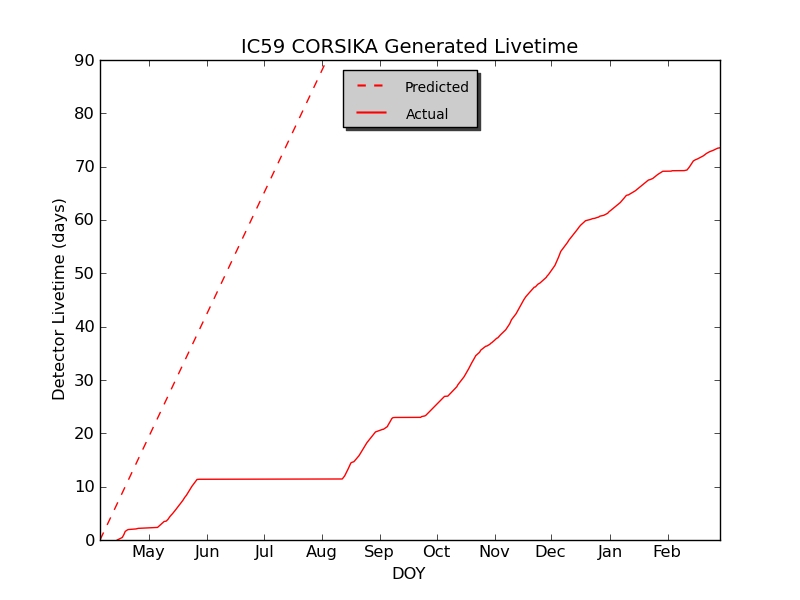

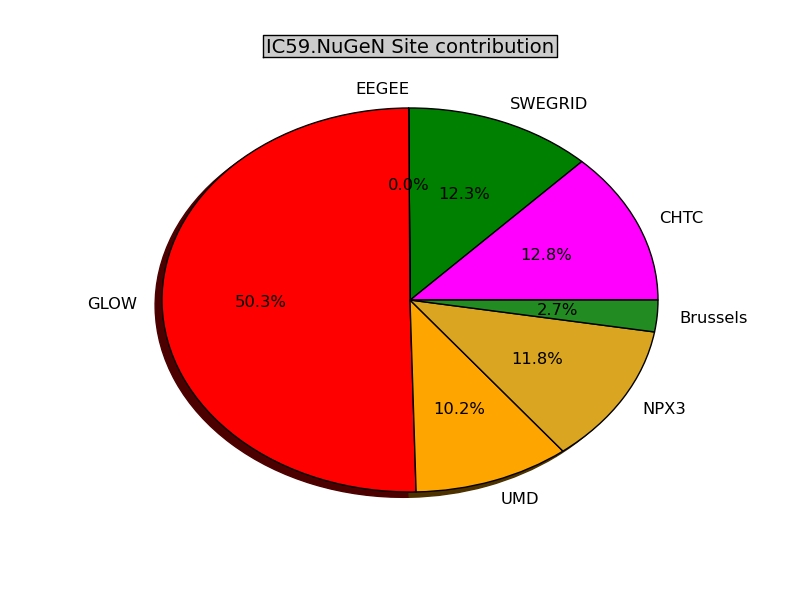
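The resource figures quoted for IC79 offline filtering follow from simple arithmetic, sketched below. The function names are illustrative only, and the sketch assumes the cores are busy around the clock, a 30-day month, and decimal units (1 TB = 1000 GB); none of these assumptions is stated in the report.

```python
HOURS_PER_DAY = 24


def cpu_hours_per_day(cores):
    """CPU hours accumulated per day if all cores run continuously (assumption)."""
    return cores * HOURS_PER_DAY


def storage_per_month_tb(gb_per_day, days_per_month=30, gb_per_tb=1000):
    """Monthly level 2 storage in TB, assuming a 30-day month and decimal units."""
    return gb_per_day * days_per_month / gb_per_tb


# Values taken from the text: 250 cores on average, 200 GB of level 2 output per day.
print(cpu_hours_per_day(250))     # 6000
print(storage_per_month_tb(200))  # 6.0
```

Both results match the 6000 CPU hours per day and roughly 6 TB per month quoted above.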

Simulation – The IC59 production of simulation data was completed with the exception of a few small datasets used for special studies of systematic uncertainties. Production of background physics datasets using CORSIKA continued during the fall of 2010 and into the beginning of 2011, following a significant increase in available storage capacity for the data. A dramatic increase in the production of neutrino signal simulation data and special simulation data compensated for reduced background data production. Production sites included: CHTC – UW Campus; Dortmund; EGEE – German Grid; GLOW – Grid Laboratory of Wisconsin; IIHE – Brussels; LONI – Louisiana Optical Network Infrastructure; NPX3 – UW IceCube; SWEGRID – Swedish Grid; UGent – Ghent (used primarily for IceTop simulation); and UMD – University of Maryland.

Figure 4: Left: Cumulative Production of IC59 Background Events with CORSIKA Generator (continuous line) Compared with 2010 Plan (dashed line). Right: Relative Contribution of Production Sites.

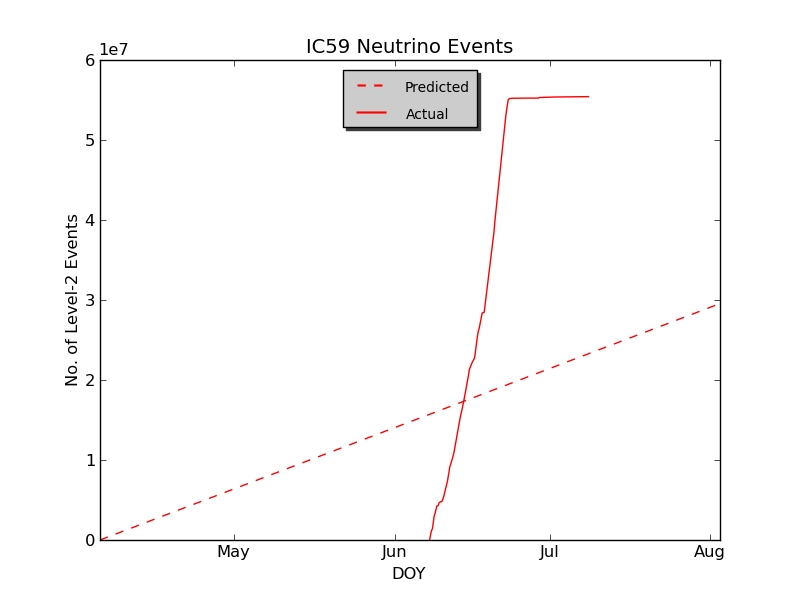

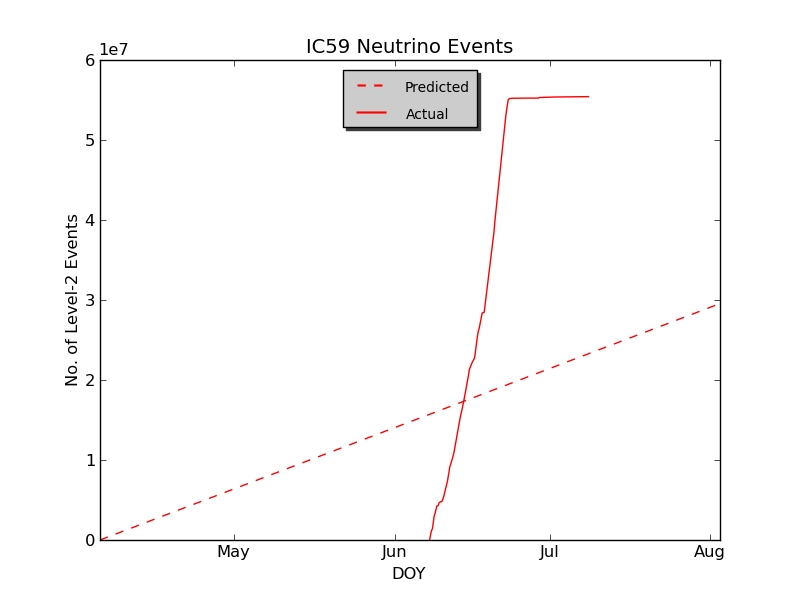

Between June and August, the target of neutrino signal event generation, for all the types, exceeded the plan by more than a factor of two (Figure 5) with a total of 18 CPU-years used.


Figure 5: Left: Cumulative Production of IceCube-59 Neutrino Events (continuous line) Compared with 2010 Plan (dashed line). Right: Relative Contribution of Production Sites.

Production of IC86 benchmark datasets for background and neutrino signal (electron, muon, and tau neutrinos) exceeded the original plan. Benchmark datasets were produced with the newly developed direct photon propagation as an alternative to the current use of the bulky lookup photon tables. Dedicated physics datasets for IC40 were generated in DESY and in the Swedish Grid to address specific data analyses. Production of the IC79 detector simulation officially started at the beginning of August and will continue through the end of the year. Development is currently underway to implement new triggers to simulate an updated IC86 detector for physics analyses. IC86 production will be done in parallel with current IC79 simulations.

Personnel – A software professional was added at 0.8 FTE and a Programmer in Training at 0.5 FTE in shared support of DAQ and IceCube Live. A Data Migration Manager at 0.5 FTE was added in support of Data Management.

Section II – Financial/Administrative Performance

The University of Wisconsin–Madison is maintaining three separate accounts with supporting charge numbers for collecting IceCube M&O funding and reporting related costs: 1) NSF M&O Core Account; 2) U.S. Common Funds Account; and, 3) Non U.S. Common Funds Account.

A total of $6,900,000 was released to UW–Madison to cover the costs of Maintenance and Operations during FY2011. A total of $928,200 was directed to the U.S. Common Fund Account and the remaining $5,971,800 was directed to the IceCube M&O Core Account (Figure 6).

| FY2011 | Funds Awarded to UW |
|---|---|
| IceCube M&O Core Account | $5,971,800 |
| U.S. Common Fund Account | $928,200 |
| TOTAL NSF Funds | $6,900,000 |

Figure 6: NSF IceCube M&O Funds - Federal Fiscal Year 2011

A total amount of $877,560 of the IceCube M&O FY2011 Core Funds was committed to six U.S. subawardee institutions during the first nine months of FY2011 (Figure 7). Subawardees submit invoices to receive reimbursement against their actual IceCube M&O costs. Deliverable commitments made by each subawardee institution are monitored throughout the year.

| Institution | Major Responsibilities | FY2011 M&O Funds |
|---|---|---|
| Lawrence Berkeley National Laboratory | Detector verification; detector calibration | $65k |
| Pennsylvania State University | Detector verification; high-level monitoring and calibration | $34k |
| University of California at Berkeley | Calibration; monitoring | $82k |
| University of Delaware, Bartol Institute | IceTop surface array calibration, monitoring and simulation | $166k |
| University of Maryland at College Park | Support IceTray software framework; on-line filter; simulation production | $499k |
| University of Wisconsin at River Falls | Education & Outreach | $31k |
| Total | | $878k |

Figure 7: IceCube M&O Subawardee Institutions - Major Responsibilities and FY2011 Funds
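The per-institution amounts in Figure 7 are rounded to the nearest $1k, so they do not add up exactly to the printed total. The following minimal Python sketch reconciles the two, using only the figures reported above; the variable names are illustrative assumptions, not part of any IceCube accounting tool.

```python
# Subaward amounts in thousands of USD, as printed in Figure 7.
subawards_k = {
    "Lawrence Berkeley National Laboratory": 65,
    "Pennsylvania State University": 34,
    "University of California at Berkeley": 82,
    "University of Delaware, Bartol Institute": 166,
    "University of Maryland at College Park": 499,
    "University of Wisconsin at River Falls": 31,
}

# Exact committed amount quoted in the text, in USD.
committed_usd = 877_560

# Sum of the individually rounded rows versus the rounded exact total.
sum_of_rounded_k = sum(subawards_k.values())
rounded_total_k = round(committed_usd / 1000)

print(f"Sum of rounded rows: ${sum_of_rounded_k}k")
print(f"Rounded exact total: ${rounded_total_k}k")
print(f"Rounding difference: ${rounded_total_k - sum_of_rounded_k}k")
```

The rows sum to $877k while the exact commitment of $877,560 rounds to $878k, which accounts for the $1k difference in the Total row.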

IceCube M&O Common Fund Contributions

The IceCube M&O Common Fund (CF) was established to enable collaborating institutions to contribute to the costs of maintaining the computing hardware and software required to manage experimental data prior to processing for analysis. Each institution contributed to the Common Fund based on the total number of the institution’s Ph.D. authors at the established rate of $13,650 per Ph.D. author.

The Collaboration updated the author count and the Memorandum of Understanding (MoU) for M&O at the Collaboration meeting in April 2011.

| | Ph.D. Authors | Planned | Actual Received to Date (7/31/2011) |
|---|---|---|---|
| Total Common Funds | 127 | $1,733,550 | $1,726,484 |
| U.S. Contribution | 68 | $928,200 | $928,200 |
| Non U.S. Contribution | 59 | $805,350 | $798,124 |

Figure 8: Planned and Actual CF Contributions, for Year four of M&O, April 1st, 2010 – March 31st, 2011
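The planned contributions in Figure 8 follow directly from the author counts and the $13,650 rate per Ph.D. author. As a minimal sketch of that arithmetic, assuming the counts and received amounts printed in Figure 8 (the function and variable names are hypothetical and used only for illustration):

```python
# Rate and figures are taken from the text and Figure 8.
RATE_PER_AUTHOR = 13_650  # USD per Ph.D. author

author_counts = {"U.S.": 68, "Non U.S.": 59}
actual_received = {"U.S.": 928_200, "Non U.S.": 798_124}  # as of 7/31/2011


def planned_contribution(authors: int, rate: int = RATE_PER_AUTHOR) -> int:
    """Return the planned Common Fund contribution in USD."""
    return authors * rate


# Planned amount per group and the balance not yet received.
planned = {group: planned_contribution(n) for group, n in author_counts.items()}
outstanding = {group: planned[group] - actual_received[group] for group in planned}
total_planned = sum(planned.values())

for group in planned:
    print(f"{group}: planned ${planned[group]:,}, outstanding ${outstanding[group]:,}")
print(f"Total planned: ${total_planned:,}")
```

This reproduces the Planned column ($928,200, $805,350, and $1,733,550 in total) and shows an outstanding non-U.S. balance of $7,226. The two per-group received amounts sum to $1,726,324, which is $160 less than the total printed in Figure 8; the sketch uses the per-group values as printed.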

Common Fund Expenditures

M&O activities identified as appropriate for support from the Common Fund are those core activities that are of common necessity for reliable operation of the detector and the computing infrastructure (defined in the Maintenance & Operations Plan). Figure 9 includes the major purchases for the 2010/2011 South Pole System upgrade and for the FY11 UW data center and network upgrade, funded by both the M&O Common Fund ($848 k) and MREFC Pre-Ops ($878 k).

| System Upgrade | Item | Vendor | Line Items | Cost Category | Amount |
|---|---|---|---|---|---|
| South Pole System | Servers | DELL | 81 DELL R710 Servers | Capital Equipment | $438 k |
| South Pole System | Servers | DELL | 5 DELL R610 Servers | Capital Equipment | $25 k |
| South Pole System | Servers | DELL | Service for DELL R610/R710 | Service Agreements | $79 k |
| South Pole System | Servers | DELL | Spare parts for DELL servers | M&S | $30 k |
| South Pole System | Storage | HP | 8 HP Storage Works LTO-4 Ultrium 1840 | Capital Equipment | $28 k |
| South Pole System | Storage | HP | 2 HP Storage Works LTO-4 Ultrium 1840 (spare) | M&S | $10 k |
| South Pole System | Storage | DELL | 70 146 GB SCSI 2.5-inch Hard Drives | M&S | $21 k |
| South Pole System | Tapes | MNJ | 2 Quantum SUPERLOADER 3 LTO5 TAPE 1DR | Capital Equipment | $10 k |
| South Pole System | Media | DELL | Tape Media for LTO4-120, 100 Pack | M&S | $45 k |
| South Pole System | Media | DELL | Tape Media for LTO5 Cus 100 Pack | M&S | $45 k |
| Network | HW | Core BTS | CISCO Switches and Hardware | Capital Equipment | $129 k |
| Network | SW | Core BTS | CISCO Software Licenses | M&S | $18 k |
| Network | Service | Core BTS | SMARTNET (Service) | Service Agreements | $14 k |
| Network | Service | Core BTS | Cisco SMARTNet Annual Maintenance Contract | Service Agreements | $33 k |
| Network | Cooling | H & H | Daikin Air Mini split cooling system | M&S | $15 k |
| Network | HW | Core BTS | 2 CISCO N2K GE, 2PS, 1Fan Module | Capital Equipment | $11 k |
| UW Data Center | Storage | Transource | Storage extension AMS2000.7 Tray HD expansion units, 336 2TB disks | Capital Equipment | $385 k |
| UW Data Center | UPS | HP | 5 HP R5500 XR UPS | M&S | $17 k |
| UW Data Center | Servers | DELL | 48 PowerEdge M610 BladeServer, 2x X5670 Processor, 48GB | Capital Equipment | $200 k |
| UW Data Center | Servers | DELL | 3 Blade Server Enclosure M1000E | Capital Equipment | $11 k |
| UW Data Center | Servers | DELL | Service Support for 51 Blade Servers and 12 PowerConnect switches | Service Agreements | $43 k |
| UW Data Center | Servers | DELL | 6 Dell PowerEdge R710 servers, 48GB Memory, dual Xeon X5670 | Capital Equipment | $31 k |
| UW Data Center | Servers | DELL | ProSupport for 6 Dell PowerEdge R710 servers | Service Agreements | $12 k |
| UW Data Center | Storage | DELL | PowerVault MD3200i 12*2TB Disks | Capital Equipment | $10 k |
| UW Data Center | Servers | DELL | 4 PowerEdge R610 and 3 PowerEdge R510, 12GB Memory, single Xeon E5630 | Capital Equipment | $33 k |
| UW Data Center | Network | DELL | 20 Brocade 1020 Dual-Port 10 Gbps FCoE CNA | Capital Equipment | $15 k |
| UW Data Center | Servers | DELL | 4 PowerEdge R710, 24GB Memory, dual Xeon X5670, 8 146GB Drives | Capital Equipment | $19 k |
| Total | | | | | $1,726 k |

Figure 9: IceCube FY2011 Computing Infrastructure Upgrade

Section III – Project Governance & Upcoming Events

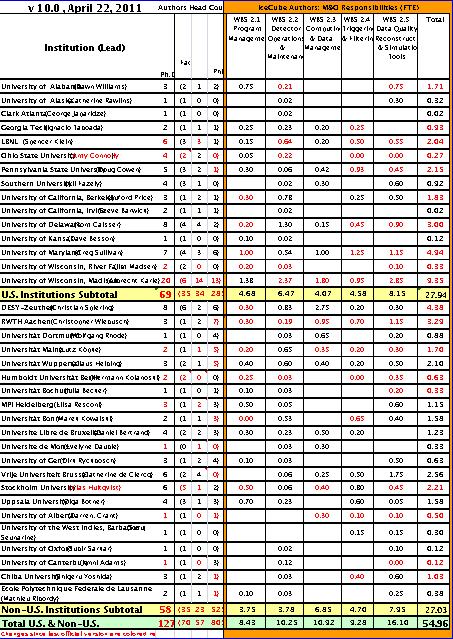

The detailed M&O institutional responsibilities and Ph.D. author head count are revised twice a year for the IceCube collaboration meetings, as part of the institutional Memorandum of Understanding (MoU) documents. The MoU was last revised in April 2011 for the spring collaboration meeting in Madison (Figure 10), and the next revision will be posted in September 2011 at the fall collaboration meeting in Uppsala.

The revised IceCube Collaboration Governance Document (Rev 6.4) was submitted to NSF on February 23, 2011, as an appendix to the IceCube Maintenance and Operations Plan (M&OP).

IceCube M&O Memorandum of Understanding, Effort and Authors Head Count Summary

Figure 10: IceCube M&O MoU Summary, v10.0 April 22, 2011

IceCube Major Meetings and Events

| Event | Date |
|---|---|
| Data Acquisition Subsystem Hardware Review | August 2-4, 2011 |
| Simulation Workshop | August 29 - September 2, 2011 |
| IceCube Fall Collaboration Meeting at Uppsala University, Sweden | September 19-23, 2011 |
| IceCube IOFG Meeting, Uppsala | September 21, 2011 |
| Detector Operations Workshop | October 2011 |

Acronym List

| Acronym | Definition |
|---|---|
| CF | Common Funds |
| DAQ | Data Acquisition System |
| DOM | Digital Optical Module |
| IceCube Live | The system that integrates control of all of the detector’s critical subsystems |
| IceProd | Custom-made IceCube simulation production software |
| IceTray | IceCube core analysis software framework, part of the IceCube core software library |
| ICL | IceCube Laboratory (South Pole) |
| IRC | IceCube Research Center |
| KSTF | Knowles Science Teaching Fellow |
| M&OP | Maintenance & Operations Plan |
| MoU | Memorandum of Understanding between UW-Madison and all collaborating institutions |
| PnF | Process and Filtering |
| RPSC | Raytheon Polar Services Company |
| SAC | Scientific Advisory Committee |
| SCAP | Software & Computing Advisory Panel |
| SPS | South Pole System |
| TDRSS | The Tracking and Data Relay Satellite System, a network of communications satellites |
| TFT Board | Trigger Filter and Transmit Board |



Between June and August, neutrino signal event generation for all neutrino types exceeded the planned target by more than a factor of two (Figure 5), using a total of 18 CPU-years.
