IceCube Maintenance & Operations FY17 (PY2) Quarter 3 Report: Apr 2017 – Dec 2017

Cooperative Agreement: PLR-1600823 December 31, 2017

IceCube Maintenance and Operations

Fiscal Year 2017 PY2 Quarter 3 Report

April 1, 2017 – December 31, 2017

Submittal Date: February 1, 2018

____________________________________

University of Wisconsin–Madison

This report is submitted in accordance with the reporting requirements set forth in the IceCube Maintenance and Operations Cooperative Agreement, PLR-1600823.

Foreword

This FY2017 (PY2) Quarter 3 Report is submitted as required by the NSF Cooperative Agreement PLR-1600823. This report covers the 9-month period beginning April 1, 2017 and concluding December 31, 2017. The status information provided in the report covers actual Common Fund contributions received through December 31, 2017 and the full 86-string IceCube detector (IC86) performance through September 1, 2017.

Table of Contents

Foreword

Section I – Financial/Administrative Performance

Section II – Maintenance and Operations Status and Performance
  Detector Operations and Maintenance
  Computing and Data Management Services
  Data Processing and Simulation Services
  Software Coordination
  Calibration
  Program Management

Section III – Project Governance and Upcoming Events

Section I – Financial/Administrative Performance

The University of Wisconsin–Madison is maintaining three separate accounts with supporting charge numbers for collecting IceCube M&O funding and reporting related costs: 1) NSF M&O Core account, 2) U.S. Common Fund account, and 3) Non-U.S. Common Fund account.

The first PY2 installment of $5,250,000 was released to UW–Madison to cover the costs of maintenance and operations during the first three quarters of PY2 (FY2017/FY2018): $798,525 was directed to the U.S. Common Fund account, based on the number of U.S. Ph.D. authors in the most recent version of the institutional MoUs, and the remaining $4,451,475 was directed to the IceCube M&O Core account. The second PY2 installment of $1,750,000 is expected to be released to UW–Madison to cover the costs of maintenance and operations during the remaining quarter of PY2 (FY2018): $266,175 will be directed to the U.S. Common Fund account, and the remaining $1,483,825 will be directed to the IceCube M&O Core account (Table 1).

| PY2: FY2017 / FY2018 | Funds Awarded to UW for Apr 1, 2017 – March 31, 2018 | Funds to Be Awarded to UW for Apr 1, 2017 – March 31, 2018 |
|---|---|---|
| IceCube M&O Core account | $4,451,475 | $1,483,825 |
| U.S. Common Fund account | $798,525 | $266,175 |
| TOTAL NSF Funds | $5,250,000 | $1,750,000 |

Table 1: NSF IceCube M&O Funds – PY2 (FY2017 / FY2018)

Of the IceCube M&O PY2 (FY2017/2018) Core funds, $1,034,921 were committed to the U.S. subawardee institutions based on their statement of work and budget plan. The institutions submit invoices to receive reimbursement against their actual IceCube M&O costs. Table 2 summarizes M&O responsibilities and total FY2017/2018 funds for the subawardee institutions.

| Institution | Major Responsibilities | Funds |
|---|---|---|
| Lawrence Berkeley National Laboratory | DAQ maintenance, computing infrastructure | $91,212 |
| Pennsylvania State University | Computing and data management, simulation production, DAQ maintenance | $69,800 |
| University of Delaware, Bartol Institute | IceTop calibration, monitoring and maintenance | $158,303 |
| University of Maryland at College Park | IceTray software framework, online filter, simulation software | $595,413 |
| University of Alabama at Tuscaloosa | Detector calibration, reconstruction and analysis tools | $24,229 |
| Michigan State University | Simulation software, simulation production | $27,965 |
| South Dakota School of Mines and Technology (added in July 2017) | Simulation production and reconstruction | $54,749 |
| Total | | $1,021,671 |

Table 2: IceCube M&O Subawardee Institutions – PY2 (FY2017/2018) Major Responsibilities and Funding
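As a cross-check, the Table 2 total can be recomputed from the per-institution amounts. The minimal Python sketch below uses only the figures in the table; the dictionary and function names are illustrative, not part of any IceCube tool.

```python
from typing import Dict, Tuple

# Subaward amounts in USD, copied from Table 2.
SUBAWARDS: Dict[str, int] = {
    "Lawrence Berkeley National Laboratory": 91_212,
    "Pennsylvania State University": 69_800,
    "University of Delaware, Bartol Institute": 158_303,
    "University of Maryland at College Park": 595_413,
    "University of Alabama at Tuscaloosa": 24_229,
    "Michigan State University": 27_965,
    "South Dakota School of Mines and Technology": 54_749,
}


def subaward_total(subawards: Dict[str, int]) -> int:
    """Sum the per-institution subaward amounts."""
    return sum(subawards.values())


def largest_share(subawards: Dict[str, int]) -> Tuple[str, float]:
    """Return the institution with the largest subaward and its share of the total."""
    name = max(subawards, key=lambda k: subawards[k])
    return name, subawards[name] / subaward_total(subawards)


total = subaward_total(SUBAWARDS)
name, share = largest_share(SUBAWARDS)
print(f"Total: ${total:,}")  # Total: $1,021,671
print(f"Largest: {name} ({share:.1%})")
```

Keeping the amounts in one mapping means the total row is derived rather than typed separately, so the two cannot drift apart.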

IceCube NSF M&O Award Budget, Actual Cost and Forecast

The current IceCube NSF M&O 5-year award was established in the middle of Federal Fiscal Year 2016, on April 1, 2016. Table 3 presents the financial status nine months into Year 2 of the award and shows an estimated balance at the end of PY2.

Total awarded funds to the University of Wisconsin (UW) for supporting IceCube M&O from the beginning of PY1 through part of PY2 are $12,318K. With the last planned PY2 installment of $1,750K, the total PY1–2 budget is $14,068K. Total actual cost as of December 31, 2017 is $11,640K, and open commitments against purchase orders and subaward agreements are $1,067K. The current balance as of December 31, 2017 is $1,361K. With a projection of $1,405K for the remaining expenses during the final three months of PY2, the estimated negative balance at the end of PY2 is -$44K, which is 0.3% of the PY1–2 budget (Table 3).

| (a) Years 1–2 Budget, Apr. ’16–Mar. ’18 | (b) Actual Cost to Date through Dec. 31, 2017 | (c) Open Commitments on Dec. 31, 2017 | (d) = a – b – c: Current Balance on Dec. 31, 2017 | (e) Remaining Projected Expenses through Mar. 2018 | (f) = d – e: End of PY2 Forecast Balance on Mar. 31, 2018 |
|---|---|---|---|---|---|
| $14,068K | $11,640K | $1,067K | $1,361K | $1,405K | -$44K |

Table 3: IceCube NSF M&O Award Budget, Actual Cost and Forecast
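The column formulas in Table 3 can be written out directly. This short Python sketch applies (d) = a – b – c and (f) = d – e to the Table 3 values, in thousands of dollars; the function names are illustrative.

```python
def current_balance(budget_k: int, actual_k: int, commitments_k: int) -> int:
    """Column (d) = a - b - c, all values in thousands of dollars."""
    return budget_k - actual_k - commitments_k


def forecast_balance(balance_k: int, projected_k: int) -> int:
    """Column (f) = d - e, all values in thousands of dollars."""
    return balance_k - projected_k


# Values from Table 3 ($K).
d = current_balance(budget_k=14_068, actual_k=11_640, commitments_k=1_067)
f = forecast_balance(balance_k=d, projected_k=1_405)
print(d, f)  # 1361 -44
```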

IceCube M&O Common Fund Contributions

The IceCube M&O Common Fund was established to enable collaborating institutions to contribute to the costs of maintaining the computing hardware and software required to manage experimental data prior to processing for analysis.

Each institution contributes to the Common Fund, based on the total number of the institution’s Ph.D. authors, at the established rate of $13,650 per Ph.D. author.

The Collaboration updates the Ph.D. author count twice a year, before each collaboration meeting, in conjunction with the update to the IceCube Memorandum of Understanding for M&O.

The M&O activities identified as appropriate for support from the Common Fund are those core activities that are agreed to be of common necessity for reliable operation of the IceCube detector and computing infrastructure and are listed in the Maintenance & Operations Plan.

Table 4 summarizes the planned and actual Common Fund contributions for the period of April 1, 2017–March 31, 2018, based on v22.0 of the IceCube Institutional Memorandum of Understanding, from April 2017.

The final non-U.S. contributions are underway, and it is anticipated that most of the planned contributions will be fulfilled.

| | Ph.D. Authors | Planned Contribution | Actual Received |
|---|---|---|---|
| Total Common Funds | 137 | $1,815,450 | $1,743,557 |
| U.S. Contribution | 71 | $969,150 | $969,150 |
| Non-U.S. Contribution | 66 | $846,300 | $774,407 |

Table 4: Planned and Actual CF Contributions for the period of April 1, 2017–March 31, 2018
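Planned contributions follow from the stated rate of $13,650 per Ph.D. author. The minimal Python sketch below applies that rule to the U.S. row and computes the fraction of each planned amount received so far, using the Table 4 figures; the function names are illustrative.

```python
RATE_PER_AUTHOR = 13_650  # USD per Ph.D. author, the rate stated in the text


def planned_contribution(phd_authors: int, rate: int = RATE_PER_AUTHOR) -> int:
    """Planned Common Fund contribution for a given Ph.D. author count."""
    return phd_authors * rate


def fraction_received(planned: int, received: int) -> float:
    """Fraction of the planned contribution received so far."""
    if planned <= 0:
        raise ValueError("planned contribution must be positive")
    return received / planned


us_planned = planned_contribution(71)                     # 969150, as in Table 4
non_us_fraction = fraction_received(846_300, 774_407)     # Non-U.S. row
total_fraction = fraction_received(1_815_450, 1_743_557)  # Total row
print(f"U.S. planned ${us_planned:,}; non-U.S. received {non_us_fraction:.1%}; "
      f"total received {total_fraction:.1%}")
```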

Section II – Maintenance and Operations Status and Performance

Detector Operations and Maintenance

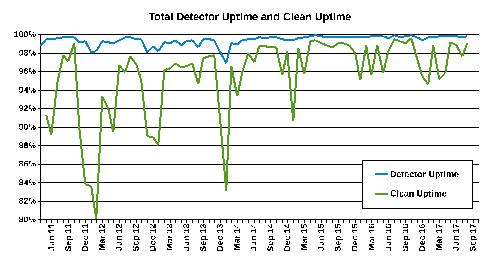

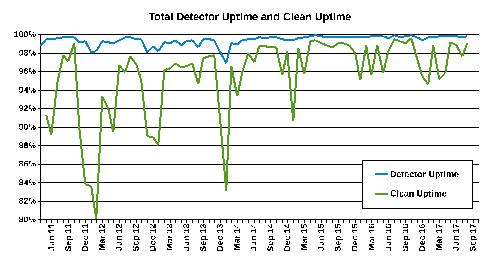

Detector Performance — During the period from February 1, 2017, to September 1, 2017, the detector uptime, defined as the fraction of the total time that some portion of IceCube was taking data, was 99.80%, exceeding our target of 99% and close to the maximum possible, given our current data acquisition system. The clean uptime for this period, indicating full-detector analysis-ready data, was 97.76%, exceeding our target of 95%. Historical total and clean uptimes of the detector are shown in Figure 1.

Figure 2 shows a breakdown of the detector time usage over the reporting period. The partial-detector good uptime was 0.71% of the total and includes analysis-ready data with fewer than all 86 strings. Excluded uptime includes maintenance, commissioning, and verification data and required 1.33% of detector time. The unexpected detector downtime was limited to 0.2%.
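The uptime categories above account for all detector time: clean, partial-detector good, and excluded uptime sum to the 99.80% total uptime, and the remainder is the unexpected downtime. A minimal Python sketch of that bookkeeping, assuming the categories are mutually exclusive as the quoted percentages imply (the function name is illustrative):

```python
from typing import Tuple


def time_budget(clean: float, partial: float, excluded: float) -> Tuple[float, float]:
    """Return (total uptime, unexpected downtime) in percent of wall-clock time."""
    total = clean + partial + excluded
    return total, 100.0 - total


total, down = time_budget(clean=97.76, partial=0.71, excluded=1.33)
print(f"total uptime {total:.2f}%, unexpected downtime {down:.2f}%")
# total uptime 99.80%, unexpected downtime 0.20%
```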

Figure 1: Total IceCube Detector Uptime and Clean Uptime


Figure 2: Cumulative IceCube Detector Time Usage, February 1, 2017 – September 1, 2017


Hardware Stability — One DOM (13–36 “Broach”) failed during this reporting period, after the full-detector power cycle performed every two months to reset the internal clocks on the DOMs. This DOM’s failsafe bootloader appears to be corrupted and cannot be reprogrammed remotely (by design, as the failsafe). To further reduce power-cycling stress on the DOMs, we have modified our operational procedures to “softboot” the DOMs every two months instead of power-cycling them. This DOM’s wire-pair partner is currently disconnected, but we believe it can be returned to service. The total number of active DOMs is currently 5402 (98.5% of deployed DOMs), plus four scintillator panels and the IceACT trigger mainboard. Additionally, one custom GPS timing fanout (DSB) card failed during the reporting period; we believe the card can be repaired by replacing a fuse.

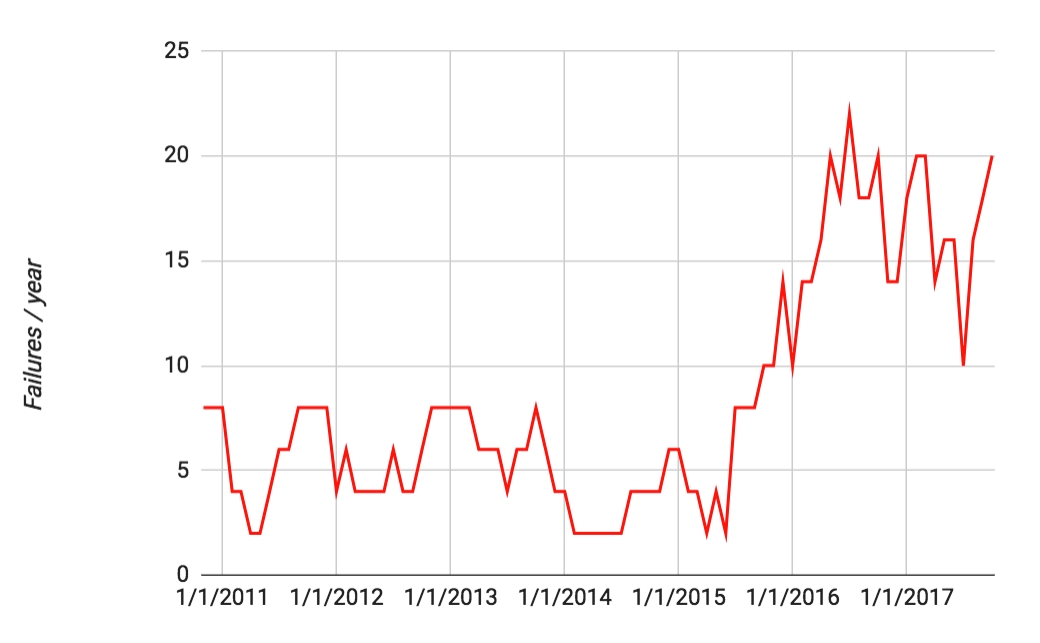

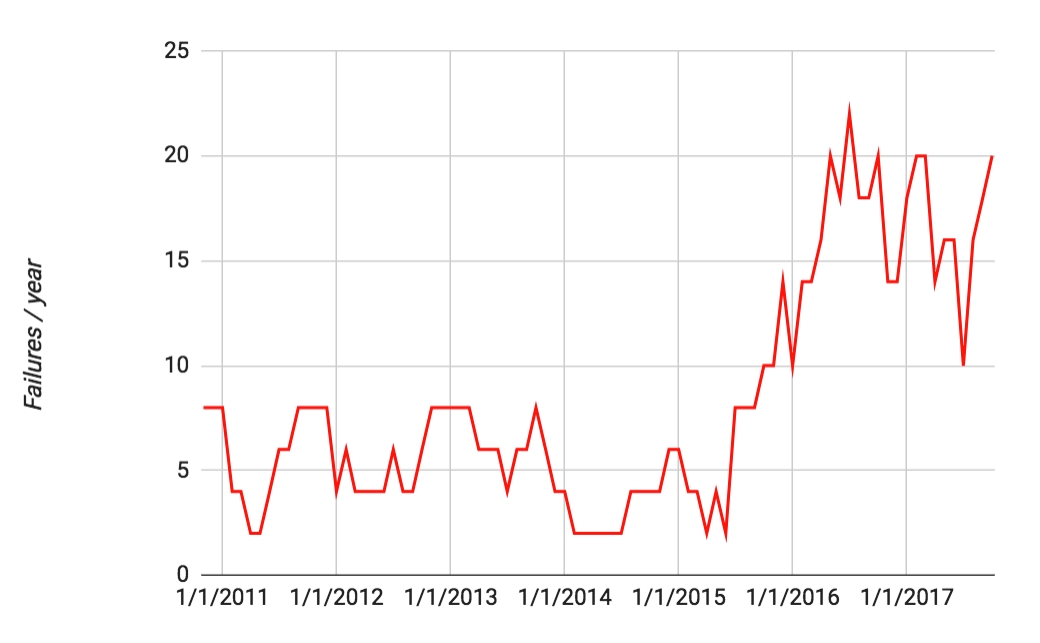

Figure 3: Acopian (DOM) power supply failure rate vs. time (6-month rolling average).


The failure rate has been stabilized with a hardware replacement but is not decreasing as expected.

The failure rate in the commercial Acopian power supplies that supply the DC voltage to the DOMs from the DOMHubs increased starting in late 2015 (Figure 3). Failed units from the past two seasons were sent back to the manufacturer for analysis, leading to a modified design with theoretically improved robustness. During the 2016–17 austral summer season, we performed a complete replacement of the supplies using the updated units. The failure rate has stabilized; however, it does not appear to be decreasing back to the previous stable levels. We continue to consult with Acopian engineers on understanding the failure mode.
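Figure 3 shows the failure rate as a 6-month rolling average. A minimal sketch of a trailing rolling average over monthly failure counts follows, assuming one value per calendar month and a window ending at the current month; the counts in the usage line are invented for illustration and are not the Acopian data.

```python
from collections import deque
from typing import Deque, Iterable, Iterator


def rolling_average(values: Iterable[float], window: int = 6) -> Iterator[float]:
    """Yield the trailing mean of the last `window` values.

    Outputs start once a full window is available, so a series of n >= window
    monthly values yields n - window + 1 averages.
    """
    if window < 1:
        raise ValueError("window must be >= 1")
    buf: Deque[float] = deque(maxlen=window)
    running = 0.0
    for v in values:
        if len(buf) == window:
            running -= buf[0]  # oldest value, about to be evicted by append()
        buf.append(v)
        running += v
        if len(buf) == window:
            yield running / window


# Hypothetical monthly failure counts, for illustration only.
monthly_failures = [0, 1, 0, 2, 1, 0, 3, 1]
print([round(x, 2) for x in rolling_average(monthly_failures)])
```

Keeping a running sum makes each step O(1) regardless of window length; a 6-month window smooths month-to-month noise while still showing a trend such as the rise since late 2015.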

This austral summer season, we plan to add monitoring capabilities on the AC input power to search for transients that might cause such failures. Additionally, we plan a limited test of alternate DC power supplies (Mean Well MSP-200-48) that have been successfully tested in DOMHubs at SPTS.

IC86 Physics Runs — The seventh season of the 86-string physics run, IC86–2017, began on May 18, 2017. Detector settings were updated using the latest yearly DOM calibrations from March 2017. Changes to the online filtering system include: an updated neutrino candidate selection for real-time follow-up; a new real-time starting-track selection (ESTReS); an updated Extremely High Energy (EHE) filter; and retirement of a specialized DeepCore filter that is no longer used. The run start also marked the production release of several new operational components, including the I3Live Moni2.0 monitoring system and the new GCD database system, both described in more detail below.

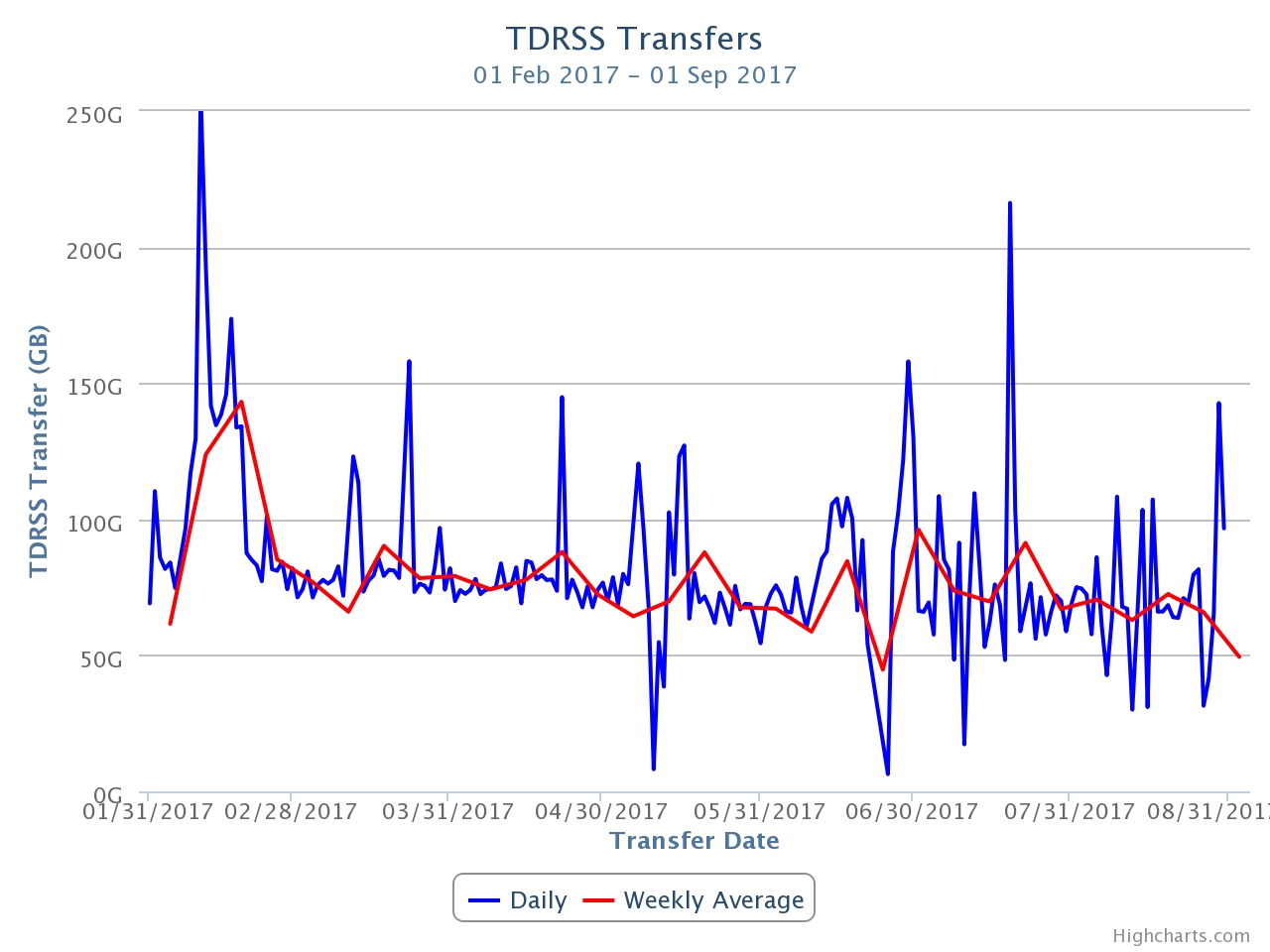

For this reporting period, the average TDRSS daily transfer rate was approximately 67 GB/day. The bandwidth saved from filter optimizations has allowed us to reserve more for HitSpool data transfer, saving all untriggered IceCube hits within a time period of interest. We have recently added alert mechanisms by which we save HitSpool data around Fermi-LAT solar flares and LIGO gravitational wave alerts. A large amount of HitSpool data around the exceptional X-class solar flares of September 6, 2017 was saved to archival disk and will be hand-carried north.
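Saving HitSpool data around an alert amounts to cutting a time window out of a time-ordered record of hits. A minimal Python sketch, assuming the hit times are held in a sorted in-memory list; the real HitSpool system works on a file cache, and the function name and window parameters here are hypothetical.

```python
from bisect import bisect_left, bisect_right
from typing import List, Sequence, Tuple


def hits_in_window(hit_times: Sequence[float], t_alert: float,
                   before: float, after: float) -> Tuple[int, int]:
    """Return the [start, stop) index range of hits in [t_alert - before, t_alert + after].

    `hit_times` must be sorted in ascending order.
    """
    start = bisect_left(hit_times, t_alert - before)
    stop = bisect_right(hit_times, t_alert + after)
    return start, stop


# Hypothetical hit times (seconds) and an alert at t = 3.0 s.
times: List[float] = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
start, stop = hits_in_window(times, t_alert=3.0, before=1.0, after=2.0)
print(times[start:stop])  # [2.0, 3.0, 4.0, 5.0]
```

Binary search keeps each request logarithmic in the buffer size, which matters when the buffer holds every untriggered hit.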

Data Acquisition — The IceCube Data Acquisition System (DAQ) has reached a stable state, and consequently the frequency of software releases has slowed to the rate of 3–4 per year. Nevertheless, the DAQ group continues to develop new features and patch bugs. During the reporting period, the following accomplishments are noted:

· Delivery of the pDAQ:Rhinelander release in July 2017, which enables filling triggered event data requests directly from the HitSpool file cache. This marks a major milestone in decoupling the trigger system from the hub data processing, in order to pare detector downtime to the absolute minimum.

· Work towards the next DAQ release in late 2017, which will include an improved process communication framework used among the DAQ components.

GCD Database System — The database for geometry, calibration, and detector status (GCD) information and its associated code had grown difficult to maintain and was not easily extensible, either to new hardware such as the prototype scintillators or to improved calibration techniques such as the recently deployed online DOM gain corrections. We have developed a new GCD generation system (“gcdserver”) that uses a new underlying database and rewritten interface code. The new system was successfully deployed online for the IC86–2017 run start and is also used in offline “Level2” data processing.

Online Filtering — The online filtering system (“PnF”) performs real-time reconstruction and selection of events collected by the data acquisition system and sends them for transmission north via the data movement system. In addition to the standard filter changes to support the IC86–2017 physics run start, PnF was modified to use the new GCD database system to supply the necessary metadata for event processing.
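The selection step of an online filter can be pictured as a set of named selections evaluated on each reconstructed event. The Python sketch below is a simplified illustration, not the PnF implementation: it assumes an event is kept for transmission when at least one filter passes, and the event fields, filter names and thresholds are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

Event = Dict[str, float]  # hypothetical: reconstructed quantities by name
Filter = Callable[[Event], bool]


@dataclass
class FilterStage:
    """Hypothetical online filter stage: named selections applied to each event."""
    filters: Dict[str, Filter] = field(default_factory=dict)

    def evaluate(self, event: Event) -> Dict[str, bool]:
        """Evaluate every filter and record which ones the event passed."""
        return {name: bool(select(event)) for name, select in self.filters.items()}

    def keep(self, event: Event) -> bool:
        """Simplification: keep the event for transmission if any filter passes."""
        return any(self.evaluate(event).values())


# Illustrative selections; names and thresholds are made up.
stage = FilterStage({
    "bright": lambda e: e["charge"] > 1000.0,
    "upgoing": lambda e: e["zenith_deg"] > 90.0,
})
print(stage.evaluate({"charge": 10.0, "zenith_deg": 120.0}))
```

Recording the per-filter results, rather than only the keep/drop decision, lets downstream analyses select events by the filter that accepted them.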

Monitoring — The IceCube Run Monitoring system, I3Moni, provides a comprehensive set of tools for assessing and reporting data quality. IceCube collaborators participate in daily monitoring shift duties by reviewing information presented on the web pages and evaluating and reporting the data quality for each run. The original, monolithic monitoring system processed data from various SPS subsystems, packaged them in files for transfer to the Northern Hemisphere, and reprocessed them in the north for display on the monitoring web pages. In the new monitoring system, I3Moni 2.0, all detector subsystems report their data directly to IceCube Live.

After a thorough beta-testing period during which both monitoring systems were run in parallel, the I3Moni2.0 system has replaced the old system for daily monitoring activities, and the old system was retired at the IC86–2017 run start.

Experiment Control — Development of IceCube Live, the experiment control and monitoring system, is still quite active. This reporting period has seen two releases with the following highlighted features:

· Live v3.0.0 (May 2017): 46 separate issues and feature requests have been resolved. This major release includes the production version of the I3Moni 2.0 monitoring system and support for the new GCD database system.

· Live v3.1.0 (September 2017): 25 separate issues and feature requests have been resolved, including usability improvements suggested by monitoring shifters, and support for database authentication.

Features planned for the next few releases include improving the “billboard” web page used as the primary display for detector status, and reworking the recent runs page for speed and efficiency. The uptime for the I3Live experiment control system during the reporting period was greater than 99.999%.

Supernova System — The supernova data acquisition system (SNDAQ) found that 99.8% of the available data from April 2017 to September 2017 met the minimum analysis criteria for run duration and data quality for sending triggers. An additional 0.03% of the data is available in short physics runs lasting less than 10 minutes. While forming a trigger is not possible in these runs, the data are available for reconstructing a supernova signal.
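The split between trigger-capable data and short runs can be sketched as a livetime partition on run duration. This minimal Python example applies only the 10-minute duration criterion from the text; the data-quality criteria are not modeled, and the function name and run durations are hypothetical.

```python
from typing import Iterable, Tuple

MIN_TRIGGER_RUN_S = 600.0  # runs shorter than 10 minutes cannot form a trigger


def livetime_split(run_durations_s: Iterable[float]) -> Tuple[float, float]:
    """Return (trigger-capable, short-run) fractions of the total livetime.

    Only the duration criterion is applied; data-quality cuts are not modeled.
    """
    durations = list(run_durations_s)
    total = sum(durations)
    if total == 0:
        return 0.0, 0.0
    long_time = sum(d for d in durations if d >= MIN_TRIGGER_RUN_S)
    return long_time / total, (total - long_time) / total


# Hypothetical run durations in seconds.
print(livetime_split([28_800.0, 28_800.0, 300.0]))
```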

A SNDAQ update (BT_XIII_moni) was released in July 2017 to fix a few minor issues with the monitoring system. Efforts to include a data-driven trigger that is independent of an assumed signal shape are under way.

Surface Detectors — Snow accumulation on the IceTop tanks continues to reduce the trigger rate of the surface array by ~10%/year. Uncertainty in the attenuation of the electromagnetic component of air showers due to snow is the largest systematic uncertainty in IceTop’s cosmic ray energy spectrum measurement. The snow also complicates IceTop’s cosmic-ray composition measurements, as it makes individual air showers look “heavier” by changing the electromagnetic/muon ratio of particles in the shower.

The NSF and the support contractor have decided to stop any further snow management efforts. With this in mind, we have developed a plan to restore the full operational efficiency of the IceCube surface component using plastic scintillator panels on the snow surface above the buried IceTop tanks. By detecting particle showers in coincidence with IceTop and IceCube, the scintillator upgrade allows a) determination of the shower attenuation due to snow as a function of energy and shower zenith angle, and b) restoration of the sensitivity to low-energy showers lost due to snow accumulation on the tanks.

Four prototype scintillators were deployed during the 2015–16 pole season at IceTop stations 12 and 62. These scintillators have been integrated into the normal IceCube data stream and have been taking data continuously since early 2016. However, this design is not scalable since it relies on IceTop freeze control cables that are not accessible at all stations, and it requires open DOM readout (DOR) card slots in the ICL, of which most are already used. Development of a new version of the scintillators is underway that includes a new digitization and readout system, a different photodetection technology (SiPMs), and a streamlined, lighter housing.

Figure 4: New scintillator central station DAQ electronics (digital readout variant).


Two prototype versions of the new scintillator station design will be deployed during the 2017–18 season, using different data-acquisition electronics and panel readout schemes (analog vs. digital). The station panels will be equipped with poles and brackets that will allow them to be elevated above the snow surface and subsequently raised to avoid being buried in the snow.

A prototype air Cherenkov telescope (IceACT) was installed on the IceCube Lab in the 2015–16 austral summer season. During the polar night, IceACT can be used to cross-calibrate IceTop by detecting the Cherenkov emission from cosmic ray air showers; it may also prove useful as a supplementary veto technique. IceACT was uncovered at sunset again in 2017 and has been taking data during the austral winter. A major camera upgrade is planned for the 2017–18 season.

Operational Communications & Real-time Alerts — Communication with the IceCube winterovers, timely delivery of detector monitoring information, and login access to SPS are critical to IceCube’s high-uptime operations. Several technologies are used for this purpose, including ssh/scp, the IMCS e-mail system, and IceCube’s own Iridium modem(s).

We have now developed our own Iridium RUDICS-based transport software (IceCube Messaging System, or I3MS) and have moved monitoring data to our own Iridium modems as of the 2015–16 austral summer season. We retired the Iridium short-burst-data system (ITS) in October 2016 and have added its modem to the I3MS system to increase bandwidth.

This past austral summer season, the contractor installed a new antenna housing “doghouse” on the roof of the ICL. This will alleviate overcrowding and self-interference of antennas in the existing doghouse. We have monitored the temperature of the new doghouse over the winter and discovered issues with the door seal that will be addressed by the contractor. After repairs, we will migrate all GPS and Iridium antennas to the new housing in the 2017–18 season.

Personnel — No changes.

Computing and Data Management Services

South Pole System - This period began with the hiring process for our winterover detector operators for the upcoming season. Over 50 applications were screened and 15 phone interviews conducted. Five candidates were selected for in-person interviews, and job offers were made to the two strongest candidates. Two more candidates were selected as backups (these candidates were also referred to the South Pole Telescope). After both primary candidates successfully completed physical qualification (PQ), training in Madison started on August 1 and will continue until mid-October, when both operators will fly to Denver for organized team activities with ASC.

The ever-changing software requirements for our online software at Pole forced us to redesign our approach to software management and maintenance. To provide up-to-date software packages that are not readily available from the official Linux repositories, as well as IceCube-specific software packages, a custom snapshot of the IceCube software from the CernVM File System (CVMFS) was produced and tested on the South Pole Test System (SPTS). This allows us to run modern versions of Python, Boost, C++ and other software independently of the underlying operating system of a machine, and it provides a more common development basis between the online system in the South and the offline system in the Northern Hemisphere. This software snapshot will be rolled out on the South Pole System (SPS) during the early phases of the upcoming summer season.

With the transition to the 2017 run configuration in May, there were significant changes in the way IceCube monitors and reports physics data. The old “Calibration and Verification” and “i3moni” systems were decommissioned, as they are now fully integrated into IceCube’s new “Moni2.0” physics monitoring infrastructure. This new monitoring approach makes heavy use of the MongoDB database system. Until now, our database server has been a shared resource, providing several database systems (MongoDB, MySQL, PostgreSQL) on reliable but stand-alone hardware. To account for the increased importance of MongoDB for our operations, the database setup was completely redesigned, allowing for high-availability failover and load balancing, as well as increased security and data protection mechanisms. This multi-host setup is fully operational at SPTS and will come online at SPS once our science cargo arrives at Pole in the summer.

The new scintillator setup to be installed at the South Pole during the upcoming season needs to be integrated into the ICL network. For this, a new data acquisition host (‘sdaq01’) was configured and set up for testing at SPTS, and the necessary network extensions (VLANs and address spaces) were assigned. Testing of this new setup at the Physical Sciences Lab (PSL) is ongoing, and once the final design is determined it will be rolled out at Pole in parallel with the scintillator installations.

Scintillator testing at PSL was ramped up in preparation for the big summer activities. To facilitate easier access to remote equipment, three KVM switches were installed in the mDFL freezer control rooms.

Two laptops were ordered and configured to drive the large event display in the new visitor area in the B2 science lab at South Pole Station. These machines require a non-standard operating system and software and will be connected directly to the IceCube network in B2 to display live events from the detector.

To ensure uninterrupted data taking for the next year, we ordered, tested and shipped over 4,000 lbs of cargo to the South Pole via NSF’s cargo stream. This includes batteries to replenish ~50% of our UPSes and miscellaneous spare parts used during the current winter (hard drives, power supplies, etc.).

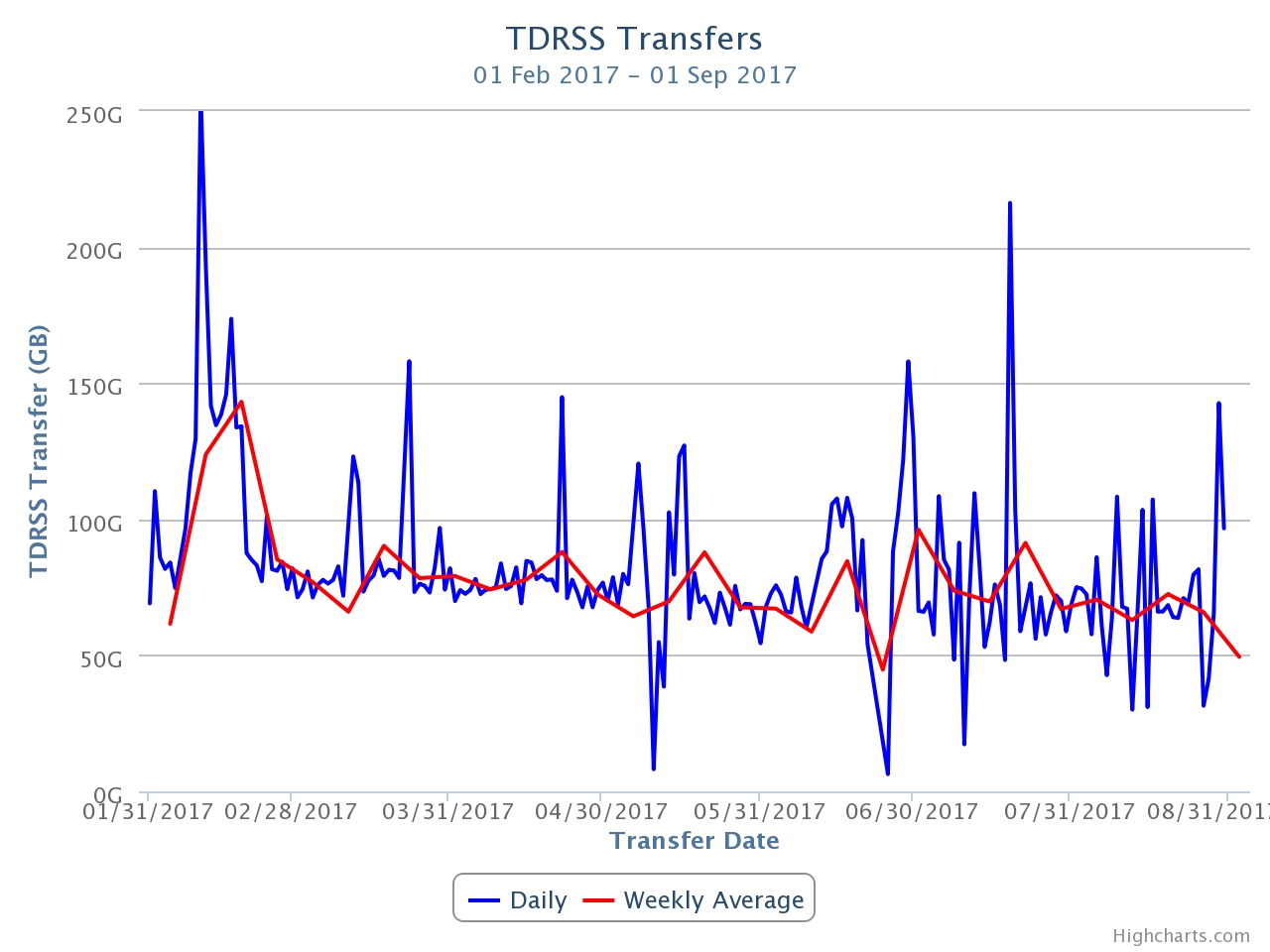

Data Transfer – Data transfer has performed nominally over the past ten months. Between February 2017 and August 2017 a total of 15.2 TB of data were transferred from the South Pole to UW-Madison via TDRSS, at an average rate of 73.2 GB/day. Figure 1 shows the daily satellite transfer rate and weekly average satellite transfer rate in GB/day through August 2017. The IC86 filtered physics data are responsible for 95% of the bandwidth usage.

Since September 2016, the new JADE software has handled all IceCube data flows: disk archive at the South Pole, satellite transfer to UW-Madison, and long-term archive to tape silos at NERSC and DESY. The new data management software is more stable and resilient than its predecessor. This has been confirmed by several months of experience of the winterovers and IT staff at UW-Madison operating the system, which has run smoothly with less maintenance effort.

JADE software development continues to be very active, adding new functionality to address new IceCube needs and further improving automation and ease of use. One important new feature added during the reporting period is “Priority Groups”. The IceCube data acquisition system is now capable of reacting to operator-generated requests to store primary “hitspool” data for studying special events in greater detail. Some of these requests can generate data sets on the order of tens to hundreds of gigabytes in a short period of time. These data are of interest to researchers and therefore need to be transferred via satellite. Because the volume can be quite high, a mechanism to steer data transfers according to their priority is needed to prevent opportunistic “hitspool” requests from delaying the regular filtered data flow. The new “Priority Groups” feature in JADE allows operators to configure different priorities per stream or per file, and in this way to manage satellite bandwidth more efficiently. This feature has also been used successfully to transfer special calibration data, allowing scientists to analyze them within a few days instead of waiting for the Pole season.
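The idea behind priority-steered transfers can be sketched as a priority queue drained against a bandwidth budget. This is a minimal Python illustration under assumed semantics (lower number means higher priority, strict priority order, first-in first-out within a priority, and draining stops at the first file that does not fit); the class and method names are hypothetical and are not JADE’s actual configuration or API.

```python
import heapq
from dataclasses import dataclass, field
from typing import List


@dataclass(order=True)
class QueuedFile:
    priority: int                    # lower number is sent first
    seq: int                         # FIFO tie-breaker within a priority
    name: str = field(compare=False)
    size_gb: float = field(compare=False)


class TransferQueue:
    """Hypothetical priority-ordered satellite transfer queue."""

    def __init__(self) -> None:
        self._heap: List[QueuedFile] = []
        self._seq = 0

    def submit(self, name: str, size_gb: float, priority: int) -> None:
        heapq.heappush(self._heap, QueuedFile(priority, self._seq, name, size_gb))
        self._seq += 1

    def drain(self, budget_gb: float) -> List[str]:
        """Send files in priority order until the next file would exceed the budget."""
        sent: List[str] = []
        while self._heap and self._heap[0].size_gb <= budget_gb:
            item = heapq.heappop(self._heap)
            budget_gb -= item.size_gb
            sent.append(item.name)
        return sent


q = TransferQueue()
q.submit("hitspool_request", 40.0, priority=2)
q.submit("filtered_a", 30.0, priority=1)
q.submit("filtered_b", 30.0, priority=1)
print(q.drain(70.0))  # ['filtered_a', 'filtered_b']
```

In this sketch a large low-priority request waits rather than displacing the regular filtered stream, which is the behavior the paragraph above motivates.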

Figure 1: TDRSS Data Transfer Rates, February 1, 2017–August 31, 2017. The daily transferred volumes are shown in blue and, superimposed in red, the weekly average daily rates are also displayed.

Data Archive – The IceCube raw data are archived to two copies on independent hard disks. During the reporting period (February 2017 to August 2017) a total of 217.8 TB of unique data were archived to disk averaging 1.0 TB/day.

In May 2017, the set of archival disks containing the raw data taken by IceCube during 2016 was received at UW-Madison. We are now using the new JADE software to handle these data. Once JADE has processed the files, their metadata are indexed, and the files are bundled and prepared for replication to the long-term archive.

In December 2015, a Memorandum of Understanding was signed between UW-Madison and NERSC/LBNL by which NERSC agreed to provide long-term archive services for the IceCube data until 2019. By implementing the long-term archive functionality using a storage facility external to the UW-Madison data center, we aim for an improved service at lower cost, since large facilities that routinely manage data at the level of hundreds of petabytes benefit from economies of scale that ultimately make the process more cost-efficient.

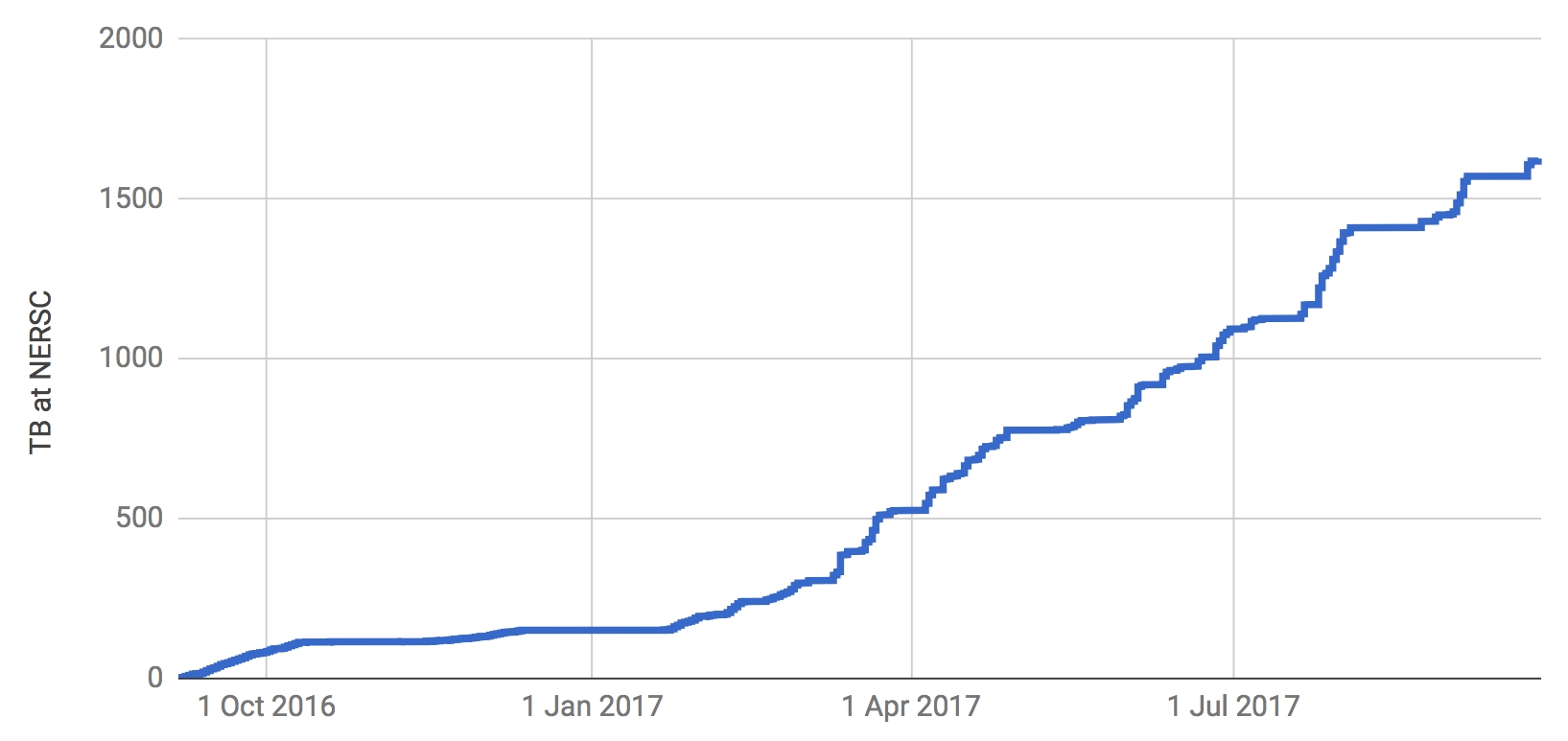

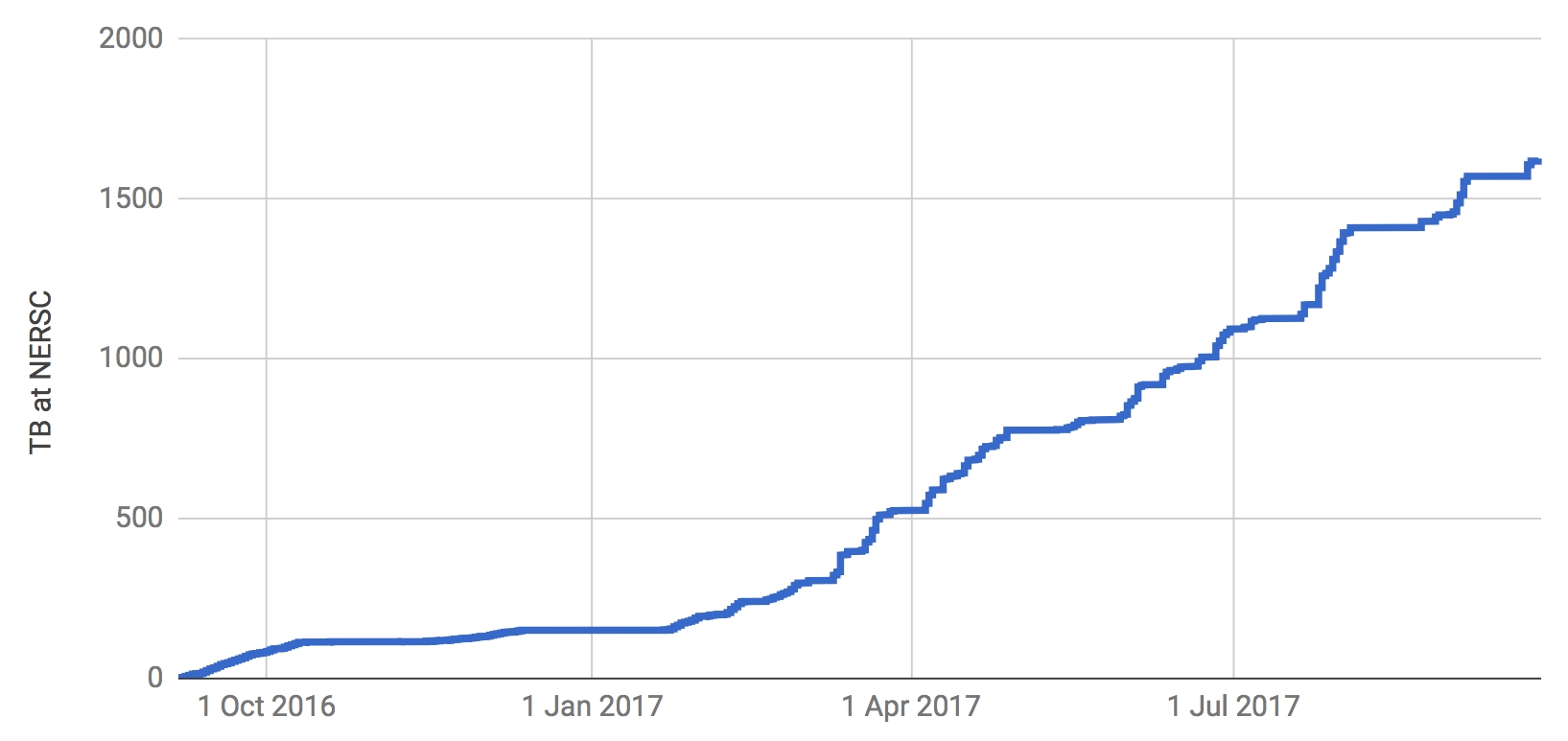

Since September 2016, we have been using the JADE software to bundle IceCube data files at UW-Madison and transfer them to NERSC. At the time of writing, the total volume of data archived at NERSC was 1625 TB. Figure 2 shows the rate at which data have been archived to NERSC since the start of this service in September 2016. The plan is to keep this archive stream continuously active while developing further JADE functionality that will allow us to steadily increase the performance and automation of this long-term archive data flow.


Figure 2: Volume of IceCube data archived at the NERSC tape facility by the JADE Long Term Archive service as a function of time.

Computing Infrastructure at UW-Madison

The total amount of data stored on disk in the data warehouse at UW-Madison is 6052 TB: 1914 TB for experimental data, 3853 TB for simulation and analysis and 285 TB for user data.

Two SuperMicro 6048R-E1CR36L servers with 36 8TB disks each were purchased in March. The main driver for this storage expansion was to provide operational buffer space for the new data archive workflows that index and bundle the data received from the South Pole and prepare it for transfer to NERSC and DESY for long-term archive.

The IceCube computing cluster at UW-Madison has continued to deliver reliable data processing services. Boosting the GPU computing capacity has been a high priority of the project since the Collaboration decided in 2012 to use GPUs for the photon propagation part of the simulation chain. Direct photon propagation was found to provide the required precision, and it is very well suited to GPU hardware, running about 100 times faster than on CPUs.

An expansion of the GPU cluster was purchased in April, consisting of eight SuperMicro 4027GR-TR chassis, each containing eight Nvidia GTX 1080 GPU cards, two Xeon E5-2637 v4 processors, 64 GB of RAM and 2 TB of disk. This cluster was deployed at the particle astrophysics research computing center at the University of Maryland, and it increased the total GPU computing capacity of the IceCube cluster at UW-Madison by about 20%.

The GPU cluster is focused on providing the capacity required to fulfill the Collaboration’s direct photon propagation simulation needs. These needs have been estimated to exceed the capacity of the GPU cluster at UW-Madison. Additional GPU resources at several IceCube sites, plus specific supercomputer allocations, help us approach the required capacity.

Distributed Computing - In March 2016, a new procedure to formally gather computing pledges from collaborating institutions was started. This data will be collected twice a year as part of the already existing process by which every IceCube institution updates its MoU before the Collaboration week meeting. Institutions that pledge computing resources for IceCube are asked to provide information on the average number of CPUs and GPUs that they commit to provide for IceCube simulation production during the next period. Table 1 shows the computing pledges per institution as of May 2017:

Figure 2: Volume of IceCube data archived at the NERSC tape facility by the JADE Long Term Archive service as a function of time.

Computing Infrastructure at UW-Madison

The total amount of data stored on disk in the data warehouse at UW-Madison is 6052 TB: 1914 TB for experimental data, 3853 TB for simulation and analysis and 285 TB for user data.

Two SuperMicro 6048R-E1CR36L servers, each with 36 8 TB disks, were purchased in March. The main driver for this storage expansion was to provide operational buffer space for the new data archive workflows that index and bundle the data received from the South Pole and prepare it for transfer to NERSC and DESY for long-term archiving.

The IceCube computing cluster at UW-Madison has continued to deliver reliable data processing services. Boosting GPU computing capacity has been a high priority of the project since the Collaboration decided in 2012 to use GPUs for the photon propagation part of the simulation chain. Direct photon propagation was found to provide the required precision, and it is very well suited to GPU hardware, running about 100 times faster than on CPUs.

An expansion of the GPU cluster was purchased in April, consisting of eight SuperMicro 4027GR-TR chassis, each containing eight NVIDIA GTX 1080 GPU cards, two Xeon E5-2637 v4 processors, 64 GB of RAM and 2 TB of disk. This cluster was deployed at the particle astrophysics research computing center at the University of Maryland, and it increased the total GPU computing capacity of the IceCube cluster at UW-Madison by about 20%.

The goal for the GPU cluster is to provide the capacity required to meet the Collaboration's direct photon propagation simulation needs. These needs have been estimated to exceed the capacity of the GPU cluster at UW-Madison. Additional GPU resources at several IceCube sites, together with dedicated supercomputer allocations, help us approach the required capacity.

Distributed Computing – In March 2016, a new procedure was started to formally gather computing pledges from collaborating institutions. These data are collected twice a year as part of the existing process by which every IceCube institution updates its MoU before the Collaboration meeting. Institutions that pledge computing resources for IceCube are asked to provide the average number of CPUs and GPUs they commit to provide for IceCube simulation production during the next period. Table 1 shows the computing pledges per institution as of May 2017:

| Site | Pledged CPUs | Pledged GPUs |
|---|---|---|
| Aachen | 83 | 29 |
| Alabama | – | 6 |
| Canada | 1700 | 180 |
| Brussels | 1000 | 14 |
| Chiba | 196 | 6 |
| Delaware | 272 | – |
| DESY-ZN | 1400 | 180 |
| Dortmund | 2304 | 50 |
| LBNL | 114 | – |
| Mainz | 24 | 20 |
| Marquette | 96 | 16 |
| MSU | 500 | 16 |
| UMD | 350 | 112 |
| UW-Madison | 700 | 376 |
| TOTAL | 8739 | 1005 |

Table 1: Computing pledges for simulation production from IceCube Collaboration institutions as of May 2017.

We are implementing a feedback planning process in which the resources available from computing pledges are regularly compared with simulation production needs and with the resources actually used. The goal is to manage global resource utilization more efficiently and to react to changes in the computing needs required to meet IceCube science goals.
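A minimal sketch of the bookkeeping behind such a feedback process, assuming the pledges are held in a simple lookup table. The pledge numbers are taken from Table 1, with blank cells treated as zero; the function names are illustrative, and the usage figures in the example are hypothetical placeholders, not measured usage.

```python
from typing import Dict, Tuple

# Pledges from Table 1 (May 2017): site -> (pledged CPUs, pledged GPUs).
# Blank cells in the table are treated as a pledge of 0.
PLEDGES: Dict[str, Tuple[int, int]] = {
    "Aachen": (83, 29),
    "Alabama": (0, 6),
    "Canada": (1700, 180),
    "Brussels": (1000, 14),
    "Chiba": (196, 6),
    "Delaware": (272, 0),
    "DESY-ZN": (1400, 180),
    "Dortmund": (2304, 50),
    "LBNL": (114, 0),
    "Mainz": (24, 20),
    "Marquette": (96, 16),
    "MSU": (500, 16),
    "UMD": (350, 112),
    "UW-Madison": (700, 376),
}


def pledge_totals(pledges: Dict[str, Tuple[int, int]]) -> Tuple[int, int]:
    """Return (total CPUs, total GPUs) summed over all sites."""
    cpus = sum(c for c, _ in pledges.values())
    gpus = sum(g for _, g in pledges.values())
    return cpus, gpus


def gpu_fulfillment(pledges: Dict[str, Tuple[int, int]],
                    used_gpus: Dict[str, float]) -> Dict[str, float]:
    """Ratio of average GPUs actually used to GPUs pledged, per site.

    Sites without a GPU pledge are skipped (no division by zero);
    sites missing from `used_gpus` count as 0 GPUs used.
    """
    return {
        site: used_gpus.get(site, 0.0) / gpus
        for site, (_, gpus) in pledges.items()
        if gpus > 0
    }


if __name__ == "__main__":
    print(pledge_totals(PLEDGES))  # (8739, 1005), matching the TOTAL row
    # Hypothetical usage figures, for illustration only.
    ratios = gpu_fulfillment(PLEDGES, {"UMD": 84.0, "Mainz": 25.0})
    print(ratios["UMD"], ratios["Mainz"])  # 0.75 1.25
```

A ratio well below 1 would flag a site whose pledge is not being used, and a ratio above 1 a site delivering more than pledged; how such ratios feed back into planning is not specified here.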

In recent years, a strong focus has been placed on enlarging the distributed infrastructure and making it more efficient. The main strategy has been to simplify the process for sites to join the IceCube distributed infrastructure and to reduce the effort needed to keep them connected. To this end, we have progressively implemented an infrastructure based on Pilot Jobs.

Pilot Jobs provide a homogeneous interface to heterogeneous computing resources. They also enable more efficient scheduling by delaying the decision of which payload to match to which resource until that resource is actually available.
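To illustrate the late-binding idea, here is a toy model in which pilots first start on heterogeneous sites and only then pull a matching payload from a central queue. It is a simplified sketch, not HTCondor's matchmaking: the `Job` and `Pilot` classes, the GPU-only matching rule and the site names are assumptions made for illustration.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Optional, Tuple


@dataclass
class Job:
    name: str
    needs_gpu: bool


@dataclass
class Pilot:
    site: str
    has_gpu: bool

    def accepts(self, job: Job) -> bool:
        # A CPU-only pilot cannot run a GPU payload; a GPU pilot runs anything.
        return self.has_gpu or not job.needs_gpu


def late_bind(queue: Deque[Job], pilot: Pilot) -> Optional[Job]:
    """Called once a pilot has started and reports in: hand it the first
    queued job it can run. The job-to-resource decision is made only now,
    not when the job was submitted."""
    for i, job in enumerate(queue):
        if pilot.accepts(job):
            del queue[i]  # safe: we return immediately after mutating
            return job
    return None


def run(jobs: List[Job], pilots: List[Pilot]) -> List[Tuple[str, str]]:
    """Assign jobs to pilots in the order the pilots start."""
    queue: Deque[Job] = deque(jobs)
    assignments = []
    for pilot in pilots:
        job = late_bind(queue, pilot)
        if job is not None:
            assignments.append((job.name, pilot.site))
    return assignments


if __name__ == "__main__":
    jobs = [Job("photon-prop-1", True), Job("level2-1", False),
            Job("photon-prop-2", True)]
    pilots = [Pilot("site-A", False), Pilot("site-B", True),
              Pilot("site-C", True)]
    print(run(jobs, pilots))
    # [('level2-1', 'site-A'), ('photon-prop-1', 'site-B'), ('photon-prop-2', 'site-C')]
```

Because the CPU-only pilot on site-A skips the GPU job at the head of the queue, no job is stranded waiting on a resource that cannot run it, which is the scheduling benefit of deferring the match.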

To implement this Pilot Job paradigm for the distributed infrastructure, IceCube uses some of the federation technologies within HTCondor [1]. Pilot Jobs in HTCondor are called “glideins” and consist of a specially configured instance of the HTCondor worker node component, which is then submitted as a job to external batch systems.

Several of the sites that provide computing for IceCube are also resource providers for other scientific experiments that use distributed computing infrastructures, so they already provide a standard (Grid) interface to their batch systems. In these cases we can leverage the standard GlideinWMS infrastructure operated by the Open Science Grid [2] project to integrate those resources into the central pool at UW-Madison and provide transparent access to them via the standard HTCondor tools. The sites that use this mechanism to integrate with the IceCube global workload system are: Aachen, Canada, Brussels, DESY, Dortmund, Wuppertal and Manchester.

Some of the IceCube collaborating institutions that provide access to local computing resources do not have a Grid interface; access is possible only through a local account. To address those sites we have developed a lightweight glidein Pilot Job factory that can be deployed as a cron job in the user’s account. This software, code-named “pyGlidein”, allows us to seamlessly integrate these local cluster resources with the IceCube global workload system, so that jobs can run anywhere in a way that is completely transparent to users. The sites that currently use this mechanism are: Canada, Brussels, DESY, Dortmund, Mainz, Marquette, MSU, Munich, NBI, PSU, UMD and Uppsala. There are ongoing efforts at the Delaware, Chiba and LBNL sites to deploy pyGlidein.
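The core decision such a cron-driven factory has to make on each invocation can be sketched as follows. This is a hypothetical illustration, not pyGlidein's actual code: the inputs (idle jobs in the central pool that the site could run, glideins already queued or running locally, and site-imposed caps) and the function name are assumptions.

```python
def glideins_to_submit(idle_jobs: int, local_idle: int, local_running: int,
                       max_total: int, max_per_cycle: int) -> int:
    """Number of new glideins to submit in this cron cycle (hypothetical).

    idle_jobs      -- jobs waiting in the central pool that this site could run
    local_idle     -- glideins already queued in the local batch system
    local_running  -- glideins currently running at the site
    max_total      -- cap on glideins (queued + running) the site allows
    max_per_cycle  -- throttle on submissions per invocation
    """
    if min(idle_jobs, local_idle, local_running, max_total, max_per_cycle) < 0:
        raise ValueError("counts must be non-negative")
    # Demand not yet covered by glideins that are already waiting to start.
    uncovered = max(idle_jobs - local_idle, 0)
    # Room left under the site-wide cap.
    headroom = max(max_total - local_idle - local_running, 0)
    return min(uncovered, headroom, max_per_cycle)


if __name__ == "__main__":
    # 50 idle jobs, 10 glideins queued, 30 running, cap 60, throttle 25.
    print(glideins_to_submit(50, 10, 30, 60, 25))  # 20
```

Running this from cron keeps the factory stateless: each cycle recomputes demand from the current counts, so a missed or failed run is simply corrected on the next one.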

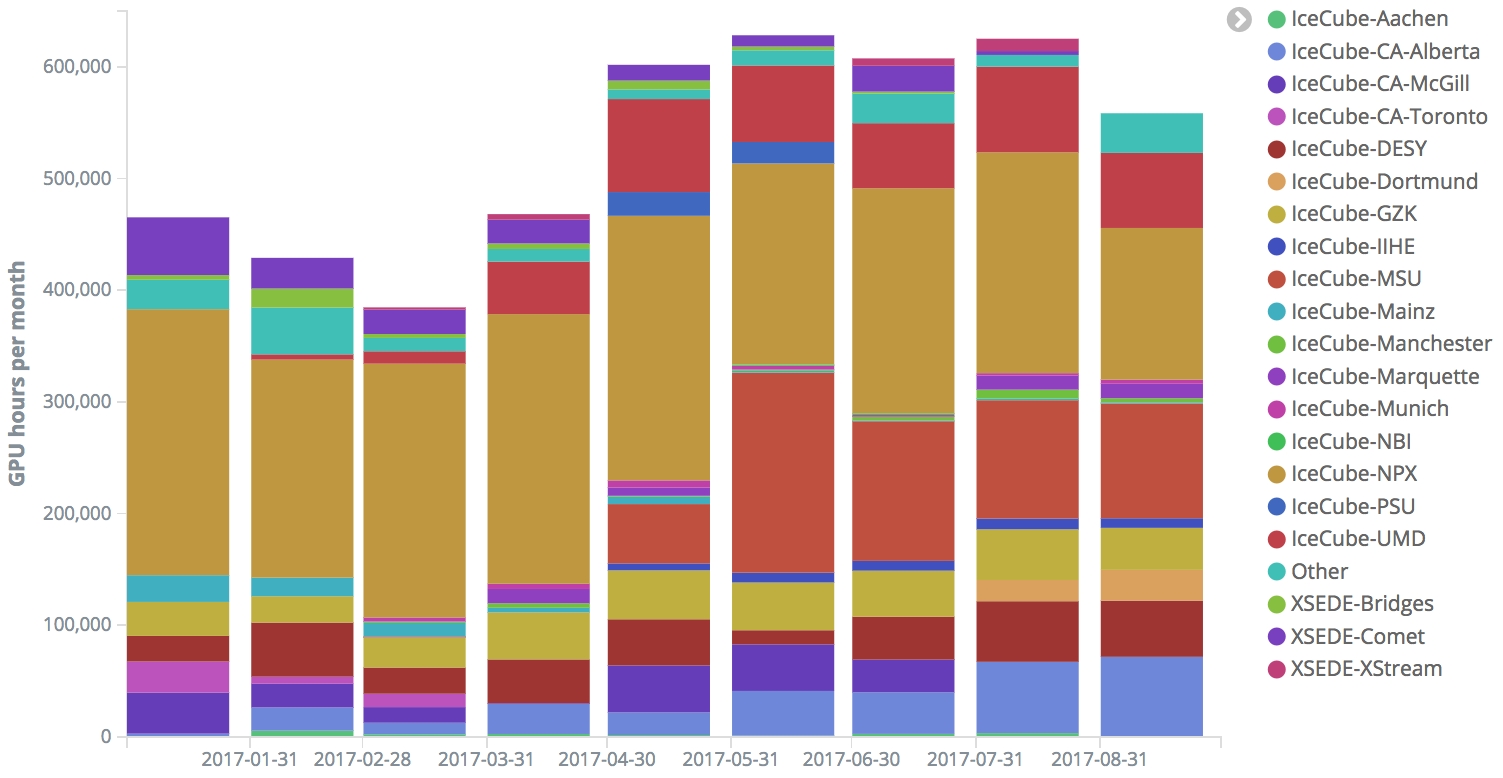

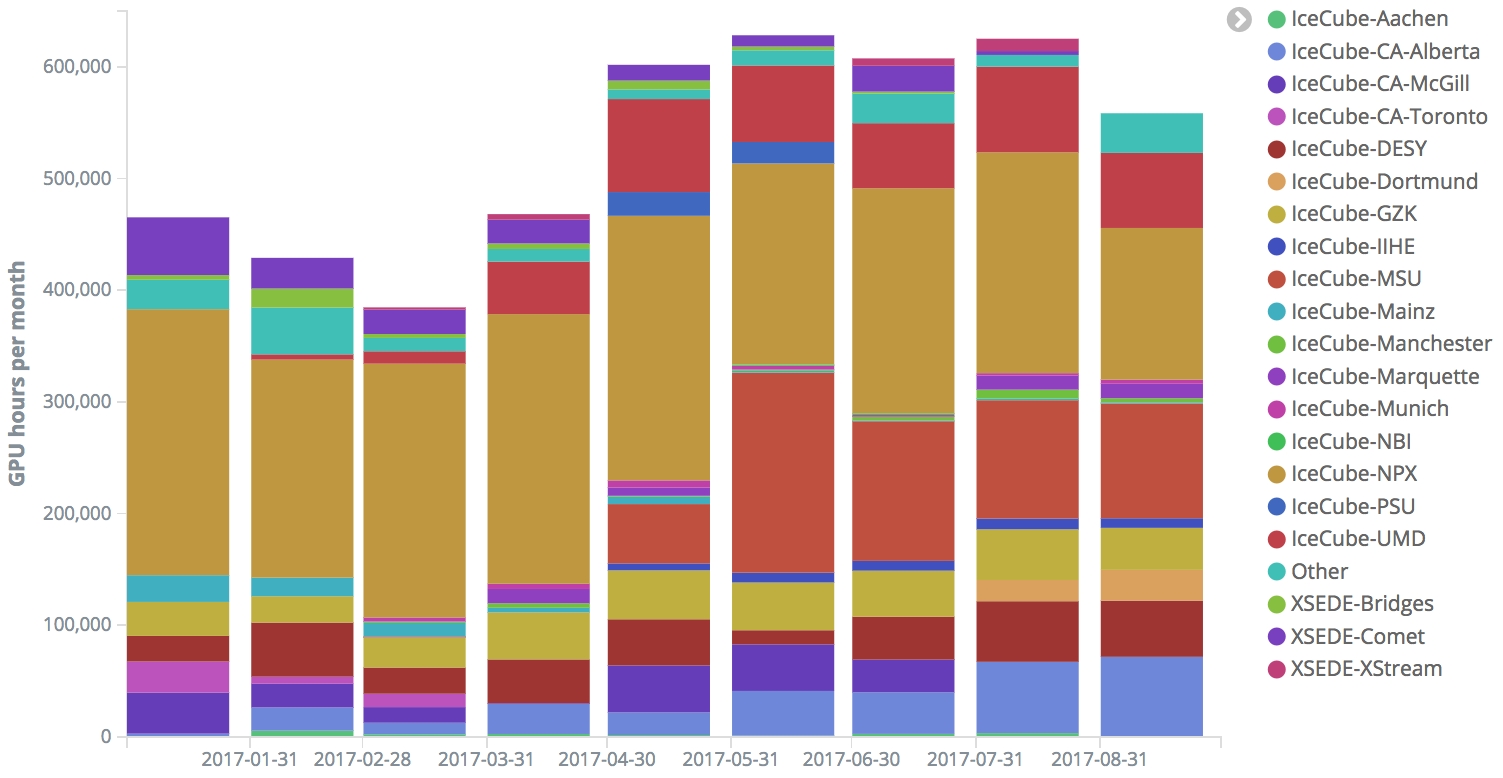

Figure 3 shows the GPU capacity used by IceCube jobs at the various sites contributing to this distributed infrastructure. We continue to observe a growing trend both in the number of sites integrated and in the computing time delivered.

Figure 3: GPU hours per month used by IceCube jobs since the start of the year. Each color represents a different site participating in the distributed infrastructure. The number of sites has steadily increased during this period, making the pool of available resources significantly larger.

Beyond the computing capacity provided by IceCube institutions and the opportunistic access to Grid sites willing to share their idle capacity, IceCube started exploring the possibility of obtaining additional computing resources through targeted allocation requests to supercomputing facilities such as the NSF Extreme Science and Engineering Discovery Environment (XSEDE). IceCube submitted its first research allocation request to XSEDE in October 2015 (allocation number TG-PHY150040), which was awarded compute time on two GPU-capable systems: SDSC Comet [3] and PSC Bridges [4]. In April 2017 we submitted a renewal of this research allocation, which this time was awarded compute time on three systems: SDSC Comet (146,000 SUs), PSC Bridges (157,000 SUs) and Stanford XStream [5] (475,000 SUs).

After several months of continuous running on XSEDE supercomputers, IceCube stands out as one of their largest GPU users. This contribution was acknowledged by a mention of the IceCube experiment in the press release [6] published by the San Diego Supercomputer Center in May to announce a Comet GPU upgrade.

To continue expanding the pool of available computing resources for IceCube, in April 2017 we requested a test allocation on the TITAN [7] supercomputer at the DOE Oak Ridge National Laboratory. We were awarded 1 million TITAN-hours through the “Director’s Discretionary Allocation” program, with 12 months to use them to test the feasibility of running IceCube payloads on that system. TITAN is a very attractive resource because of its large number of GPUs: 18,688 NVIDIA Tesla K20X. However, its runtime environment is very different from the standard HTC clusters where IceCube usually runs, and we have an ongoing project to investigate the best options for integrating TITAN efficiently with the IceCube computing infrastructure.

To integrate all these heterogeneous infrastructures, we rely strongly on the HTCondor software and on the various services that the Open Science Grid (OSG) project has built and operates around it. We remain active in the OSG and HTCondor communities, participating in discussions and workshops. During the reporting period, IceCube members gave oral presentations at the OSG All Hands Meeting in La Jolla and at the HTCondor Workshop in Madison. We also contributed to the 2017 OSG annual report, which highlights that IceCube integrates more clusters into OSG than any other experiment.


Personnel

A new Linux system administrator (“devops”) position to work on the IceCube distributed computing infrastructure was posted and filled by Heath Skarlupka, who started on the project on August 21, 2017.

Data Release

IceCube is committed to releasing data to the scientific community. The links below point to data sets produced by AMANDA/IceCube researchers, along with a basic description. Because of the challenging demands of event reconstruction, background rejection and systematic effects, data are released after the main analyses are completed and the results are published by the IceCube Collaboration.

Since summer 2016, thanks to UW-Madison's subscription to the EZID [8] service, we have been able to issue persistent identifiers for datasets. These are Digital Object Identifiers (DOIs) that follow the DataCite metadata standard [9].

We are rolling out a process to ensure that all datasets made public by IceCube have a DOI and use the DataCite metadata capability to “link” each dataset to its associated publication, whenever applicable. Using DataCite DOIs to identify IceCube public datasets increases their visibility by making them discoverable in the search.datacite.org portal (see https://search.datacite.org/works?resource-type-id=dataset&query=icecube).
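As a sketch of what the “link” to a publication can look like in DataCite metadata, the record below uses the schema's `relatedIdentifiers` property with the `IsSupplementTo` relation type. The dataset DOI and title come from the dataset list in this section; the publication DOI is a hypothetical placeholder, and fields a real registration also requires (creators, publisher, publication year) are deliberately omitted. The helper names are illustrative.

```python
import json
from urllib.parse import urlencode

DATACITE_SEARCH = "https://search.datacite.org/works"


def dataset_record(doi: str, title: str, publication_doi: str) -> dict:
    """DataCite-style attributes linking a dataset DOI to the paper it supports.

    Only the fields relevant to the link are filled in; a complete record
    also needs creators, publisher and publicationYear.
    """
    return {
        "doi": doi,
        "titles": [{"title": title}],
        "types": {"resourceTypeGeneral": "Dataset"},
        "relatedIdentifiers": [{
            "relatedIdentifier": publication_doi,
            "relatedIdentifierType": "DOI",
            "relationType": "IsSupplementTo",  # the dataset supplements the paper
        }],
    }


def search_url(query: str) -> str:
    """Build a dataset search URL for the DataCite search portal."""
    return DATACITE_SEARCH + "?" + urlencode(
        {"resource-type-id": "dataset", "query": query})


if __name__ == "__main__":
    record = dataset_record(
        "10.21234/B4WC7T",
        "A combined maximum-likelihood analysis of the astrophysical neutrino flux",
        "10.xxxx/hypothetical-paper",  # placeholder, not a real DOI
    )
    print(json.dumps(record, indent=2))
    print(search_url("icecube"))
```

The second print reproduces the portal query URL quoted above.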

Datasets (last release on 15 Nov 2016): http://icecube.wisc.edu/science/data

The pages below contain information about the data that were collected and links to the data files.

1. A combined maximum-likelihood analysis of the astrophysical neutrino flux: https://doi.org/10.21234/B4WC7T
2. Search for point sources with first year of IC86 data: https://doi.org/10.21234/B4159R
3. Search for sterile neutrinos with one year of IceCube data: http://icecube.wisc.edu/science/data/IC86-sterile-neutrino
4. The 79-string IceCube search for dark matter: http://icecube.wisc.edu/science/data/ic79-solar-wimp
5. Observation of Astrophysical Neutrinos in Four Years of IceCube Data: http://icecube.wisc.edu/science/data/HE-nu-2010-2014
6. Astrophysical muon neutrino flux in the northern sky with 2 years of IceCube data: https://icecube.wisc.edu/science/data/HE_NuMu_diffuse
7. IceCube-59: Search for point sources using muon events: https://icecube.wisc.edu/science/data/IC59-point-source
8. Search for contained neutrino events at energies greater than 1 TeV in 2 years of data: http://icecube.wisc.edu/science/data/HEnu_above1tev
9. IceCube Oscillations: 3 years muon neutrino disappearance data: http://icecube.wisc.edu/science/data/nu_osc
10. Search for contained neutrino events at energies above 30 TeV in 2 years of data: http://icecube.wisc.edu/science/data/HE-nu-2010-2012
11. IceCube String 40 Data: http://icecube.wisc.edu/science/data/ic40
12. IceCube String 22–Solar WIMP Data: http://icecube.wisc.edu/science/data/ic22-solar-wimp
13. AMANDA 7 Year Data: http://icecube.wisc.edu/science/data/amanda

Data Processing and Simulation Services

Data Reprocessing –

At the end of 2012, the IceCube Collaboration agreed to store the compressed SuperDST as part of the long-term archive of IceCube data, starting with the IC86-2011 run. A server and a partition of the main tape library were dedicated to this reprocessing task. Raw data tapes are read to disk, and the raw data files are processed into SuperDST, which is saved in the data warehouse.

Now that all tapes from the Pole are in hand, we plan to complete the remaining 10% of this reprocessing task. The total number of files for seasons IC86-2011, IC86-2012 and IC86-2013 is 695,875, of which 67,812 remain to be processed. The breakdown per season is: IC86-2011: 221,687 processed out of 236,611; IC86-2012: 215,934 processed out of 222,952; IC86-2013: 190,442 processed out of 236,312. About 58,000 of the remaining files are on about 100 tapes; the rest are spread over about 350 more. Tape dumping procedures are being integrated with the copy of raw data to NERSC.
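The per-season file counts above can be cross-checked with a few lines of arithmetic, which confirm that the quoted totals are consistent and that the outstanding work is just under 10% of the files. The dictionary layout and function names are illustrative choices.

```python
from typing import Dict, Tuple

# File counts per season from the text: season -> (processed, total).
SEASONS: Dict[str, Tuple[int, int]] = {
    "IC86-2011": (221_687, 236_611),
    "IC86-2012": (215_934, 222_952),
    "IC86-2013": (190_442, 236_312),
}


def remaining_by_season(seasons: Dict[str, Tuple[int, int]]) -> Dict[str, int]:
    """Files still to be processed in each season."""
    return {name: total - done for name, (done, total) in seasons.items()}


def overall_progress(seasons: Dict[str, Tuple[int, int]]) -> Tuple[int, int, float]:
    """Return (total files, files remaining, fraction remaining)."""
    total = sum(t for _, t in seasons.values())
    remaining = sum(remaining_by_season(seasons).values())
    return total, remaining, remaining / total


if __name__ == "__main__":
    print(remaining_by_season(SEASONS))
    # {'IC86-2011': 14924, 'IC86-2012': 7018, 'IC86-2013': 45870}
    total, remaining, frac = overall_progress(SEASONS)
    print(total, remaining, f"{frac:.1%}")  # 695875 67812 9.7%
```

Most of the remaining work sits in IC86-2013, which is consistent with most remaining files being concentrated on a relatively small number of tapes.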

Offline Data Filtering –

Data collection for the IC86-2017 season started on May 18, 2017. A new compilation of data processing scripts had been validated and benchmarked beforehand with the data taken during the 24-hour test run using the new configuration. The differences with respect to the IC86-2016 season scripts are minimal, so we estimate that offline production will require about 750,000 CPU hours on the IceCube cluster at the UW-Madison data center. About 100 TB of storage is required for both the Pole-filtered input data and the output of offline production; we were able to reduce this requirement by using a more efficient compression algorithm in offline production. Since the start of the season, we have been using a new database structure at the Pole and in Madison for offline production. Data processing is proceeding smoothly, and no major issues have occurred. Level2 data are typically available one and a half weeks after data taking.

Additional data validation checks have been added to detect data value issues and corruption. Replication of all data at the DESY-Zeuthen collaborating institution is proceeding in a timely manner.

The reprocessing (pass2) started on June 1, 2017, and is about 75% complete. Seven years (2010–2016) are being reprocessed: four years (2011–2014) start at the sDST level and three years (2010, 2015 and 2016) start from raw data. Starting from raw data was required for 2010 because sDST data were not available. Since the sDST data for 2015 and 2016 had already been SPE corrected, they had to be regenerated in order to apply the latest SPE fits, as is done for the other seasons.

The estimated resources for pass2 are 11,000,000 CPU hours and 520 TB of storage for sDST and Level2 data.

Level3 data production for the current season is planned for four physics analysis groups. Cataloging and bookkeeping for Level3 data are the same as for Level2.

The additional resources required to process the pass2 Level2 data to Level3 have been estimated at 4,000,000 CPU hours and 30 TB of storage.
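Combining the pass2 and Level3 estimates gives the total budget for this reprocessing campaign. The sketch below also estimates the CPU time still needed for pass2 from the quoted ~75% completion, under the simplifying assumption (ours, not the report's) that CPU cost is spread evenly over the dataset. Names are illustrative.

```python
from typing import Dict, Tuple

# Estimates from the text: stage -> (CPU hours, storage in TB).
ESTIMATES: Dict[str, Tuple[int, int]] = {
    "pass2 sDST + Level2": (11_000_000, 520),
    "pass2 Level2 -> Level3": (4_000_000, 30),
}


def totals(estimates: Dict[str, Tuple[int, int]]) -> Tuple[int, int]:
    """Return (total CPU hours, total storage in TB)."""
    cpu = sum(c for c, _ in estimates.values())
    tb = sum(s for _, s in estimates.values())
    return cpu, tb


def remaining_cpu_hours(total_cpu_hours: float, fraction_complete: float) -> float:
    """CPU hours still needed, assuming cost scales linearly with progress."""
    if not 0.0 <= fraction_complete <= 1.0:
        raise ValueError("fraction_complete must be in [0, 1]")
    return total_cpu_hours * (1.0 - fraction_complete)


if __name__ == "__main__":
    print(totals(ESTIMATES))  # (15000000, 550)
    print(remaining_cpu_hours(11_000_000, 0.75))  # 2750000.0
```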

Simulation –

The production of Monte Carlo simulations of the IC86-2012 detector configuration concluded in October 2016. A new production of Monte Carlo simulations has since begun with the IC86-2016 detector configuration, which is representative of the trigger and filter configurations used from 2012 through 2016. As with previous productions, Level2 background simulation data are generated directly to reduce storage requirements. However, as part of the new production plan, intermediate photon-propagated data are now stored on disk at DESY and reused for different detector configurations in order to reduce GPU requirements. The transition to the 2016 configuration was done in conjunction with a switch to IceSim 5, which contains improvements in memory and GPU utilization in addition to earlier improvements to correlated noise generation, Earth modeling, and lepton propagation. Current simulations run on IceSim 5.1.3, which includes further improvements and bug fixes for various modules. Direct photon propagation is currently done on dedicated GPU hardware located at several IceCube Collaboration sites and through opportunistic grid computing, where the number of available resources continues to grow.
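The reuse of stored photon-propagated data can be pictured as caching the expensive GPU step and rerunning only the cheaper detector-response step for each configuration. The sketch below is hypothetical: the function names, the cache key (event set and ice model) and the stand-in computations are illustrative assumptions, not IceSim or IceProd interfaces, and an in-memory `functools.lru_cache` stands in for the on-disk store.

```python
from functools import lru_cache
from typing import Dict, List, Tuple


@lru_cache(maxsize=None)
def propagate_photons(event_set: str, ice_model: str) -> Tuple[int, ...]:
    """Stand-in for the expensive GPU photon propagation step.

    Its output does not depend on the trigger/filter configuration, so it
    can be computed once and shared. Returning a tuple keeps the cached
    value immutable.
    """
    # Placeholder "photon hits" derived from the inputs, for illustration only.
    return tuple(range(len(event_set) + len(ice_model)))


def detector_response(hits: Tuple[int, ...], config: str) -> Dict[str, object]:
    """Stand-in for the cheaper, configuration-specific detector simulation."""
    return {"config": config, "n_hits": len(hits)}


def simulate(event_set: str, ice_model: str,
             configs: List[str]) -> List[Dict[str, object]]:
    hits = propagate_photons(event_set, ice_model)  # GPU step, cached
    return [detector_response(hits, cfg) for cfg in configs]


if __name__ == "__main__":
    simulate("dataset-A", "ice-model-X", ["IC86-2016"])
    simulate("dataset-A", "ice-model-X", ["IC86-2012", "IC86-2013"])
    # The expensive step ran once for three detector configurations.
    print(propagate_photons.cache_info().misses)  # 1
```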

The simulation production team organizes periodic workshops to explore better and more efficient ways of meeting the simulation needs of analyzers. This includes software improvements as well as new strategies, such as providing tools to generate targeted simulations optimized for individual analyses instead of a one-size-fits-all approach.

The centralized production of Monte Carlo simulations has moved away from running separate instances of IceProd to a single central instance that relies on glideins running at satellite sites. Production has transitioned to IceProd 2, a newly redesigned simulation scheduling system; the full transition was completed during the Spring 2017 Collaboration Meeting. Production throughput on IceProd 2 has continually increased thanks to the incorporation of more dedicated and opportunistic resources and a number of code optimizations. A new set of monitoring tools is being developed to track efficiency and guide further optimizations.

IceCube Software Coordination

The software systems spanning the IceCube Neutrino Observatory, from embedded data acquisition code to high-level scientific data processing, benefit from concerted efforts to manage their complexity. In addition to providing comprehensive guidance for the development and maintenance of the software, the IceCube Software Coordinator, Alex Olivas, works with the IceCube Coordination Committee, the IceCube Maintenance and Operations Leads, the Analysis Coordinator, and the Working Group Leads to respond to current operational and analysis needs and to plan for the anticipated evolution of the IceCube software systems. In the last year, software working group leads have been appointed for the following groups: core software, simulation, reconstruction, science support, and infrastructure. Efforts continue to ensure that the software group makes the best use of in-kind contributions to the development and maintenance of IceCube's physics software stack.

The IceCube Collaboration contributes software development labor via the semiannual MoU updates. Software code sprints are organized monthly with the software developers to tackle topical issues. Progress is tracked, among other means, through open software tickets tied to monthly milestones.

Recent major advances in core software include:

· Implementation of zlib compression in IceTray, saving approximately 30% of disk space;
· Optimization of CLSim GPU utilization by more than a factor of 2;
· Memory-tracking instrumentation, which led to the discovery and remedy of significant memory leaks, improving simulation throughput;
· A 3-month course on C++ for IceCube collaborators, run by the software coordinator, with several dozen attendees;
· Improvements in the “sanity checker” automated instrumentation that validates new simulation software releases, with the aim of eventually reducing the time to detect serious physics-level bugs from months to days.
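The disk saving from compression depends strongly on the data being compressed. A quick way to measure it for a given payload with Python's standard `zlib` module is sketched below; the payload in the example is synthetic, so its ratio says nothing about the ~30% figure quoted above for IceTray files, and the helper name is illustrative.

```python
import struct
import zlib


def space_saving(payload: bytes, level: int = 6) -> float:
    """Fraction of bytes saved by zlib-compressing `payload`.

    0.0 means no saving; the value can be negative for incompressible data,
    since zlib adds a small header and checksum.
    """
    if not payload:
        return 0.0
    compressed = zlib.compress(payload, level)
    # Round-trip check: compression must be lossless.
    if zlib.decompress(compressed) != payload:
        raise RuntimeError("zlib round trip failed")
    return 1.0 - len(compressed) / len(payload)


if __name__ == "__main__":
    # Synthetic records: little-endian (uint32 channel, float32 charge) pairs.
    records = b"".join(struct.pack("<If", ch % 60, 0.25 * (ch % 7))
                       for ch in range(10_000))
    print(f"saved {space_saving(records):.0%}")
```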

Calibration

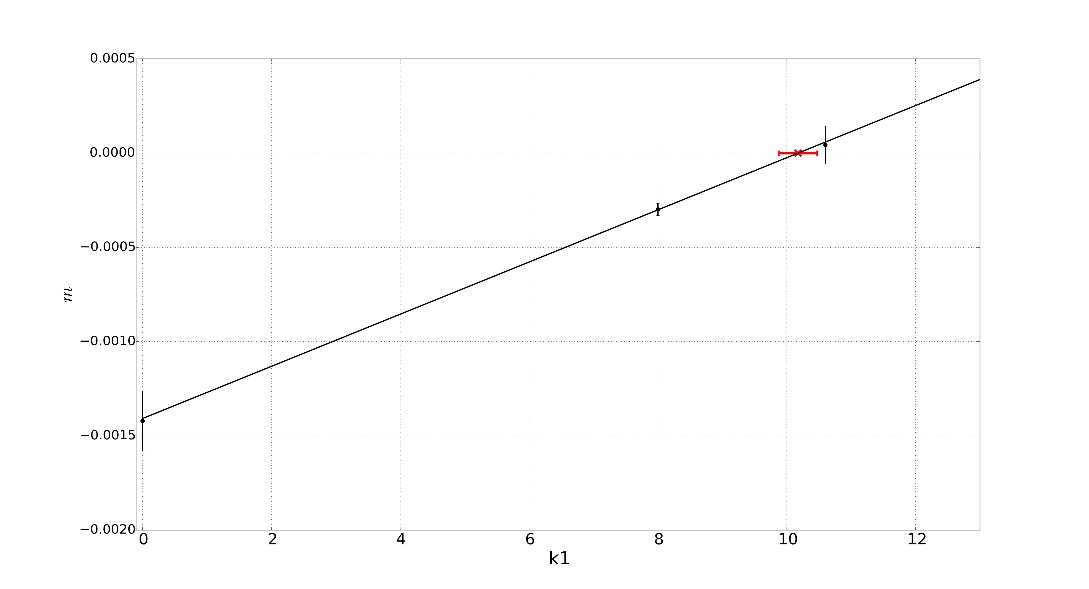

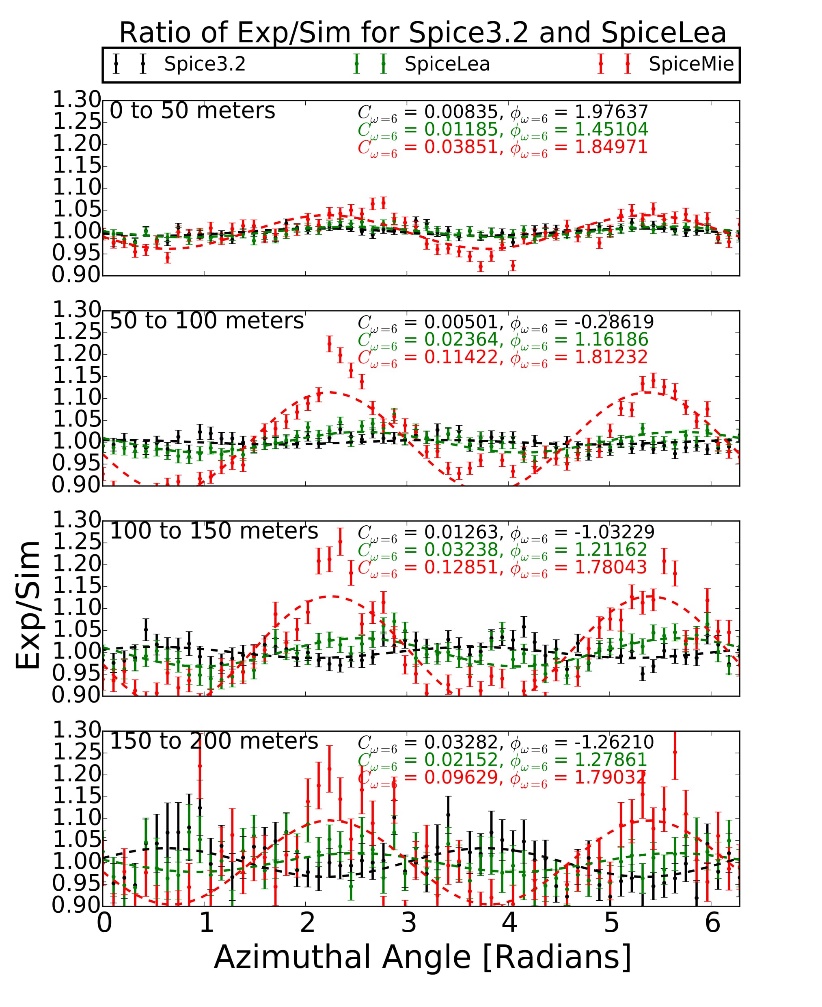

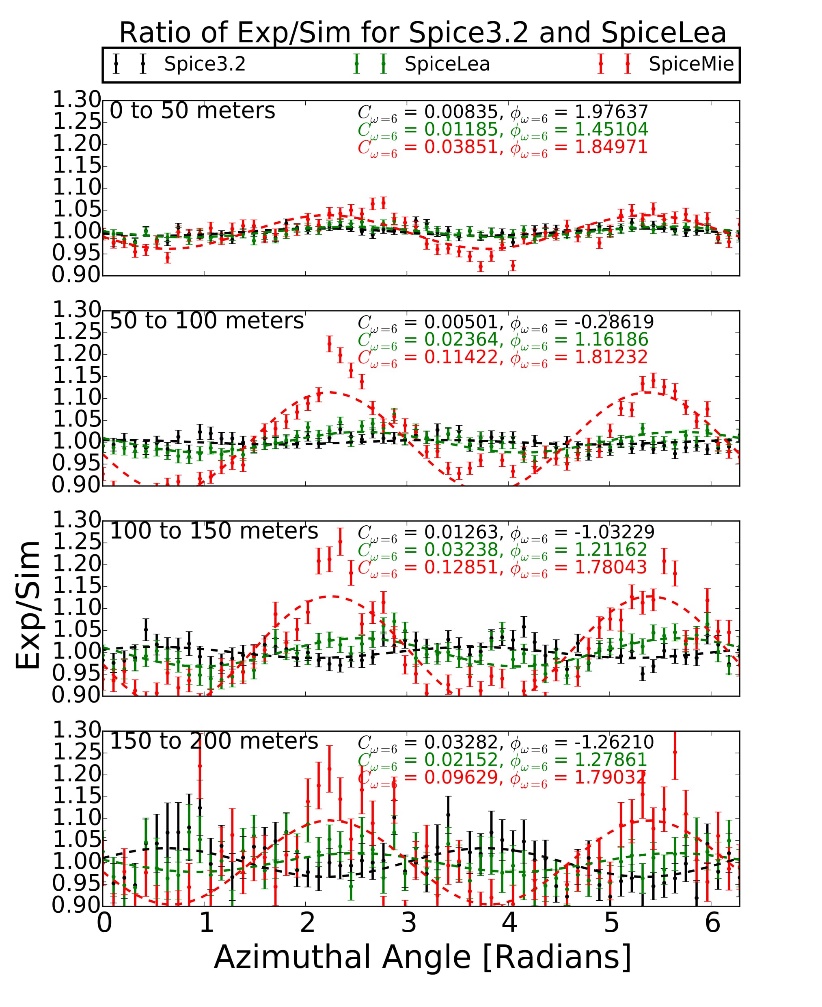

Fig 1: The ratio between data and simulation of the observed charge as a function of azimuth, normalized to the average observed charge from atmospheric muons. The red, green and black data points correspond to simulations with 0, 8 and 10.6% anisotropy strength, respectively. The lines are drawn using the amplitude and phase determined by decomposing these ratios into Fourier space and picking out the w = 2 component corresponding to the anisotropy.

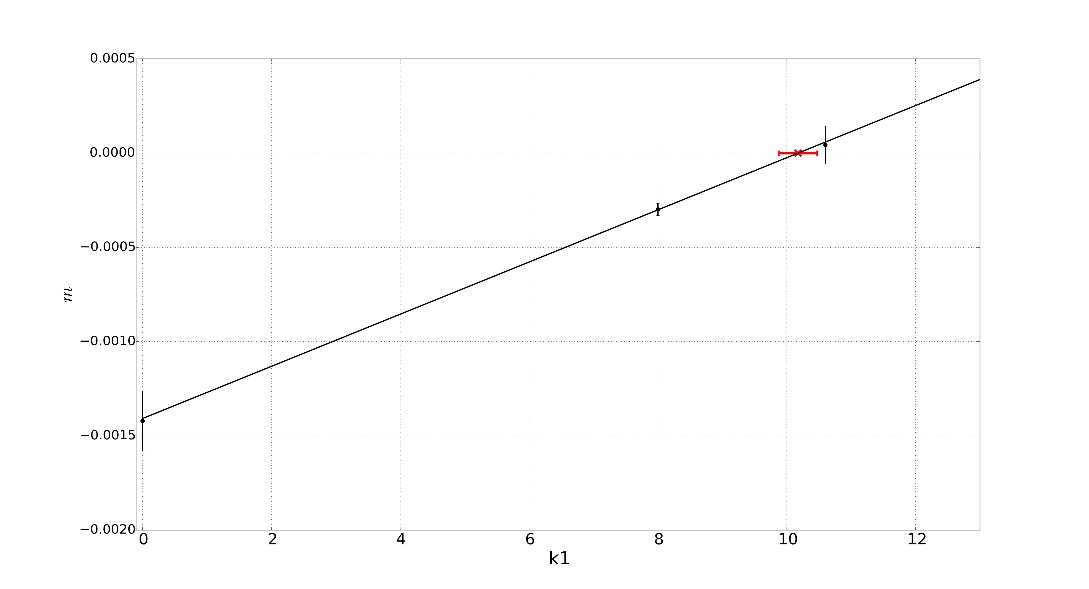

A primary focus of the calibration group continues to be improving our knowledge of the glacial ice properties. It is known that scattering in the ice exhibits an anisotropy: scattering is stronger along the same axis as the ice tilt and weaker along the orthogonal axis. This anisotropy can be measured using both LED flasher data and down-going atmospheric muons. The latter method uses Monte Carlo simulations with different anisotropy strengths and compares the resulting azimuthal charge distributions between data and simulation for increasing muon track-to-DOM distances, as shown in Fig. 1. By decomposing the ratio of data to MC into Fourier space, the amplitude of the frequency mode corresponding to the anisotropy (w = 2) is determined for each distance bin and for each anisotropy strength. If the simulated anisotropy strength matches the one realized in the data, the amplitude should not change with distance, i.e. the slope of amplitude versus distance should be 0. The anisotropy can therefore be determined by finding the simulated strength at which the slope of the linear trend between amplitude and distance crosses zero. The optimal anisotropy strength, approximately 10.2% (shown in Fig. 2 by the red ‘x’), is consistent with the optimal value determined using flasher data, providing confidence in our ability to measure the anisotropy.
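The Fourier step can be sketched as projecting the binned data/MC ratio onto the w = 2 mode. The code below is an illustrative implementation of that projection for evenly spaced azimuth bins, written from the description above rather than taken from the calibration group's analysis; the bin count and the synthetic test signal are made up.

```python
import math
from typing import Sequence, Tuple


def fourier_mode(ratio: Sequence[float], w: int = 2) -> Tuple[float, float]:
    """Amplitude and phase of the w-th Fourier mode of values sampled at
    N evenly spaced azimuth bins, phi_i = 2*pi*i/N.

    Model: ratio(phi) ~ c0 + A*cos(w*(phi - phase)).
    Requires N > 2*w so that the mode is resolved.
    """
    n = len(ratio)
    if n <= 2 * w:
        raise ValueError("need more than 2*w azimuth bins")
    a = b = 0.0
    for i, r in enumerate(ratio):
        phi = 2.0 * math.pi * i / n
        a += r * math.cos(w * phi)
        b += r * math.sin(w * phi)
    a *= 2.0 / n  # a = A*cos(w*phase)
    b *= 2.0 / n  # b = A*sin(w*phase)
    amplitude = math.hypot(a, b)
    phase = math.atan2(b, a) / w  # defined modulo 2*pi/w
    return amplitude, phase


if __name__ == "__main__":
    # Synthetic ratio with a 5% w=2 modulation at phase 0.3 rad.
    bins = 36
    ratio = [1.0 + 0.05 * math.cos(2 * (2 * math.pi * i / bins - 0.3))
             for i in range(bins)]
    print(fourier_mode(ratio))  # approximately (0.05, 0.3)
```

Repeating this for each track-to-DOM distance bin gives the amplitude-versus-distance points whose slope is then fitted for each simulated anisotropy strength.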


Fig 2: The slopes, m, of the change in amplitude of w=2 Fourier mode versus track-to-DOM distance (y-axis), as a function of each simulated anisotropy, k1 (x-axis). The slopes are linearly dependent on the simulation anisotropy strength, with an intercept at 0 achieved for an anisotropy of approximately 10.2%, which is indicated by the red cross.
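The last step, finding the anisotropy strength at which the fitted slope m vanishes, amounts to a straight-line fit of m against k1 followed by solving for its root. A minimal ordinary-least-squares sketch follows; the sample points in the usage example are invented so that the root falls at 10.2%, and they are not the measured slopes.

```python
from typing import Sequence


def zero_crossing(k: Sequence[float], m: Sequence[float]) -> float:
    """Fit m = slope*k + intercept by ordinary least squares and return
    the k at which the fitted line crosses m = 0."""
    n = len(k)
    if n != len(m) or n < 2:
        raise ValueError("need at least two (k, m) pairs of equal length")
    k_mean = sum(k) / n
    m_mean = sum(m) / n
    sxx = sum((x - k_mean) ** 2 for x in k)
    sxy = sum((x - k_mean) * (y - m_mean) for x, y in zip(k, m))
    if sxx == 0:
        raise ValueError("k values must not all be equal")
    slope = sxy / sxx
    if slope == 0:
        raise ValueError("flat trend: no zero crossing")
    intercept = m_mean - slope * k_mean
    return -intercept / slope


if __name__ == "__main__":
    # Invented slopes lying on m = -0.002 * (k - 10.2); k in percent.
    ks = [0.0, 4.0, 8.0, 12.0]
    ms = [-0.002 * (x - 10.2) for x in ks]
    print(round(zero_crossing(ks, ms), 3))  # 10.2
```

With real slopes that carry statistical errors, a weighted fit would be the natural refinement; the unweighted version is kept here for brevity.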

Program Management

Management & Administration – The primary management and administration effort is to ensure that tasks are properly defined and assigned, that the resources needed to perform each task are available when needed, and that resource efficiency is tracked to accomplish the task requirements and achieve IceCube’s scientific objectives. Efforts include:

· A complete re-baseline of the IceCube M&O Work Breakdown Structure to reflect the structure of the principal resource coordination entity, the IceCube Coordination Committee.
· The PY2 M&O Plan was submitted in May 2017.
· The detailed M&O Memorandum of Understanding (MoU) addressing the responsibilities of each collaborating institution was revised for the collaboration meeting in Madison, WI, May 1–5, 2017.

IceCube M&O – PY2 (FY2017/FY2018) Milestones Status:

| Milestone | Month |
|---|---|
| Revise the Institutional Memorandum of Understanding (MOU v22.0) – Statement of Work and Ph.D. Authors head count for the spring collaboration meeting | April 2017 |
| Report on Scientific Results at the Spring Collaboration Meeting | May 1–5, 2017 |
| Submit for NSF approval a revised IceCube Maintenance and Operations Plan (M&OP) and send the approved plan to non-U.S. IOFG members | May 2017 |
| Revise the Institutional Memorandum of Understanding (MOU v23.0) – Statement of Work and Ph.D. Authors head count for the fall collaboration meeting | September 2017 |
| Report on Scientific Results at the Fall Collaboration Meeting | October 2–6, 2017 |
| Submit for NSF approval a mid-year report describing progress made and work accomplished based on objectives and milestones in the approved annual M&O Plan | September 30, 2017 |
| Revise the Institutional Memorandum of Understanding (MOU v24.0) – Statement of Work and Ph.D. Authors head count for the spring collaboration meeting | April 2018 |

Engineering, Science & Technical Support – Ongoing support for the IceCube detector continues with the maintenance and operation of the South Pole Systems, the South Pole Test System, and the Cable Test System. The latter two systems are located at the University of Wisconsin–Madison and enable the development of new detector functionality as well as investigations into various operational issues, such as communication disruptions and electromagnetic interference. Technical support provides for coordination, communication, and assessment of impacts of activities carried out by external groups engaged in experiments or potential experiments at the South Pole. The IceCube detector performance continues to improve as we restore individual DOMs to the array at a faster rate than problem DOMs are removed during normal operations.

Education & Outreach (E&O) – The IceCube Collaboration's E&O efforts, which have had significant outcomes, are organized around four main themes:

1) Reaching motivated high school students and teachers through IceCube Masterclasses and middle school students with the South Pole Experiment Contest

2) Providing intensive research experiences for teachers (in collaboration with PolarTREC) and for undergraduate students (NSF science grants, International Research Experience for Students (IRES), and Research Experiences for Undergraduates (REU) funding)

3) Engaging the public through web and print resources, graphic design, webcasts with IceCube staff at the Pole, and displays

4) Developing and implementing semiannual communication skills workshops held in conjunction with IceCube Collaboration meetings

The IceCube Masterclasses continue to be promoted within the collaboration as well as externally at national and international levels. The 2017 edition, held on March 8, 12 and 22, included 15 institutions and over 200 students. A workshop featuring the IceCube Masterclasses was given at the 2017 summer meeting of the American Association of Physics Teachers in Cincinnati, Ohio, drawing attendees from diverse institutions, including high schools, community colleges, and comprehensive and research universities. A new project for middle school students, the South Pole Experiment Contest (spexperiment.icecube.wisc.edu), was launched based on the 2016–17 contest organized in Belgium by IceCube graduate student Gwenhael De Wasseige. In this year’s event, students from the US, Belgium, and Germany will compete for the chance to have an experiment they designed performed at the South Pole.

Science teacher Lesley Anderson from High Tech High in Chula Vista, CA, will deploy to the South Pole in November-December 2017 with IceCube through the PolarTREC program. Over the summer, Lesley worked with 2016 IceCube PolarTREC teacher Kate Miller and AMANDA TEA teacher Eric Muhs. They developed and delivered a nine-day math-science enrichment course that included IceCube science for the UW–River Falls Upward Bound program (July 10-21, 2017).

Eight undergraduate students, four each at Vrije Universiteit Brussel and Universität Mainz, had ten-week research experiences in the summer of 2017, supported by NSF IRES funding. The students came from UWRF, UW–Madison, Michigan State, Penn State, South Dakota School of Mines and Technology, and Drexel University. From over 60 applicants, UWRF’s NSF astrophysics REU program selected three men, all from underrepresented groups, and three women for ten-week summer 2017 research experiences, which included attending the IceCube software and science boot camp held at WIPAC. Multiple IceCube institutions also supported research opportunities for undergraduates. Four of the students from the 2016 UWRF IRES program attended the IceCube Collaboration meeting in May 2017 at UW–Madison.

The IceCube scale model constructed at Drexel University was seen by thousands of people at the World Science Festival in New York City on June 4, 2017. IceCube Collaborators from Drexel, Stony Brook, UW–Madison, and UWRF fielded questions from large crowds for about 10 hours. More transportable 1 m × 1 m × 1 m models are under construction at York University with art/science professor Mark-David Hosale and at the University of Maryland, Stockholm University, and Drexel University.

Progress on the NSF Advancing Informal STEM Learning project, a joint WIPAC and Wisconsin Institutes for Discovery project to create interactive and immersive learning programs based on touch tables and virtual reality devices, was displayed at the spring IceCube Collaboration meeting in Madison. The feedback from users at the collaboration meeting was largely positive, and helpful suggestions were submitted by viewers for consideration.

The E&O team continues to work closely with the communication team. Science news summaries of IceCube publications, written at a level accessible to science-literate but nonexpert audiences, are produced and posted regularly on the IceCube website and highlighted on social media. Eleven research news articles were published in the review period along with a dozen project updates and weekly news items on IceCube activities at the South Pole. The IceCube website has an average of 20,000 page views per month, with about 73% new visitors. Followers on Facebook and Twitter have grown by about 7%.

Continuing our efforts to increase the production of multimedia resources for outreach and communications, we published two new videos. The first, which premiered during the APS March Meeting, is an introduction to IceCube that highlights the successful construction of the first gigaton neutrino detector and summarizes IceCube's main contributions to neutrino astronomy. The second, produced at WIPAC and launched during the IceCube Collaboration week in May, focuses more on what we have learned with IceCube so far and on potential discoveries ahead, including the need for a larger detector. Videos taken by IceCube winterovers and PolarTREC teachers also add to our engaging multimedia content. IceCube's YouTube channel reached 100,000 views during this period, and the audience is even larger when videos about IceCube hosted by other organizations and platforms are taken into account. For example, two videos, one by our previous PolarTREC teacher and the other on the TED channel, have reached more than 300,000 views.

We hosted the fifth edition of our communications training workshop, a professional development program that also aims to extend the reach of IceCube outreach by engaging our collaborators in more inclusive and better-targeted communication strategies.

Activities under the guidance of the IceCube diversity and inclusion task force have also grown. The May collaboration week in Madison featured an invited talk on gender bias in recruiting. The IceCube women's meetings were reinstated both to improve mentoring of our female collaborators and to identify actions that increase diversity and inclusion in our team. Initial metrics for assessing gender diversity in IceCube were presented to the collaboration, and a strategy to make these metrics sustainable and to extend them to other diversity demographics is under discussion.

Section III – Project Governance and Upcoming Events

The detailed M&O institutional responsibilities and Ph.D. author head count are revised twice a year at the time of the IceCube Collaboration meetings. They are formally approved as part of the institutional Memorandum of Understanding (MoU) documentation. The MoU was last revised in April 2017 for the Spring collaboration meeting in Madison, WI (v22.0), and the next revision (v23.0) will be posted in September 2017 at the Fall collaboration meeting in Berlin, Germany.

IceCube Collaborating Institutions

As of September 2017, the IceCube Collaboration consists of 48 institutions in 12 countries (25 in the U.S. and Canada, 19 in Europe, and 4 in the Asia Pacific region).

The list of current IceCube collaborating institutions can be found at:

http://icecube.wisc.edu/collaboration/collaborators

IceCube Major Meetings and Events

IceCube Spring Collaboration Meeting – Madison, WI May 1–5, 2017

International Oversight and Finance Group – Madison, WI May 6–7, 2017

Science Advisory Committee Meeting – Pasadena, CA September 11–12, 2017

IceCube Fall Collaboration Meeting – Berlin, Germany October 2–7, 2017

IceCube Spring Collaboration Meeting – Atlanta, GA March 20–24, 2018


Acronym List

CnV Calibration and Verification

CPU Central Processing Unit

CVMFS CernVM File System

DAQ Data Acquisition System

DOM Digital Optical Module

E&O Education and Outreach

I3Moni IceCube Run Monitoring system

IceCube Live The system that integrates control of all of the detector’s critical subsystems; also “I3Live”

IceTray IceCube core analysis software framework, part of the IceCube core software library

MoU Memorandum of Understanding between UW–Madison and all collaborating institutions

PMT Photomultiplier Tube

PnF Processing and Filtering

SNDAQ Supernova Data Acquisition System

SPE Single photoelectron

SPS South Pole System

SuperDST Super Data Storage and Transfer, a highly compressed IceCube data format

TDRSS Tracking and Data Relay Satellite System, a network of communications satellites

TFT Board Trigger Filter and Transmit Board

WIPAC Wisconsin IceCube Particle Astrophysics Center



A primary focus of the calibration group continues to be improving our knowledge of the glacial ice properties. The ice scattering is known to be anisotropic: scattering is stronger along the same axis as the ice tilt and weaker along the orthogonal axis. This anisotropy can be measured using both LED flasher data and down-going atmospheric muons. The latter method uses Monte Carlo (MC) simulations with different anisotropy strengths and compares the resulting azimuthal charge distributions between data and simulation for increasing muon track-to-DOM distances, as shown in Fig. 1. By decomposing the ratio of data to MC into its Fourier components, the amplitude of the mode corresponding to the anisotropy (w = 2) is determined for each distance bin and for each simulated anisotropy strength. If the simulated anisotropy strength matches the one realized in the data, this amplitude should not change with distance, i.e., the slope of amplitude versus distance should be zero. The anisotropy strength can therefore be determined by finding the simulated strength for which the slope of the linear trend between amplitude and distance vanishes. The optimal anisotropy strength, approximately 10.2% (marked by the red ‘x’ in Fig. 2), is consistent with the optimal value determined using flasher data, providing confidence in our ability to measure the anisotropy.
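The muon-based procedure described above can be illustrated with a short sketch. This is a minimal toy example in Python under stated assumptions, not IceCube's calibration code: the charge model (`toy_charge`, a cos(2φ) modulation that grows linearly with distance), the azimuthal binning, the distance bins, the distance scale, the grid of simulated strengths, and the synthetic "data" strength are all hypothetical. It further assumes that φ = 0 lies along the tilt-aligned axis, so the w = 2 amplitude can be taken as a signed cosine coefficient, and that the zero-slope strength is located by linear interpolation between the two bracketing simulations.

```python
import math

# Hypothetical settings for this toy example (not IceCube values).
N_AZIMUTH_BINS = 36
DISTANCES_M = [20.0 * i for i in range(1, 11)]  # track-to-DOM distance bins, 20 to 200 m
DISTANCE_SCALE_M = 100.0  # scale over which the toy modulation grows
MODE = 2  # Fourier mode associated with the anisotropy (w = 2)


def azimuth_centers(n):
    """Azimuthal bin centres in radians; phi = 0 is assumed to be the tilt-aligned axis."""
    return [2.0 * math.pi * (k + 0.5) / n for k in range(n)]


def toy_charge(phis, distance, strength):
    """Toy stand-in for simulated charge: a cos(2*phi) modulation growing with distance."""
    g = distance / DISTANCE_SCALE_M
    return [1.0 + strength * g * math.cos(MODE * phi) for phi in phis]


def mode_amplitude(ratio, phis, mode=MODE):
    """Signed cosine coefficient of one Fourier mode of a binned azimuthal ratio."""
    n = len(ratio)
    return 2.0 / n * sum(r * math.cos(mode * phi) for r, phi in zip(ratio, phis))


def linear_slope(xs, ys):
    """Ordinary least-squares slope of ys versus xs."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def slope_for_strength(data_by_distance, sim_strength, phis):
    """Slope of the w = 2 amplitude of data/MC versus distance for one simulated strength."""
    amplitudes = []
    for d in DISTANCES_M:
        mc = toy_charge(phis, d, sim_strength)
        ratio = [q_data / q_mc for q_data, q_mc in zip(data_by_distance[d], mc)]
        amplitudes.append(mode_amplitude(ratio, phis))
    return linear_slope(DISTANCES_M, amplitudes)


def best_strength(data_by_distance, sim_strengths, phis):
    """Simulated strength where the slope crosses zero; sim_strengths must be ascending."""
    slopes = [slope_for_strength(data_by_distance, s, phis) for s in sim_strengths]
    pairs = list(zip(sim_strengths, slopes))
    for (s0, k0), (s1, k1) in zip(pairs, pairs[1:]):
        if k0 == 0.0:
            return s0
        if k0 * k1 < 0.0:
            # Root of the straight line through (s0, k0) and (s1, k1).
            return s0 + (s1 - s0) * k0 / (k0 - k1)
    if pairs[-1][1] == 0.0:
        return pairs[-1][0]
    raise ValueError("slope does not change sign over the simulated strengths")


if __name__ == "__main__":
    phis = azimuth_centers(N_AZIMUTH_BINS)
    true_strength = 0.10  # synthetic "data" for the demo only
    data = {d: toy_charge(phis, d, true_strength) for d in DISTANCES_M}
    grid = [0.0, 0.04, 0.08, 0.12, 0.16]  # hypothetical simulated strengths
    print(f"estimated anisotropy strength: {best_strength(data, grid, phis):.3f}")
```

Using a signed coefficient rather than the magnitude of the Fourier mode is what lets the slope change sign as the simulated strength passes through the value realized in the data. A fuller treatment would presumably also carry statistical uncertainties on each amplitude and use a weighted fit.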
