Searches for Neutrinos from Gamma Ray Bursts with the

AMANDA-II and IceCube Detectors

by

Erik Albert Strahler

A dissertation submitted in partial fulfillment of the

requirements for the degree of

Doctor of Philosophy

(Physics)

at the

University of Wisconsin – Madison

2009

© Copyright by Erik Albert Strahler 2009

All Rights Reserved

Searches for Neutrinos from Gamma Ray Bursts with the

AMANDA-II and IceCube Detectors

Erik Albert Strahler

Under the supervision of Professor Albrecht Karle

At the University of Wisconsin – Madison

Gamma-ray bursts (GRBs) are the most energetic phenomena in the universe, releasing isotropic-equivalent energies of $O(10^{52})$ ergs over short time scales. While the observed keV-GeV photons can be explained entirely by electromagnetic processes, it is natural to consider the implications of concurrent hadronic (proton) acceleration in these sources. Such acceleration makes GRBs one of the leading candidate sources of the ultra-high-energy cosmic rays, as well as of associated high-energy (TeV-PeV) neutrinos. We have searched for such neutrinos from 85 northern-sky GRBs with the AMANDA-II neutrino detector. No signal is observed, and upper limits are set on the emission from these sources. Additionally, we have searched for neutrinos from 41 northern-sky GRBs using the 22-string configuration of the IceCube neutrino telescope, employing an unbinned maximum-likelihood method and individual modeling of the predicted emission from each burst. The results of this search are consistent with the background-only hypothesis, and we set upper limits on the emission.

Albrecht Karle (Adviser)


High-energy experimental physics has largely become a field of collaborations, and neutrino

astronomy in particular involves the combined efforts of many scientists from diverse institutions. I

would like to acknowledge the hard work of the many physicists in the IceCube collaboration, without

whom these analyses would have been impossible.

I owe thanks to Albrecht Karle for first interesting me in gamma-ray bursts and for his advice over the years. I am grateful for the opportunity he gave me to join IceCube and contribute.

Francis Halzen provided much useful insight on matters theoretical, and Gary Hill was of great

help with many questions regarding statistics and event selection methods. Chad Finley helped me

to (finally) figure out the log-likelihood analysis, and was a great sounding board for working out

problems with signal weighting. Jon Dumm contributed invaluable advice on scripts and IceCube

software.

The members of the GRB working group deserve special thanks, especially Ignacio Taboada.

He was ever ready with suggestions, feedback, and advice. In analyzing IceCube data, I have been

very fortunate to collaborate with Alexander Kappes and Phil Roth. Working with them has been

more productive (and much more enjoyable) than it would have been alone.

Thanks of a different sort go to Kael Hanson and Hagar Landsman. Kael involved me in DOM testing when I first joined the collaboration, and Hagar helped me learn the intricacies of the testing framework. Without them I never would have had the opportunity to visit the South Pole, one of the most remarkable experiences of my life.

Finally, I would like to thank my family and friends, both for encouraging me in my pursuit of

physics, and probably more importantly, for providing me with so many great experiences through

my years in Madison.


Contents

List of Tables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . viii

List of Figures . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . x

1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.1 The Cosmic Ray Connection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.1.1 Fermi Acceleration of Particles . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

1.2 Previous Work . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

1.3 Organization of the Thesis . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

2 Gamma-ray Bursts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

2.1 History . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

2.2 Fireball Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

2.2.1 Energy Spectra . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

2.2.2 Compactness . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

2.2.3 Emission Mechanism . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

2.2.4 Collimated Emission - Reducing the Energy Budget . . . . . . . . . . . . . . . 21

2.3 Neutrino Production . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

2.3.1 Prompt Neutrinos . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

2.3.2 Precursor Neutrinos . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

2.3.3 Afterglow Neutrinos . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

2.4 Neutrino Oscillation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31


3 Neutrino Detection and Reconstruction . . . . . . . . . . . . . . . . . . . . . . . . . . 33

3.1 Interaction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

3.2 Čerenkov Radiation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

3.3 Muon Energy Losses . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

3.4 Backgrounds . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

3.5 Ice Properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

3.6 Reconstruction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

3.6.1 Line-Fit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

3.6.2 Direct Walk . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

3.6.3 JAMS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

3.6.4 Pandel Likelihood . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

3.6.5 Bayesian Likelihood . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

3.6.6 Iterative Reconstruction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

3.6.7 Energy Reconstruction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

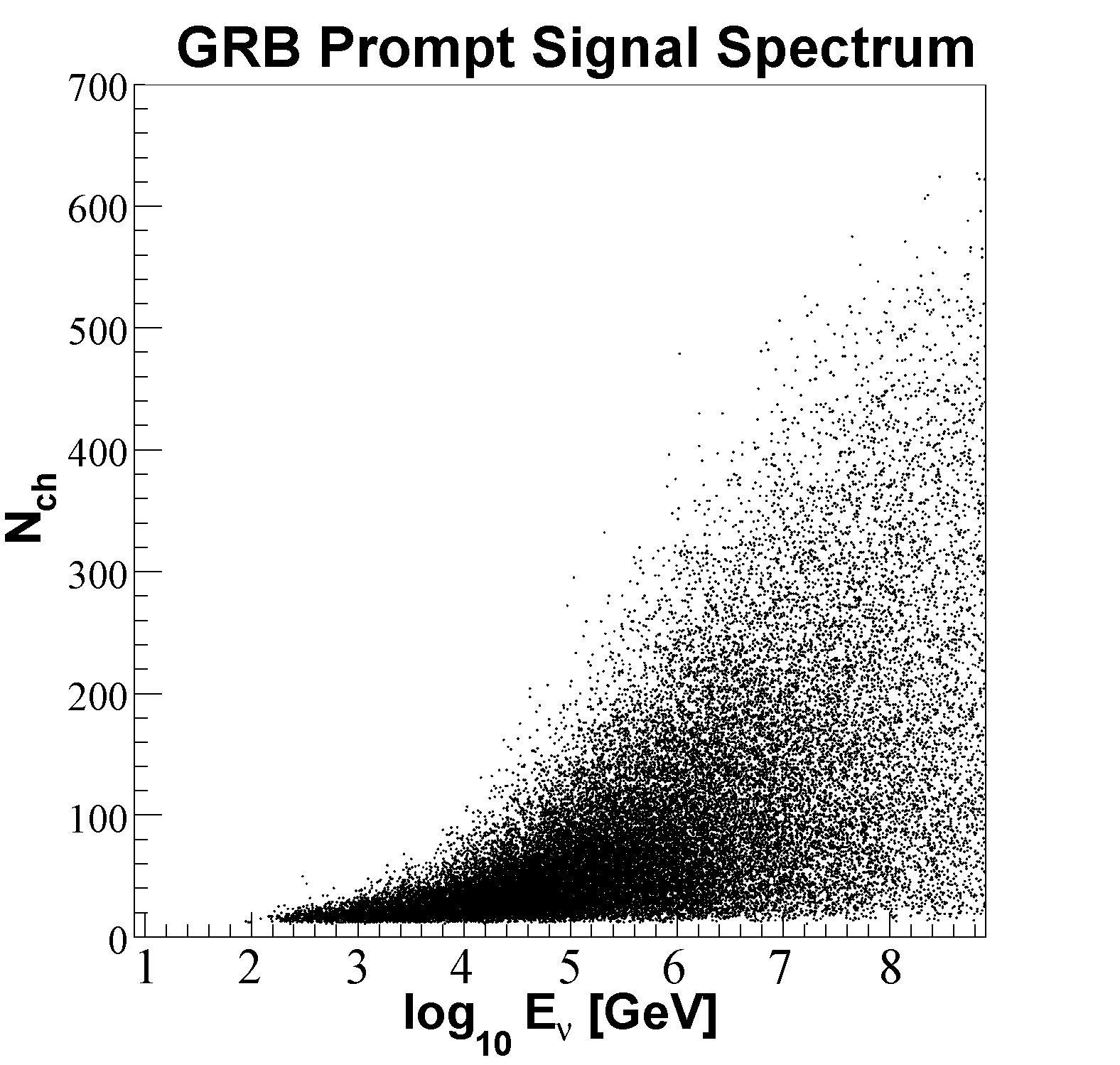

3.6.7.1 $N_{\mathrm{ch}}$ . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

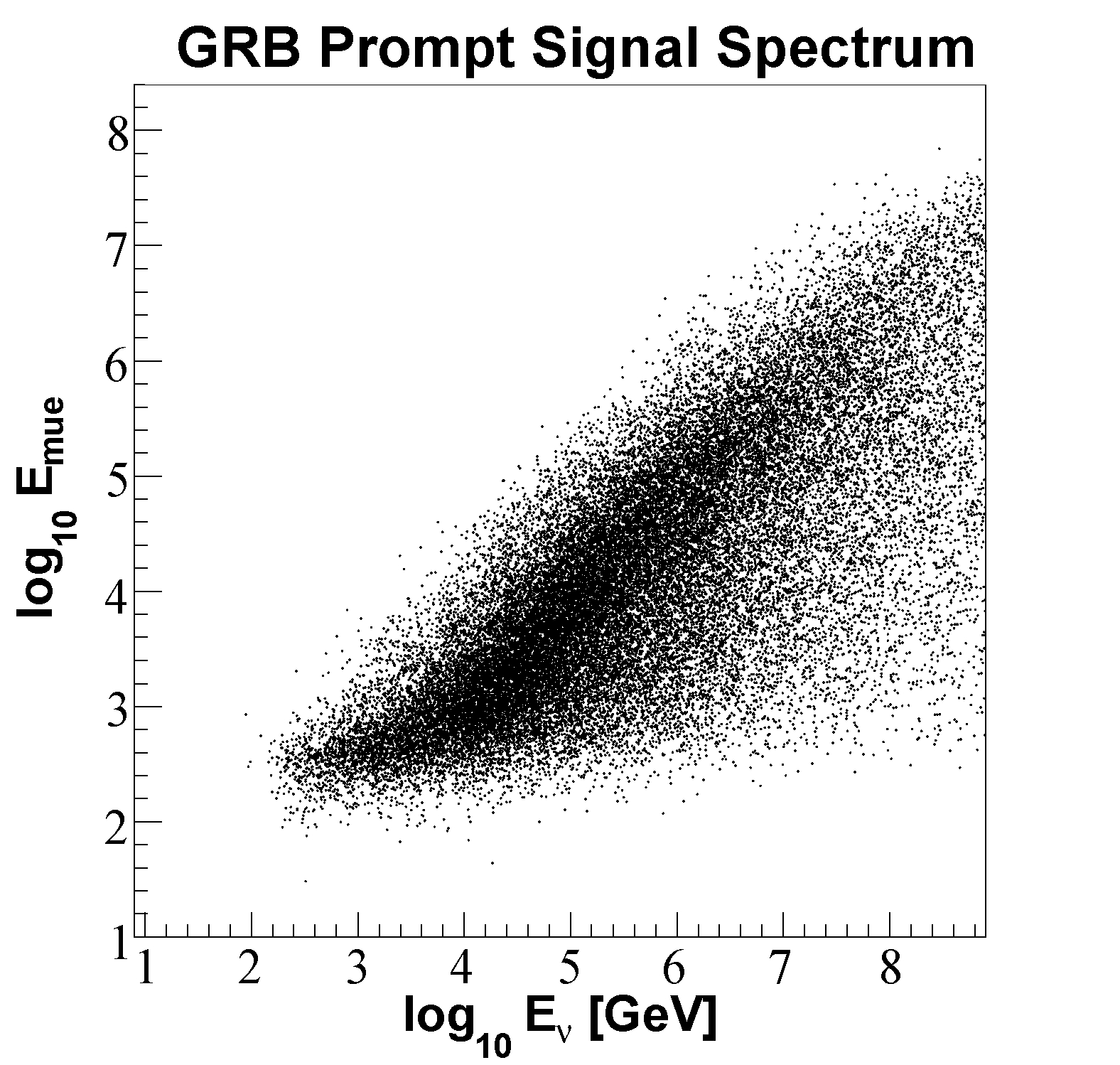

3.6.7.2 Mue . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

4 Detectors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

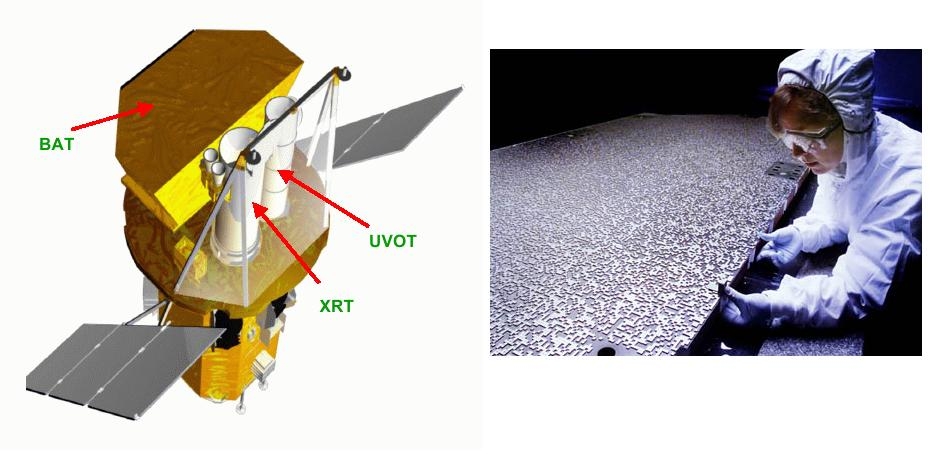

4.1 Satellites . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

4.1.1 Swift . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

4.1.2 HETE-II . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

4.1.3 INTEGRAL . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

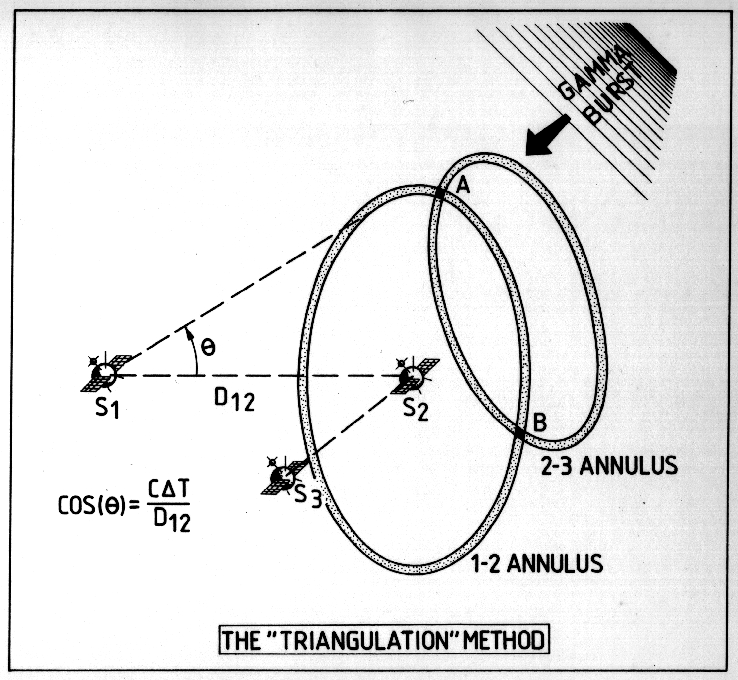

4.1.4 IPN3 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

4.1.5 Suzaku . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

4.1.6 AGILE . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

4.2 AMANDA-II . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

4.2.1 The Optical Module and µDAQ . . . . . . . . . . . . . . . . . . . . . . . . . . 57

4.2.2 Calibration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

4.3 IceCube . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

iv

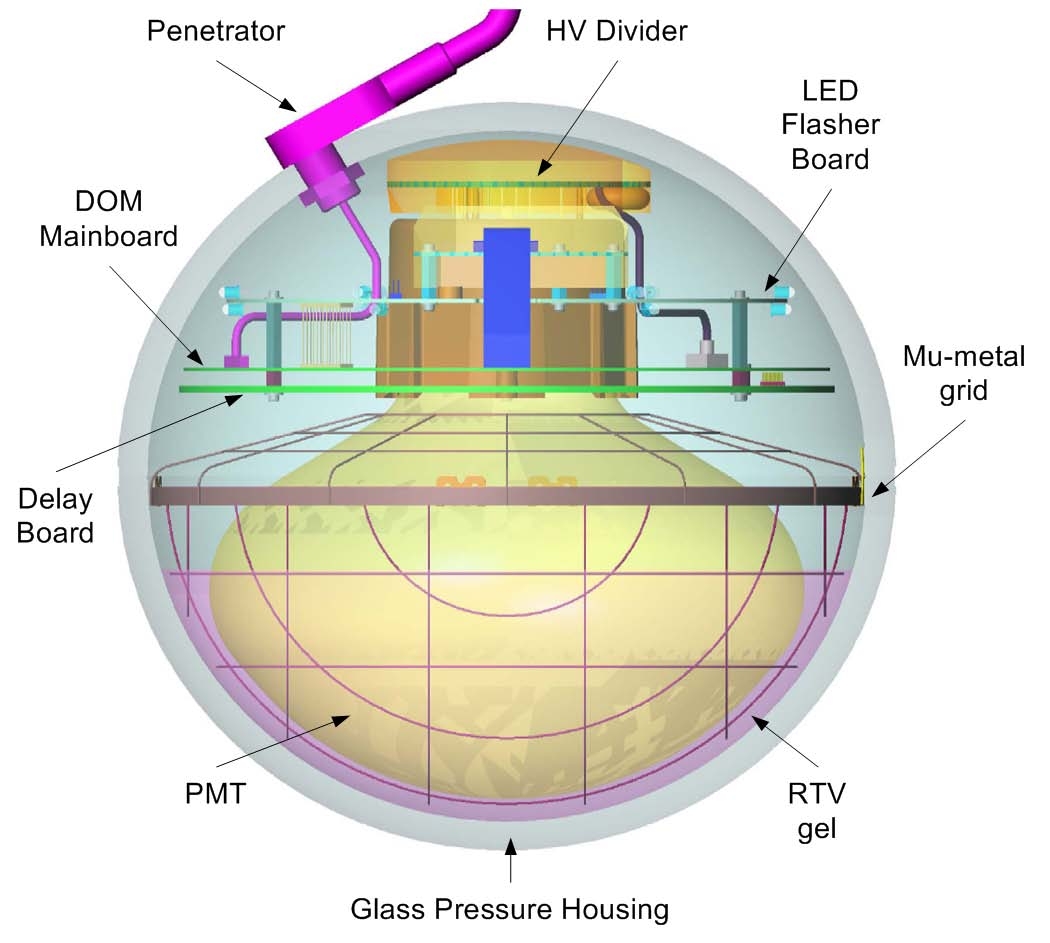

4.3.1 The Digital Optical Module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

4.3.2 IceCube DAQ . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

4.3.2.1 Local Coincidence . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

5 Gamma-Ray Burst Selection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

5.1 Satellites . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

5.2 Blindness . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

5.3 AMANDA-II . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

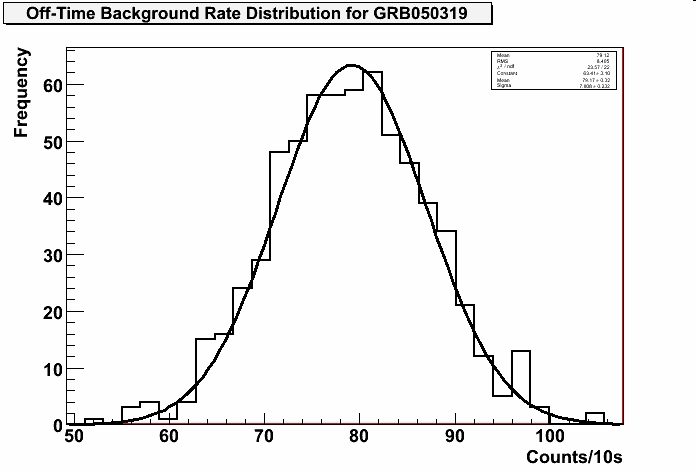

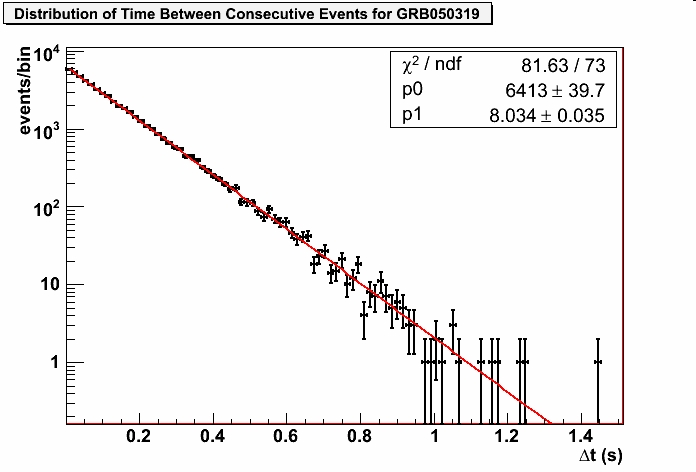

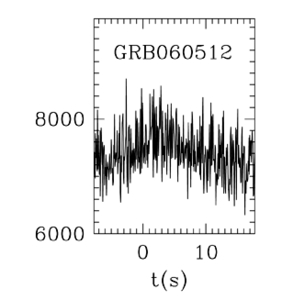

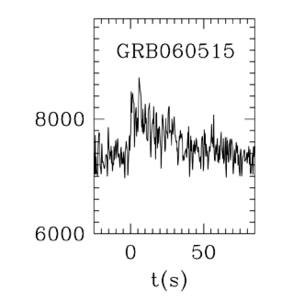

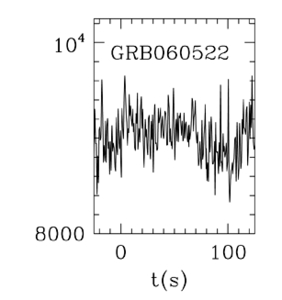

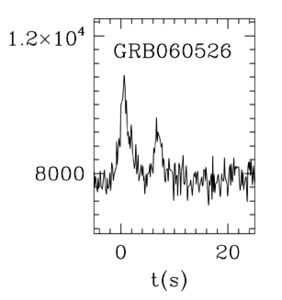

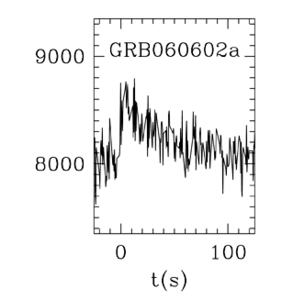

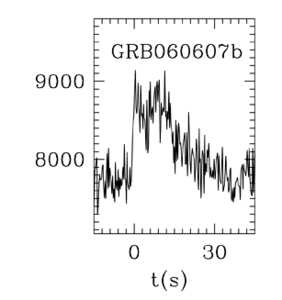

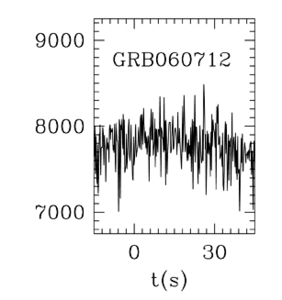

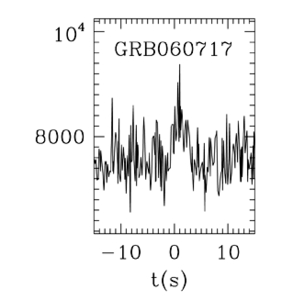

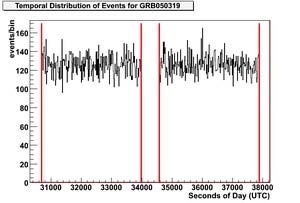

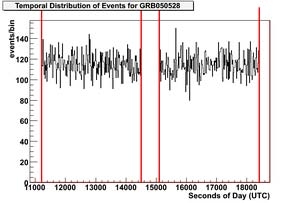

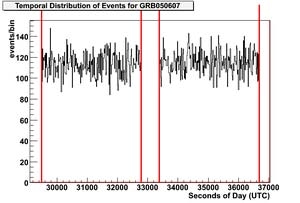

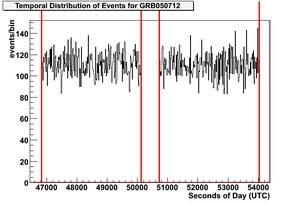

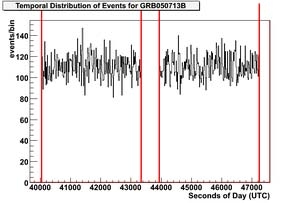

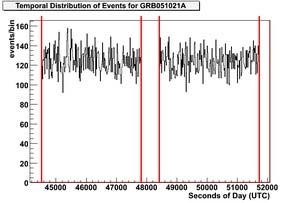

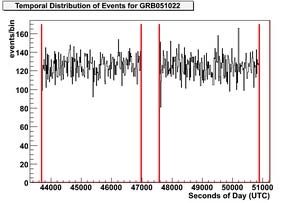

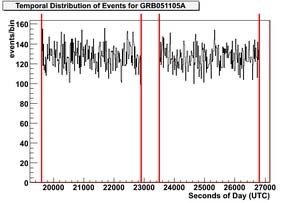

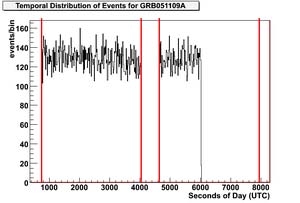

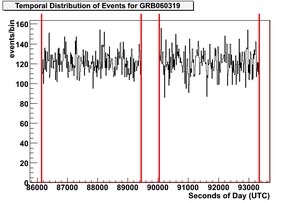

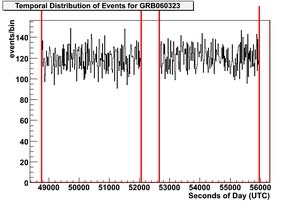

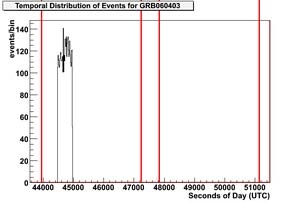

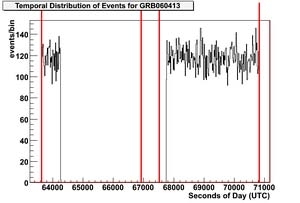

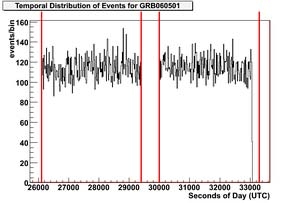

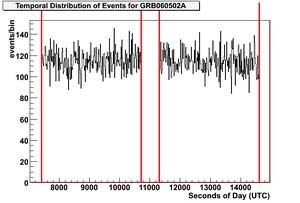

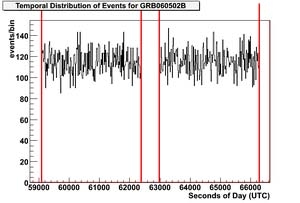

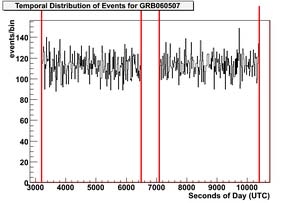

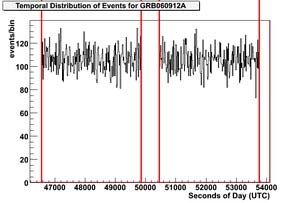

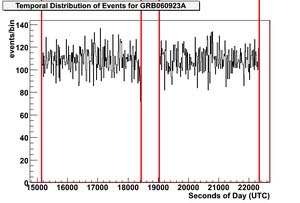

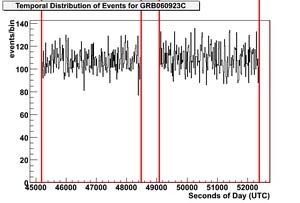

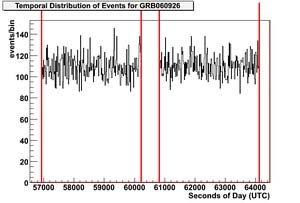

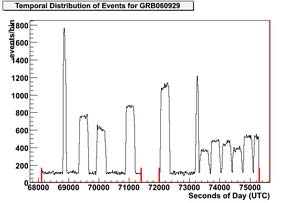

5.3.1 Detector Stability Criteria . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

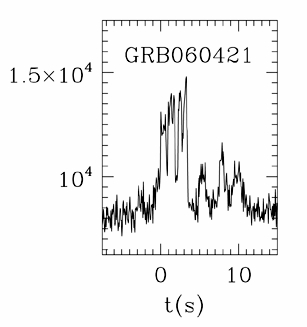

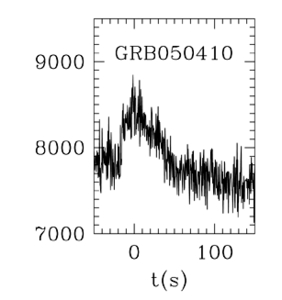

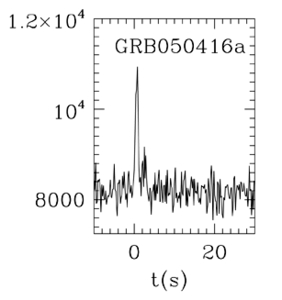

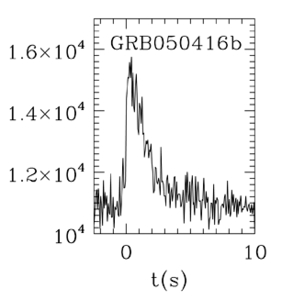

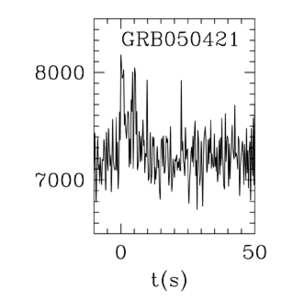

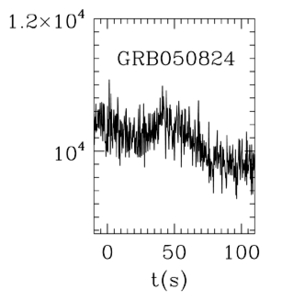

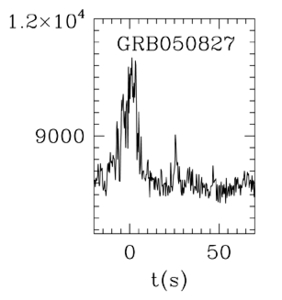

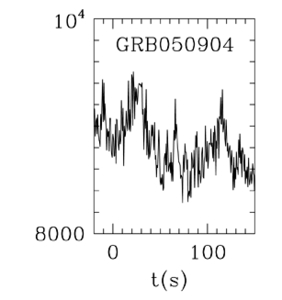

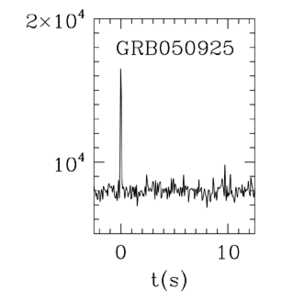

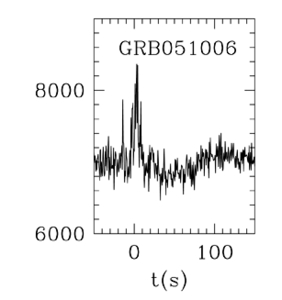

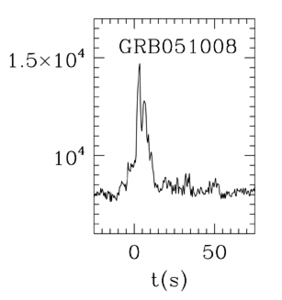

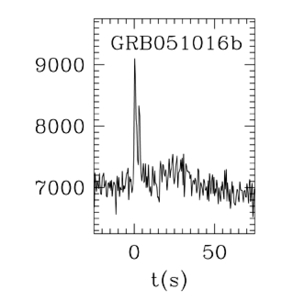

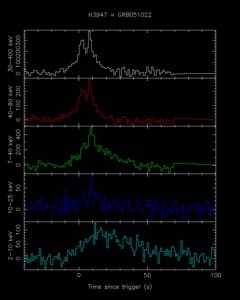

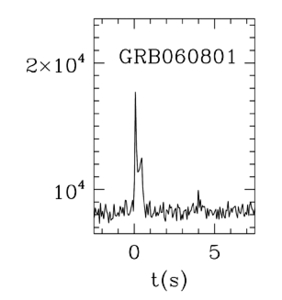

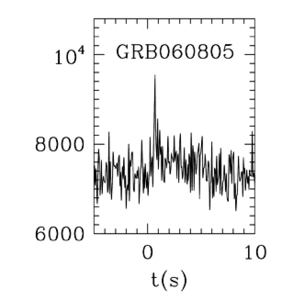

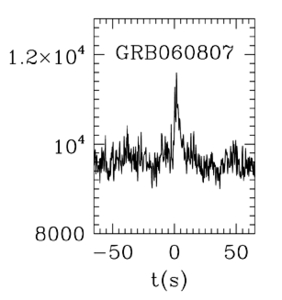

5.3.2 Burst Duration Determination . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

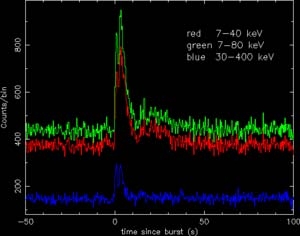

5.4 IceCube . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

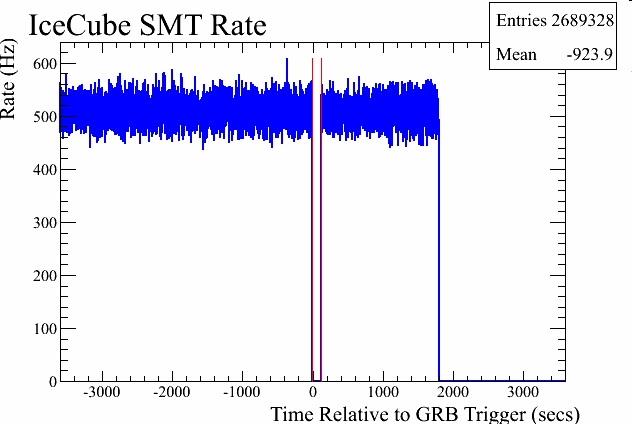

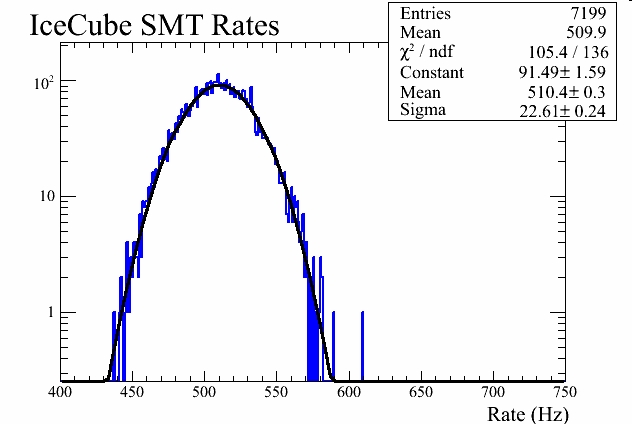

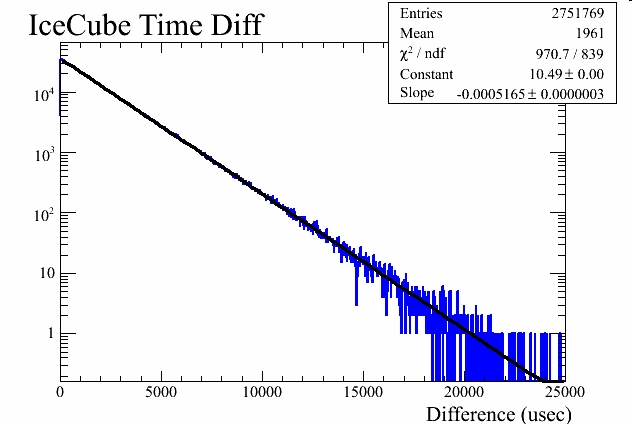

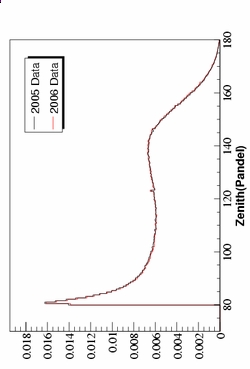

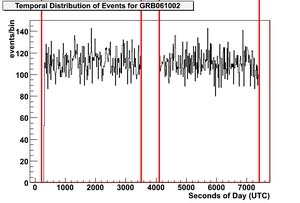

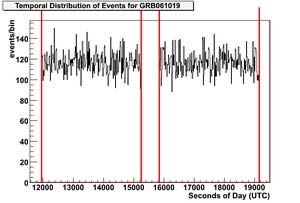

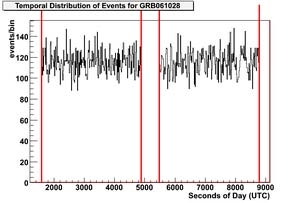

5.4.1 Detector Stability Criteria . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

5.4.2 Burst Duration Determination . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

6 Simulation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

6.1 Generators . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

6.2 Propagators . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

6.3 Detector Simulation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

6.3.1 AMANDA . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

6.3.2 IceCube . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

7 AMANDA-II Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

7.1 Filtering . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

7.1.1 Level 1 Filter . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

7.1.2 Level 2 Filter . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

7.1.3 Level 3 Filter . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

7.1.4 Flare Checking . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

7.1.5 Starting Dataset . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

7.2 Signal Spectrum . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

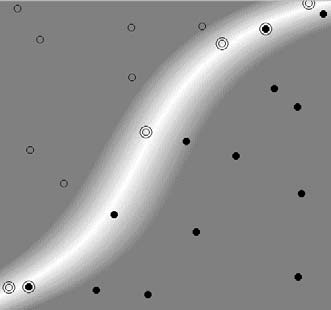

7.3 Event Selection Variables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

v

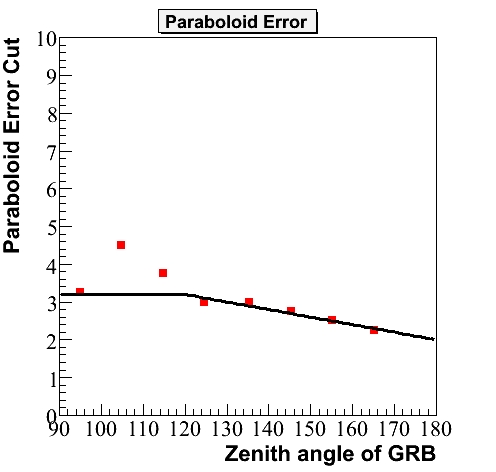

7.3.1 Paraboloid Sigma . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

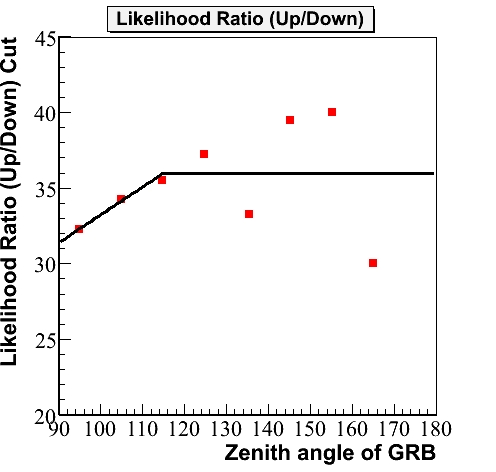

7.3.2 Likelihood Ratio . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

7.3.3 Space Angle . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 88

7.3.4 Other Variables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

7.3.5 Temporal Coincidence . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

7.4 Optimization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

7.5 Final Event Sample . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 98

7.6 Sensitivity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

7.7 Discovery Potential . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101

7.8 Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

8 IceCube Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

8.1 Filtering . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

8.1.1 Online Muon Filter / Level 1 Filter . . . . . . . . . . . . . . . . . . . . . . . . 108

8.1.2 Level 2 Filter . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 108

8.1.3 Level 3 Filter . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 108

8.1.4 Starting Event Sample . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

8.2 Signal Spectrum . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

8.2.1 Precursor Emission . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

8.2.2 Prompt Emission . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

8.2.3 Extended Window Search . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

8.3 Event Selection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

8.3.1 Reduced Log-Likelihood . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

8.3.2 Bayesian Likelihood Ratio . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

8.3.3 Paraboloid Sigma . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

8.3.4 Umbrella Likelihood Ratio . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

8.3.5 Split Reconstruction Minimum Zenith . . . . . . . . . . . . . . . . . . . . . . . 117

8.3.6 Direct Hits . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 118

8.3.7 Cut Selection and Neutrino Level Sample . . . . . . . . . . . . . . . . . . . . . 118


8.4 Unbinned Analysis Method . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 124

8.5 Sensitivity and Discovery Potential . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

8.5.1 Trials Factors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 132

8.6 Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

9 Systematic Error Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

9.1 Sources of Systematic Error . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

9.1.1 Neutrino Cross-Section . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

9.1.2 Muon Propagation / Earth Model . . . . . . . . . . . . . . . . . . . . . . . . . 137

9.1.3 Reconstruction Bias . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 138

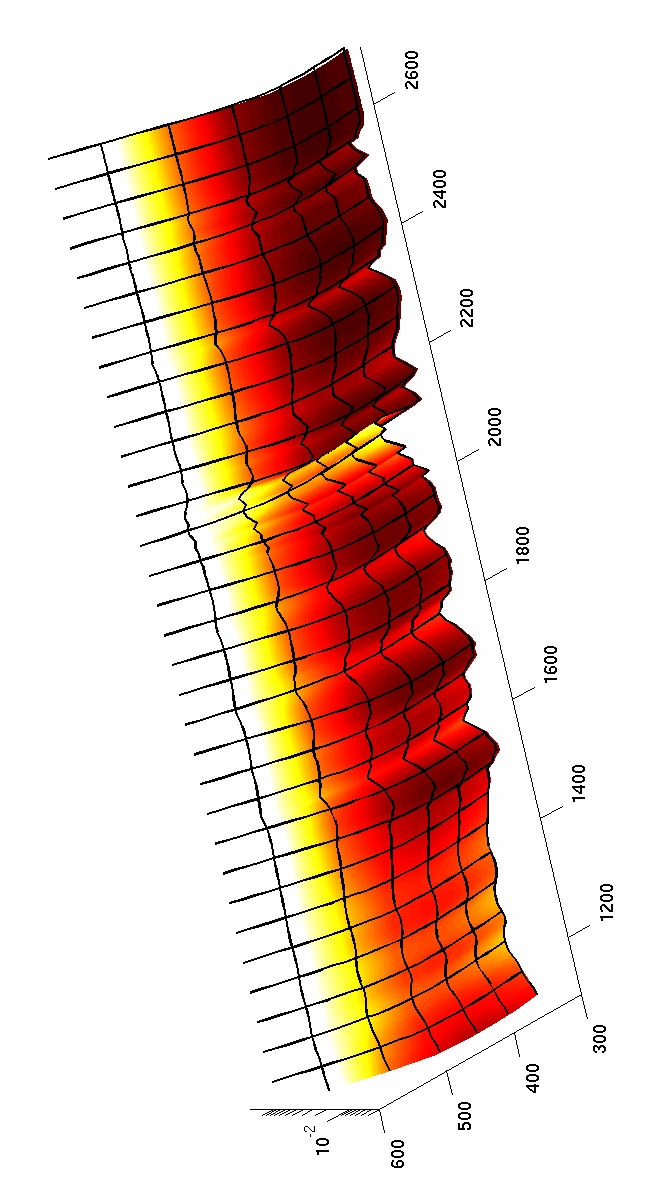

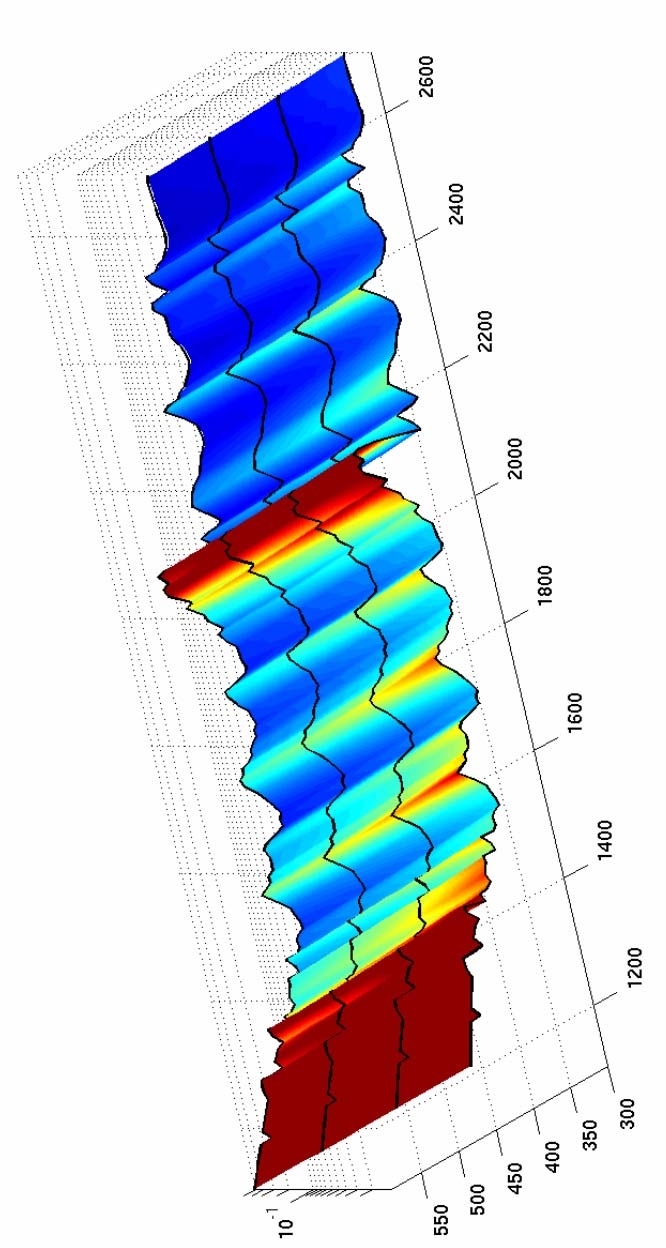

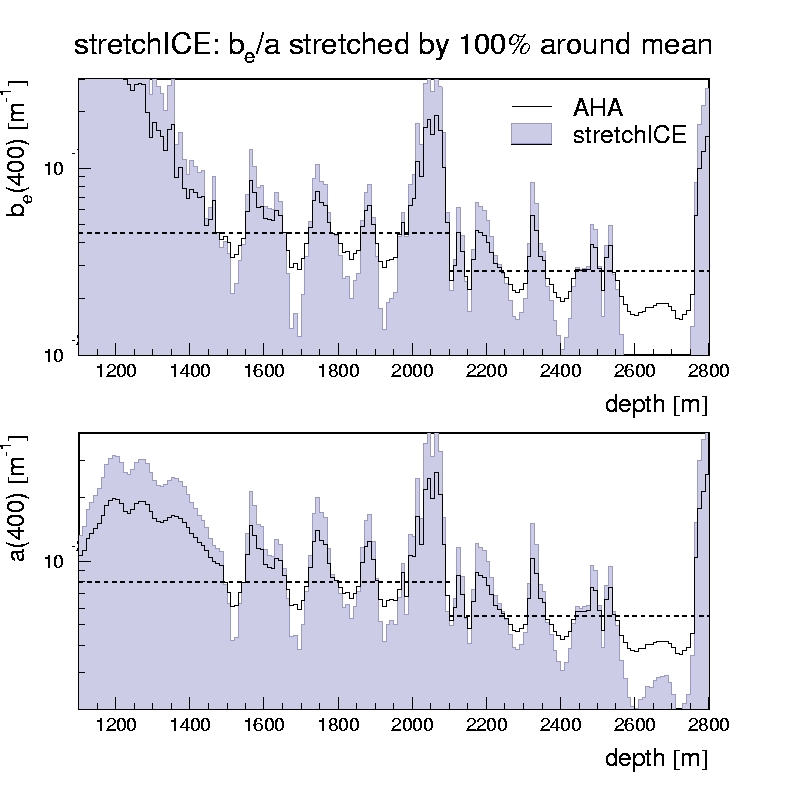

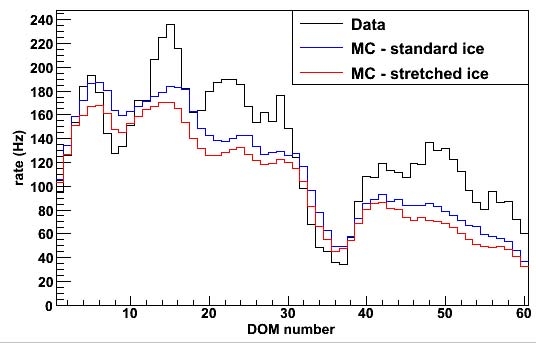

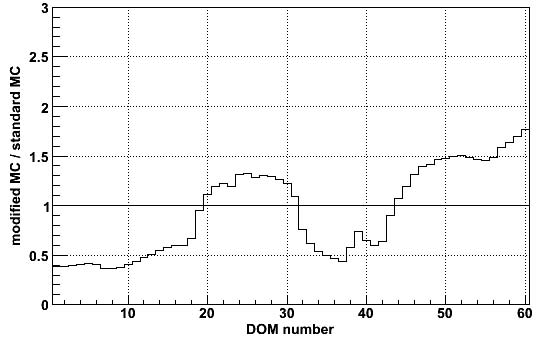

9.1.4 Ice Properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 138

9.1.5 Timing Resolution . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

9.1.6 (D)OM Efficiency . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 140

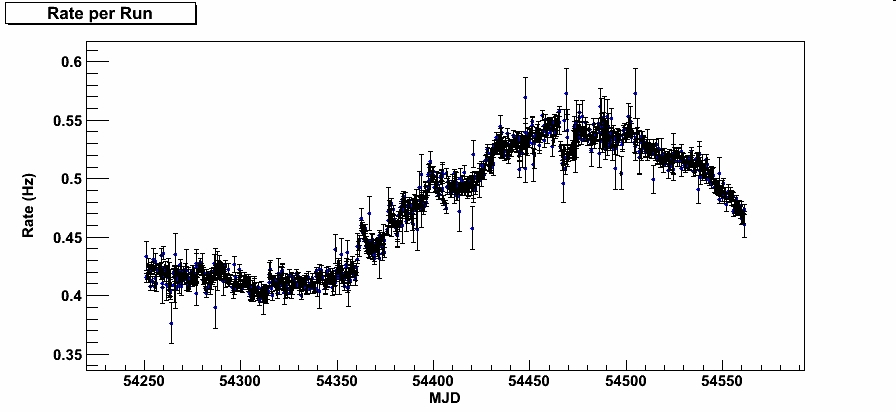

9.1.7 Seasonal Background Variations . . . . . . . . . . . . . . . . . . . . . . . . . . 140

10 Conclusions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 142

10.1 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 142

10.2 Discussion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 143

10.2.1 Previous Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 143

10.2.2 Sensitivity to gamma rays from GRBs . . . . . . . . . . . . . . . . . . . . . . . 147

10.3 Outlook . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 149

Bibliography . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 152

A List of Abbreviations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 160

B Sensitivity Studies for the Full Detector . . . . . . . . . . . . . . . . . . . . . . . . 163

B.1 Geometries . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 163

B.2 GRB Sample . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 163

B.3 Event Selection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 164


B.4 Method . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 166

C Event Selection with Support Vector Machines . . . . . . . . . . . . . . . . . . . . . 170

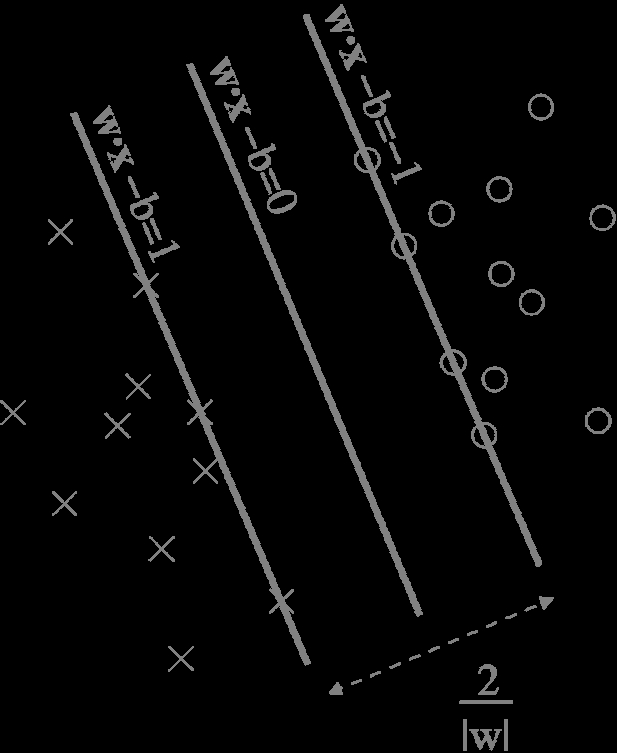

C.1 Fully Separable, Linear Case . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 171

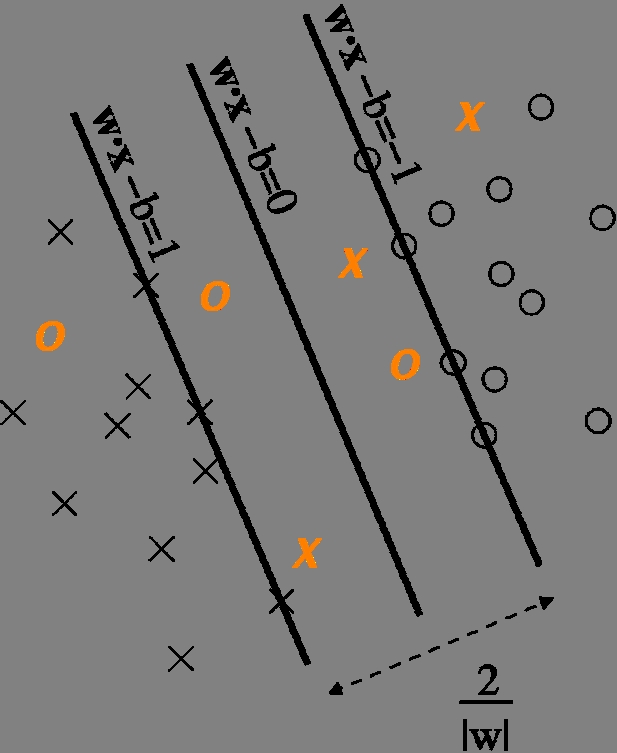

C.2 Non-Separable, Linear Case (Soft Margin) . . . . . . . . . . . . . . . . . . . . . . . . . 173

C.3 Non-Linear Case . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 175

C.4 Example Application to a GRB Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . 176

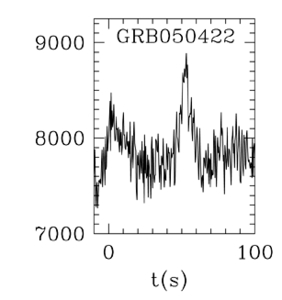

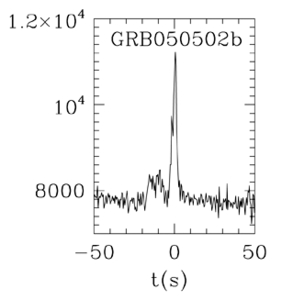

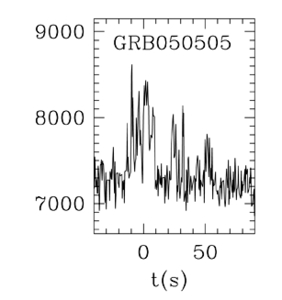

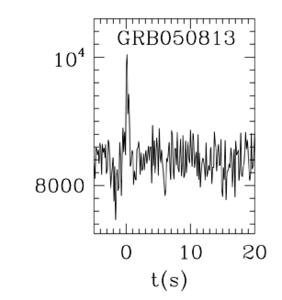

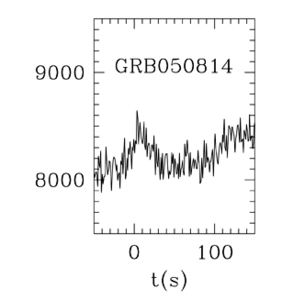

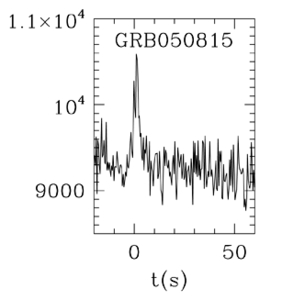

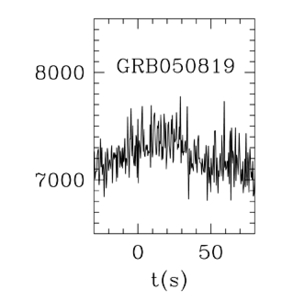

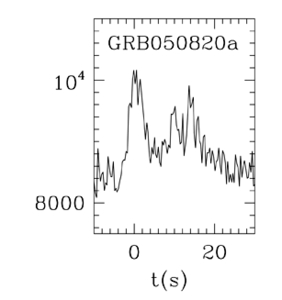

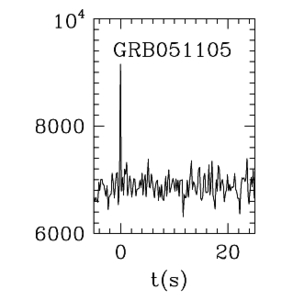

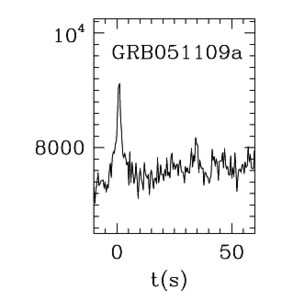

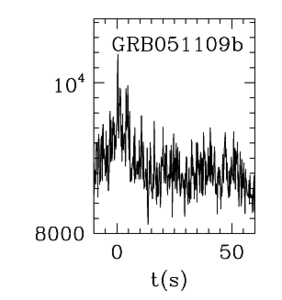

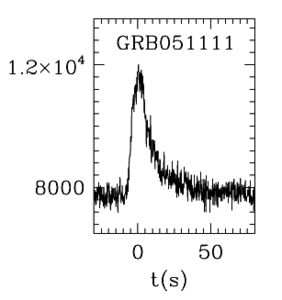

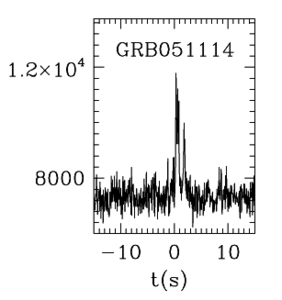

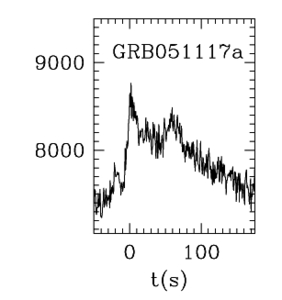

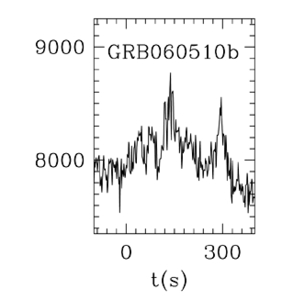

D Light Curves of 2005-2006 Northern Sky GRBs . . . . . . . . . . . . . . . . . . . . . . 184

E Stability Plots for 2005-2006 Northern Sky GRBs . . . . . . . . . . . . . . . . . . . . 187

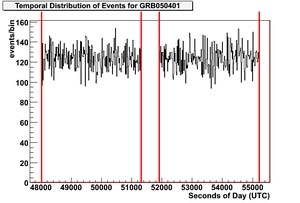

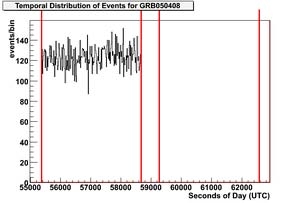

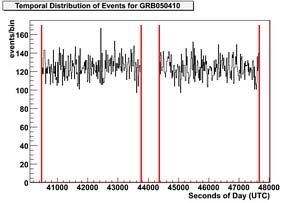

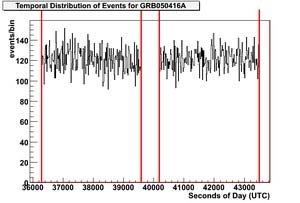

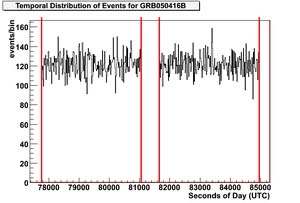

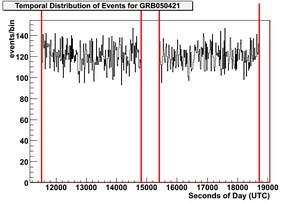

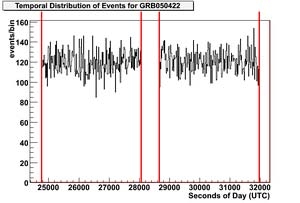

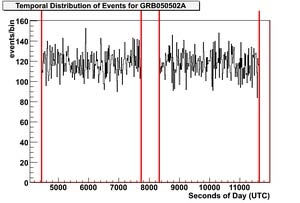

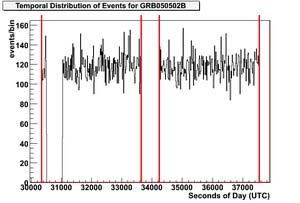

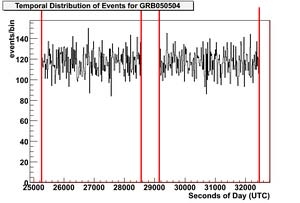

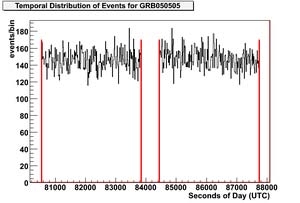

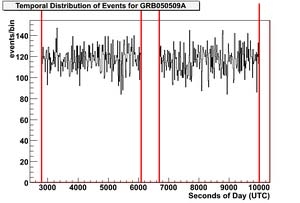

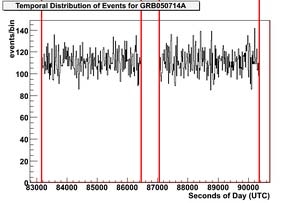

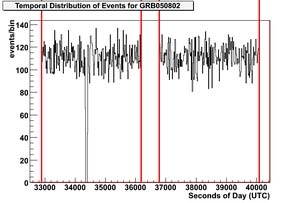

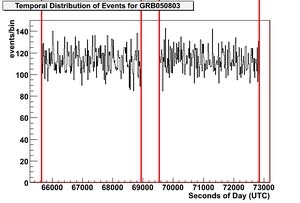

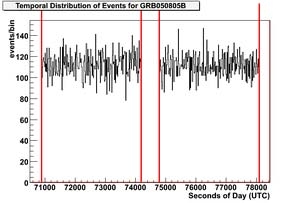

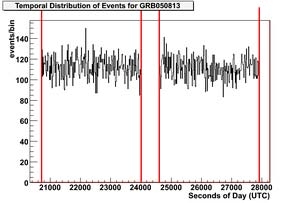

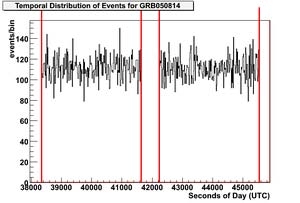

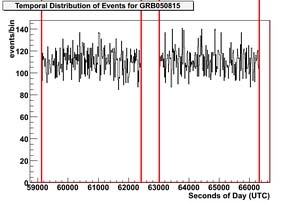

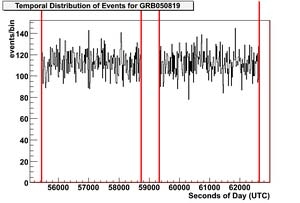

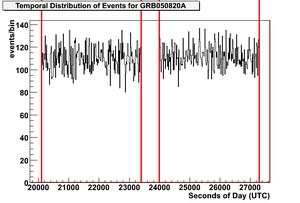

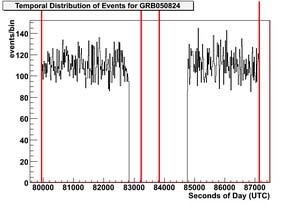

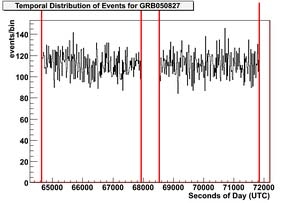

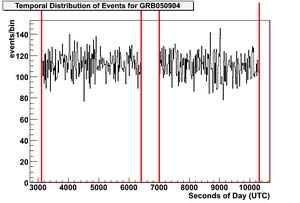

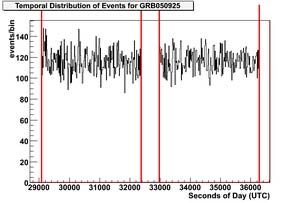

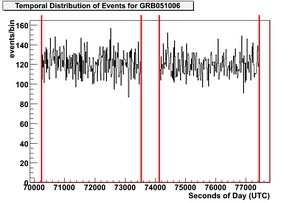

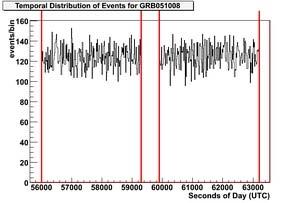

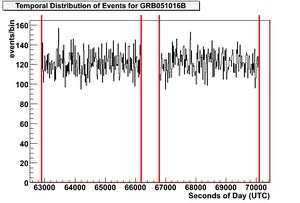

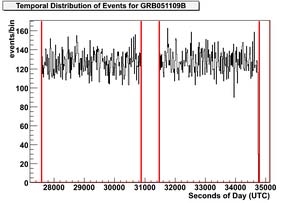

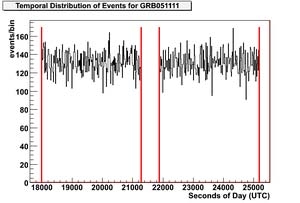

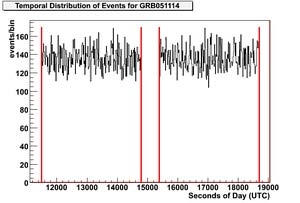

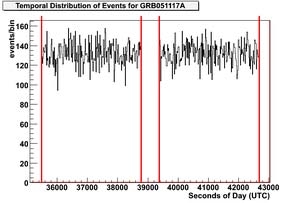

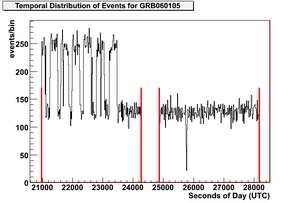

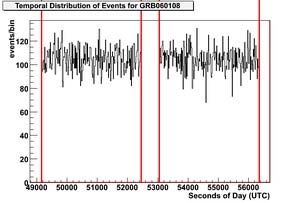

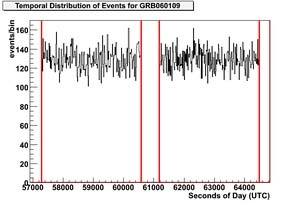

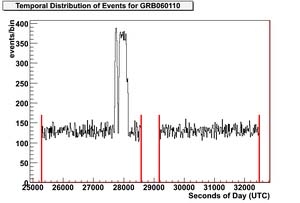

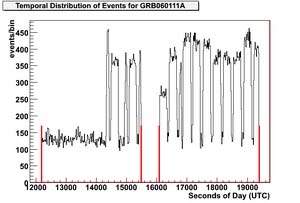

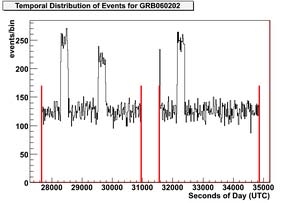

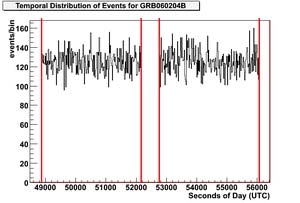

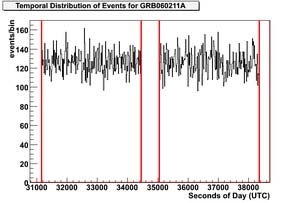

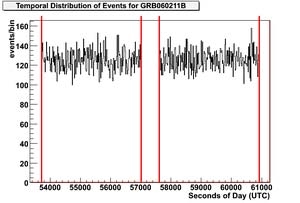

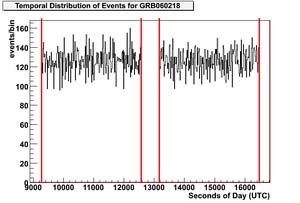

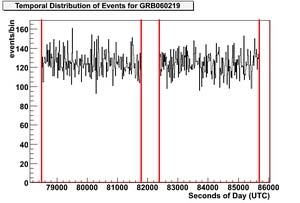

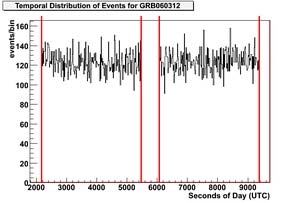

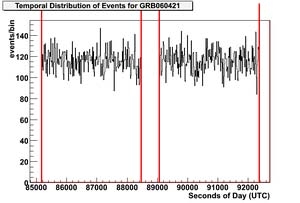

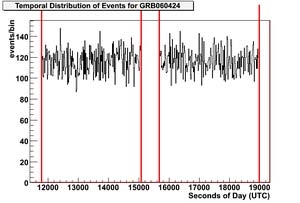

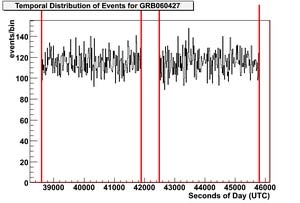

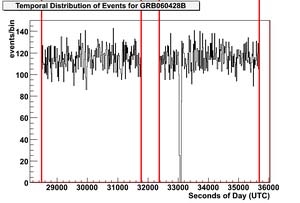

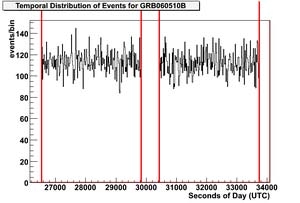

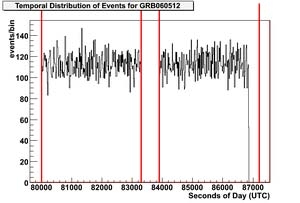

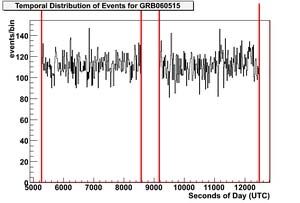

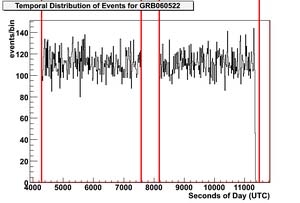

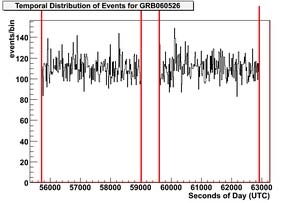

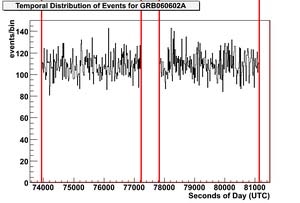

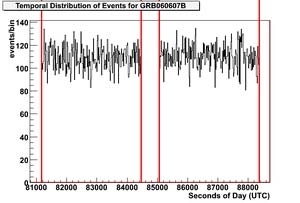

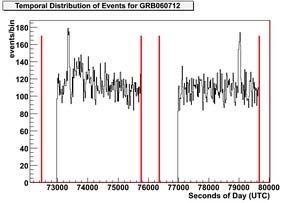

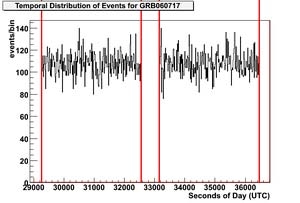

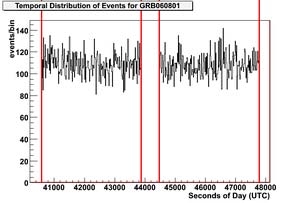

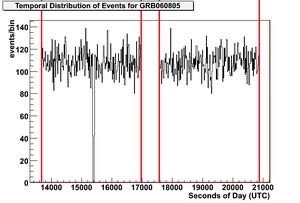

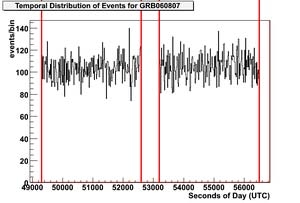

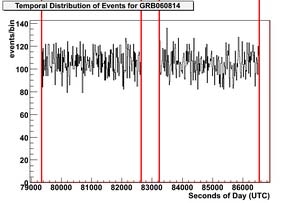

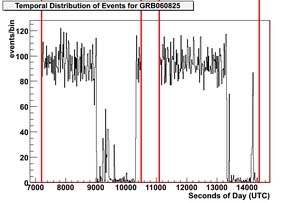

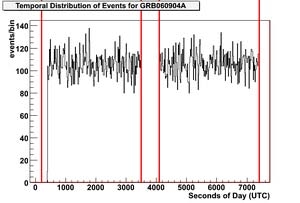

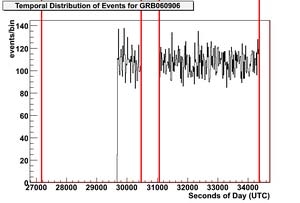

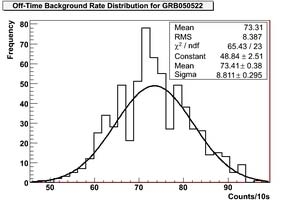

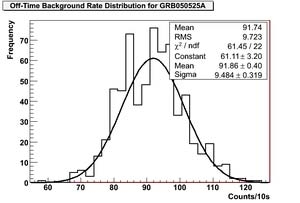

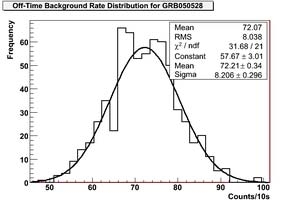

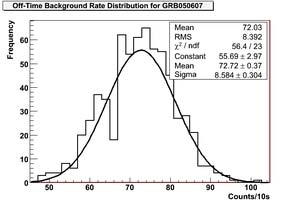

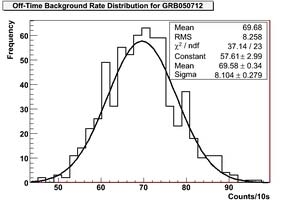

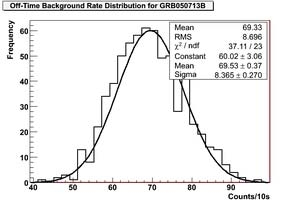

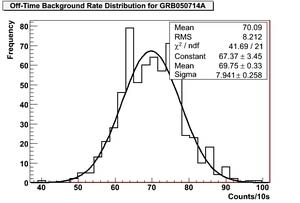

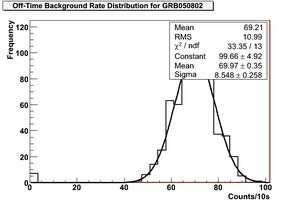

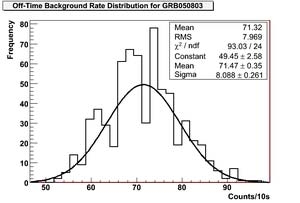

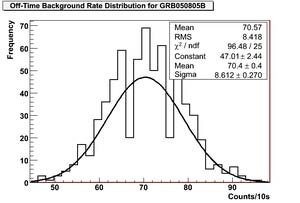

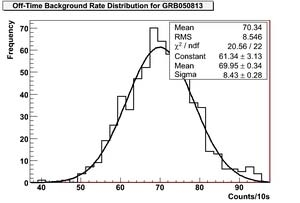

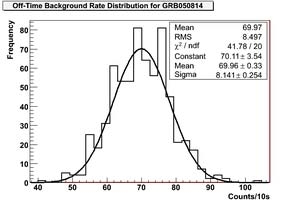

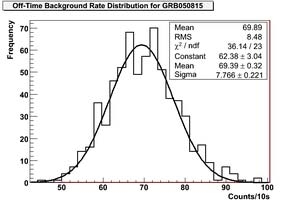

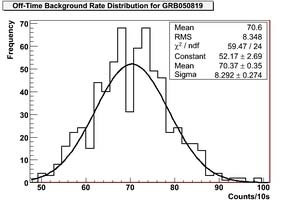

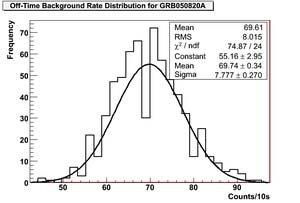

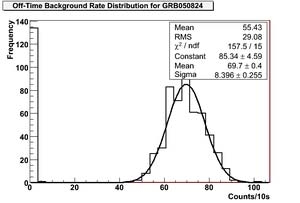

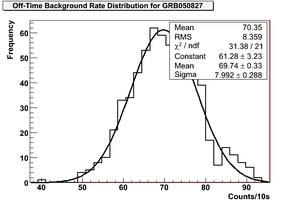

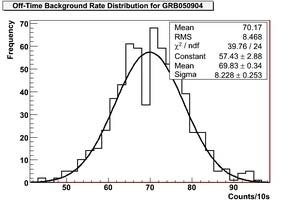

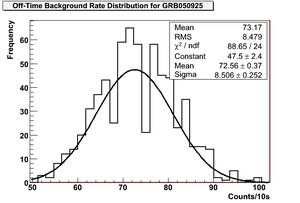

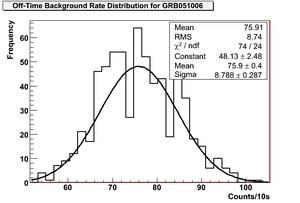

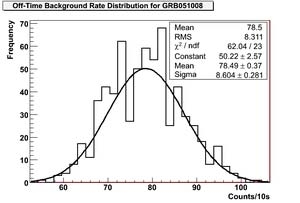

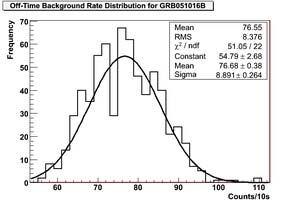

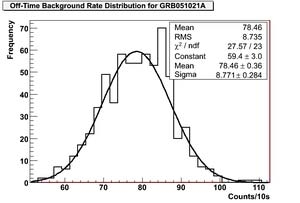

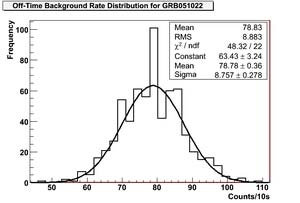

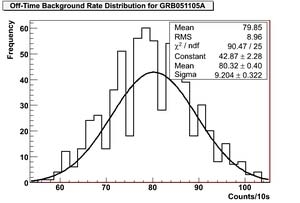

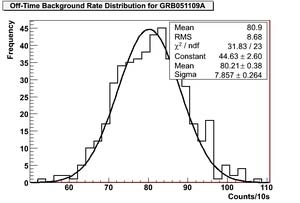

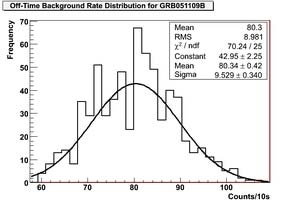

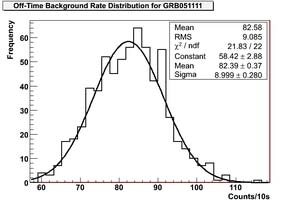

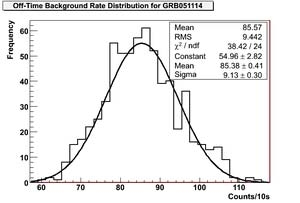

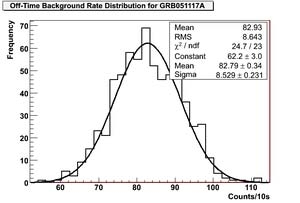

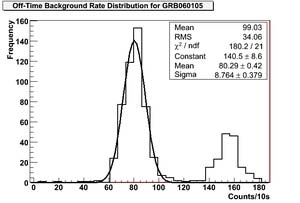

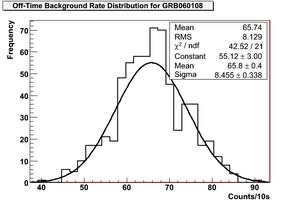

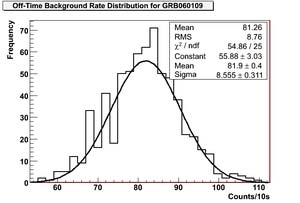

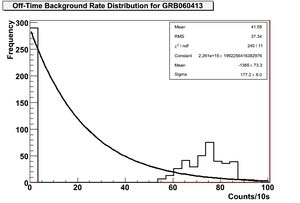

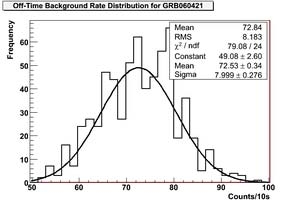

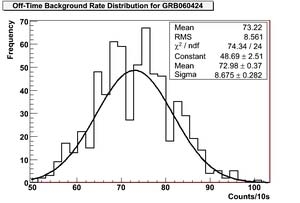

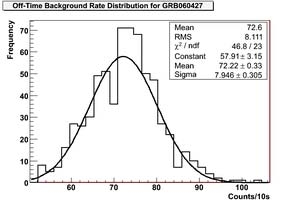

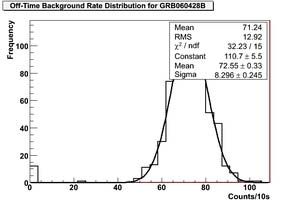

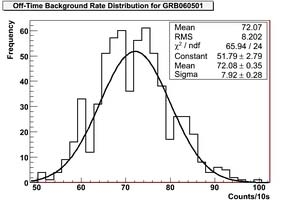

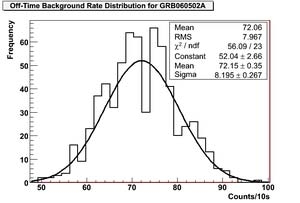

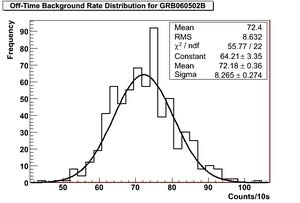

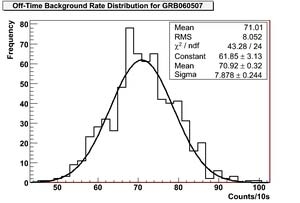

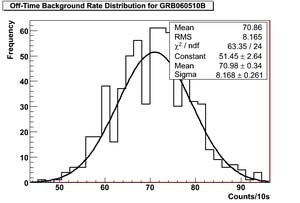

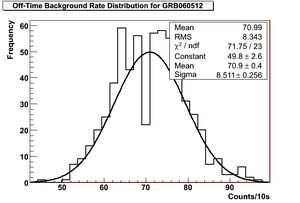

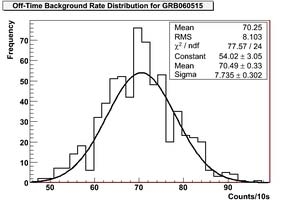

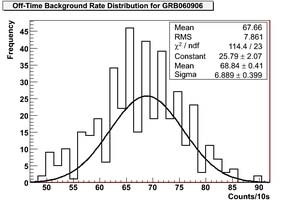

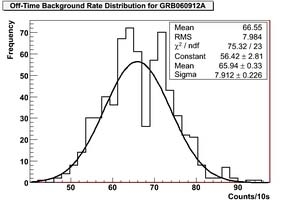

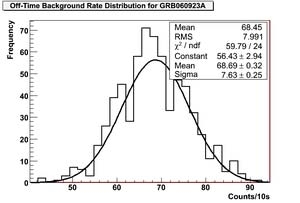

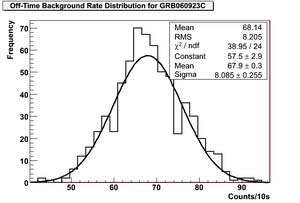

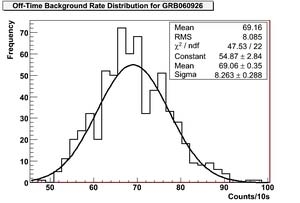

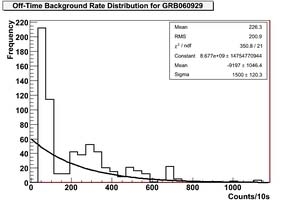

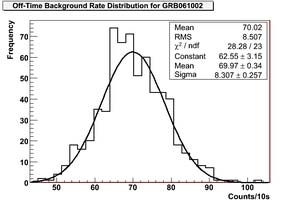

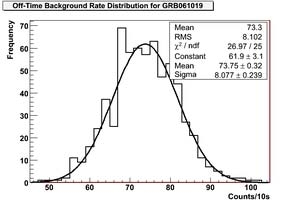

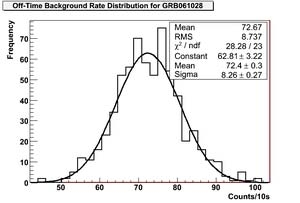

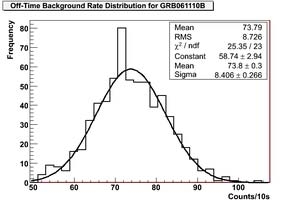

E.1 Filter Level Rates in Background Windows . . . . . . . . . . . . . . . . . . . . . . . . 187

E.2 Distribution in Filter Level Rate per 10s . . . . . . . . . . . . . . . . . . . . . . . . . . 192

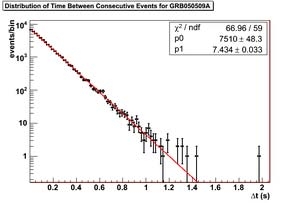

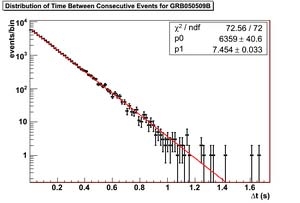

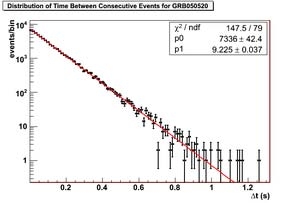

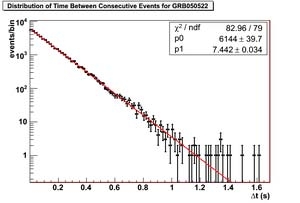

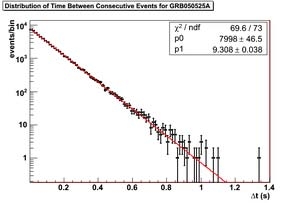

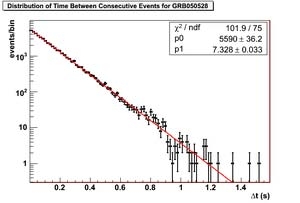

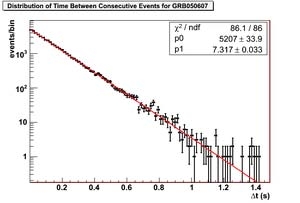

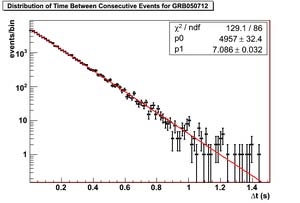

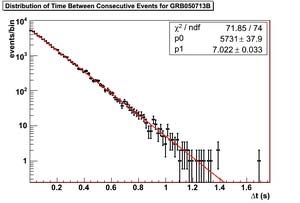

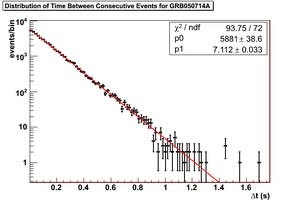

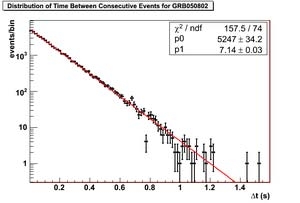

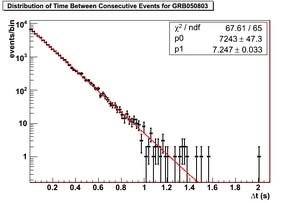

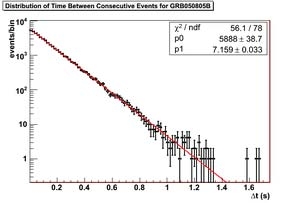

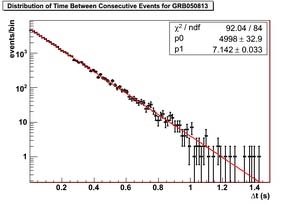

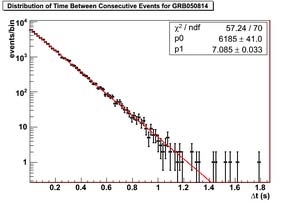

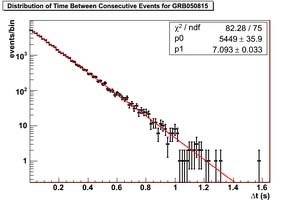

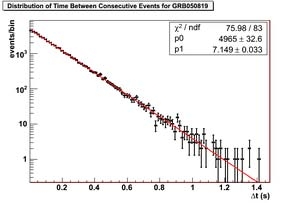

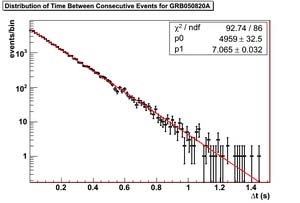

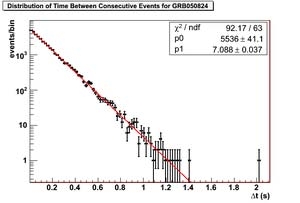

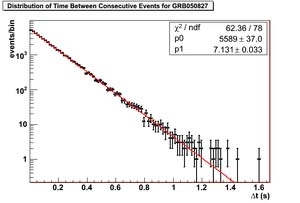

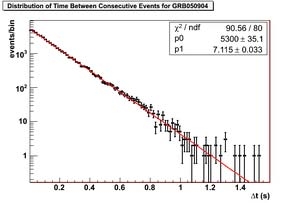

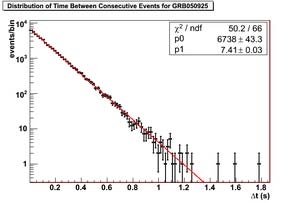

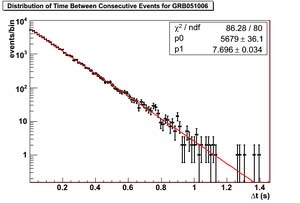

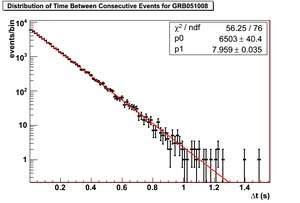

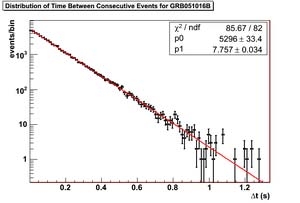

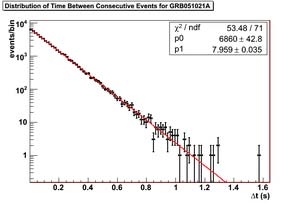

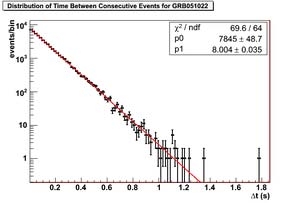

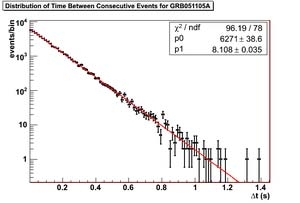

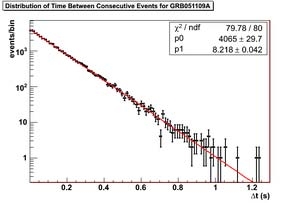

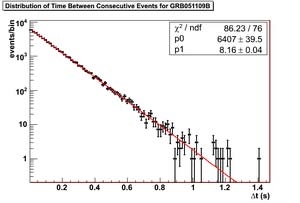

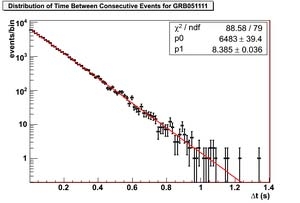

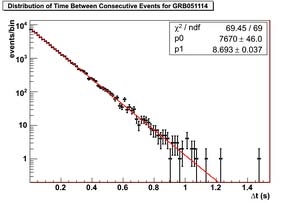

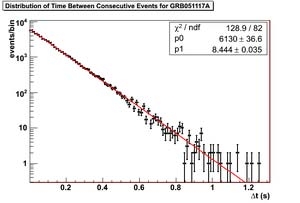

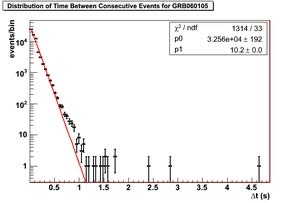

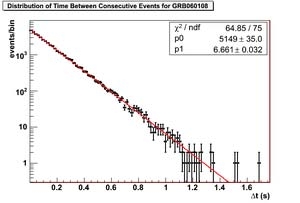

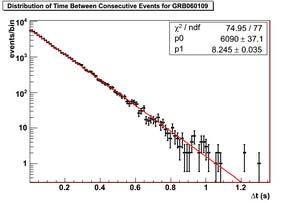

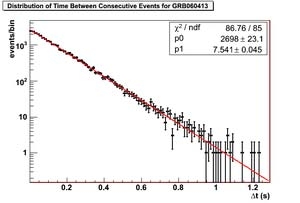

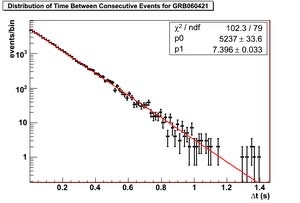

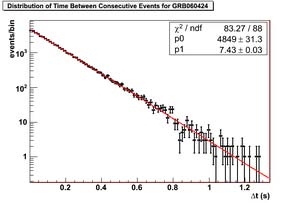

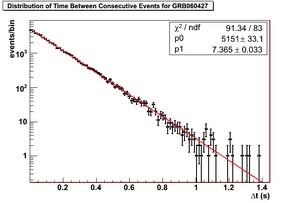

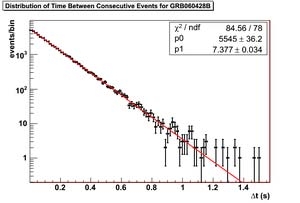

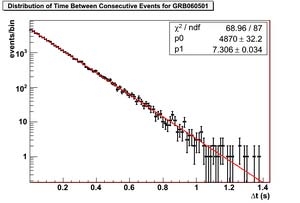

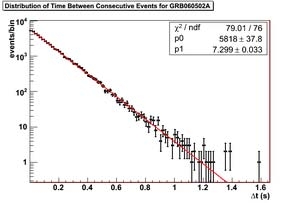

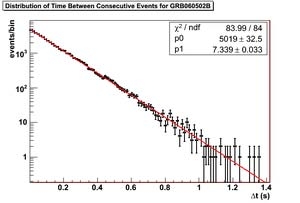

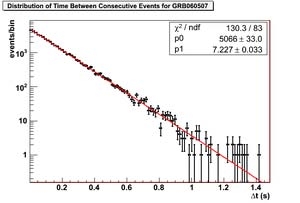

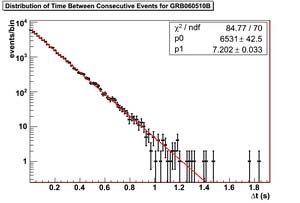

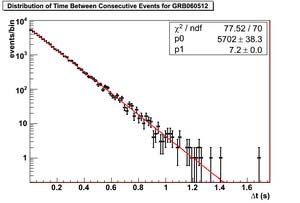

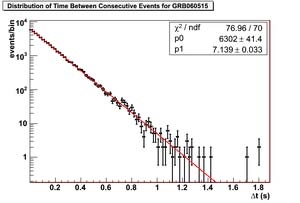

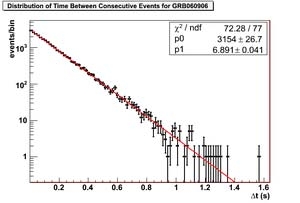

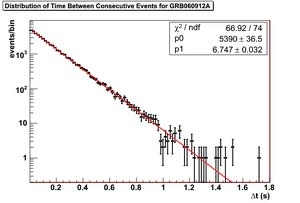

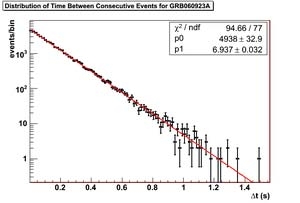

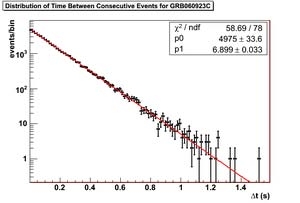

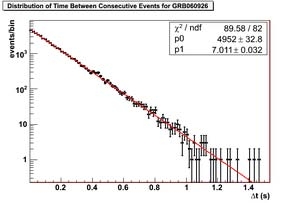

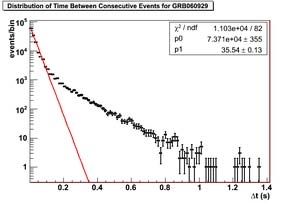

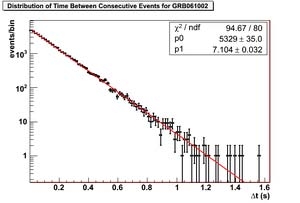

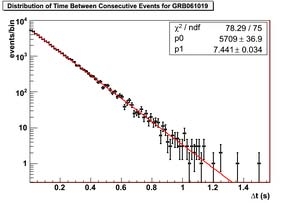

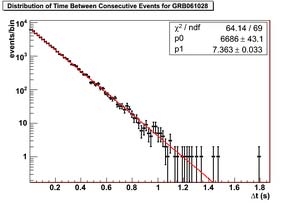

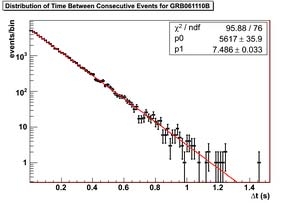

E.3 Time Differences Between Subsequent Events . . . . . . . . . . . . . . . . . . . . . . . 197


List of Tables

2.1 Average BATSE GRB Parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

4.1 Main Parameters of GRB-detecting Satellites . . . . . . . . . . . . . . . . . . . . . . . 53

5.1 GRBs Lacking Detector Data in 2005-2006 . . . . . . . . . . . . . . . . . . . . . . . . 68

5.2 GRBs with Incomplete Stability Windows in 2005-2006 . . . . . . . . . . . . . . . . . 69

5.3 GRBs with Problem Data in 2005-2006 . . . . . . . . . . . . . . . . . . . . . . . . . . 71

5.4 2005-2006 Burst Sample . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 72

5.5 GRBs with Problem Data in 2007-2008 . . . . . . . . . . . . . . . . . . . . . . . . . . 76

5.6 2007-2008 Burst Sample . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

7.1 Summary of 2005-2006 AMANDA-II upgoing muon filters. . . . . . . . . . . . . . . . . 82

7.2 AMANDA-II upgoing filter passing rates. . . . . . . . . . . . . . . . . . . . . . . . . . 84

7.3 Event Selection for the 2005-2006 AMANDA analysis . . . . . . . . . . . . . . . . . . 94

7.4 Final Event Rates for 2005-2006 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 98

7.5 Discovery Potential for 2005-2006 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

8.1 Summary of 22-string IceCube upgoing muon filters. . . . . . . . . . . . . . . . . . . . 108

8.2 Average Parameters for Swift GRBs . . . . . . . . . . . . . . . . . . . . . . . . . . . . 112

8.3 GRB Spectral Information for 2007-2008 . . . . . . . . . . . . . . . . . . . . . . . . . . 113

8.4 Modified Extended Time Windows . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

8.5 Event Selection Criteria for 2007-2008 . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

8.6 Final Event Rates for 2007-2008 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121


8.7 Sensitivity and Discovery Potential for 2007-2008 . . . . . . . . . . . . . . . . . . . . . 133

8.8 Upper Limits for 2007-2008 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

9.1 AMANDA-II Systematic Errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

9.2 IceCube Systematic Errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

10.1 Results of Previous and Current GRB Searches . . . . . . . . . . . . . . . . . . . . . . 143

B.1 Simulation Remaining After SBM Selection . . . . . . . . . . . . . . . . . . . . . . . . 165

B.2 Event Rates for the Full IceCube Detector . . . . . . . . . . . . . . . . . . . . . . . . . 168


List of Figures

1.1 The Cosmic Ray Spectrum . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.2 First Order Fermi Acceleration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.3 Hillas Diagram . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

2.1 The Vela 5 Satellite . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

2.2 GRB Duration Distribution . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

2.3 GRB Spatial Distribution . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

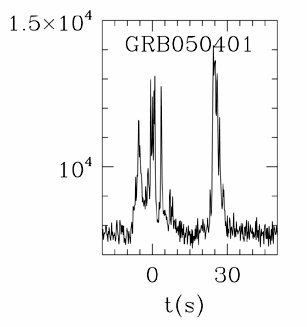

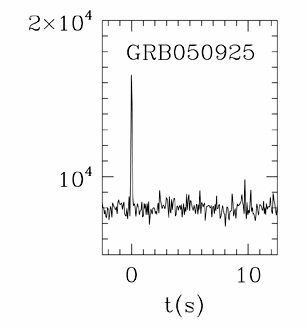

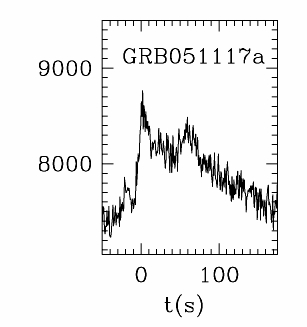

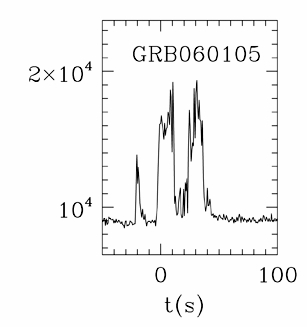

2.4 Sample GRB Lightcurves . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

2.5 Effect of Relativistic Aberration on Time Variability . . . . . . . . . . . . . . . . . . . 19

2.6 Temporal Jet Break in GRB990510 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

2.7 Calibrated GRB Energy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

2.8 Prompt and Precursor Neutrino Emission in the Fireball Model . . . . . . . . . . . . . 28

3.1 Neutrino Cross-section and Interaction Length . . . . . . . . . . . . . . . . . . . . . . 34

3.2 Čerenkov Radiation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

3.3 Muon Energy Loss in Ice . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

3.4 Angular Distribution of Atmospheric Muons . . . . . . . . . . . . . . . . . . . . . . . . 39

3.5 The Earth as a Muon Filter . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

3.6 Optical Properties of South Pole Ice . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

3.7 Reconstruction Parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

3.8 Pandel Parameterization of Light Delay . . . . . . . . . . . . . . . . . . . . . . . . . . 46

3.9 $N_{\mathrm{ch}}$ as a Function of Neutrino Energy . . . . . . . . . . . . . . . . . . . . . . . . . . . 49


3.10 $N_{\mathrm{ch}}$ Distribution for Various Spectra . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

3.11 ?$_{\mathrm{mue}}$ as a Function of Neutrino Energy . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

3.12 ?$_{\mathrm{mue}}$ Distribution for Various Spectra . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

3.13 Comparison of Energy Estimators . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

4.1 The Swift Satellite . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

4.2 Triangulation with IPN3 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

4.3 The AMANDA-II Detector . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

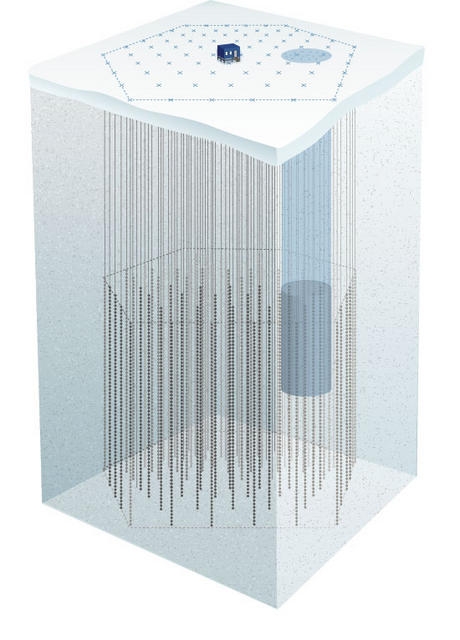

4.4 The IceCube Detector . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

4.5 Wavelength Dependence of DOM properties . . . . . . . . . . . . . . . . . . . . . . . . 63

4.6 Schematic of a DOM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

4.7 Sample ATWD and PMT ADC Waveforms . . . . . . . . . . . . . . . . . . . . . . . . 65

5.1 Blindness for GRB Searches . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

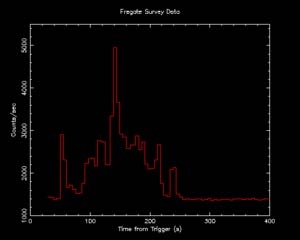

5.2 Sample AMANDA Stability Plots . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

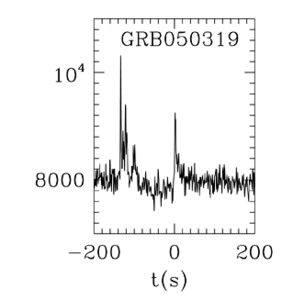

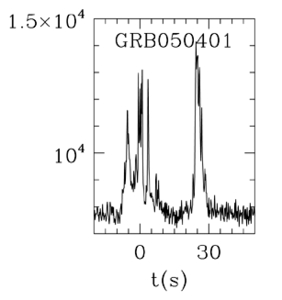

5.3 Burst Duration Definition for 2005-2006 . . . . . . . . . . . . . . . . . . . . . . . . . . 72

5.4 Sample IceCube Stability Plots . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

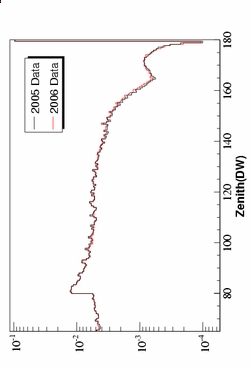

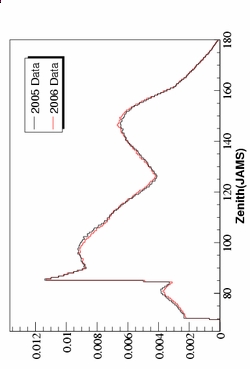

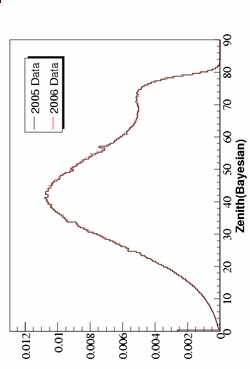

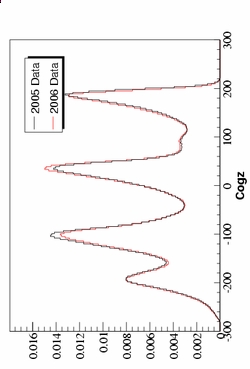

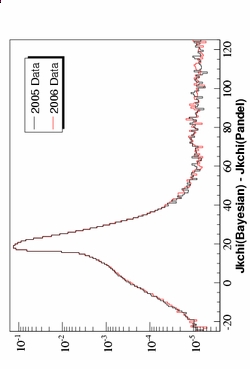

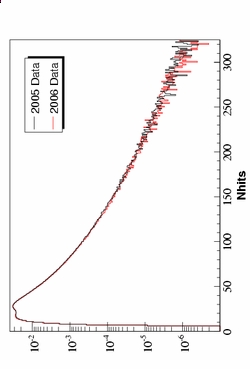

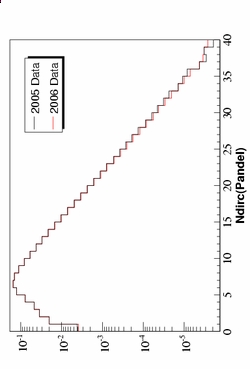

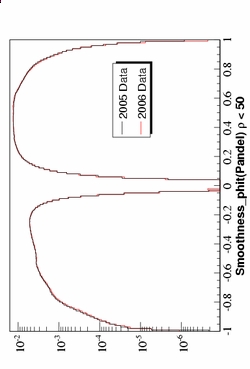

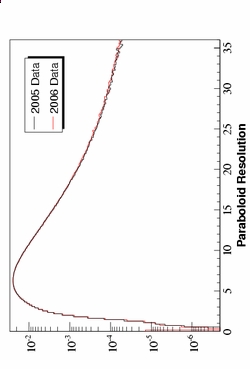

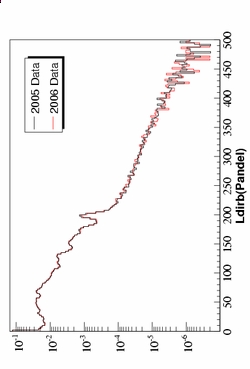

7.1 Comparison of 2005 and 2006 data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

7.2 AMANDA Response to GRB Signal at Filter Level . . . . . . . . . . . . . . . . . . . . 86

7.3 Background Contamination at Filter Level for 2005-2006 . . . . . . . . . . . . . . . . . 87

7.4 Paraboloid Sigma at Filter Level for 2005-2006 . . . . . . . . . . . . . . . . . . . . . . 88

7.5 Likelihood Ratio at Filter Level for 2005-2006 . . . . . . . . . . . . . . . . . . . . . . . 89

7.6 Space Angle at Filter Level for 2005-2006 . . . . . . . . . . . . . . . . . . . . . . . . . 89

7.7 Direct Hits Problem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

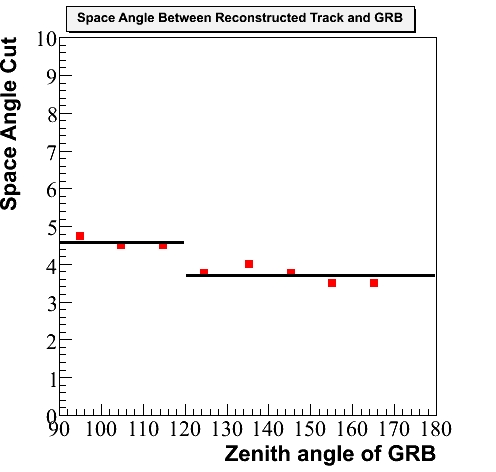

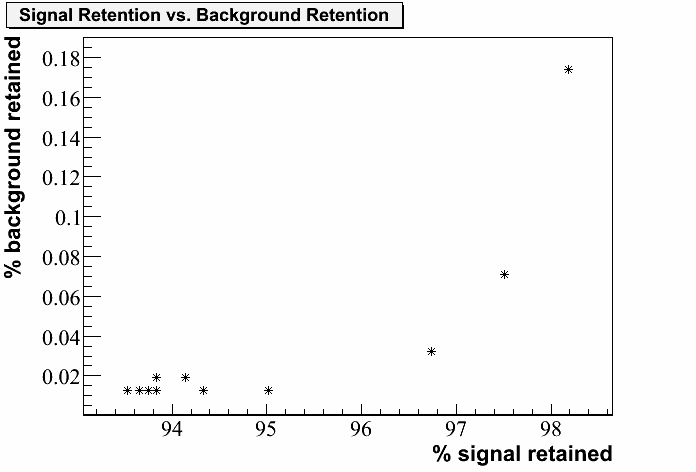

7.8 Cut Parameterization for 2005-2006 . . . . . . . . . . . . . . . . . . . . . . . . . . . . 95

7.9 Zenith Dependence of Parameter Distributions for 2005-2006 . . . . . . . . . . . . . . 96

7.10 Partial Cut Application for 2005-2006 . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

7.11 Simulation and data comparison at final cut level for 2005-2006 . . . . . . . . . . . . . 99

7.12 Paraboloid Sigma at Final Cut Level for 2005-2006 . . . . . . . . . . . . . . . . . . . . 100


7.13 Likelihood Ratio at Final Cut Level for 2005-2006 . . . . . . . . . . . . . . . . . . . . 101

7.14 Space Angle at Final Cut Level for 2005-2006 . . . . . . . . . . . . . . . . . . . . . . . 102

7.15 Final Cut Efficiency for 2005-2006 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 103

7.16 AMANDA Neutrino Effective Area . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

7.17 Model Discovery Potential for 2005-2006 . . . . . . . . . . . . . . . . . . . . . . . . . . 105

7.18 Upper Limit of the AMANDA analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . 106

8.1 Event Rates at Filter Level for 2007-2008 . . . . . . . . . . . . . . . . . . . . . . . . . 110

8.2 IceCube Response to Precursor Neutrinos . . . . . . . . . . . . . . . . . . . . . . . . . 111

8.3 Neutrino Spectra for 41 GRBs Observed in 2007-2008 . . . . . . . . . . . . . . . . . . 115

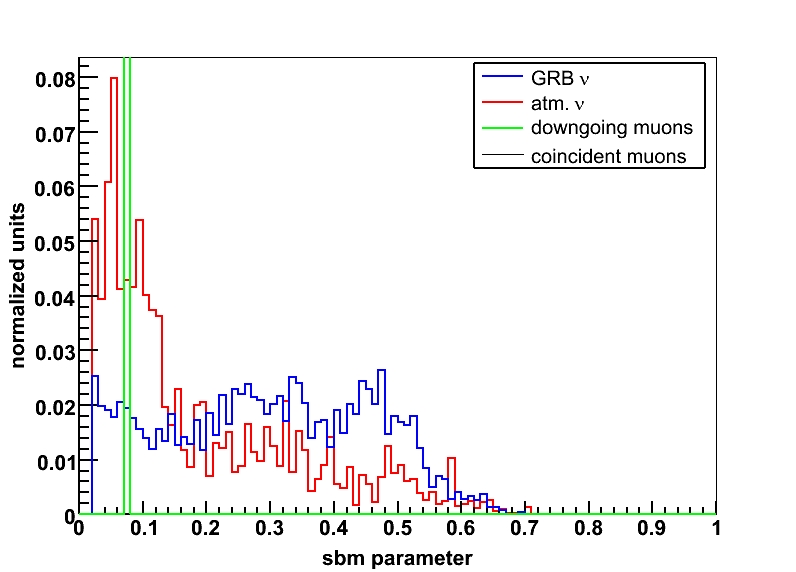

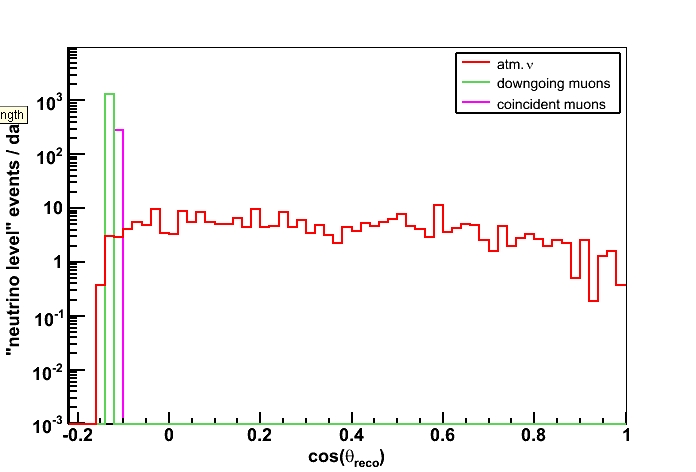

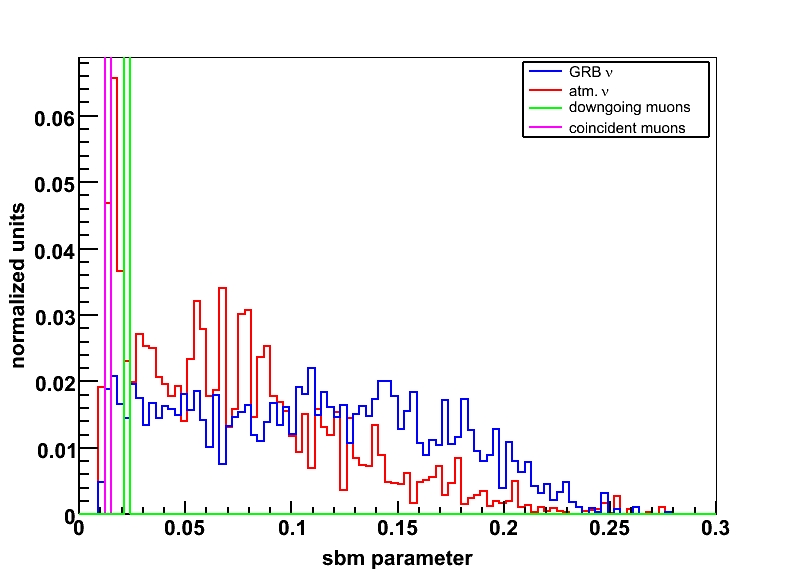

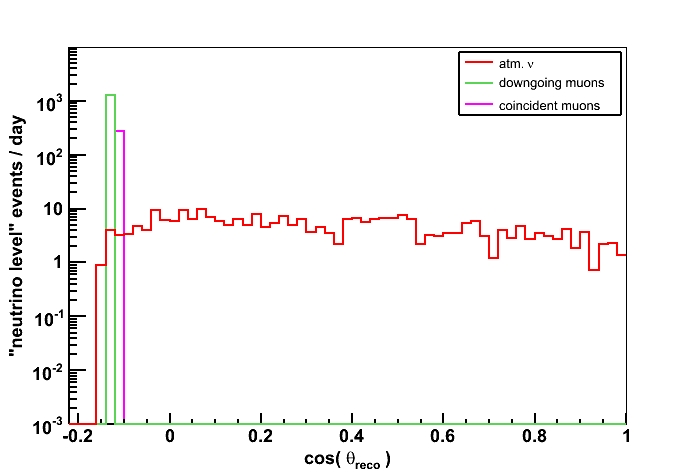

8.4 Cut Efficiency for Several Event Selection Parameters . . . . . . . . . . . . . . . . . . 119

8.5 Event Rates at Neutrino Level for 2007-2008 . . . . . . . . . . . . . . . . . . . . . . . 120

8.6 Cumulative Point Spread Function for 2007-2008 . . . . . . . . . . . . . . . . . . . . . 121

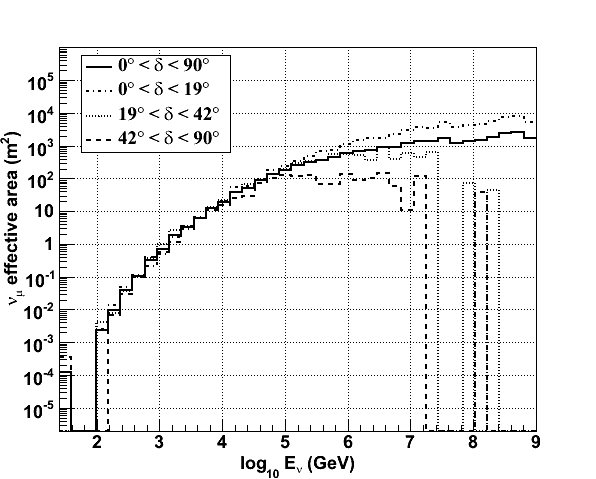

8.7 22-String IceCube Muon Neutrino Effective Area . . . . . . . . . . . . . . . . . . . . . 122

8.8 Signal Efficiency for 2007-2008 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

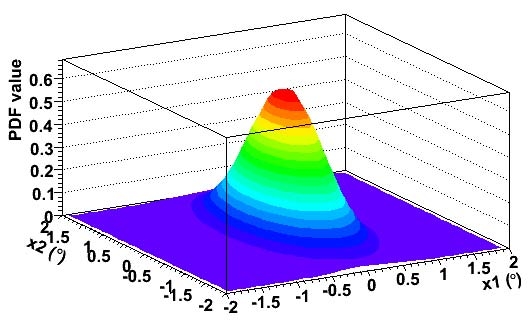

8.9 Spatial Signal PDF . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

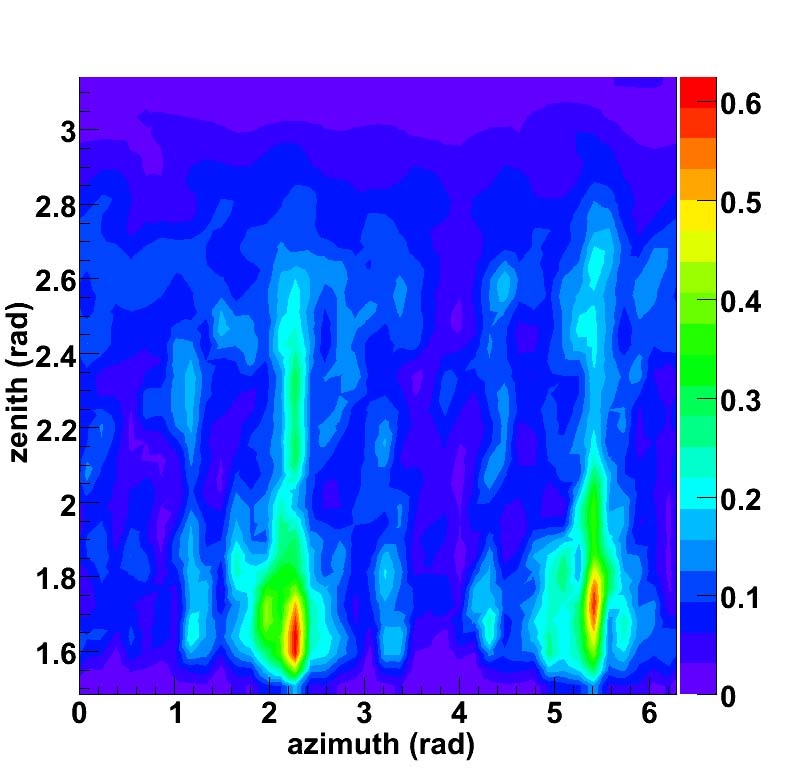

8.10 Spatial Background PDF for IceCube . . . . . . . . . . . . . . . . . . . . . . . . . . . 128

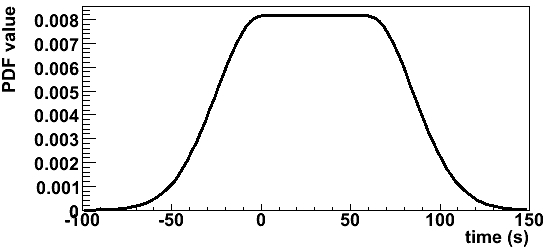

8.11 Temporal Signal PDF . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 128

8.12 Energy PDFs for Several Spectra . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 130

8.13 Test Statistic Distribution for Background Only Hypothesis . . . . . . . . . . . . . . . 131

8.14 Discovery Potential for 2007-2008 IceCube Analysis . . . . . . . . . . . . . . . . . . . . 133

8.15 Upper Limits for 2007-2008 IceCube Analysis . . . . . . . . . . . . . . . . . . . . . . . 135

9.1 Stretched Ice Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

9.2 Systematic Error of Ice Properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

9.3 Seasonal Variation in Data Rate . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

10.1 Comparison of Results with Previous Limits . . . . . . . . . . . . . . . . . . . . . . . . 145

10.2 Opacity of the Universe to High Energy Photons . . . . . . . . . . . . . . . . . . . . . 148

10.3 Neutrino Effective Area for Multiple Detectors . . . . . . . . . . . . . . . . . . . . . . 150

xiii

B.1 IceCube Configurations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 164

B.2 Neutrino Spectra for 142 Northern Sky GRBs . . . . . . . . . . . . . . . . . . . . . . . 165

B.3 Default Geometry Event Selection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 166

B.4 Extended Geometry Event Selection . . . . . . . . . . . . . . . . . . . . . . . . . . . . 166

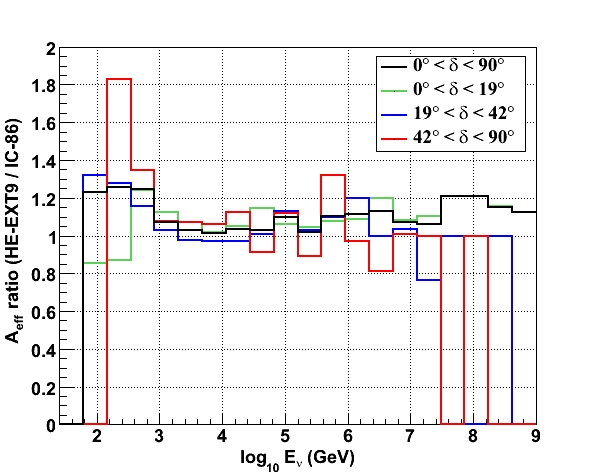

B.5 Effective Areas for the Full Detector . . . . . . . . . . . . . . . . . . . . . . . . . . . . 167

B.6 Discovery Potential of IceCube for GRBs . . . . . . . . . . . . . . . . . . . . . . . . . 169

C.1 Hyperplanes in a 2D Support Vector Machine . . . . . . . . . . . . . . . . . . . . . . . 171

C.2 Linear, Fully Separable SVM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 172

C.3 Linear, Non-Separable SVM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 174

C.4 SVM Kernel Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 176

C.5 GRB Feature Vector Distribution . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

C.6 Over-fitting of an SVM classifier . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 180

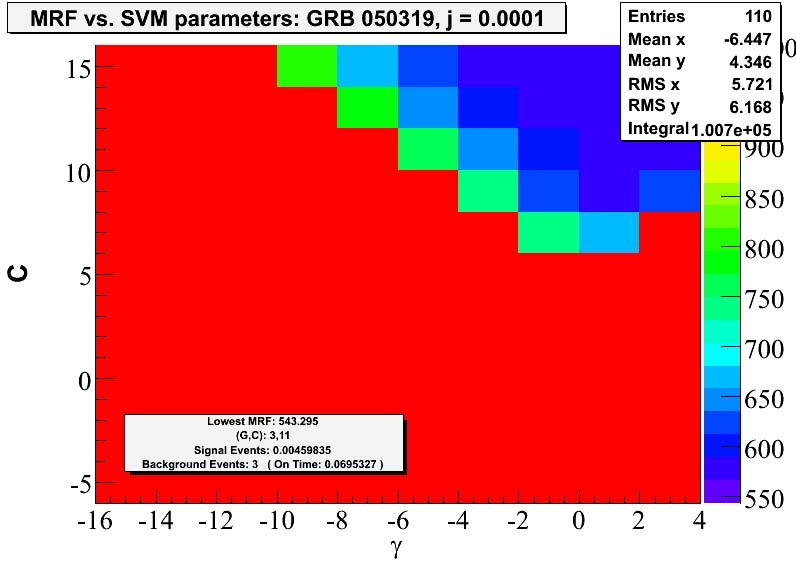

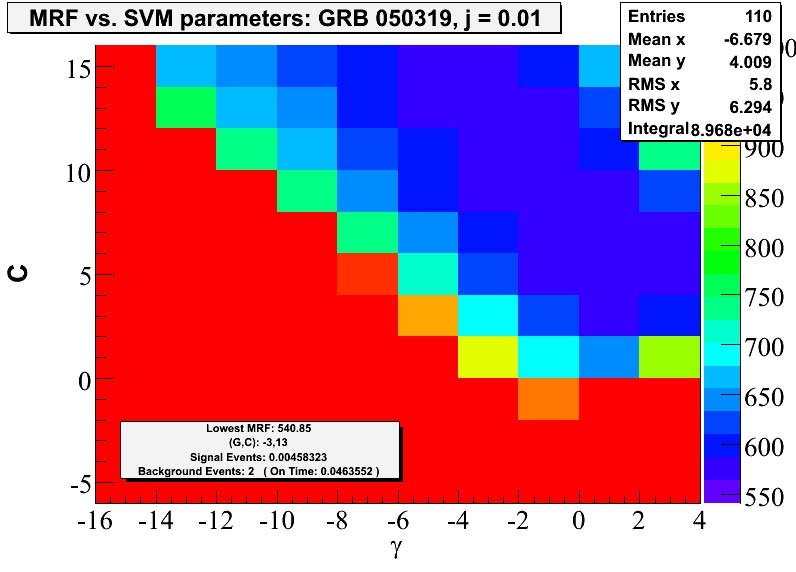

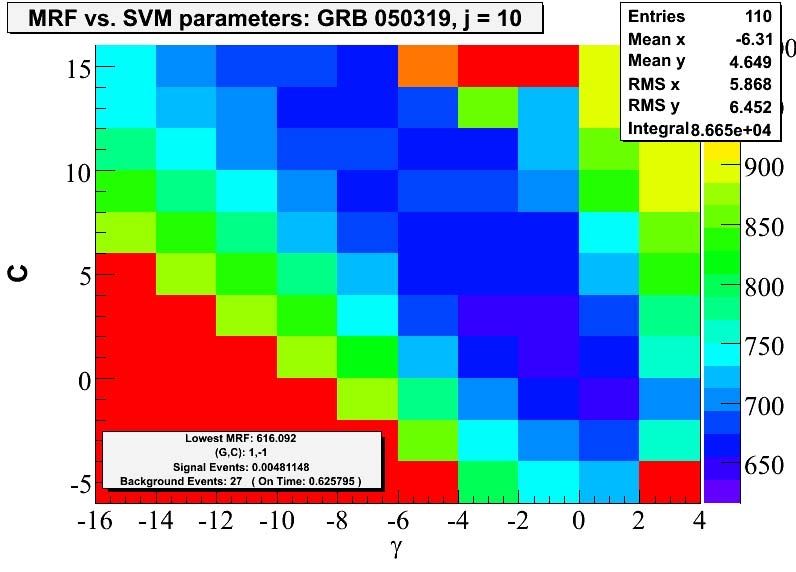

C.7 Grid Search for SVM Optimization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 181

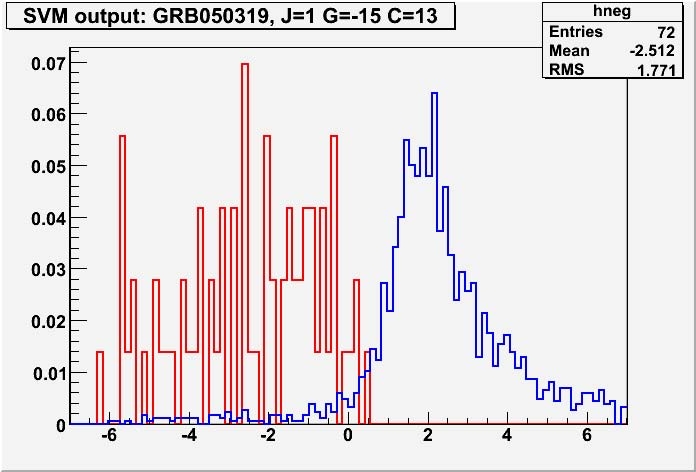

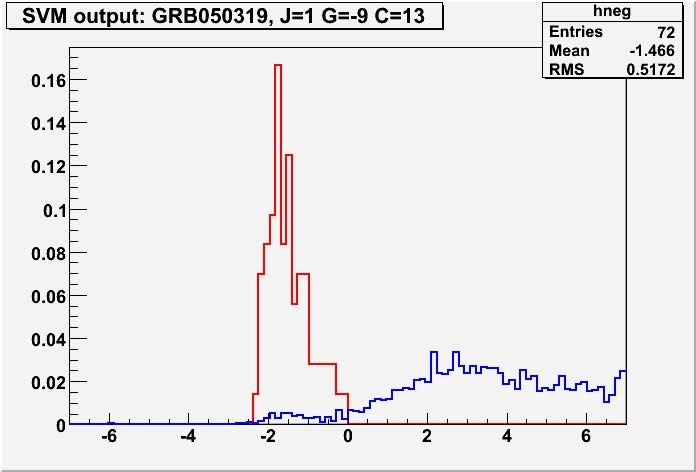

C.8 Effect of Kernel Variation on SVM Output . . . . . . . . . . . . . . . . . . . . . . . . 182

C.9 Global Optimization of SVM Parameters . . . . . . . . . . . . . . . . . . . . . . . . . . 183


Chapter 1

Introduction

High energy neutrinos provide a unique perspective on the cosmos. Because they are neutral and weakly interacting, they point back to their sources across all energy ranges and distance scales. This contrasts with cosmic rays, which are bent in galactic and intergalactic magnetic fields, and with photons, which can be blocked by intervening matter or attenuated at high energies via pair production on background light. Neutrinos thus allow us to view the deep universe with a clarity unparalleled in other channels. In addition, the detection of astrophysical high energy neutrinos would be a clear signature of sources of hadronic acceleration. Such a detection would have extremely important consequences in the astrophysics community, as it could explain the origins of the mysterious ultra-high energy cosmic rays (UHECR). Among the most likely candidates for the production of cosmic neutrinos are the enigmatic gamma-ray bursts (GRBs). Extremely brilliant emitters of high energy photons over time scales ranging from tenths to thousands of seconds, GRBs have a rich observational history and well developed theories that predict neutrino fluxes at energies from MeV to EeV.

1.1 The Cosmic Ray Connection

The cosmic rays are composed mostly of protons, with a small fraction of heavier elements that varies with energy. They are distributed nearly isotropically over the sky and follow a power law spectrum over many decades in energy (see Fig. 1.1). This spectrum falls as $E^{-2.7}$ up to ∼4 PeV, where it steepens to $E^{-3.7}$. This change in spectral slope is known as the knee. Up to the knee, the cosmic rays are thought to be galactic in origin, likely accelerated in supernova remnants (SNRs; see section 1.1.1). A good phenomenological description of the steepening is a many-knee model ("poly gonato", from the Greek for "many knees") [1, 2]. Each element exhibits a cutoff in its spectrum due to the maximum acceleration possible in SNRs, but heavier elements extend to higher energy. When the elemental spectra are added together, the spectrum is well modeled up to ∼$10^{18.5}$ eV. At this point it hardens to $E^{-2.7}$ again, a feature known as the ankle. The ultra-high energy cosmic rays above the ankle are thought to originate in extragalactic sources. At the highest energies their flux is expected to be suppressed by interactions with the cosmic microwave background (the GZK effect).

Protons accelerated to ultra-high energies will produce neutrinos through interactions with surrounding matter and radiation. While the protons are isotropized by intervening magnetic fields (except at the very highest energies), the neutrinos stream straight to the Earth. Detecting these high energy neutrinos would therefore reveal the sources of the UHECR.

1.1.1 Fermi Acceleration of Particles

In 1949 Fermi proposed that charged particles scatter elastically off turbulent variations in magnetic fields, stochastically gaining energy. Let us follow his work and determine the energy change in each interaction. A further description may be found in [4].

Let us assume that the “magnetic mirrors” move randomly with a characteristic velocity V, while the particle moves with velocity v. We further assume that the clouds of gas containing these mirrors are massive and thus essentially unaffected by collisions with particles, so that the center of momentum frame coincides with the rest frame of the cloud; we adopt this frame for our discussion. For a particle incident at angle θ to the mirror normal, its energy in this frame is

$$E' = \gamma\left(E + Vp\cos\theta\right) \tag{1.1}$$

and the momentum normal to the mirror surface is

$$p'_x = p'\cos\theta' = \gamma\left(p\cos\theta + \frac{VE}{c^2}\right) \tag{1.2}$$

In the elastic collision, the particle's energy is conserved in the cloud (center of momentum) frame, and the component of its momentum normal to the mirror is reversed. Transforming back to the observer's frame yields a final energy

Figure 1.1: The cosmic ray energy spectrum at Earth. Below the knee, the cosmic rays are thought to be galactic in origin. In the high energy tail, sources are unknown. (from [3])

$$E'' = \gamma\left(E' + Vp'_x\right) \tag{1.3}$$

$$E'' = \gamma^2 E\left[1 + \frac{2Vv\cos\theta}{c^2} + \left(\frac{V}{c}\right)^2\right] \tag{1.4}$$

The fractional change in energy is thus

$$\frac{\Delta E}{E} = \frac{E'' - E}{E} = \frac{2Vv\cos\theta}{c^2} + 2\left(\frac{V}{c}\right)^2 \tag{1.5}$$

where we have expanded to second order in V/c. To first order, the energy change averages to zero over all angles of incidence, as the gain in head-on collisions is canceled by the loss in following collisions. This is not quite true, however: the collision rates are proportional to the relative velocities of approach, v + V cos θ and v − V cos θ, so slightly more head-on collisions occur, yielding a net increase in energy. Averaging over all angles of incidence (for relativistic particles, v ≈ c) yields a mean fractional energy change

$$\left\langle\frac{\Delta E}{E}\right\rangle = \frac{8}{3}\left(\frac{V}{c}\right)^2 \tag{1.6}$$

This is Fermi’s famous result: the mean energy gain is only second order in V/c. While this mechanism indeed produces a power law, it cannot explain the highest energy cosmic rays. The velocities of the clouds containing the mirrors are typically small compared with the speed of light, and the mean free path of cosmic rays is large, so it would take an extremely long time to accelerate particles to the appropriate energies. Turbulence on a sufficiently small scale, such as in supernova remnants, can overcome this. However, we must also account for ionization losses, which scale with energy. Taken together, these effects mean that 2nd order Fermi acceleration cannot account for the cosmic ray spectrum.
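As a quick numerical check of the angular average that leads to Eq. 1.6, the short Python sketch below averages the second-order expression of Eq. 1.5 over incidence angles. It is an illustration under stated assumptions, not part of the original analysis: we take relativistic particles (v → c), assume isotropic directions (uniform in cos θ), and weight each collision by its relative approach rate, ∝ 1 + (V/c) cos θ. The function name `mean_second_order_gain` is our own.

```python
def mean_second_order_gain(beta, n_steps=20_000):
    """Average Eq. 1.5 (with v = c) over isotropic incidence directions,
    weighting each collision by its relative approach rate 1 + beta*cos(theta).
    Here beta = V/c is the mirror speed in units of c."""
    h = 2.0 / n_steps
    numerator = 0.0
    denominator = 0.0
    for i in range(n_steps):
        mu = -1.0 + (i + 0.5) * h                # midpoint of a bin in cos(theta)
        rate = 1.0 + beta * mu                   # head-on collisions are slightly more frequent
        gain = 2.0 * beta * mu + 2.0 * beta**2   # fractional gain of Eq. 1.5
        numerator += rate * gain * h
        denominator += rate * h
    return numerator / denominator


for beta in (1e-2, 1e-3):
    print(beta, mean_second_order_gain(beta), 8.0 / 3.0 * beta**2)
```

For both values of V/c the weighted average agrees with (8/3)(V/c)² to numerical precision; the term linear in V/c survives only through the asymmetry in the collision rate.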

If every collision were head-on, Eq. 1.5 tells us that the energy would increase in every case, with a gain proportional to V/c. This is the so-called 1st order Fermi acceleration, and it can be achieved if strong shocks exist in the accelerator. There are two approaches to the problem: considering the diffusion equation that governs the evolution of the momentum distribution around the shocks [5], and following the behavior of individual particles [6]. We adopt the latter approach in the following.

Shocks occur when exploding gas (e.g. from a supernova) travels faster than the sound speed of the medium. These shocks travel through regions containing high energy particles moving in magnetic fields. The particles barely notice the presence of the shocks, since their velocities are much higher and the shocks are thin compared to their gyroradii. Elastic scattering of the particles in turbulent magnetic fields both in front of and behind the shock isotropizes their velocities. Thus, for a shock traveling at speed U, in the shock rest frame the upstream gas appears to approach with that same speed. Energy, mass, and momentum must be conserved across the shock front, and this determines the resulting compression. For strong shocks the ratio of the gas densities in the downstream and upstream regions is given by

$$\frac{\rho_2}{\rho_1} = \frac{\gamma_H + 1}{\gamma_H - 1} \tag{1.7}$$

Here $\gamma_H$ is the ratio of specific heats, which is 5/3 for a fully ionized plasma, so the compression ratio is a factor of 4. Conservation of mass implies

$$\rho_1 v_1 = \rho_2 v_2 \quad\Longrightarrow\quad v_2 = \frac{1}{4}v_1 \tag{1.8}$$

In the shock rest frame, the upstream gas has velocity −U while the downstream gas has velocity −U/4. We now consider the upstream and downstream regions individually. In the rest frame of the upstream gas (adding U to each velocity), the downstream gas approaches the shock front with speed 3U/4. Similarly, in the rest frame of the downstream gas (adding U/4 to each velocity), the upstream gas approaches the shock front with speed 3U/4. Because of the compression at the shock, a particle crossing the front undergoes a head-on collision whether it is in the upstream or the downstream region, and in both cases the gas on the other side approaches with exactly the same speed, 3U/4. This is shown schematically in Fig. 1.2.
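The jump conditions and frame changes described above can be sketched with exact rational arithmetic. This is a minimal illustration assuming a non-relativistic strong shock; velocities are expressed in units of the shock speed U, and the helper `strong_shock_velocities` is hypothetical.

```python
from fractions import Fraction


def strong_shock_velocities(gamma_h):
    """Strong-shock jump conditions (Eqs. 1.7-1.8), velocities in units of the shock speed U.

    Returns the compression ratio, the downstream velocity in the shock frame, and the
    velocity of the gas across the shock as seen from the upstream and downstream rest frames.
    """
    r = (gamma_h + 1) / (gamma_h - 1)     # rho2 / rho1 (Eq. 1.7)
    v_up = Fraction(-1)                   # upstream gas flows into the shock at -U
    v_down = v_up / r                     # rho1 v1 = rho2 v2 (Eq. 1.8)
    down_seen_from_up = v_down - v_up     # boost by +U into the upstream rest frame
    up_seen_from_down = v_up - v_down     # boost by -v_down (= +U/4 for r = 4)
    return r, v_down, down_seen_from_up, up_seen_from_down


print(*strong_shock_velocities(Fraction(5, 3)))   # 4 -1/4 3/4 -3/4
```

For γ_H = 5/3 this reproduces the compression ratio of 4, the downstream velocity −U/4, and the relative speed 3U/4 seen from either side (the sign of the last value only reflects the direction of approach). A gas with γ_H = 7/5 would instead give a compression ratio of 6.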

Figure 1.2: Schematic showing the process for 1st order Fermi acceleration across shock fronts (panels: shock; shock rest frame; upstream rest frame; downstream rest frame). Top left panel depicts the shock propagating with velocity U through a high energy plasma, with the upstream gas (ρ₁, v₁) ahead of it and the downstream gas (ρ₂, v₂) behind it. Top right panel shows the rest frame of the shock (v₁ = |U|, v₂ = v₁/4) and the compression between the upstream and downstream regions. Because the velocities are isotropized by magnetic fluctuations, the relative velocity of the upstream region is given by U. Bottom left and right panels show the rest frame of the upstream and downstream regions, respectively (v₂ = 3U/4 with the upstream gas at rest; v₁ = 3U/4 with the downstream gas at rest). In each case, particles crossing the shock front undergo head-on collisions with gas at relative velocity 3U/4.

We can determine the fractional energy increase in each shock crossing in much the same way as for the 2nd order mechanism. For a particle encountering gas moving with velocity V = 3U/4, the energy becomes

$$E' = \gamma\left(E + p_x V\right) \tag{1.9}$$

Throughout, we have taken the shock to be non-relativistic (γ ≈ 1) while the particles are relativistic (E ≈ pc, so that $p_x = (E/c)\cos\theta$). Taking this into account, we arrive at

$$\frac{\Delta E}{E} = \frac{V}{c}\cos\theta \tag{1.10}$$

We average over the angles at which particles cross the shock, weighting each by the crossing probability ∝ 2 cos θ sin θ dθ, to find

$$\left\langle\frac{\Delta E}{E}\right\rangle = \frac{4}{3}\frac{V}{c} \tag{1.11}$$

for each full crossing from the upstream to the downstream region and back, each one-way crossing contributing (2/3)V/c. If we denote the energy after each such cycle by $E = \beta E_0$, then

$$\beta = 1 + \frac{4}{3}\frac{V}{c} \tag{1.12}$$
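The flux-weighted average behind Eq. 1.11, and its contrast with the second-order result of Eq. 1.6, can be sketched numerically. We assume that particles crossing the shock are weighted by the crossing flux, ∝ 2 cos θ sin θ dθ = 2μ dμ with μ = cos θ in [0, 1], take Eq. 1.10 as the gain per one-way crossing, and pick V/c = 0.01 purely for illustration; the helper names are hypothetical. The last lines count how many cycles (or collisions) each mechanism needs to raise the energy by a factor of 10³ at the same V/c.

```python
import math


def mean_gain_per_crossing(beta, n_steps=20_000):
    """Average Eq. 1.10, (V/c) cos(theta), over particles crossing the shock.

    Crossing directions are weighted by the flux 2*mu*dmu with mu = cos(theta) in [0, 1];
    beta = V/c is the speed of the gas on the other side of the shock.
    """
    h = 1.0 / n_steps
    total = 0.0
    for i in range(n_steps):
        mu = (i + 0.5) * h
        total += (2.0 * mu) * (beta * mu) * h
    return total


def cycles_needed(energy_factor, gain_per_cycle):
    """Number of cycles k with (1 + gain_per_cycle)**k = energy_factor."""
    return math.log(energy_factor) / math.log1p(gain_per_cycle)


beta = 0.01                                       # illustrative V/c (an assumption)
round_trip = 2.0 * mean_gain_per_crossing(beta)   # upstream -> downstream -> upstream
first_order = cycles_needed(1e3, round_trip)      # Eq. 1.11 gain per cycle
second_order = cycles_needed(1e3, 8.0 / 3.0 * beta**2)   # Eq. 1.6 gain per collision
print(round_trip, 4.0 / 3.0 * beta, first_order, second_order)
```

With these assumptions the first-order process needs roughly 500 cycles, while the second-order process needs of order 2.6 × 10⁴ collisions; the ratio grows as c/(2V) for smaller V/c.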

Let us now assume that after each cycle a particle has a probability P of crossing the shock front again rather than escaping. After k cycles we will then have $N = P^k N_0$ particles with characteristic energy $E = \beta^k E_0$. Eliminating k relates these through

$$\frac{N}{N_0} = \left(\frac{E}{E_0}\right)^{\ln P/\ln\beta} \tag{1.13}$$

Since N actually represents the number of particles with energy of at least E, we then write the differential energy spectrum as

$$N(E)\,dE \propto \left(\frac{E}{E_0}\right)^{\ln P/\ln\beta - 1} dE \tag{1.14}$$

We now determine the re-crossing probability P. The rate per unit area at which particles cross the shock is nc/4 [4], where n is the number density of the high energy particles. In the downstream region, however, particles are carried away from the shock front by the flow (top right panel of Fig. 1.2), at a rate nU/4 per unit area. The fraction lost per cycle is therefore (nU/4)/(nc/4) = U/c, and we have

$$P = 1 - \frac{U}{c} \tag{1.15}$$

With V = 3U/4, Eq. 1.12 gives β = 1 + U/c, so that to first order in U/c we have ln P/ln β ≈ (−U/c)/(U/c) = −1. Substituting this into Eq. 1.14, we find

$$N(E)\,dE \propto E^{-2}\,dE \tag{1.16}$$

8

Thus we naturally arrive at a power law spectrum consistent with the observed and pervasive cosmic

rays

1

. We must note, however, that there is a limit to how much acceleration is possible with this

mechanism. Although much more efficient than 2nd order Fermi acceleration, the process is still

slow and must combat energy loss processes. Taking this into account, we find that this process

can account for cosmic ray acceleration in supernovae up to the knee. However, it is not possible to

produce the highest energy cosmic rays with this method. To do so, we must employ much higher

magnetic fields or larger acceleration regions

2

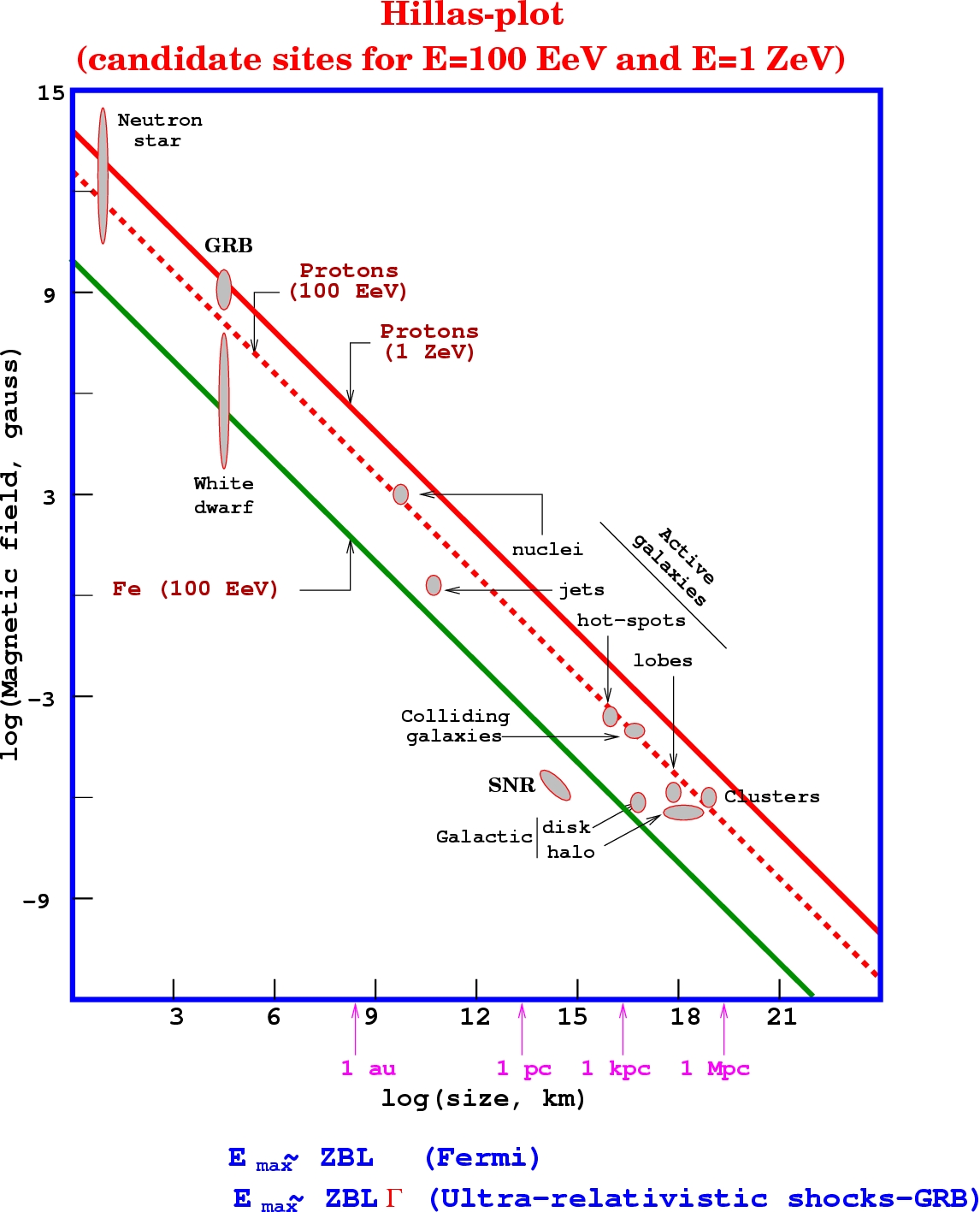

. We depict this in Fig. 1.3 and note that gamma-ray

bursts are one of the few source classes in which the conditions for UHECR acceleration are indeed

met.

1

Steepening to an E

り 2.7

spectrum is thought to be due to losses in the intervening space.

2

We should also note that this discussion has been limited to non-relativistic shock acceleration. The relativistic

case is much more complicated and we do not discuss it in detail here. It has the property however, of rapidly increasing

the energy of particles with each crossing of the shock front and is thus appropriate for UHECR acceleration.

9

Figure 1.3: The famous Hillas diagram illustrating the relationship between magnetic

fields and source size necessary to accelerate nuclei to ultra-high energies via the Fermi

mechanism. (adapted from [7])

10
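The escape-and-gain argument of Eqs. 1.12 to 1.16 can also be illustrated with a toy Monte Carlo. This sketch assumes, as in the text, that every cycle multiplies the energy by β = 1 + (4/3)(V/c) with V = 3U/4 (so β = 1 + U/c), and that the particle returns to the shock with the energy-independent probability P = 1 − U/c. We choose U/c = 0.1 only to keep the number of cycles small; the function names are hypothetical, and the model is not the simulation used in later chapters.

```python
import math
import random


def cycles_before_escape(p_return, rng):
    """Number of full shock-crossing cycles completed before the particle is lost downstream."""
    k = 0
    while rng.random() < p_return:
        k += 1
    return k


def integral_index(u_over_c, n_particles=100_000, k_ref=30, seed=2009):
    """Monte Carlo estimate of the slope of N(>=E) versus E on log-log axes."""
    beta = 1.0 + u_over_c        # Eq. 1.12 with V = 3U/4
    p_return = 1.0 - u_over_c    # Eq. 1.15
    rng = random.Random(seed)
    survivors = sum(
        1 for _ in range(n_particles) if cycles_before_escape(p_return, rng) >= k_ref
    )
    # Particles completing at least k_ref cycles have E >= beta**k_ref * E0.
    return math.log(survivors / n_particles) / (k_ref * math.log(beta))


u = 0.1
print(integral_index(u), math.log(1.0 - u) / math.log(1.0 + u))
```

The Monte Carlo slope of the integral spectrum should agree, within its statistical uncertainty (of order 0.01 for 10⁵ particles), with the exact value ln P/ln β ≈ −1.105 for U/c = 0.1. As U/c → 0 this ratio tends to −1, i.e. the $E^{-2}$ differential spectrum of Eq. 1.16.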

1.2 Previous Work

Previous searches for high energy neutrino emission from gamma-ray bursts have been conducted using data collected with the AMANDA and IceCube detectors. A variety of search strategies has been employed, covering a range of emission models and detection channels. Triggered searches in the muon channel [8, 9, 10], triggered and rolling searches in the cascade channel [11], and analyses dedicated to single, spectacular bursts [12, 13] have all failed to detect neutrinos from GRBs. Upper limits have been set, but to date none exclude the most popular scenarios. The analyses presented in this thesis build on this previous body of work, and we place all results in context in our conclusions.

1.3 Organization of the Thesis

• Chapter 2 reviews the history of gamma-ray burst observations and presents the phenomenological framework that has been developed to explain them. We then describe the production of neutrinos in GRB explosions through photomeson interactions and detail the resulting spectra from the various phases of the emission.

• Chapter 3 describes the ways in which high energy astrophysical neutrinos may be detected at Earth. The process is followed through interaction, lepton production, and subsequent emission of Čerenkov radiation. Optical properties of the detection medium are considered. We discuss how the arrival times and amplitudes of the emitted light may be used to reconstruct events, and present the algorithms used in these reconstructions.

• Chapter 4 describes the satellites that observe the photon flux from GRBs and localize their

positions. The AMANDA and IceCube neutrino detectors are then presented, including layout,

hardware, and data acquisition systems.

• Chapter 5 explains the selection criteria for the inclusion of observed GRBs in our search. We

describe the blinding procedure and the determination of emission windows. The spatial and

temporal information for all bursts in our analyses is listed.

• Chapter 6 explains the process of creating simulated signals and backgrounds. The generation

of primaries is described, as well as the modeling of propagation through the atmosphere and

earth, energy losses, interaction, and the detector response to the emitted light.

• Chapter 7 presents a stacked search for muon neutrinos from GRBs using the AMANDA-II

detector. 85 GRBs observed in the northern hemisphere in 2005-2006 are considered. Event

selection and optimization of background rejection are presented in detail.

• Chapter 8 describes a search for muon neutrino emission from 41 GRBs using the 22-string

configuration of the IceCube detector. An unbinned likelihood method is utilized for the first

time in stacked searches for GRBs, maximizing the sensitivity of the analysis.

• Chapter 9 reviews the sources of systematic error in the analyses presented. Contributions from

theory, ice properties, and hardware are considered, as well as the effect of seasonal variation

in the atmospheric muon rate.

• Chapter 10 presents a summary of the work conducted in this thesis and places it in the context

of previous results. Prospects for the future are discussed.


Chapter 2

Gamma-ray Bursts

Gamma-ray Bursts (GRBs) are the most energetic events in the universe, outshining all other sources over their short emission windows. Initially thought to be galactic in origin due to the fluxes observed, they are now known to occur at cosmological distances. They are one of the prime candidates for the acceleration of the highest energy cosmic rays and for the production of associated astrophysical neutrinos.

2.1 History

Gamma-ray bursts were discovered as a byproduct of efforts to enforce the Partial Test Ban Treaty of 1963, which prohibited the testing of nuclear weapons underwater, in the atmosphere, and in space. The Vela satellites [14] were designed to detect the gamma radiation from such a nuclear detonation. The satellites were operated in pairs on opposite sides of a high circular orbit, and timing information was used to determine the direction of sources. During data taking by Vela 5a and 5b in 1969, a strange phenomenon was discovered¹: short, intense bursts of γ-rays arriving from outside their orbits rather than from within. This topology meant that the sources were astrophysical rather than terrestrial. When the data were declassified in 1973, the observations of 16 such bursts were published [15]. Shortly thereafter, a collimated gamma-ray telescope aboard the OSO-7 satellite confirmed the direction of one of the events [16]. The IMP-6 satellite measured spectral characteristics of the bursts² and determined that the emission peaked in γ-rays and was thus not just the high energy tail of some x-ray phenomenon [17]. These observations, all in rapid succession, spurred a wealth of theoretical papers about the sources of these bursts. Unfortunately, this was the beginning of an experimental dark age that would not see an end for nearly 20 years.

¹ though it was not noticed until 1972, when the backlog of non-nuclear-detonation γ-ray events began to be analyzed

² using a hard x-ray detector intended to study solar flares

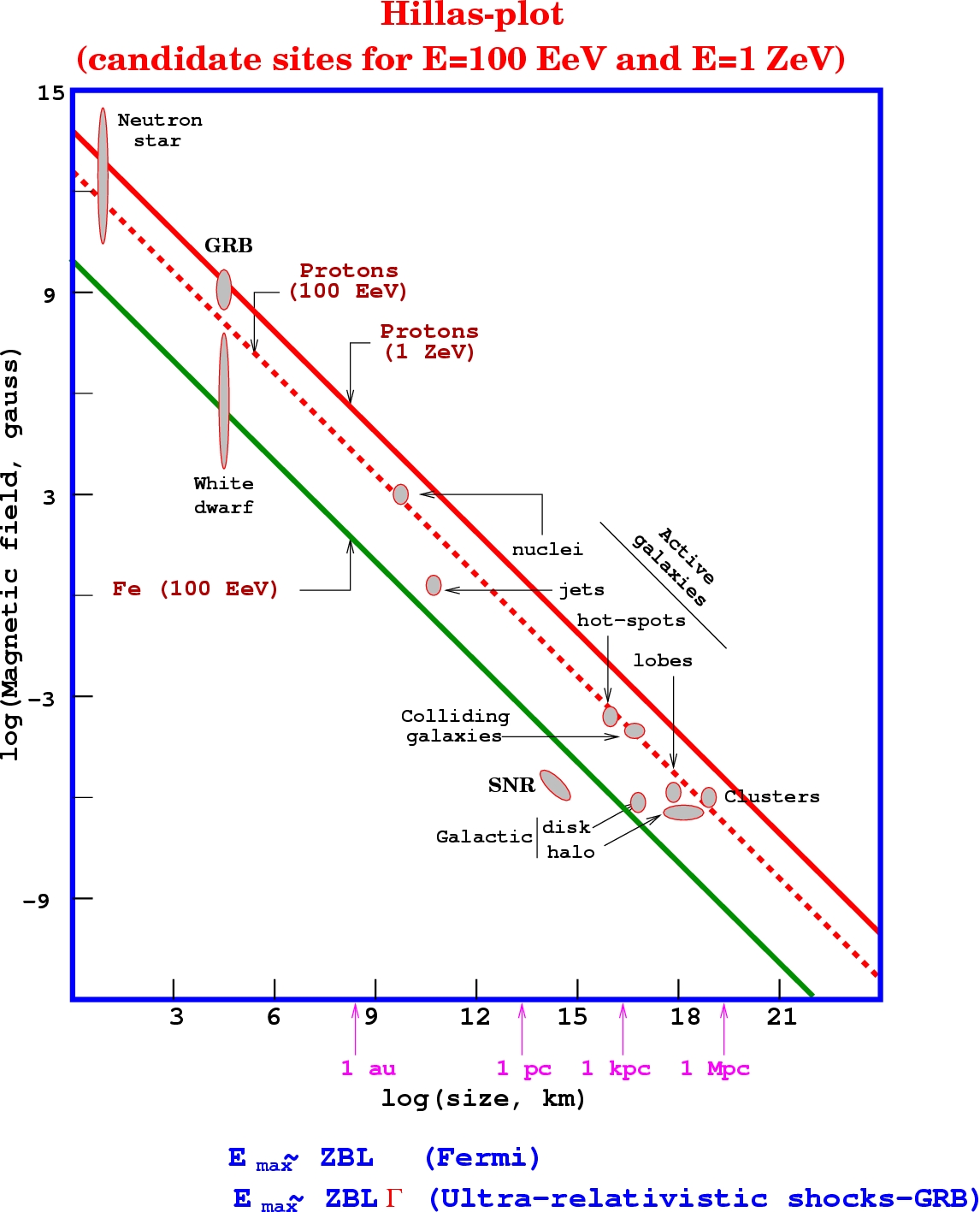

Figure 2.1: The Vela 5 satellite system. On the left is the Vela 5a and 5b pair joined before launch. On the right is the Vela 5b satellite in orbit.

The Burst and Transient Source Experiment (BATSE) [18] was launched aboard the Compton Gamma Ray Observatory (CGRO) satellite on April 5, 1991. This was the first experiment dedicated to the observation of gamma ray photons associated with transients. Its energy range (20 keV to 1 MeV) and all-sky field-of-view allowed it to detect GRBs at an average rate of 1/day throughout its 9-year mission, observing 2704 GRBs in total. This high detection rate quickly increased statistics and made it possible to study the distributions of the GRB population. It was found that the durations of GRBs follow a bimodal distribution [19]: so-called “short” bursts with a duration ≲ 2 s and “long” bursts with a duration ≳ 2 s. This distribution can be seen in Fig. 2.2. The short bursts were found to display harder energy spectra than the long, leading to the supposition that different underlying processes were at work in the 2 classes. The second major finding was in the spatial distribution of the GRB population. Until this point, most people had thought that the bursts must be galactic in origin, due to the fluxes observed (some theories even placed the source of the GRBs in the Oort Cloud). It was therefore a great surprise when BATSE observed that GRBs are distributed isotropically both in number and in fluence [20] (see Fig. 2.3). In a galactic source model, the brightest bursts would be distributed along the galactic plane. This lack of clustering gave evidence that GRBs are cosmological phenomena, which implied huge energies of $10^{50}$–$10^{54}$ ergs in the case of isotropic emission from the sources.

Figure 2.2: Distribution of GRB Durations for the BATSE 4B Catalog (through August 1996). Durations are given as $T_{90}$, the time over which the middle 90% (from 5% to 95%) of the γ-ray emission is detected. The bimodal distribution separates GRBs into 2 classes: long and short. (based on data from [21])

The hypothesis of cosmological origin was confirmed with the BeppoSAX satellite [22] in 1997. BeppoSAX was able to localize GRBs with arcminute precision and, more importantly, to distribute that information to ground-based observatories much more rapidly than had previously been achieved. It was also able to make x-ray observations of GRB afterglows. These multi-wavelength afterglow observations led to the first observation of an optical counterpart to a GRB at cosmological distance [23].

The Swift satellite [24, 25] (see section 4.1.1), launched in 2004, opened a new window on understanding GRBs. It was designed to have rapid slewing capability and incorporates γ-ray, x-ray, and ultraviolet detectors onboard. This has allowed detailed studies of the transition between the prompt and afterglow emission phases and the first measurements of afterglow emission from short GRBs [26]. Rapid dissemination of (usually) arcsecond localizations to ground-based telescopes results in redshift measurements for ∼25% of all bursts. It has been found that the GRB population extends to higher redshifts than previously imagined, with observed distances up to z = 6.7 [27].

Figure 2.3: Spatial Distribution of the full BATSE GRB sample (2704 bursts on a sky map with axes spanning ±180° and ±90°, shaded by fluence in the 50-300 keV band from $10^{-7}$ to $10^{-4}$ erg cm$^{-2}$). The isotropic spatial distribution in both number and fluence implies cosmological origins. (from [18])

After nearly a decade without a full-sky GRB detector, the Fermi Gamma-ray Space Telescope [28] (formerly GLAST) was launched in June of 2008. A true successor to CGRO, Fermi carries both a wide-field Gamma-ray Burst Monitor (GBM) and a more focused, higher precision detector, the Large Area Telescope (LAT). The LAT is analogous to the EGRET experiment aboard CGRO, extending the energy range to 300 GeV. Fermi is able to slew to point the LAT at some GRBs detected by the GBM, thus yielding a huge potential range of energies for GRB observations. This has led to the detection of >10 GeV photons from bursts [29], raising new questions about the source physics. With a detection rate of ∼200/yr, Fermi will continue to provide valuable new data in the quest to understand the mysteries of GRBs.

2.2 Fireball Model

Long GRBs are thought to be caused by the core collapse of massive stars into black holes [30, 31]. This hypothesis is strengthened by the association of some long GRBs with abnormal type Ic supernovae (SNe)³. The first such association was between GRB980425 and SN1998bw [32], and several other observations have since strengthened the connection. Long GRBs are always observed to occur in star-forming regions. Short GRBs, on the other hand, are thought to be associated with the merger of two compact objects (e.g. neutron stars) [33]. In either case, the accreting matter is thought to spin rapidly, creating a disk [34]. At some point, the radiation pressure exceeds the gravitational infall, and an explosion occurs, ejecting an $e^\pm$, γ, and baryon plasma along the rotation axis, with this fireball being fed by rapid accretion of matter from the disk. This wind accelerates to relativistic velocities and randomizes and dissipates energy via internal shocks. It is thought that the observed γ-rays are the result of synchrotron radiation from the accelerated electrons in the very high magnetic fields of the burst environment [35, 36]. Let us consider the observations that lead to this fireball phenomenology in order to better understand the physics of GRBs and why it is reasonable to expect high energy neutrino emission.

2.2.1 Energy Spectra

The energy spectra of most GRBs are well described by the Band function [37], which models the low energy component as a power law with an exponential cutoff, smoothly transitioning to a steeper power law at higher energies. While the fit is purely empirical and makes no claims with regard to the burst physics, it is in agreement with a model of synchrotron emission from accelerated electrons which then undergo cooling above some break energy. We simplify the picture slightly by taking the photon spectra to be a broken power law, with the normalization chosen such that the fluence integral matches that of the Band function fit.

³ This class of supernovae is known as hypernovae and is characterized by smooth spectra, higher than usual energy, and strong radio emission.

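$$\frac{dN(E_\gamma)}{dE_\gamma} = f_\gamma \times \begin{cases} \epsilon_\gamma^{\,\alpha_\gamma - \beta_\gamma}\, E_\gamma^{-\alpha_\gamma} & \text{for } E_\gamma < \epsilon_\gamma \\ E_\gamma^{-\beta_\gamma} & \text{for } E_\gamma \ge \epsilon_\gamma \end{cases} \tag{2.1}$$

$$F_\gamma = \int dE_\gamma\, E_\gamma\, \frac{dN(E_\gamma)}{dE_\gamma} \tag{2.2}$$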

where $F_\gamma$ is the measured γ-ray energy fluence. The break energy $\epsilon_\gamma$ varies from burst to burst, but has a typical value of $\epsilon_\gamma \sim 250$ keV⁴. Long bursts tend to have spectral indices scattered around $\alpha_\gamma \sim 1$ and $\beta_\gamma \sim 2$, while short GRBs are on average harder by one power in energy.
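To illustrate how the normalization $f_\gamma$ of Eq. 2.1 follows from Eq. 2.2, the sketch below solves for it analytically. The integration band (1 keV to 10 MeV), the fluence of $10^{-5}$ erg cm$^{-2}$, and the function names are our own assumptions for the example; energies are in keV, so the fluence is converted to keV cm$^{-2}$. A spectral index of exactly 2 (as for the typical $\beta_\gamma \sim 2$) makes the corresponding energy integral logarithmic, which the helper handles explicitly.

```python
import math

KEV_PER_ERG = 6.241509e8   # 1 erg = 6.241509e8 keV


def _power_integral(k, lo, hi):
    """Integral of E**k dE from lo to hi, including the logarithmic case k = -1."""
    if math.isclose(k, -1.0):
        return math.log(hi / lo)
    return (hi ** (k + 1.0) - lo ** (k + 1.0)) / (k + 1.0)


def broken_power_law_norm(fluence, alpha, beta, e_break, e_min, e_max):
    """Solve Eq. 2.2 for f_gamma, integrating E * dN/dE of Eq. 2.1 from e_min to e_max."""
    below = e_break ** (alpha - beta) * _power_integral(1.0 - alpha, e_min, e_break)
    above = _power_integral(1.0 - beta, e_break, e_max)
    return fluence / (below + above)


def dn_de(e, f_gamma, alpha, beta, e_break):
    """Broken power-law photon spectrum of Eq. 2.1."""
    if e < e_break:
        return f_gamma * e_break ** (alpha - beta) * e ** (-alpha)
    return f_gamma * e ** (-beta)


# Illustrative long burst (assumed values): alpha = 1, beta = 2, break at 250 keV,
# fluence 1e-5 erg/cm^2 integrated over an assumed 1 keV - 10 MeV band.
f_gamma = broken_power_law_norm(1e-5 * KEV_PER_ERG, 1.0, 2.0, 250.0, 1.0, 1.0e4)
print(f_gamma, dn_de(100.0, f_gamma, 1.0, 2.0, 250.0))
```

Because the low-energy branch carries the factor $\epsilon_\gamma^{\alpha_\gamma-\beta_\gamma}$, the two branches join continuously at the break energy.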

Figure 2.4: Lightcurves for several gamma-ray bursts. Note that all bursts exhibit unique

temporal characteristics and some have short timescale variability. (from [25])

⁴ We note that the Swift detector (see section 4.1.1) is transparent to γ-rays above 150 keV and thus does not detect the spectral break for the majority of GRBs it observes.

2.2.2 Compactness

GRBs exhibit variability on millisecond timescales (see Fig. 2.4). The finite speed of light places constraints on the source size: for a source of diameter d, any temporal variability on scales smaller than $\Delta t \sim d/c$ will be smeared out by differences in light travel time. This means that the compact progenitor must satisfy

$$R_{\rm GRB} \lesssim \frac{c\,\Delta t}{2} \tag{2.3}$$

For observed timescales of $\Delta t \sim 0.01$ s, this gives a characteristic radius of $R_{\rm GRB} \lesssim 1500$ km. For comparison, the radius of a neutron star is typically ∼12 km. In the case that the compact object is a black hole, the source size can be defined by the Schwarzschild radius:

$$R_{\rm Schwarzschild} = \frac{2GM}{c^2} \tag{2.4}$$

Requiring $R_{\rm Schwarzschild} \lesssim R_{\rm GRB}$ yields an upper limit on the possible mass of a GRB progenitor, supposing that it is a black hole:

$$M \lesssim \frac{c^2 R_{\rm GRB}}{2G} \sim 10^{33}\ {\rm kg} \sim 500\,M_\odot \tag{2.5}$$
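The arithmetic behind Eqs. 2.3 to 2.5 can be reproduced in a few lines. This sketch uses rounded SI constants and the 10 ms variability timescale quoted above; the function names are our own.

```python
C = 2.998e8         # speed of light [m/s]
G = 6.674e-11       # gravitational constant [m^3 kg^-1 s^-2]
M_SUN = 1.989e30    # solar mass [kg]


def max_source_radius(dt):
    """Causality bound of Eq. 2.3, R_GRB <~ c*dt/2, for a variability timescale dt [s]."""
    return C * dt / 2.0


def max_black_hole_mass(radius):
    """Largest mass whose Schwarzschild radius 2GM/c^2 (Eq. 2.4) fits within radius [m]."""
    return C**2 * radius / (2.0 * G)


r_grb = max_source_radius(0.01)
m_max = max_black_hole_mass(r_grb)
print(f"R_GRB <~ {r_grb / 1e3:.0f} km, M <~ {m_max:.1e} kg ~ {m_max / M_SUN:.0f} M_sun")
```

This gives $R_{\rm GRB}$ ≈ 1500 km and a maximum black hole mass of about $10^{33}$ kg, i.e. roughly 500 $M_\odot$.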

The isotropic distribution observed by BATSE (see Fig. 2.3) implies that GRBs are cosmological in nature. Coupled with the measured fluences $F_\gamma$ and the standard ΛCDM cosmology, this leads to high corresponding energies at the source, $E^{\rm iso}_\gamma \sim 10^{51}$–$10^{54}$ ergs. Given that GRB durations span from 0.1 s to >100 s, this results in luminosities $L^{\rm iso}_\gamma \sim 10^{49}$–$10^{52}$ erg/s. Here we assume isotropic emission of the energy. If we take the typical photon energy in a GRB to be the break energy $\epsilon_\gamma$⁵, then we can determine the number density of photons in the source:

$$n_\gamma = \frac{L^{\rm iso}_\gamma}{4\pi R_{\rm GRB}^2\, c\, \epsilon_\gamma (1+z)} \approx 1.5\times10^{30}\ {\rm cm^{-3}} \tag{2.6}$$

The above implies that the photons should be thermalized due to the high optical depth of the plasma, which is at odds with the observed high energy emission well above the pair production threshold. This is known as the compactness problem.

⁵ This is reasonable given that emission tends to peak at the break energy, and that most of the photons will be emitted at the peak.
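Eq. 2.6 can be evaluated in the same way. The inputs below are assumptions chosen for illustration rather than values quoted in the text: $L^{\rm iso}_\gamma = 10^{52}$ erg/s, $R_{\rm GRB}$ = 1500 km from the compactness bound, $\epsilon_\gamma$ = 250 keV, and z = 1. With these choices the estimate lands at the order of magnitude quoted in Eq. 2.6; individual bursts will of course differ.

```python
import math

C_CM = 2.998e10          # speed of light [cm/s]
ERG_PER_KEV = 1.602e-9   # 1 keV expressed in erg


def photon_density(l_iso, radius_cm, e_break_kev, z):
    """Eq. 2.6: n_gamma = L / (4 pi R^2 c eps (1 + z)) in cm^-3."""
    eps_source = e_break_kev * ERG_PER_KEV * (1.0 + z)   # photon energy in the source frame [erg]
    return l_iso / (4.0 * math.pi * radius_cm**2 * C_CM * eps_source)


# Assumed illustrative inputs: L = 1e52 erg/s, R = 1500 km, eps = 250 keV, z = 1.
n_gamma = photon_density(1e52, 1.5e8, 250.0, 1.0)
print(f"n_gamma ~ {n_gamma:.1e} cm^-3")
```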

To resolve this apparent crisis, we note that given the mass limit imposed by Eq. 2.5 and the observed luminosities of $O(10^{52})$ erg/s, the progenitor far exceeds the Eddington limit and thus must drive a wind from its surface. We consider the case in which the expansion is highly relativistic, so that the photons appear to be emitted in a cone of opening angle θ due to aberration. The geometry is illustrated in Fig. 2.5. The observed time delay between spectral features is now given by

$$\Delta t_{\rm obs} = \frac{\Delta t\,(c - v\cos\theta)}{c} \tag{2.7}$$

Figure 2.5: Effect of relativistic aberration on time variability (diagram: emission at angle θ to the line of sight from plasma moving with velocity v; marked path lengths Δt c, Δt v cos θ, and Δt(c − v cos θ)). The relativistically expanding plasma is beamed into an opening angle θ. An observer situated along the line of sight will observe a different time between spectral features than is intrinsic to the source.

We write the expansion velocity v in terms of the Lorentz factor Γ and Taylor expand (assuming large Γ) to get

$$v \approx \left(1 - \frac{1}{2\Gamma^2}\right)c \tag{2.8}$$

Similarly, the opening angle will be small, so the same technique may be applied to cos θ. Combining terms yields

$$\Delta t_{obs} \approx \Delta t\left(\frac{1}{2\Gamma^2} + \frac{\theta^2}{2}\right) \tag{2.9}$$

Since the beaming angle θ is inversely related to the Lorentz factor (θ ∼ 1/Γ), we arrive at

$$\Delta t_{obs} \approx \frac{\Delta t}{\Gamma^2} \tag{2.10}$$

$$R_{GRB} \approx c\,\Delta t_{obs}\,\Gamma^2 \tag{2.11}$$
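As a sketch of the approximations behind Eqs. 2.7–2.11, the code compares the exact compression factor $1 - \beta\cos\theta$ (writing $v = \beta c$) with its small-angle expansion at θ = 1/Γ, and then evaluates Eq. 2.11. The values Γ = 300 and $\Delta t_{obs}$ = 10 ms are illustrative assumptions. For θ = 1/Γ the exact factor differs from $1/\Gamma^2$ only at relative order $1/\Gamma^2$.

```python
import math

C_CM = 2.998e10  # speed of light [cm/s]


def doppler_compression(gamma: float, theta: float) -> float:
    """Exact ratio dt_obs/dt = 1 - beta*cos(theta), from Eq. 2.7 with v = beta*c."""
    beta = math.sqrt(1.0 - 1.0 / gamma**2)
    return 1.0 - beta * math.cos(theta)


def small_angle_compression(gamma: float, theta: float) -> float:
    """Expanded form of Eq. 2.9: 1/(2 Gamma^2) + theta^2/2."""
    return 1.0 / (2.0 * gamma**2) + theta**2 / 2.0


def emission_radius_cm(dt_obs: float, gamma: float) -> float:
    """Eq. 2.11: R_GRB ~ c * dt_obs * Gamma^2."""
    return C_CM * dt_obs * gamma**2


gamma = 300.0          # assumed bulk Lorentz factor
theta = 1.0 / gamma    # beaming angle ~ 1/Gamma
exact = doppler_compression(gamma, theta)
approx = small_angle_compression(gamma, theta)
print(f"exact {exact:.4e}, expanded {approx:.4e}, 1/Gamma^2 {1 / gamma**2:.4e}")
print(f"R_GRB for 10 ms at Gamma = 300: {emission_radius_cm(0.01, gamma):.1e} cm")
```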

Similarly, the intrinsic luminosity of the GRB is reduced by a factor Γ³ and the individual photon energies by a factor Γ. We determine that the plasma becomes optically thin for Γ > 100. Such a Lorentz factor also places the bulk of photons below the pair production threshold. Direct measurements by Molinari et al. [38] give Γ ∼ 400.

2.2.3 Emission Mechanism

Assuming now an outflow with Γ ≥ 100, the jet must collide with the ISM and slow down. While this external shock may account for the observed afterglow emission, the time and distance scales on which it occurs preclude it from being the cause of the prompt emission, as it cannot produce the observed temporal variability. We turn instead to internal shocks within the jet. While the jet moves with a bulk Lorentz factor Γ, individual ejections from the progenitor will have varying speeds. When faster shells overtake slower shells, shocks will occur. At these shock fronts, electrons may be accelerated via the Fermi mechanism (outlined in section 1.1.1). Due to the shock compression of the fluid and the high velocity of expansion, the acceleration is both very efficient and fast. These electrons then emit photons via synchrotron (and perhaps inverse Compton) radiation, resulting in the observed keV-GeV emission. Such an internal shock acceleration scenario accounts for both the rapid temporal variability and the power-law energy spectrum. It is natural that protons would also be accelerated in these shocks, potentially leading to neutrino production.

2.2.4 Collimated Emission - Reducing the Energy Budget

While the energy budget for GRBs is extreme, it may be reduced if the emission is collimated. In this case, the required energy is reduced by a factor Ω/4π, where Ω is the solid angle of the collimated jet. Collimation would be observable experimentally as a temporal break in the afterglow emission. This break arises from the interplay between relativistic beaming and the intrinsic collimation of the jet. The highly relativistic outflow from the GRB is Lorentz-beamed into an angle of 1/Γ. As the fireball propagates through the interstellar medium (ISM), it is slowed and the bulk Lorentz factor decreases, thus increasing the beaming angle. At some point, the outflow will have slowed enough that 1/Γ exceeds the opening angle of the jet, and a flux deficit will be observed as a steepening of the afterglow light curve. This jet break has a distinctive signature in several ways. First, it is achromatic; that is, since it is a purely hydrodynamic effect, it affects all wavelengths equally. Second, it does not impact the physics of the burst itself, so no change in the electron or photon spectral index is expected. Further details may be found in [39, 40].
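To illustrate the energy reduction, the sketch assumes a double-sided conical jet with half-opening angle $\theta_j$ (a symbol introduced here), for which $\Omega/4\pi = 1 - \cos\theta_j$; the values $E_{iso} = 10^{53}$ erg and $\theta_j = 0.1$ rad are hypothetical. It also evaluates the Lorentz factor $\Gamma \approx 1/\theta_j$ at which the beaming cone 1/Γ grows past the jet edge, i.e. roughly where the jet break is expected.

```python
import math


def beaming_fraction(theta_j: float) -> float:
    """Omega/4pi for an assumed two-sided jet of half-opening angle theta_j [rad]."""
    return 1.0 - math.cos(theta_j)


def collimation_corrected_energy(e_iso: float, theta_j: float) -> float:
    """True radiated energy E_gamma = (Omega/4pi) * E_iso."""
    return beaming_fraction(theta_j) * e_iso


def jet_break_gamma(theta_j: float) -> float:
    """Lorentz factor below which the beaming cone 1/Gamma exceeds theta_j."""
    return 1.0 / theta_j


e_iso = 1e53     # hypothetical isotropic-equivalent energy [erg]
theta_j = 0.1    # hypothetical half-opening angle [rad], about 5.7 degrees
print(f"Omega/4pi = {beaming_fraction(theta_j):.2e}")
print(f"E_gamma = {collimation_corrected_energy(e_iso, theta_j):.2e} erg")
print(f"jet break expected once Gamma drops below ~{jet_break_gamma(theta_j):.0f}")
```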

Clear evidence of multi-color optical breaks with consistent radio data was observed in the case of GRB990510 [41], among others (see Fig. 2.6). Based on the observed temporal jet breaks for a sample of several tens of bursts, Frail et al. [42] and Bloom et al. [43] calculated the corresponding jet collimation angles and GRB energies. They found that all GRBs in the sample were narrowly clustered around emission energies of $E_\gamma \sim 5\times10^{50}$–$1.3\times10^{51}$ ergs, as seen in Fig. 2.7. Thus, GRBs have an energy budget on par with bright supernovae, suggesting a possible connection between the two, and the observed variations in luminosity and fluence can be attributed to differences in the jet opening angle rather than to intrinsic properties. Furthermore, this suggests that the true rate of GRBs is much higher than observed, as we only detect bursts whose collimated emission points along our line of sight.

While the idea of beamed emission is attractive in that it reduces the energy budget of GRBs to levels more in line with other astrophysical phenomena (e.g. supernovae), recent data call these conclusions into question. It was expected that Swift, with its multi-wavelength observational capabilities, would measure jet breaks for many GRBs. This has not turned out to be the case, however: very few bursts display temporal breaks that are consistent with the jet break model. The current experimental and theoretical status of jet breaks is more fully covered in [44].


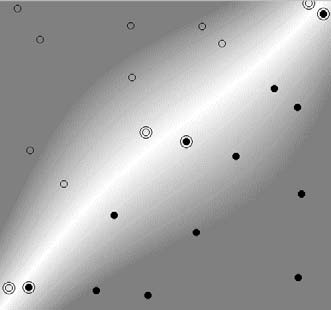


Figure 2.6: Observation of achromatic jet breaks in GRB990510. The wavelength independence of the break times agrees with the jet break model. (from [41])

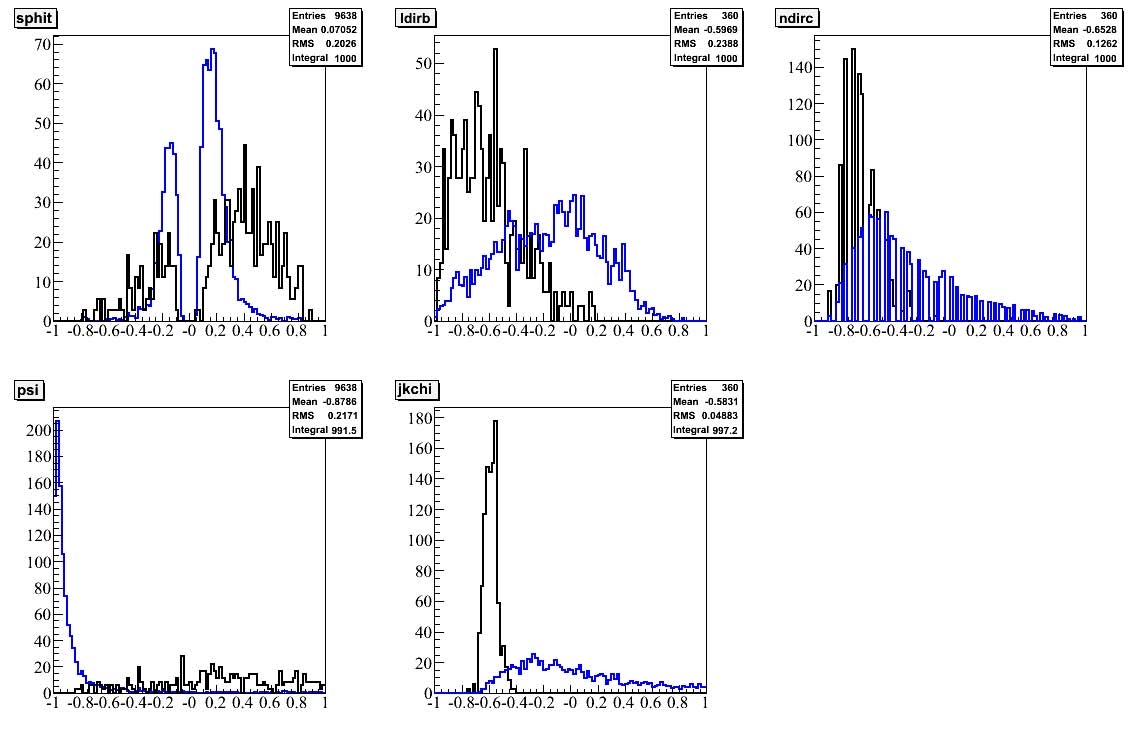


Figure 2.7: Calibrated GRB energy in the case of beamed emission. The upper panel shows the calculated isotropic equivalent energy for GRBs with measured redshift. The lower panel shows the energy after taking into account the jet collimation Ω calculated from jet break times. (from [42])

2.3 Neutrino Production

If hadrons (typically protons) are accelerated along with electrons in the fireball phenomenology described above, neutrinos will be produced in subsequent interactions. The energy spectra of these neutrinos will depend on the environment and the type of these interactions.

2.3.1 Prompt Neutrinos

Waxman and Bahcall showed that protons may interact with the prompt-emission gamma-rays of the internal shocks to produce pions via the Δ resonance [45]:

$$p + \gamma \rightarrow \Delta \rightarrow n + \pi^+ \tag{2.12}$$

$$p + \gamma \rightarrow \Delta \rightarrow p + \pi^0 \tag{2.13}$$

Charged pions decay to neutrinos and associated leptons, while neutral pions produce high energy photons.⁶

$$\pi^+ \rightarrow \mu^+ + \nu_\mu \tag{2.14}$$

$$\mu^+ \rightarrow \bar\nu_\mu + e^+ + \nu_e \tag{2.15}$$

$$\pi^0 \rightarrow \gamma + \gamma \tag{2.16}$$

These processes result in a neutrino flavor ratio at the source of $(\nu_e : \nu_\mu : \nu_\tau) = (1:2:0)$.⁷

⁶ This TeV-scale gamma emission is the target of GRB searches by Imaging Air Čerenkov Telescopes such as MAGIC, as well as by surface arrays such as MILAGRO.

⁷ Here, and in the rest of this thesis, we treat neutrinos and antineutrinos equally. The minor differences in cross-section are taken into account in signal weighting, but we shall not explicitly differentiate.

Eq. 2.12 indicates that the produced neutrino spectra should track the γ-ray spectra of Eq. 2.1, as the combined center-of-mass energy of the interacting proton and photon must exceed the threshold for production of the Δ resonance:

$$E'_p \geq \frac{m_\Delta^2 - m_p^2}{4E'_\gamma} \tag{2.17}$$

where primes indicate the co-moving frame of the fireball. We estimate that each of the four final-state particles in the π⁺ decay chain given by Eqs. 2.14 and 2.15 carries equal energy, and that on average a fraction $\langle x_{p\to\pi}\rangle \simeq 0.2$ of the proton energy is transferred to pions in each interaction. Thus each neutrino is expected to carry ∼5% of the proton energy.
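The bookkeeping behind the (1:2:0) flavor ratio and the ∼5% energy estimate can be written out explicitly. This is a minimal sketch: the particle labels are ad hoc strings chosen for illustration, antineutrinos are counted with neutrinos as in footnote 7, and the equal energy split among the four final-state particles is the same rough assumption made in the text.

```python
from collections import Counter
from fractions import Fraction

# Final states of the pi+ chain (Eqs. 2.14-2.15); labels are illustrative strings.
PION_CHAIN = ["nu_mu", "nu_mu_bar", "e+", "nu_e"]


def flavor_ratio(final_states):
    """Return (nu_e, nu_mu, nu_tau) counts, treating nu and nu_bar alike."""
    counts = Counter()
    for particle in final_states:
        if particle.startswith("nu_"):
            flavor = particle.replace("_bar", "")[3:]  # "nu_mu_bar" -> "mu"
            counts[flavor] += 1
    return (counts["e"], counts["mu"], counts["tau"])


def neutrino_energy_fraction(x_p_to_pi=Fraction(1, 5), n_final=4):
    """Proton energy fraction per neutrino, assuming an equal split among n_final particles."""
    return x_p_to_pi / n_final


print("flavor ratio (nu_e, nu_mu, nu_tau) =", flavor_ratio(PION_CHAIN))
print("energy per neutrino / proton energy =", float(neutrino_energy_fraction()))
```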

The condition of Eq. 2.17 indicates that interactions of protons with high energy photons will

produce lower energy neutrinos, and vice versa. For each proton energy, then, the neutrino spectrum

traces the broken power law of Eq. 2.1. Assuming protons are accelerated with the same spectrum

as electrons, we then sum over proton energies to find the resultant neutrino spectra.

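$$\frac{dN(E_\nu)}{dE_\nu} \propto \begin{cases} E_\nu^{-\alpha_\nu} & \text{for } E_\nu < \epsilon^b_\nu; \quad \alpha_\nu = 3 - \beta_\gamma \\ E_\nu^{-\beta_\nu} & \text{for } \epsilon^b_\nu \le E_\nu < \epsilon^s_\nu; \quad \beta_\nu = 3 - \alpha_\gamma \end{cases} \tag{2.18}$$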

We relate the break in the neutrino spectrum to that of the observed photons by converting

Eq. 2.17 to the observer’s frame.

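$$\epsilon^b_\nu = \frac{1}{4}\langle x_{p\to\pi}\rangle\,\frac{m_\Delta^2 - m_p^2}{4\epsilon_\gamma}\,\frac{\Gamma^2}{(1+z)^2} = 7.5\times10^5\ \mathrm{GeV}\,\frac{1}{(1+z)^2}\left(\frac{\Gamma}{10^{2.5}}\right)^2\left(\frac{\mathrm{MeV}}{\epsilon_\gamma}\right) \tag{2.19}$$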
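The sketch evaluates the threshold of Eq. 2.17 and the break energy of Eq. 2.19. The Δ(1232) and proton masses are standard values assumed here. With them and the fiducial parameters ($\epsilon_\gamma$ = 1 MeV, Γ = $10^{2.5}$, z = 0, $\langle x_{p\to\pi}\rangle$ = 0.2) the break comes out near $8\times10^5$ GeV, close to the $7.5\times10^5$ GeV normalization quoted in Eq. 2.19; the small difference depends on the mass values and rounding used.

```python
M_DELTA = 1.232     # Delta(1232) mass [GeV], assumed standard value
M_P = 0.938272      # proton mass [GeV], assumed standard value


def proton_threshold_comoving(eps_gamma_comoving_gev: float) -> float:
    """Eq. 2.17: minimum co-moving proton energy [GeV] for a co-moving photon energy [GeV]."""
    return (M_DELTA**2 - M_P**2) / (4.0 * eps_gamma_comoving_gev)


def neutrino_break_energy(eps_gamma_mev: float, gamma: float, z: float,
                          x_p_to_pi: float = 0.2) -> float:
    """Eq. 2.19: observed neutrino break energy [GeV] for an observed photon break energy [MeV]."""
    eps_gamma_gev = eps_gamma_mev * 1e-3
    return (0.25 * x_p_to_pi * (M_DELTA**2 - M_P**2) / (4.0 * eps_gamma_gev)
            * gamma**2 / (1.0 + z) ** 2)


eb = neutrino_break_energy(eps_gamma_mev=1.0, gamma=10**2.5, z=0.0)
print(f"E'_p threshold for E'_gamma = 1 MeV: {proton_threshold_comoving(1e-3):.0f} GeV")
print(f"eps_b_nu ~ {eb:.2e} GeV")
```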

At high energy, the lifetimes of the produced pions and muons, $\tau'_{\pi,\mu}$, exceed the synchrotron loss time $t'_s$. This introduces a further cooling break into the neutrino spectrum. $t'_s$ depends on the energy density of the magnetic field in the jet, $U'_B = B'^2/8\pi$, and for pions is given by:

$$t'_s = \frac{3 m_\pi^4 c^3}{4\sigma_T m_e^2 E'_\pi U'_B} \tag{2.20}$$

where $\sigma_T = 0.665\times10^{-24}\ \mathrm{cm^2}$ is the Thomson cross-section. The magnetic energy density can be related to the fraction of the internal energy of the plasma that is carried by the magnetic field. We denote this fraction by $\xi_B$ and write

$$4\pi R^2 c\,\Gamma^2 U'_B = \xi_B L_{int} \tag{2.21}$$

We determine the collision radius R by noting that the colliding shells of the jet have velocities that vary by $\Delta v \sim c/2\Gamma^2$, where, as previously, Γ represents the average Lorentz factor of the fireball. Given the observed time variability $t_{var}$, these shells then collide at a time $t_c \sim c\,t_{var}/\Delta v$, which corresponds to a radius $R \simeq 2\Gamma^2 c\,t_{var}$. The total luminosity is related to the observed photon luminosity through the electron energy fraction of the jet, $L_{int} = L^{iso}_\gamma/\xi_e$ [46, 47].
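Solving Eq. 2.21 for the co-moving magnetic energy density, with $R \simeq 2\Gamma^2 c\,t_{var}$ and $L_{int} = L^{iso}_\gamma/\xi_e$ as in the text, gives a feel for the field strength in the collision region. The inputs ($L^{iso}_\gamma = 10^{52}$ erg/s, Γ = $10^{2.5}$, $t_{var}$ = 0.01 s, $\xi_e = \xi_B = 0.1$) are the fiducial values appearing in Eq. 2.23 and the discussion after it; the resulting $B' \sim 4\times10^4$ G is only an order-of-magnitude illustration.

```python
import math

C_CM = 2.998e10  # speed of light [cm/s]


def collision_radius_cm(gamma: float, t_var: float) -> float:
    """R ~ 2 Gamma^2 c t_var for shells whose speeds differ by ~c/(2 Gamma^2)."""
    return 2.0 * gamma**2 * C_CM * t_var


def comoving_magnetic_energy_density(l_iso: float, gamma: float, t_var: float,
                                     xi_e: float = 0.1, xi_b: float = 0.1) -> float:
    """Solve Eq. 2.21 for U'_B [erg/cm^3], using L_int = L_iso / xi_e."""
    r = collision_radius_cm(gamma, t_var)
    l_int = l_iso / xi_e
    return xi_b * l_int / (4.0 * math.pi * r**2 * C_CM * gamma**2)


u_b = comoving_magnetic_energy_density(l_iso=1e52, gamma=10**2.5, t_var=0.01)
b_field = math.sqrt(8.0 * math.pi * u_b)   # invert U'_B = B'^2 / (8 pi), in gauss
print(f"R = {collision_radius_cm(10**2.5, 0.01):.2e} cm")
print(f"U'_B = {u_b:.2e} erg/cm^3, B' = {b_field:.2e} G")
```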

The pion lifetime is given by

$$\tau'_\pi = \tau^0_\pi\,\frac{E'_\pi}{m_\pi c^2} = 2.6\times10^{-8}\ \mathrm{s}\,\frac{E'_\pi}{m_\pi c^2} \tag{2.22}$$

Equating Eq. 2.20 with Eq. 2.22 yields the pion energy at which synchrotron losses begin to suppress the neutrino emission. Recalling that each $\nu_\mu$ carries 1/4 of the pion energy, and converting to the observer's frame, we find

$$\epsilon^s_\nu = 10^7\ \mathrm{GeV}\,\frac{1}{1+z}\sqrt{\frac{\xi_e}{\xi_B}}\left(\frac{\Gamma}{10^{2.5}}\right)^4\left(\frac{t_{var}}{0.01\ \mathrm{s}}\right)\sqrt{\frac{10^{52}\ \mathrm{erg/s}}{L^{iso}_\gamma}} \tag{2.23}$$
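A direct evaluation of the scaling relation Eq. 2.23, taking its $10^7$ GeV normalization as given rather than re-deriving it, makes the steep $\Gamma^4$ dependence explicit. The muon break is placed a factor of 10 lower, following the statement in the text below; the parameter values are the fiducial ones of the equation and are purely illustrative.

```python
import math


def synchrotron_break_gev(l_iso: float, gamma: float, t_var: float, z: float,
                          xi_e: float = 0.1, xi_b: float = 0.1) -> float:
    """Eq. 2.23: neutrino energy [GeV] above which pion synchrotron cooling steepens the spectrum."""
    return (1e7 / (1.0 + z)
            * math.sqrt(xi_e / xi_b)
            * (gamma / 10**2.5) ** 4
            * (t_var / 0.01)
            * math.sqrt(1e52 / l_iso))


fiducial = synchrotron_break_gev(l_iso=1e52, gamma=10**2.5, t_var=0.01, z=0.0)
doubled_gamma = synchrotron_break_gev(l_iso=1e52, gamma=2 * 10**2.5, t_var=0.01, z=0.0)
muon_break = fiducial / 10.0   # muon-decay neutrinos: break a factor of 10 lower (per the text)
print(f"pion break {fiducial:.1e} GeV, muon break {muon_break:.1e} GeV")
print(f"doubling Gamma multiplies the break by {doubled_gamma / fiducial:.0f}")
```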

We note that in the above the equipartition fractions $\xi_e$ and $\xi_B$ are not measured, and no good theoretical method exists for estimating them. We set each of them to 0.1 in all subsequent calculations, an estimate supported by afterglow observations [48]. Muons live about 100 times longer than pions, and thus the energy scale at which their decay products ($\bar\nu_\mu$ and $\nu_e$) begin to be suppressed is a factor of 10 lower. The decay probability is approximately given by the ratio of the cooling time to the decay time, $t'_s/\tau' \propto E_\nu^{-2}$, and thus we expect the neutrino spectrum to steepen by two powers in energy above $\epsilon^s_\nu$ ($\gamma_\nu = \beta_\nu + 2$). Combining with Eq. 2.18, we arrive at

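$$\frac{dN(E_\nu)}{dE_\nu} = f_\nu \times \begin{cases} (\epsilon^b_\nu)^{\alpha_\nu - \beta_\nu}\,E_\nu^{-\alpha_\nu} & \text{for } E_\nu < \epsilon^b_\nu \\ E_\nu^{-\beta_\nu} & \text{for } \epsilon^b_\nu \le E_\nu < \epsilon^s_\nu \\ (\epsilon^s_\nu)^{\gamma_\nu - \beta_\nu}\,E_\nu^{-\gamma_\nu} & \text{for } E_\nu \ge \epsilon^s_\nu \end{cases} \tag{2.24}$$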
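The broken power law of Eq. 2.24 can be coded directly; the prefactors $(\epsilon^b_\nu)^{\alpha_\nu-\beta_\nu}$ and $(\epsilon^s_\nu)^{\gamma_\nu-\beta_\nu}$ make it continuous at both breaks. The photon indices $\alpha_\gamma = 1$ and $\beta_\gamma = 2$, the break energies, and $f_\nu = 1$ are hypothetical placeholders, not values from this analysis. With these choices $E_\nu^2\,dN/dE_\nu$ rises as $E_\nu$ below $\epsilon^b_\nu$, is flat between the breaks, and falls as $E_\nu^{-2}$ above $\epsilon^s_\nu$.

```python
def neutrino_spectrum(e_nu, f_nu, eps_b, eps_s, alpha_nu, beta_nu, gamma_nu):
    """Broken power law of Eq. 2.24, continuous at eps_b and eps_s."""
    if e_nu < eps_b:
        return f_nu * eps_b ** (alpha_nu - beta_nu) * e_nu ** (-alpha_nu)
    if e_nu < eps_s:
        return f_nu * e_nu ** (-beta_nu)
    return f_nu * eps_s ** (gamma_nu - beta_nu) * e_nu ** (-gamma_nu)


# Hypothetical photon indices, used only for illustration.
alpha_gamma, beta_gamma = 1.0, 2.0
alpha_nu = 3.0 - beta_gamma    # Eq. 2.18
beta_nu = 3.0 - alpha_gamma    # Eq. 2.18
gamma_nu = beta_nu + 2.0       # steepening by two powers above eps_s
params = dict(f_nu=1.0, eps_b=8e5, eps_s=1e7,
              alpha_nu=alpha_nu, beta_nu=beta_nu, gamma_nu=gamma_nu)

for e in (1e4, 8e5, 1e6, 1e7, 1e8):
    print(f"E = {e:.0e} GeV: E^2 dN/dE = {e**2 * neutrino_spectrum(e, **params):.3e}")
```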

It now remains to determine the normalization $f_\nu$. The neutrino fluence is proportional to the measured gamma-ray fluence $F_\gamma$:

$$\int dE_\nu\,E_\nu\,\frac{dN(E_\nu)}{dE_\nu} = x \cdot \int dE_\gamma\,E_\gamma\,\frac{dN(E_\gamma)}{dE_\gamma} = x \cdot F_\gamma \tag{2.25}$$

The constant of proportionality x contains several terms. It includes the fraction of jet proton energy lost to pion production, $f_\pi$; a factor $1/f_e$ that accounts for the fraction of total energy expected in protons relative to electrons; and a factor 1/8. This final term arises because only half of the photomeson interactions produce neutrinos (the others produce π⁰), and each of those reactions creates four final-state leptons. $f_\pi$ can be estimated from the size of the shocks and the mean free path for photomeson interactions.

$$f_\pi \propto \frac{\Delta R'}{\lambda_{p\gamma}} = N_{int} \tag{2.26}$$

The number of interactions is related to the photon energy density, which is in turn a function of the GRB luminosity in the comoving frame.⁸ We follow the derivation of [46] and reformulate to ensure an energy transfer ≤ 1:

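$$f_\pi = 1 - \left(1 - \langle x_{p\to\pi}\rangle\right)^{N_{int}} \tag{2.27}$$

$$N_{int} = \left(\frac{L^{iso}_\gamma}{10^{52}\ \mathrm{erg/s}}\right)\left(\frac{0.01\ \mathrm{s}}{t_{var}}\right)\left(\frac{10^{2.5}}{\Gamma}\right)^4\left(\frac{\mathrm{MeV}}{\epsilon_\gamma}\right) \tag{2.28}$$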
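Eqs. 2.27 and 2.28 combine into a small function. The sketch uses the fiducial $\langle x_{p\to\pi}\rangle = 0.2$ and illustrative burst parameters. It shows that $f_\pi$ grows roughly linearly, as $-N_{int}\ln(1-\langle x_{p\to\pi}\rangle)$, when $N_{int} \ll 1$, while for large $N_{int}$ it saturates at 1 instead of exceeding it.

```python
def n_interactions(l_iso: float, t_var: float, gamma: float, eps_gamma_mev: float) -> float:
    """Eq. 2.28 scaling for the number of photomeson interactions."""
    return (l_iso / 1e52) * (0.01 / t_var) * (10**2.5 / gamma) ** 4 * (1.0 / eps_gamma_mev)


def pion_fraction(n_int: float, x_p_to_pi: float = 0.2) -> float:
    """Eq. 2.27: f_pi = 1 - (1 - <x_p->pi>)^N_int, bounded by 1 for any N_int."""
    return 1.0 - (1.0 - x_p_to_pi) ** n_int


# Illustrative bursts differing only in Lorentz factor.
for gamma in (100.0, 10**2.5, 1000.0):
    n = n_interactions(l_iso=1e52, t_var=0.01, gamma=gamma, eps_gamma_mev=1.0)
    print(f"Gamma = {gamma:7.1f}: N_int = {n:8.3f}, f_pi = {pion_fraction(n):.3f}")
```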

⁸ Here we note that we assume isotropic emission, but also divide by a spherical shell. In the case of beamed emission, the luminosity decreases, but this cancels with the decreased solid angle.

An analytical approximation of the integration over neutrino energies yields a simple relationship between the neutrino flux normalization and the gamma-ray fluence:

$$f_\nu \approx \frac{1}{8}\,\frac{1}{f_e}\,\frac{f_\pi}{\ln(10)}\,F_\gamma \tag{2.29}$$
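One way to see where the $\ln(10)$ in Eq. 2.29 can come from (an interpretation, not stated in the text) is to assume the flat $E^{-2}$ segment of Eq. 2.24 spans about one decade: then $\int E_\nu\,f_\nu E_\nu^{-2}\,dE_\nu = f_\nu\ln 10$, and Eq. 2.25 with $x = f_\pi/(8 f_e)$ gives Eq. 2.29. The sketch checks this numerically; $F_\gamma = 10^{-5}$ erg/cm², $f_\pi = 0.2$ and $f_e = 0.1$ are assumed values.

```python
import math


def flux_normalization(f_gamma: float, f_pi: float, f_e: float = 0.1) -> float:
    """Eq. 2.29: f_nu ~ (1/8)(1/f_e) f_pi F_gamma / ln(10)."""
    return f_pi * f_gamma / (8.0 * f_e * math.log(10.0))


def energy_fluence_over_decade(f_nu: float, e_lo: float, n_steps: int = 10_000) -> float:
    """Midpoint-rule integral of E * f_nu * E^-2 dE from e_lo to 10*e_lo, stepping in ln E."""
    total = 0.0
    dlog = math.log(10.0) / n_steps
    for i in range(n_steps):
        e = e_lo * math.exp((i + 0.5) * dlog)
        total += e * f_nu * e**-2 * e * dlog   # dE = E d(ln E)
    return total


f_gamma = 1e-5   # assumed gamma-ray fluence [erg/cm^2]
f_pi = 0.2       # assumed pion-production fraction
f_nu = flux_normalization(f_gamma, f_pi)
x = f_pi / (8.0 * 0.1)
ratio = energy_fluence_over_decade(f_nu, 1e5) / (x * f_gamma)
print(f"f_nu = {f_nu:.3e} erg/cm^2; decade integral / (x F_gamma) = {ratio:.6f}")
```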

A quasi-diffuse flux may be determined by summing over all bursts in a year and dividing by

the full sky solid angle. This was done by Waxman and Bahcall, assuming each GRB followed a

spectrum calculated from averages of the BATSE population [49]. Assuming 1000 GRBs per year

based on the BATSE rates, they calculated the diffuse flux shown in Fig. 2.8. This will hereafter be

referred to as the Waxman-Bahcall prompt GRB flux. The normalization is comparable to what one

would get assuming that all the ultra-high energy cosmic rays originate from GRBs. The average

parameters used in this calculation are shown in Table 2.1.
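The summation described above reduces to simple arithmetic if every burst is assigned the same $E^2$-weighted fluence: multiply by the yearly burst rate, then divide by $4\pi$ sr and by the number of seconds in a year. This is a simplified sketch; the per-burst value below is a hypothetical placeholder, not one of the Waxman-Bahcall parameters of Table 2.1.

```python
import math

SECONDS_PER_YEAR = 3.156e7   # approximate length of a year [s]
ERG_TO_GEV = 624.15          # 1 erg in GeV


def quasi_diffuse_flux(per_burst_e2_fluence_gev_cm2: float,
                       bursts_per_year: float = 1000.0) -> float:
    """Spread a year of identical bursts over the full sky.

    Returns E^2 Phi in GeV cm^-2 s^-1 sr^-1.
    """
    return bursts_per_year * per_burst_e2_fluence_gev_cm2 / (4.0 * math.pi * SECONDS_PER_YEAR)


per_burst = 1e-6 * ERG_TO_GEV   # hypothetical per-burst E^2 dN/dE normalization [GeV/cm^2]
flux = quasi_diffuse_flux(per_burst)
print(f"E^2 Phi ~ {flux:.1e} GeV cm^-2 s^-1 sr^-1 for 1000 bursts/yr")
```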

[Plot: diffuse muon-neutrino flux $E_\nu^2\Phi_\nu$ in log10(GeV cm⁻² s⁻¹ sr⁻¹) versus E in log10(GeV), for 10³ bursts/yr; curve labels include WB Limit, ICECUBE, atmospheric ν, pp and pγ (shocks), pγ (head), and (stellar) H and He.]

FIG. 1: Diffuse muon-neutrino flux $E_\nu^2\Phi_\nu\,\epsilon_{op}^{-1}$

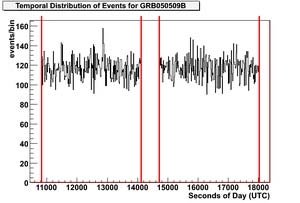

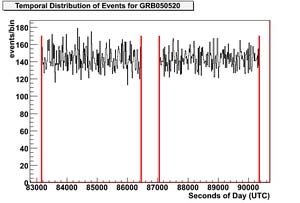

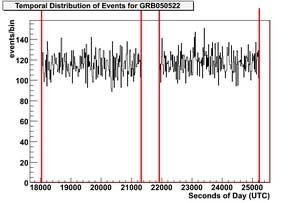

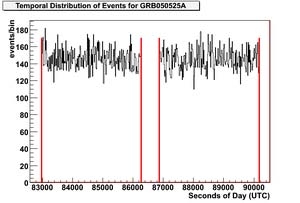

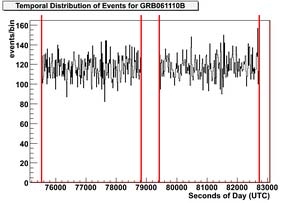

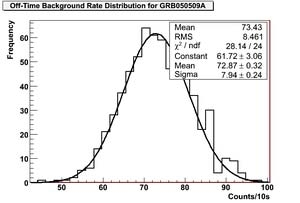

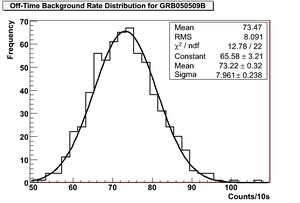

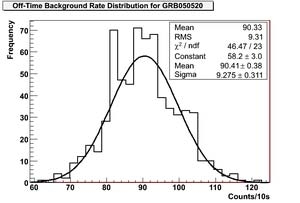

, shown as solid