The IceCube Collaboration:

Contributions to the 31st International Cosmic Ray Conference*

Łódź, Poland, 7-15 July 2009

------------------

* Includes some related papers submitted by individual members of the Collaboration


PROCEEDINGS OF THE 31st ICRC, ŁÓDŹ 2009

IceCube COLLABORATION

R. Abbasi (24), Y. Abdou (18), M. Ackermann (36), J. Adams (13), J. Aguilar (24), M. Ahlers (28), K. Andeen (24),
J. Auffenberg (35), X. Bai (27), M. Baker (24), S. W. Barwick (20), R. Bay (7), J. L. Bazo Alba (36), K. Beattie (8),
J. J. Beatty (15,16), S. Bechet (10), J. K. Becker (17), K.-H. Becker (35), M. L. Benabderrahmane (36),
J. Berdermann (36), P. Berghaus (24), D. Berley (14), E. Bernardini (36), D. Bertrand (10), D. Z. Besson (22),
M. Bissok (1), E. Blaufuss (14), D. J. Boersma (24), C. Bohm (30), J. Bolmont (36), S. Böser (36), O. Botner (33),
L. Bradley (32), J. Braun (24), D. Breder (35), T. Castermans (26), D. Chirkin (24), B. Christy (14), J. Clem (27),
S. Cohen (21), D. F. Cowen (32,31), M. V. D'Agostino (7), M. Danninger (30), C. T. Day (8), C. De Clercq (11),
L. Demirörs (21), O. Depaepe (11), F. Descamps (18), P. Desiati (24), G. de Vries-Uiterweerd (18), T. DeYoung (32),
J. C. Diaz-Velez (24), J. Dreyer (17), J. P. Dumm (24), M. R. Duvoort (34), W. R. Edwards (8), R. Ehrlich (14),
J. Eisch (24), R. W. Ellsworth (14), O. Engdegård (33), S. Euler (1), P. A. Evenson (27), O. Fadiran (4),
A. R. Fazely (6), T. Feusels (18), K. Filimonov (7), C. Finley (24), M. M. Foerster (32), B. D. Fox (32),
A. Franckowiak (9), R. Franke (36), T. K. Gaisser (27), J. Gallagher (23), R. Ganugapati (24), L. Gerhardt (8,7),
L. Gladstone (24), A. Goldschmidt (8), J. A. Goodman (14), R. Gozzini (25), D. Grant (32), T. Griesel (25),
A. Groß (13,19), S. Grullon (24), R. M. Gunasingha (6), M. Gurtner (35), C. Ha (32), A. Hallgren (33),
F. Halzen (24), K. Han (13), K. Hanson (24), Y. Hasegawa (12), J. Heise (34), K. Helbing (35), P. Herquet (26),
S. Hickford (13), G. C. Hill (24), K. D. Hoffman (14), K. Hoshina (24), D. Hubert (11), W. Huelsnitz (14),
J.-P. Hülß (1), P. O. Hulth (30), K. Hultqvist (30), S. Hussain (27), R. L. Imlay (6), M. Inaba (12), A. Ishihara (12),
J. Jacobsen (24), G. S. Japaridze (4), H. Johansson (30), J. M. Joseph (8), K.-H. Kampert (35), A. Kappes (24,a),
T. Karg (35), A. Karle (24), J. L. Kelley (24), P. Kenny (22), J. Kiryluk (8,7), F. Kislat (36), S. R. Klein (8,7),
S. Klepser (36), S. Knops (1), G. Kohnen (26), H. Kolanoski (9), L. Köpke (25), M. Kowalski (9), T. Kowarik (25),
M. Krasberg (24), K. Kuehn (15), T. Kuwabara (27), M. Labare (10), S. Lafebre (32), K. Laihem (1),
H. Landsman (24), R. Lauer (36), H. Leich (36), D. Lennarz (1), A. Lucke (9), J. Lundberg (33), J. Lünemann (25),
J. Madsen (29), P. Majumdar (36), R. Maruyama (24), K. Mase (12), H. S. Matis (8), C. P. McParland (8),
K. Meagher (14), M. Merck (24), P. Mészáros (31,32), E. Middell (36), N. Milke (17), H. Miyamoto (12), A. Mohr (9),
T. Montaruli (24,b), R. Morse (24), S. M. Movit (31), K. Münich (17), R. Nahnhauer (36), J. W. Nam (20),
P. Nießen (27), D. R. Nygren (8,30), S. Odrowski (19), A. Olivas (14), M. Olivo (33), M. Ono (12), S. Panknin (9),
S. Patton (8), C. Pérez de los Heros (33), J. Petrovic (10), A. Piegsa (25), D. Pieloth (36), A. C. Pohl (33,c),
R. Porrata (7), N. Potthoff (35), P. B. Price (7), M. Prikockis (32), G. T. Przybylski (8), K. Rawlins (3), P. Redl (14),
E. Resconi (19), W. Rhode (17), M. Ribordy (21), A. Rizzo (11), J. P. Rodrigues (24), P. Roth (14), F. Rothmaier (25),
C. Rott (15), C. Roucelle (19), D. Rutledge (32), D. Ryckbosch (18), H.-G. Sander (25), S. Sarkar (28),
K. Satalecka (36), S. Schlenstedt (36), T. Schmidt (14), D. Schneider (24), A. Schukraft (1), O. Schulz (19),
M. Schunck (1), D. Seckel (27), B. Semburg (35), S. H. Seo (30), Y. Sestayo (19), S. Seunarine (13), A. Silvestri (20),
A. Slipak (32), G. M. Spiczak (29), C. Spiering (36), M. Stamatikos (15), T. Stanev (27), G. Stephens (32),
T. Stezelberger (8), R. G. Stokstad (8), M. C. Stoufer (8), S. Stoyanov (27), E. A. Strahler (24),
T. Straszheim (14), K.-H. Sulanke (36), G. W. Sullivan (14), Q. Swillens (10), I. Taboada (5), O. Tarasova (36),
A. Tepe (35), S. Ter-Antonyan (6), C. Terranova (21), S. Tilav (27), M. Tluczykont (36), P. A. Toale (32), D. Tosi (36),
D. Turčan (14), N. van Eijndhoven (34), J. Vandenbroucke (7), A. Van Overloop (18), B. Voigt (36),
C. Walck (30), T. Waldenmaier (9), M. Walter (36), C. Wendt (24), S. Westerhoff (24), N. Whitehorn (24),
C. H. Wiebusch (1), A. Wiedemann (17), G. Wikström (30), D. R. Williams (2), R. Wischnewski (36),
H. Wissing (1,14), K. Woschnagg (7), X. W. Xu (6), G. Yodh (20), S. Yoshida (12)

(1) III Physikalisches Institut, RWTH Aachen University, D-52056 Aachen, Germany
(2) Dept. of Physics and Astronomy, University of Alabama, Tuscaloosa, AL 35487, USA
(3) Dept. of Physics and Astronomy, University of Alaska Anchorage, 3211 Providence Dr., Anchorage, AK 99508, USA
(4) CTSPS, Clark-Atlanta University, Atlanta, GA 30314, USA
(5) School of Physics and Center for Relativistic Astrophysics, Georgia Institute of Technology, Atlanta, GA 30332, USA
(6) Dept. of Physics, Southern University, Baton Rouge, LA 70813, USA
(7) Dept. of Physics, University of California, Berkeley, CA 94720, USA
(8) Lawrence Berkeley National Laboratory, Berkeley, CA 94720, USA
(9) Institut für Physik, Humboldt-Universität zu Berlin, D-12489 Berlin, Germany
(10) Université Libre de Bruxelles, Science Faculty CP230, B-1050 Brussels, Belgium
(11) Vrije Universiteit Brussel, Dienst ELEM, B-1050 Brussels, Belgium
(12) Dept. of Physics, Chiba University, Chiba 263-8522, Japan


(13) Dept. of Physics and Astronomy, University of Canterbury, Private Bag 4800, Christchurch, New Zealand
(14) Dept. of Physics, University of Maryland, College Park, MD 20742, USA
(15) Dept. of Physics and Center for Cosmology and Astro-Particle Physics, Ohio State University, Columbus, OH 43210, USA
(16) Dept. of Astronomy, Ohio State University, Columbus, OH 43210, USA
(17) Dept. of Physics, TU Dortmund University, D-44221 Dortmund, Germany
(18) Dept. of Subatomic and Radiation Physics, University of Gent, B-9000 Gent, Belgium
(19) Max-Planck-Institut für Kernphysik, D-69177 Heidelberg, Germany
(20) Dept. of Physics and Astronomy, University of California, Irvine, CA 92697, USA
(21) Laboratory for High Energy Physics, École Polytechnique Fédérale, CH-1015 Lausanne, Switzerland
(22) Dept. of Physics and Astronomy, University of Kansas, Lawrence, KS 66045, USA
(23) Dept. of Astronomy, University of Wisconsin, Madison, WI 53706, USA
(24) Dept. of Physics, University of Wisconsin, Madison, WI 53706, USA
(25) Institute of Physics, University of Mainz, Staudinger Weg 7, D-55099 Mainz, Germany
(26) University of Mons-Hainaut, 7000 Mons, Belgium
(27) Bartol Research Institute and Department of Physics and Astronomy, University of Delaware, Newark, DE 19716, USA
(28) Dept. of Physics, University of Oxford, 1 Keble Road, Oxford OX1 3NP, UK
(29) Dept. of Physics, University of Wisconsin, River Falls, WI 54022, USA
(30) Oskar Klein Centre and Dept. of Physics, Stockholm University, SE-10691 Stockholm, Sweden
(31) Dept. of Astronomy and Astrophysics, Pennsylvania State University, University Park, PA 16802, USA
(32) Dept. of Physics, Pennsylvania State University, University Park, PA 16802, USA
(33) Dept. of Physics and Astronomy, Uppsala University, Box 516, S-75120 Uppsala, Sweden
(34) Dept. of Physics and Astronomy, Utrecht University/SRON, NL-3584 CC Utrecht, The Netherlands
(35) Dept. of Physics, University of Wuppertal, D-42119 Wuppertal, Germany
(36) DESY, D-15735 Zeuthen, Germany
(a) affiliated with Universität Erlangen-Nürnberg, Physikalisches Institut, D-91058, Erlangen, Germany
(b) on leave of absence from Università di Bari and Sezione INFN, Dipartimento di Fisica, I-70126, Bari, Italy
(c) affiliated with School of Pure and Applied Natural Sciences, Kalmar University, S-39182 Kalmar, Sweden

Acknowledgments

We acknowledge the support from the following agencies: U.S. National Science Foundation-Office of Polar Program, U.S. National Science Foundation-Physics Division, University of Wisconsin Alumni Research Foundation, U.S. Department of Energy, and National Energy Research Scientific Computing Center, the Louisiana Optical Network Initiative (LONI) grid computing resources; Swedish Research Council, Swedish Polar Research Secretariat, and Knut and Alice Wallenberg Foundation, Sweden; German Ministry for Education and Research (BMBF), Deutsche Forschungsgemeinschaft (DFG), Germany; Fund for Scientific Research (FNRS-FWO), Flanders Institute to encourage scientific and technological research in industry (IWT), Belgian Federal Science Policy Office (Belspo); the Netherlands Organisation for Scientific Research (NWO); M. Ribordy acknowledges the support of the SNF (Switzerland); A. Kappes and A. Groß acknowledge support by the EU Marie Curie OIF Program; J. P. Rodrigues acknowledges support by the Capes Foundation, Ministry of Education of Brazil.

1339: IceCube
Albrecht Karle, for the IceCube Collaboration (Highlight paper)

0653: All-Sky Point-Source Search with 40 Strings of IceCube
Jon Dumm, Juan A. Aguilar, Mike Baker, Chad Finley, Teresa Montaruli, for the IceCube Collaboration

0812: IceCube Time-Dependent Point Source Analysis Using Multiwavelength Information
M. Baker, J. A. Aguilar, J. Braun, J. Dumm, C. Finley, T. Montaruli, S. Odrowski, E. Resconi for the IceCube Collaboration

0960: Search for neutrino flares from point sources with IceCube (0908.4209)
J. L. Bazo Alba, E. Bernardini, R. Lauer, for the IceCube Collaboration

0987: Neutrino triggered high-energy gamma-ray follow-up with IceCube
Robert Franke, Elisa Bernardini for the IceCube collaboration

1173: Moon Shadow Observation by IceCube
D.J. Boersma, L. Gladstone and A. Karle for the IceCube Collaboration

1289: IceCube/AMANDA combined analyses for the search of neutrino sources at low energies
Cécile Roucelle, Andreas Gross, Sirin Odrowski, Elisa Resconi, Yolanda Sestayo

1127: AMANDA 7-Year Multipole Analysis (0906.3942)
Anne Schukraft, Jan-Patrick Hülß for the IceCube Collaboration

1418: Measurement of the atmospheric neutrino energy spectrum with IceCube
Dmitry Chirkin for the IceCube collaboration

0785: Atmospheric Neutrino Oscillation Measurements with IceCube
Carsten Rott for the IceCube Collaboration

1565: Direct Measurement of the Atmospheric Muon Energy Spectrum with IceCube (0909.0679)
Patrick Berghaus for the IceCube Collaboration

1400: Search for Diffuse High Energy Neutrinos with IceCube
Kotoyo Hoshina for the IceCube collaboration

1311: A Search For Atmospheric Neutrino-Induced Cascades with IceCube (0910.0215)
Michelangelo D'Agostino for the IceCube Collaboration

0882: First search for extraterrestrial neutrino-induced cascades with IceCube (0909.0989)
Joanna Kiryluk for the IceCube Collaboration

0708: Improved Reconstruction of Cascade-like Events in IceCube
Eike Middell, Joseph McCartin and Michelangelo D'Agostino for the IceCube Collaboration

1221: Searches for neutrinos from GRBs with the IceCube 22-string detector and sensitivity estimates for the full detector
A. Kappes, P. Roth, E. Strahler, for the IceCube Collaboration

0515: Search for neutrinos from GRBs with IceCube
K. Meagher, P. Roth, I. Taboada, K. Hoffman, for the IceCube Collaboration

0393: Search for GRB neutrinos via a (stacked) time profile analysis
Martijn Duvoort and Nick van Eijndhoven for the IceCube collaboration

0764: Optical follow-up of high-energy neutrinos detected by IceCube (0909.0631)
Anna Franckowiak, Carl Akerlof, D. F. Cowen, Marek Kowalski, Ringo Lehmann, Torsten Schmidt and Fang Yuan for the IceCube Collaboration and for the ROTSE Collaboration

0505: Results and Prospects of Indirect Searches for Dark Matter with IceCube
Carsten Rott and Gustav Wikström for the IceCube collaboration

1356: Search for the Kaluza-Klein Dark Matter with the AMANDA/IceCube Detectors (0906.3969)
Matthias Danninger & Kahae Han for the IceCube Collaboration

0834: Searches for WIMP Dark Matter from the Sun with AMANDA (0906.1615)
James Braun and Daan Hubert for the IceCube Collaboration

0861: The extremely high energy neutrino search with IceCube
Keiichi Mase, Aya Ishihara and Shigeru Yoshida for the IceCube Collaboration

0913: Study of very bright cosmic-ray induced muon bundle signatures measured by the IceCube detector
Aya Ishihara for the IceCube Collaboration

1198: Search for High Energetic Neutrinos from Supernova Explosions with AMANDA (0907.4621)
Dirk Lennarz and Christopher Wiebusch for the IceCube Collaboration

0549: Search for Ultra High Energy Neutrinos with AMANDA
Andrea Silvestri for the IceCube Collaboration

1372: Selection of High Energy Tau Neutrinos in IceCube
Seon-Hee Seo and P. A. Toale for the IceCube Collaboration

0484: Search for quantum gravity with IceCube and high energy atmospheric neutrinos
Warren Huelsnitz & John Kelley for the IceCube Collaboration

0970: A First All-Particle Cosmic Ray Energy Spectrum From IceTop
Fabian Kislat, Stefan Klepser, Hermann Kolanoski and Tilo Waldenmaier for the IceCube Collaboration

0518: Reconstruction of IceCube coincident events and study of composition-sensitive observables using both the surface and deep detector
Tom Feusels, Jonathan Eisch and Chen Xu for the IceCube Collaboration

0737: Small air showers in IceTop
Bakhtiyar Ruzybayev, Shahid Hussain, Chen Xu and Thomas Gaisser for the IceCube Collaboration

1429: Cosmic Ray Composition using SPASE-2 and AMANDA-II
K. Andeen and K. Rawlins for the IceCube Collaboration

0519: Study of High pT Muons in IceCube (0909.0055)
Lisa Gerhardt and Spencer Klein for the IceCube Collaboration

1340: Large Scale Cosmic Rays Anisotropy With IceCube (0907.0498)
Rasha U Abbasi, Paolo Desiati and Juan Carlos Velez for the IceCube Collaboration

1398: Atmospheric Variations as observed by IceCube
Serap Tilav, Paolo Desiati, Takao Kuwabara, Dominick Rocco, Florian Rothmaier, Matt Simmons, Henrike Wissing for the IceCube Collaboration

1251: Supernova Search with the AMANDA / IceCube Detectors (0908.0441)
Thomas Kowarik, Timo Griesel, Alexander Piégsa for the IceCube Collaboration

1352: Physics Capabilities of the IceCube DeepCore Detector (0907.2263)
Christopher Wiebusch for the IceCube Collaboration

1336: Fundamental Neutrino Measurements with IceCube DeepCore
Darren Grant, D. Jason Koskinen, and Carsten Rott for the IceCube collaboration

1237: Implementation of an active veto against atmospheric muons in IceCube DeepCore
Olaf Schulz, Sebastian Euler and Darren Grant for the IceCube Collaboration

1293: Acoustic detection of high energy neutrinos in ice: Status and results from the South Pole Acoustic Test Setup (0908.3251 – revised)
Freija Descamps for the IceCube Collaboration

0903: Sensor development and calibration for acoustic neutrino detection in ice (0907.3561)
Timo Karg, Martin Bissok, Karim Laihem, Benjamin Semburg, and Delia Tosi for the IceCube collaboration

PAPERS RELATED TO ICECUBE

0466: A new method for identifying neutrino events in IceCube data
Dmitry Chirkin

0395: Muon Production of Hadronic Particle Showers in Ice and Water
Sebastian Panknin, Julien Bolmont, Marek Kowalski and Stephan Zimmer

0642: Muon bundle energy loss in deep underground detector
Xinhua Bai, Dmitry Chirkin, Thomas Gaisser, Todor Stanev and David Seckel

0542: Constraints on Neutrino Interactions at energies beyond 100 PeV with Neutrino Telescopes
Shigeru Yoshida

0006: Constraints on Extragalactic Point Source Flux from Diffuse Neutrino Limits
Andrea Silvestri and Steven W. Barwick

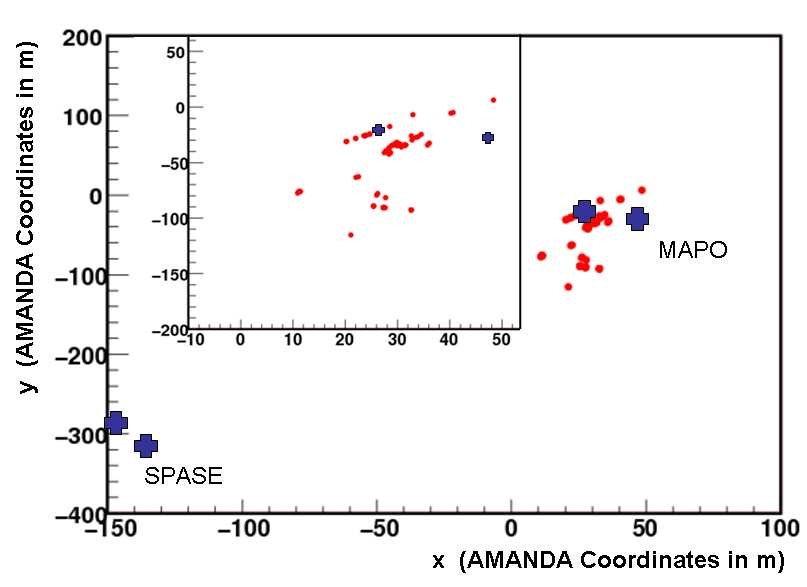

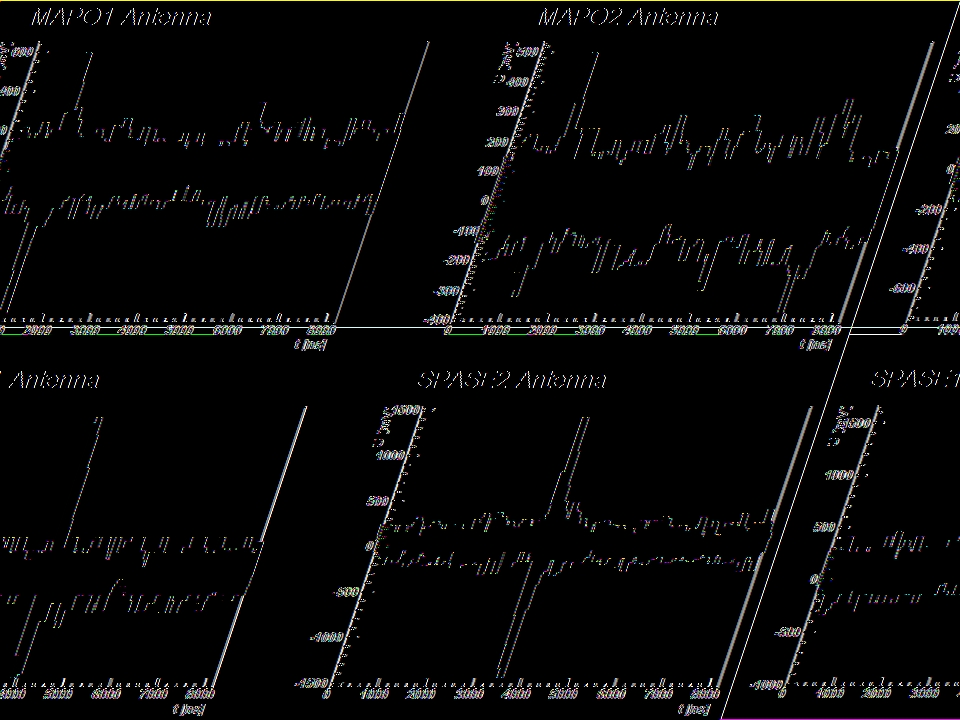

0418: Study of electromagnetic backgrounds in the 25-300 MHz frequency band at the South Pole
Jan Auffenberg, Dave Besson, Tom Gaisser, Klaus Helbing, Timo Karg, Albrecht Karle, and Ilya Kravchenko

PROCEEDINGS OF 31st ICRC, ŁÓDŹ 2009

IceCube

Albrecht Karle*, for the IceCube Collaboration

* University of Wisconsin-Madison, 1150 University Avenue, Madison, WI 53706

Abstract. IceCube is a 1 km³ neutrino telescope currently under construction at the South Pole. The detector will consist of 5160 optical sensors deployed at depths between 1450 m and 2450 m in clear Antarctic ice, evenly distributed over 86 strings. An air shower array covering a surface area of 1 km² above the in-ice detector will measure cosmic ray air showers in the energy range from 300 TeV to above 1 EeV. The detector is designed to detect neutrinos of all flavors: ν_e, ν_μ and ν_τ. With 59 strings currently in operation, construction is 67% complete. Based on data taken to date, the observatory meets its design goals. Selected results are presented.

Keywords: neutrinos, cosmic rays, neutrino astronomy.

I. INTRODUCTION

IceCube is a kilometer-scale neutrino telescope currently under construction at the South Pole. With the ability to detect neutrinos of all flavors over a wide energy range, from about 100 GeV to beyond 10⁹ GeV, IceCube can address fundamental questions in both high energy astrophysics and neutrino physics. One of its main goals is the search for sources of high energy astrophysical neutrinos, which would provide important clues to the origin of high energy cosmic rays.

The interactions of ultra high energy cosmic rays with radiation fields or matter, either at the source or in intergalactic space, produce a neutrino flux through the decays of secondary particles such as pions, kaons and muons. The observed cosmic ray flux sets the scale for the neutrino flux and leads to predicted event rates that require kilometer-scale detectors; see for example [1]. As the primary candidates for cosmic ray accelerators, AGNs and GRBs are thus also the most promising astrophysical point source candidates for high energy neutrinos. Galactic source candidates include supernova remnants, microquasars, and pulsars. Guaranteed sources of neutrinos are the cosmogenic high energy neutrino flux from interactions of cosmic rays with the cosmic microwave background, and the galactic neutrino flux resulting from galactic cosmic rays interacting with the interstellar medium. Both fluxes are small, and measuring them is a great challenge. Other sources of neutrino radiation include dark matter, in the form of supersymmetric or more exotic particles, and remnants from various phase transitions in the early universe.

The relation between the cosmic ray flux and the atmospheric neutrino flux is well understood and is based on the standard model of particle physics. The diffuse neutrino flux observed in underground laboratories agrees with Monte Carlo simulations of the primary cosmic ray flux interacting with the Earth's atmosphere and producing a secondary atmospheric neutrino flux [2].

Although atmospheric neutrinos are the primary background in searches for astrophysical neutrinos, they are very useful for two reasons. First, atmospheric neutrino physics can be studied up to PeV energies. The measurement of more than 50,000 events per year in an energy range from 500 GeV to 500 TeV will make IceCube a unique instrument for precise comparisons of atmospheric neutrinos with model predictions. At energies beyond 100 TeV, a harder neutrino spectrum may emerge, which would be a signature of an extraterrestrial flux. Second, atmospheric neutrinos provide an opportunity to calibrate the detector. The absence of such a calibration beam at higher energies poses a difficult challenge for detectors targeting the cosmogenic neutrino flux.
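Why a harder spectrum would stand out at the highest energies can be illustrated with two power laws. The sketch below is only an illustration: the atmospheric index of roughly 3.7 is an approximate value for conventional atmospheric muon neutrinos, the astrophysical E⁻² index is the benchmark spectrum used later in this paper, and both normalizations (and the helper names) are hypothetical placeholders, not IceCube measurements.

```python
def power_law(energy, norm, index, e0=1.0):
    """Differential flux norm * (E/e0)**(-index), in arbitrary but consistent units."""
    return norm * (energy / e0) ** (-index)


def crossover_energy(atm_norm, atm_index, astro_norm, astro_index, e0=1.0):
    """Energy at which a harder astrophysical power law overtakes the steeper
    atmospheric one, i.e. the solution of
    atm_norm * (E/e0)**-atm_index == astro_norm * (E/e0)**-astro_index."""
    if atm_index <= astro_index:
        raise ValueError("atmospheric spectrum must be steeper than the astrophysical one")
    return e0 * (atm_norm / astro_norm) ** (1.0 / (atm_index - astro_index))


# Hypothetical normalizations at e0 = 1 TeV: the astrophysical flux is assumed
# to be 1000 times weaker than the atmospheric flux at the reference energy.
ATM_INDEX = 3.7    # approximate index of conventional atmospheric nu_mu
ASTRO_INDEX = 2.0  # E^-2 benchmark spectrum
e_cross_tev = crossover_energy(atm_norm=1.0, atm_index=ATM_INDEX,
                               astro_norm=1e-3, astro_index=ASTRO_INDEX, e0=1.0)
print(f"astrophysical flux dominates above ~{e_cross_tev:.0f} TeV")
```

Above the crossover the astrophysical-to-atmospheric ratio grows as E^1.7, roughly a factor of 50 per decade of energy, which is why an extraterrestrial component would appear as a flattening of the measured spectrum.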

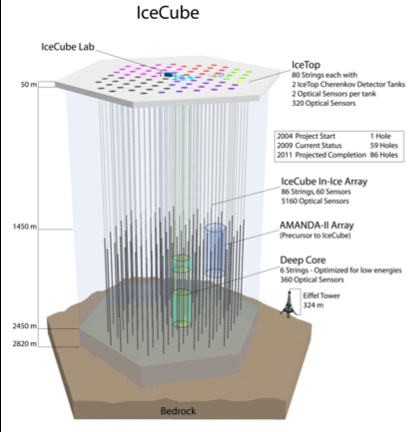

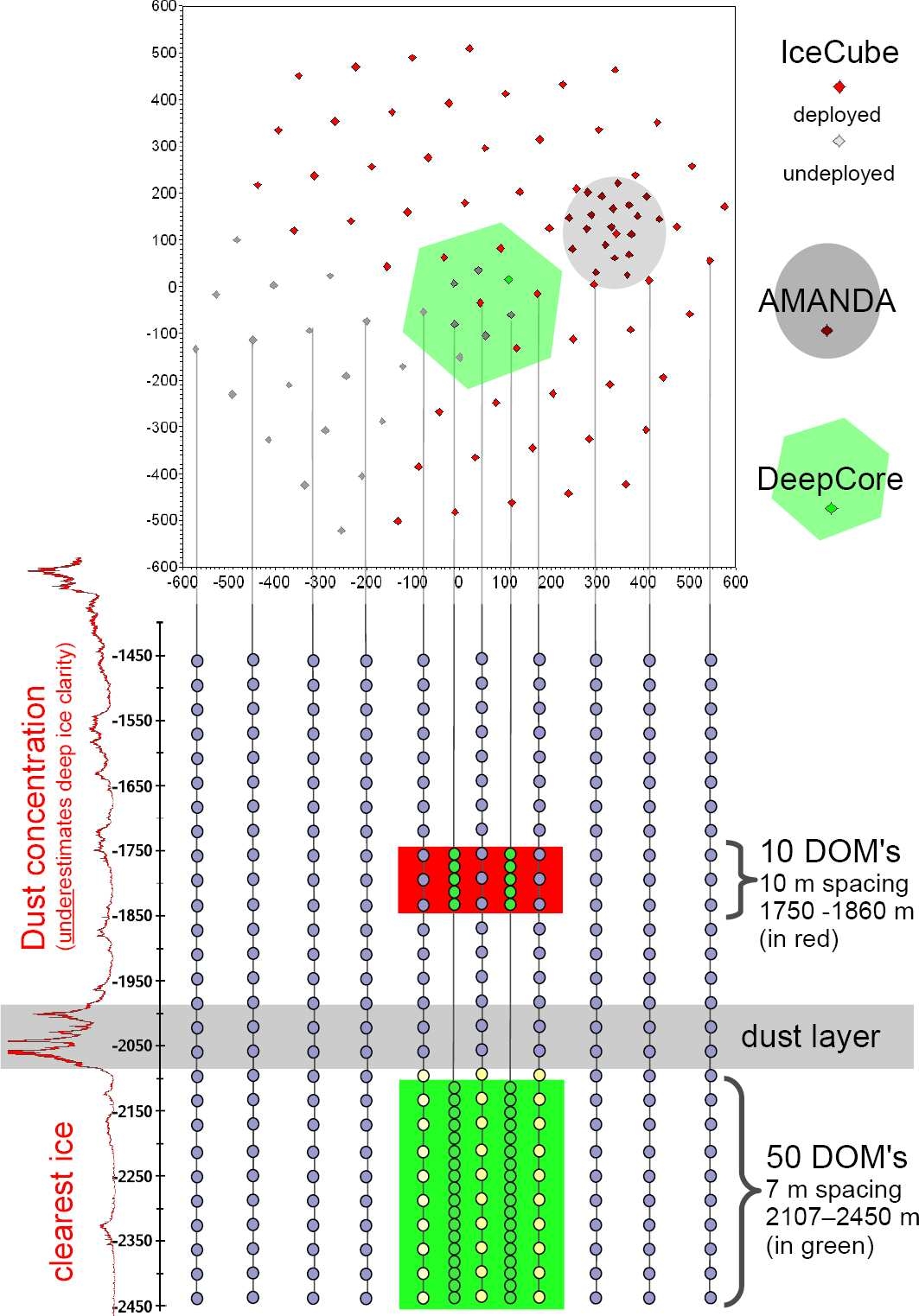

Fig. 1 Schematic view of IceCube. Fifty-nine of the 86 strings have been in operation since 2009.


II. DETECTOR AND CONSTRUCTION STATUS

IceCube is designed to detect muons and cascades over a wide energy range. The string spacing was chosen to reliably detect and reconstruct muons in the TeV energy range and to precisely calibrate the detector using flashing LEDs and atmospheric muons. The optical properties of the South Pole ice have been measured with various calibration devices [3] and are used to model the detector response to charged particles. Muon reconstruction algorithms [4] measure the direction and energy of tracks arriving from all directions.

In its final configuration, the detector will consist of 86 strings reaching a depth of 2450 m below the surface. Sixty optical sensors are mounted on each string, equally spaced between 1450 m and 2450 m depth, with the exception of the six DeepCore strings, on which the sensors are more closely spaced between 1760 m and 2450 m. In addition, 320 sensors will be deployed in 160 IceTop tanks on the surface of the ice directly above the strings. Each sensor consists of a 25 cm photomultiplier tube (PMT) connected to a waveform-recording data acquisition circuit capable of resolving pulses with nanosecond precision and having a dynamic range of at least 250 photoelectrons per 10 ns. By the end of the construction season in February 2008, half of the IceCube array had been deployed.

The detector is constructed by drilling holes in the ice, one at a time, using a hot water drill. Drilling is immediately followed by deployment of a detector string into the water-filled hole. Drilling a hole to a depth of 2450 m takes about 30 hours, and the subsequent deployment of the string typically takes less than 10 hours. The holes typically freeze back within 1-3 weeks. The time delay between two subsequent drilling cycles and string deployments was in some cases shorter than 50 hours. By the end of February 2009, 59 strings and IceTop stations had been deployed; we refer to this configuration as IC59. Once the strings are completely frozen in, commissioning can start. Approximately 99% of the deployed digital optical modules (DOMs) have been successfully commissioned. The 40-string detector configuration (IC40) was in operation from May 2008 to the end of April 2009.

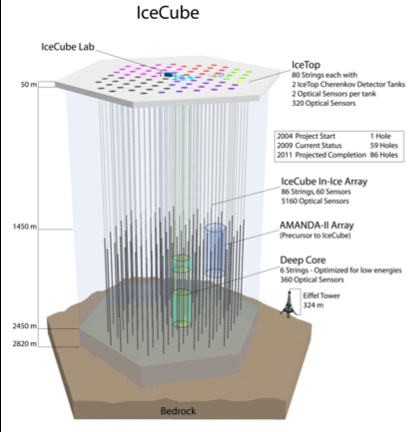

III. MUONS AND NEUTRINOS

At the depth of IceCube, the event rate from

downgoing atmospheric muons is close to 6 orders

of magnitude higher than the event rate from atmos-

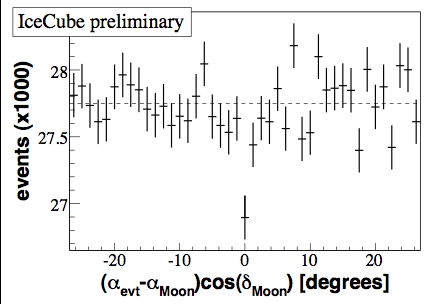

pheric neutrinos. Fig. 2 shows the observed muon

rate (IC22) as a function of the zenith angle

5

.

IceCube is effective in detecting downward going

muons.

A first measurement of the muon energy

spectrum is provided in the references

6

.

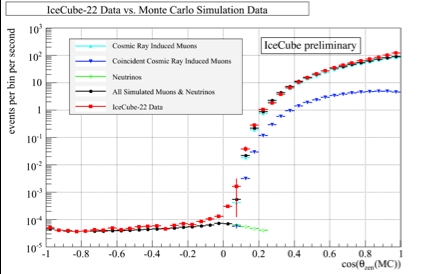

Good angular resolution is essential for measuring the zenith angle distribution, and even more so for the search for point sources of neutrinos from galactic sources, AGNs or GRBs. Figure 3 shows the angular resolution of IceCube for several detector configurations, based on the high quality neutrino event selections used in the IC40 point source search [7]. The median angular resolution achieved with IC40 is already 0.7°, the design value for the full IceCube.

The muon flux serves in many ways also as a

calibration tool. One method to verify the angular

resolution and absolute pointing of the detector uses

the Moon shadow of cosmic rays.

The Moon

reaches an elevation of about 28° above the horizon

at the South Pole. Despite the small altitude of the

Moon, the event rate and angular resolution of

IceCube are sufficient to measure the cosmic ray

shadow of the Moon by mapping the muon rate in

the vicinity of the Moon. The parent air showers

have an energy of typically 30 TeV, well above the

energy where magnetic fields would pose a signifi-

Fig. 2 Muon rate in IceCube as a function of zenith angle

5

.The

data agree with the detector simulation which includes atmos-

pheric neutrinos, atmospheric muons, and coincident cosmic ray

muons (two muons erroneously reconstructed as a single track.)

Fig. 3 The angular resolution function of different IceCube con-

figurations is shown for two neutrino energy ranges samples from

an E

-2

energy spectrum.

PROCEEDINGS OF 31

st

ICRC, ŁÓDŹ 2009

3

cant deviation from the direction of the primary

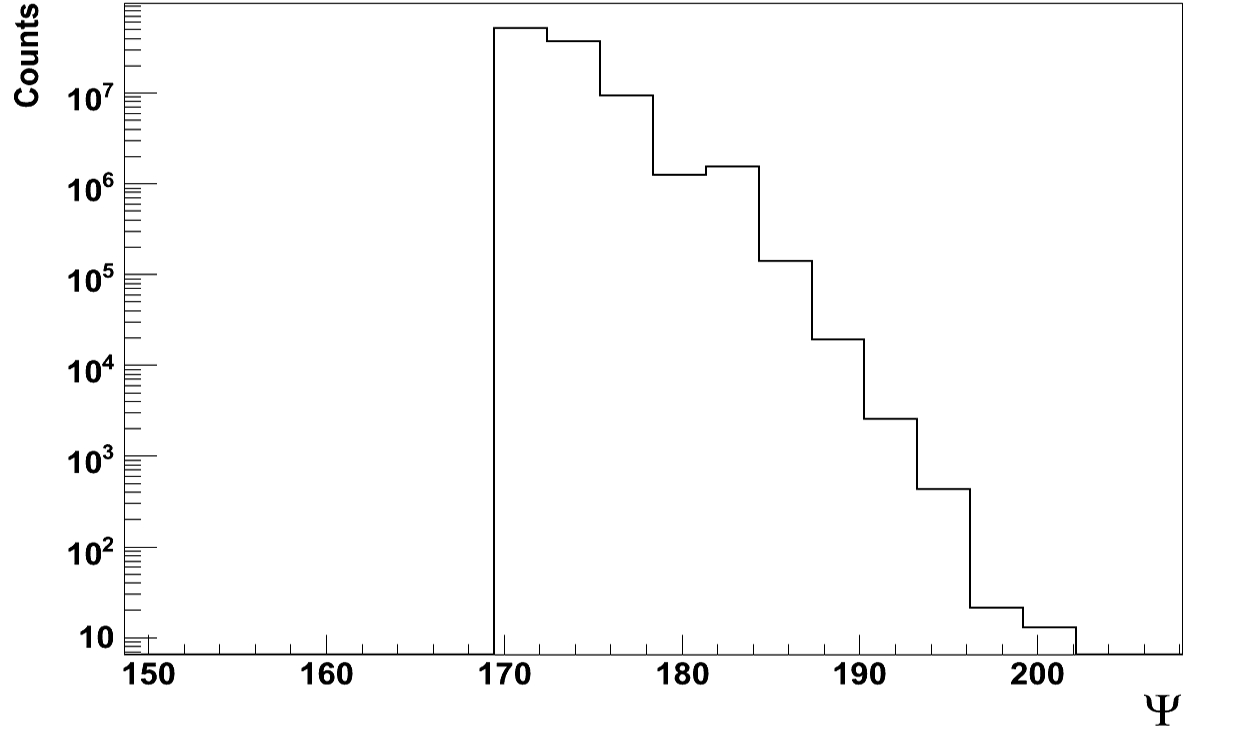

particles. Fig. 4 shows a simple declination band

with bin size optimized for this analysis. A deficit of

~900 events (~4.2σ) is observed on a background of

~28000 events in 8 months of data taking. The defi-

cit is in agreement with expectations and confirms

the assumed angular resolution and absolute point-

ing.

The full IceCube will collect of order 50 000 high quality atmospheric neutrinos per year in the TeV energy range. A detailed understanding of the response function of the detector at analysis level is the foundation of any neutrino flux measurement. We use the concept of the neutrino effective area to describe the response of the detector with respect to neutrino flavor, energy and zenith angle. The neutrino effective area is the equivalent area over which all neutrinos of a given flux impinging on the Earth would be observed. Absorption effects of the Earth are treated as part of the detector and folded into the effective area.
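The definition above turns directly into an expected event count: for a differential flux dΦ/dE and livetime T, N = T ∫ A_eff(E) (dΦ/dE) dE. The sketch below evaluates this integral numerically with the trapezoidal rule. The function names, the E⁻² normalization and the toy effective-area curve are hypothetical placeholders, not values read from Fig. 5; the flux is treated as a point-source flux, so a diffuse flux given per steradian would additionally have to be integrated over solid angle.

```python
import numpy as np

M2_TO_CM2 = 1.0e4           # 1 m^2 = 10^4 cm^2
SECONDS_PER_YEAR = 3.156e7  # approximate length of a year in seconds


def expected_events(energy_gev, aeff_m2, flux, livetime_s):
    """Expected number of events N = T * integral of A_eff(E) * dPhi/dE dE.

    energy_gev : increasing 1D grid of neutrino energies [GeV]
    aeff_m2    : effective area on that grid [m^2], Earth absorption folded in
    flux       : callable returning dPhi/dE [GeV^-1 cm^-2 s^-1]
    livetime_s : detector livetime [s]
    """
    energy_gev = np.asarray(energy_gev, dtype=float)
    integrand = np.asarray(aeff_m2, dtype=float) * M2_TO_CM2 * flux(energy_gev)
    # Trapezoidal rule on a (possibly non-uniform, e.g. logarithmic) grid.
    integral = np.sum(0.5 * (integrand[1:] + integrand[:-1]) * np.diff(energy_gev))
    return livetime_s * integral


def e2_flux(norm):
    """E^-2 flux with E^2 dPhi/dE = norm [GeV cm^-2 s^-1] (hypothetical value)."""
    return lambda e: norm * e ** -2.0


# Toy effective area rising as E^0.5 from 1 m^2 at 1 TeV (illustrative only).
energies = np.logspace(3, 6, 301)              # 1 TeV .. 1 PeV
toy_aeff = 1.0 * (energies / 1e3) ** 0.5       # m^2
n = expected_events(energies, toy_aeff, e2_flux(1e-8), SECONDS_PER_YEAR)
print(f"expected events per year: {n:.2f}")
```

A logarithmic energy grid is used because both the flux and the effective area span many decades; a linear grid would waste almost all points at the high-energy end.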

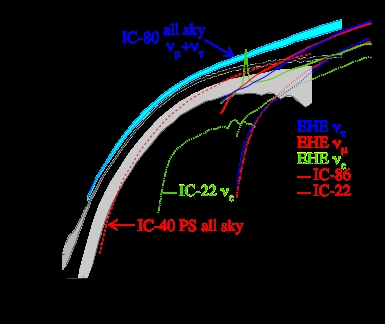

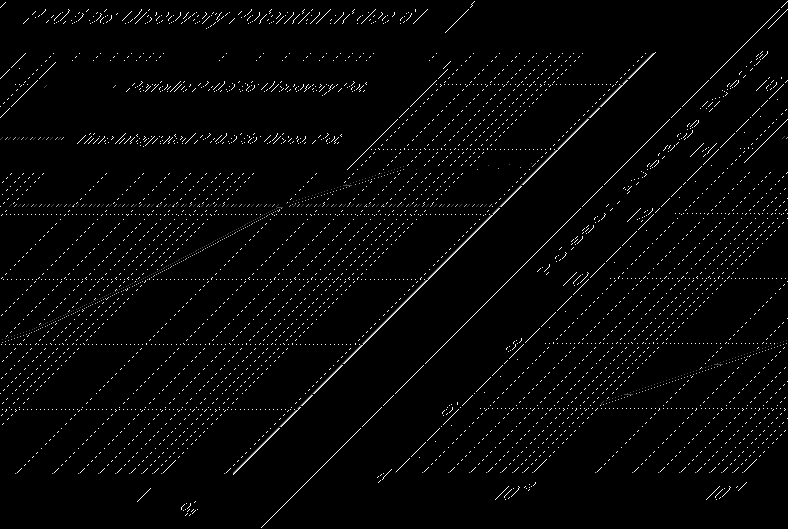

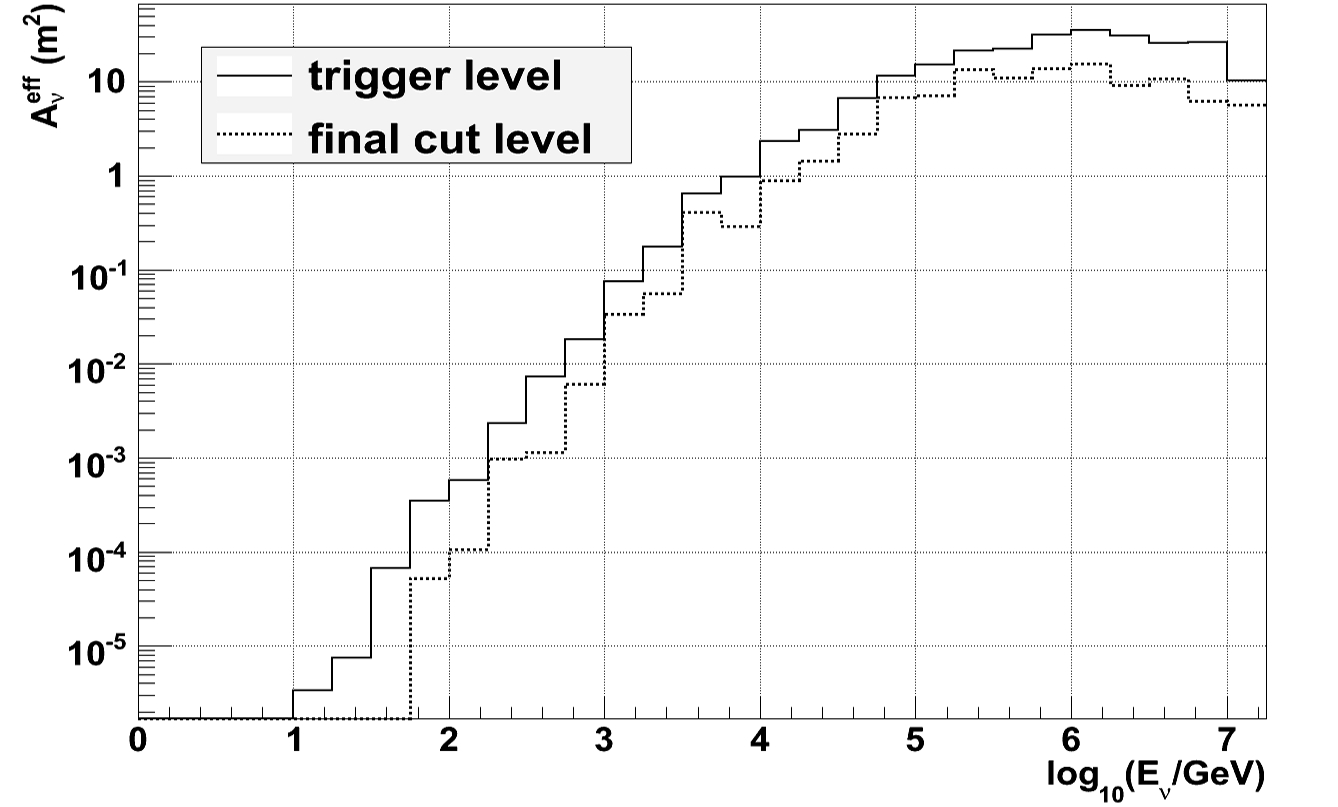

Figure 5 provides an overview of the effective areas for various analyses presented at this conference. First, we note that the effective area increases strongly in the range from 100 GeV to about 100 TeV. This is due to the increase of the neutrino-nucleon cross-section and, for muons (the workhorse of high energy neutrino astronomy), to the additional increase of the muon range with energy. Above about 100 GeV, the growth of the muon range, and with it the growth of the effective area, gradually slows down because of radiative energy losses of muons.

The IC22 [8], IC80 and IC86 (IC80 + 6 DeepCore strings) atmospheric ν_μ effective areas are shown for upgoing neutrinos. The shaded area (IC22) indicates the range from before to after quality cuts. The effective area of the IC40 point source analysis [7] is shown for all zenith angles. It combines the upgoing neutrino sky (predominantly energies < 1 PeV) with downgoing neutrinos (predominantly > 1 PeV). Also shown is the all-sky ν_μ + ν_τ area of IC80.

The ν_e effective area is shown for the current IC22 contained cascade analysis [9] as well as for the IC22 extremely high energy (EHE) analysis [10]. It is interesting to see how two entirely different analysis techniques match up nicely at the transition energy of about 5 PeV.

The cascade areas are about a factor of 20 smaller than the ν_μ areas, primarily because the muon range allows the detection of neutrino interactions far outside the detector, increasing the effective detector volume by a large factor. However, the excellent energy resolution of contained cascades will benefit the background rejection of any diffuse analysis, and it makes cascades a competitive detection channel in a detector whose volume grows faster than its area as the number of strings increases.

The figure illustrates why IceCube, and other

large water/ice neutrino telescopes for that matter,

can do physics over such a wide energy range. Un-

like typical air shower cosmic ray or gamma ray de-

tectors, the effective area increases by about 8 orders

of magnitude (10

-4

m

2

to 10

+4

m

2

) over an energy

range of equal change of scale (10 GeV to 10

9

GeV).

The analysis at the vastly different energy scales re-

Fig. 4 4.2σ deficit of events from direction of Moon in the

IceCube 40-string detector confirms pointing accuracy.

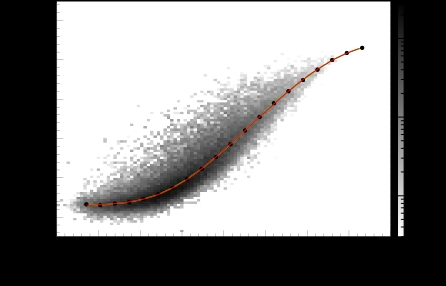

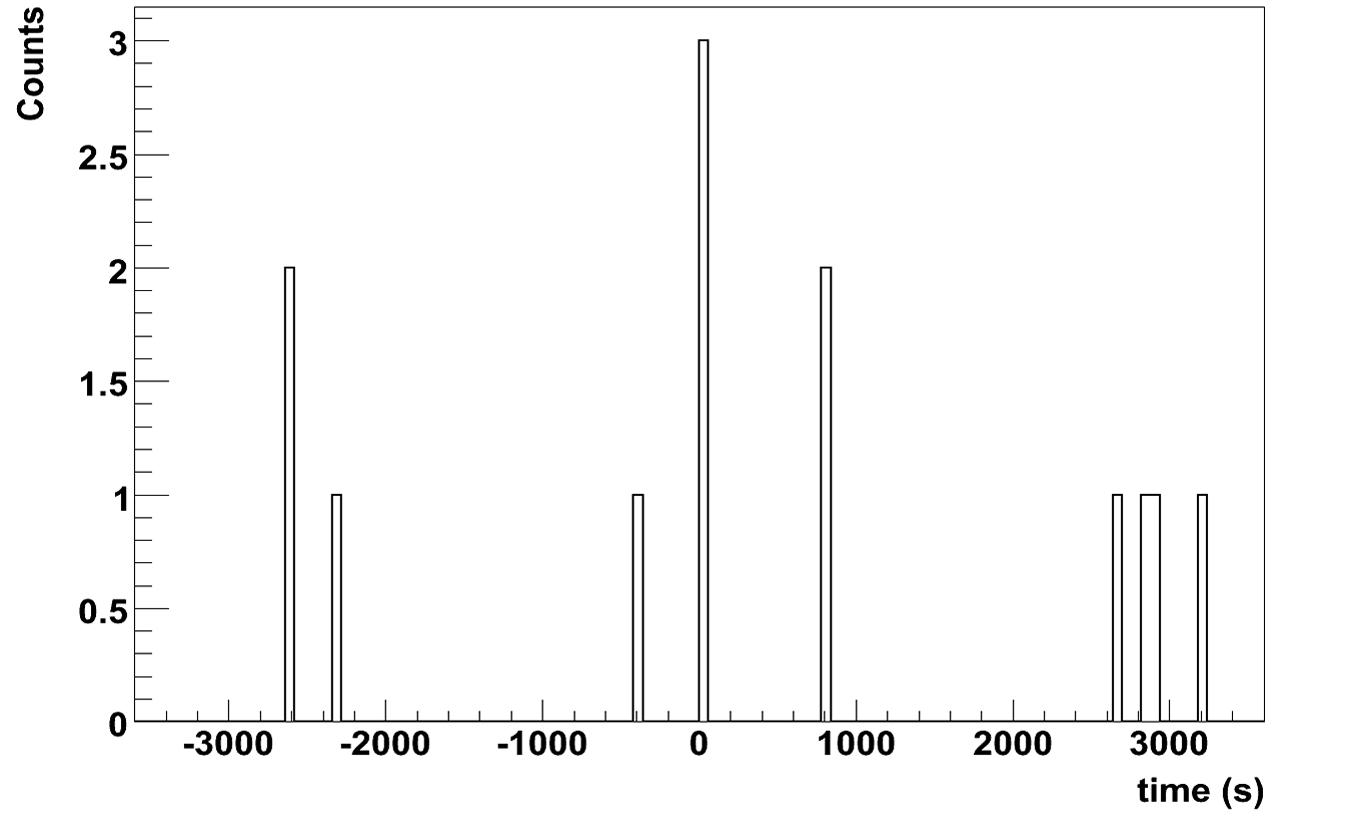

Fig. 6 The energy resolution for muons is approximately 0.3 in

log(energy) over a wide energy range

Fig. 5 The neutrino effective area is shown for a several IceCube

configurations (IC22, IC 40, IC86), neutrino flavors, energy

ranges and analysis levels (trigger, final analysis).

4

A. Karle et al., IceCube

quires very different approaches, which are pre-

sented in numerous talks in the parallel sessions

11, 45

.

The measurement of the atmospheric neutrino flux requires a good understanding of the energy response. The energy resolution for muon neutrinos in the IC22 configuration is shown in Fig. 6 [8]. Over a wide energy range (1-10000 TeV), the energy resolution is ~0.3 in log(energy). This resolution is largely dominated by fluctuations of the muon energy loss over a path length of 1 km or less.
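A resolution of ~0.3 in log(energy) is easier to picture as a multiplicative factor. The sketch below assumes that the logarithm is base 10 and that the smearing is Gaussian in log10(E); both are simplifying assumptions for illustration, and the helper names are hypothetical, not the detector's actual response function.

```python
import numpy as np

SIGMA_LOG10E = 0.3  # resolution quoted in the text, assumed to be in log10(E)


def one_sigma_factor(sigma_log10=SIGMA_LOG10E):
    """Multiplicative energy uncertainty corresponding to 1 sigma in log10(E)."""
    return 10.0 ** sigma_log10


def smear_energy(true_energy_gev, sigma_log10=SIGMA_LOG10E, rng=None):
    """Draw reconstructed energies, assuming Gaussian scatter in log10(E)."""
    rng = np.random.default_rng() if rng is None else rng
    log_e = np.log10(np.asarray(true_energy_gev, dtype=float))
    return 10.0 ** (log_e + rng.normal(0.0, sigma_log10, size=log_e.shape))


rng = np.random.default_rng(42)
reco = smear_energy(np.full(100_000, 1e5), rng=rng)  # toy sample of 100 TeV muons
within_2x = np.mean(np.abs(np.log10(reco / 1e5)) < np.log10(2.0))
print(f"1-sigma factor: {one_sigma_factor():.2f}")
print(f"fraction reconstructed within a factor of 2: {within_2x:.2f}")
```

Under these assumptions a resolution of 0.3 in log10(E) means that roughly two thirds of the events are reconstructed within a factor of about 2 of their true energy, since 10^0.3 ≈ 2.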

IV. ATMOSPHERIC NEUTRINOS AND THE SEARCH FOR ASTROPHYSICAL NEUTRINOS

We have discussed the effective areas, as well as the angular and energy resolution of the detector. Armed with these ingredients, we can discuss some highlights of neutrino measurements and astrophysical neutrino searches.

Figure 7 shows a preliminary measurement of the atmospheric muon neutrino spectrum obtained with the IC22 configuration. An unfolding procedure has been applied to extract this neutrino flux. Also shown is the atmospheric neutrino flux published previously, based on 7 years of AMANDA-II data. The gray shaded area indicates the range of results obtained when applying the procedure to events that occurred primarily in the top or in the bottom of the detector. The collaboration is devoting significant effort to understanding and reducing systematic uncertainties as the statistics increase. The data sample consists of 4492 high quality events with an estimated purity well above 95%. Several atmospheric neutrino events are observed above 100 TeV, pushing the diffuse astrophysical neutrino search gradually towards the PeV energy region and higher sensitivity. The neutrino effective areas in Fig. 5 show that the full IceCube with 86 strings will detect about one order of magnitude more events: ~50000 neutrinos/year.
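The proceedings do not state which unfolding method was used for Fig. 7. As an illustration only, the sketch below implements one widely used choice, iterative Bayesian (D'Agostini) unfolding, which is equivalent to an expectation-maximization fit of the true-energy histogram. The function name and the 3-bin response matrix are hypothetical toys, not IceCube's detector response; the sketch assumes every true bin has non-zero detection efficiency.

```python
import numpy as np


def iterative_bayes_unfold(response, observed, n_iter=4, prior=None):
    """Iterative Bayesian (D'Agostini) unfolding.

    response : (n_obs, n_true) matrix, response[j, i] = P(observed bin j | true bin i)
    observed : (n_obs,) observed counts
    n_iter   : number of iterations (few iterations act as regularization)
    prior    : (n_true,) starting guess for the true distribution; flat if None
    """
    response = np.asarray(response, dtype=float)
    observed = np.asarray(observed, dtype=float)
    n_true = response.shape[1]
    efficiency = response.sum(axis=0)  # P(event observed in any bin | true bin i)
    if prior is None:
        estimate = np.full(n_true, observed.sum() / n_true)
    else:
        estimate = np.asarray(prior, dtype=float)
    for _ in range(n_iter):
        folded = response @ estimate  # expected observed counts for current estimate
        ratio = np.divide(observed, folded, out=np.zeros_like(observed), where=folded > 0)
        # Bayes' theorem: redistribute each observed bin over the true bins,
        # then correct for the detection efficiency of each true bin.
        estimate = estimate * (response.T @ ratio) / efficiency
    return estimate


# Toy 3-bin example with hypothetical migration into neighbouring bins.
R = np.array([[0.8, 0.2, 0.0],
              [0.2, 0.6, 0.2],
              [0.0, 0.2, 0.8]])
true = np.array([1000.0, 300.0, 50.0])
print(iterative_bayes_unfold(R, R @ true, n_iter=200).round(1))
```

With real, fluctuating data the number of iterations is kept small, because iterating to convergence amplifies statistical noise; the many iterations here only demonstrate that noiseless toy data are recovered.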

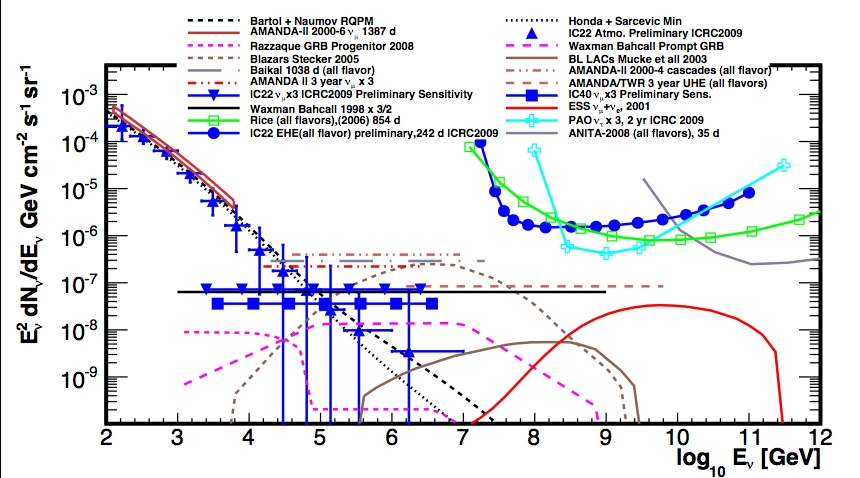

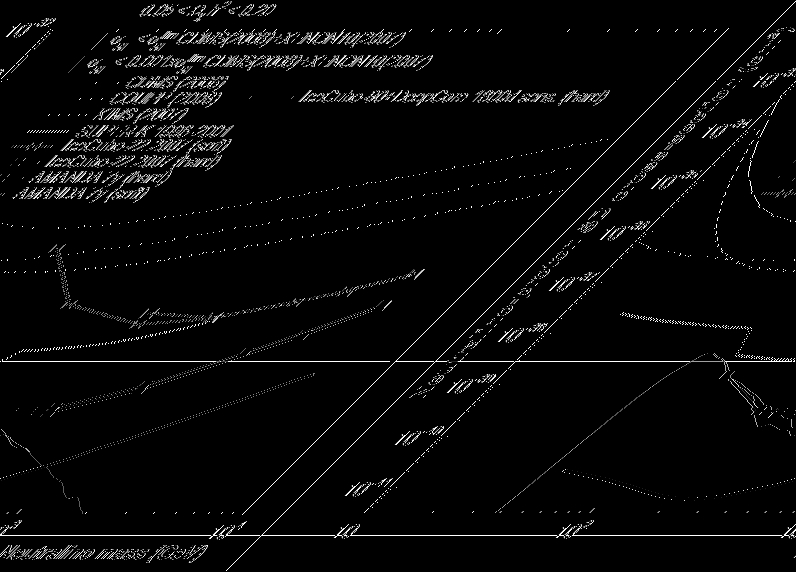

The search for astrophysical neutrinos is summarized in Fig. 8. While the figure focuses on diffuse fluxes, some of these diffuse fluxes may be detected as point sources. Examples of the astrophysical flux models shown include AGN blazars [46], BL Lacs [47], precursor GRB models and the Waxman-Bahcall bound [48], and cosmogenic neutrinos [49].

Fig. 7 Unfolded muon neutrino spectrum [8], averaged over zenith angle, compared to simulation and to the AMANDA result. Data were taken with the 22-string configuration.

Fig. 8 Measured atmospheric neutrino fluxes from AMANDA and IceCube are shown together with a number of models for astrophysical neutrinos and several limits from IceCube and other experiments.

The following limits are shown for AMANDA and IceCube:

• AMANDA-II, 2000-2006, atmospheric muon neutrino flux [50]
• IceCube 22-string, atmospheric neutrinos (preliminary) [8]
• AMANDA-II, 2000-2003, diffuse E⁻² muon neutrino flux limit [51]
• AMANDA-II, 2000-2002, all flavors, not contained events, PeV to EeV, E⁻² flux limit [52]
• AMANDA-II, 2000-2004, cascades, contained events, E⁻² flux limit [53]
• IceCube-40, muon neutrinos, throughgoing events, preliminary sensitivity [29]
• IceCube-22, all flavors, throughgoing, downgoing, extremely high energies (10 PeV to EeV) [10]

Also shown are a few limits from other experiments, including Lake Baikal [54] (diffuse, not contained), some differential limits by RICE and Auger at higher energies, and limits from ANITA at yet higher energies.

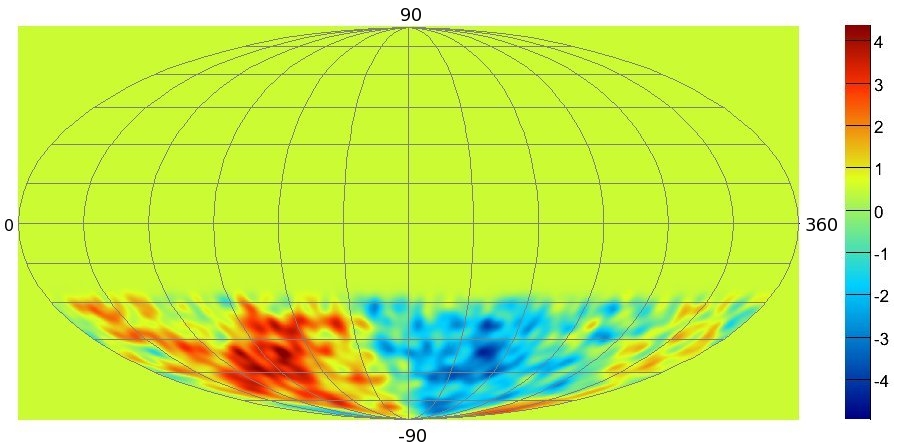

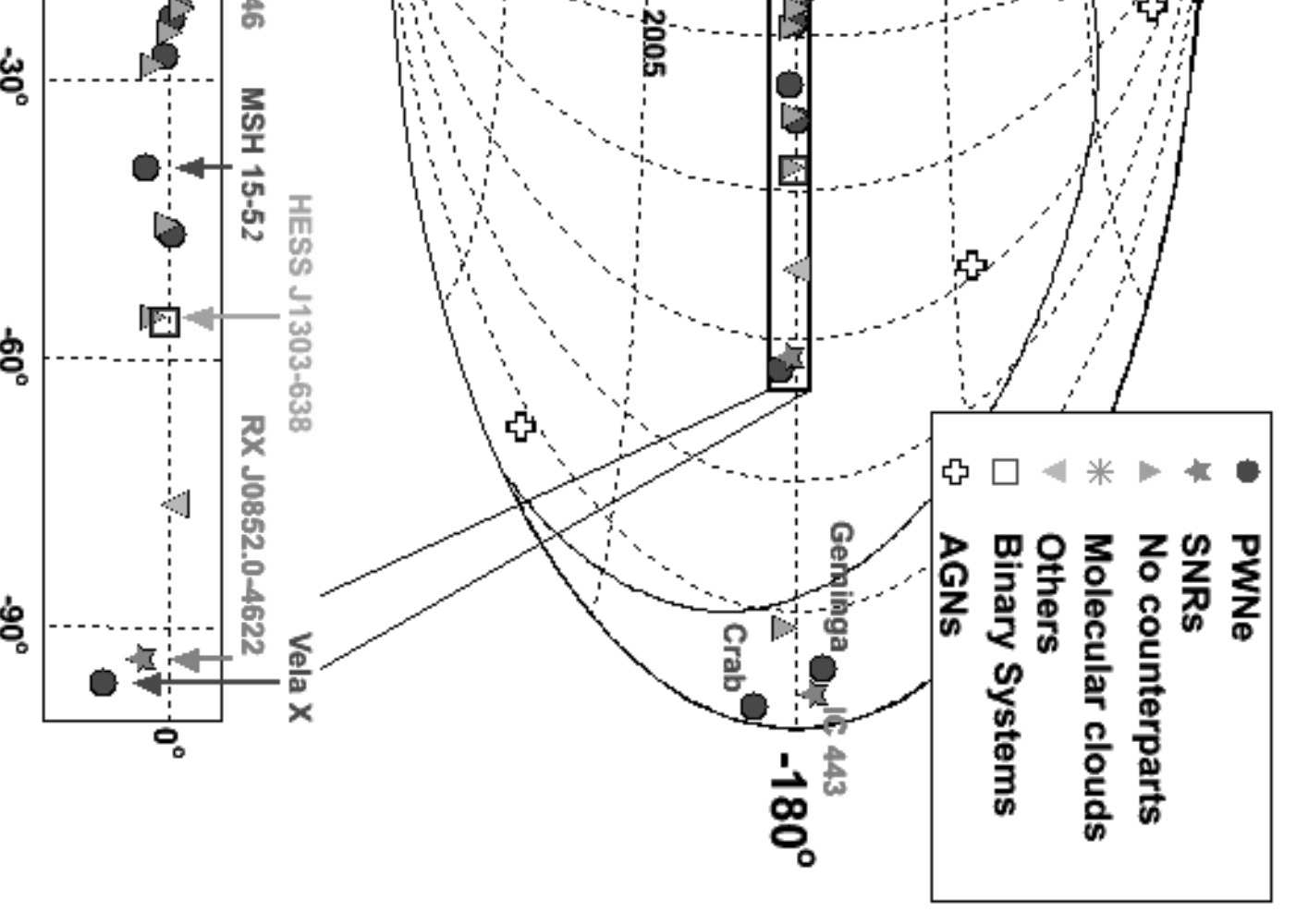

The skymap in Fig. 9 shows the probability for a point source of high-energy neutrinos. The map was obtained from 6 months of data taken with the 40-string configuration of IceCube. This is the first result obtained with half of IceCube instrumented. The “hottest spot” in the map represents an excess of 7 events, with a pre-trial p-value of $10^{-4.4}$. After taking into account trial factors, the probability for such an excess to occur anywhere in the sky map is not significant. The background consists of 6796 neutrinos in the Northern hemisphere and 10,981 down-going muons rejected to the $10^{-5}$ level in the Southern hemisphere. The energy threshold for the Southern hemisphere increases with increasing elevation in order to suppress the cosmic-ray muon background by up to a factor of ~$10^{5}$. The energy of accepted down-going muons is typically above 100 TeV.
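To see why a local p-value of $10^{-4.4}$ can be unremarkable once the whole sky has been scanned, the following Python sketch converts it to a one-sided Gaussian significance and applies a Šidák-style correction, $p_\mathrm{post} = 1 - (1 - p)^{N}$. The number of effective trials $N$ is a hypothetical value chosen only for illustration, and the formula assumes independent trials; the analysis itself obtains the post-trial probability from scrambled skymaps rather than from this expression.

```python
import math
from statistics import NormalDist


def p_to_sigma(p):
    """One-sided Gaussian significance (in sigma) equivalent to a p-value."""
    return NormalDist().inv_cdf(1.0 - p)


def post_trial_p(p_local, n_trials):
    """Sidak correction 1 - (1 - p)^N for N independent trials, computed with
    log1p/expm1 so that tiny p-values do not lose precision."""
    return -math.expm1(n_trials * math.log1p(-p_local))


p_local = 10 ** -4.4   # local (pre-trial) p-value at the hottest spot
n_trials = 10_000      # HYPOTHETICAL number of effective trials in the sky scan

p_post = post_trial_p(p_local, n_trials)
print(f"local:      p = {p_local:.1e}  ({p_to_sigma(p_local):.2f} sigma)")
print(f"post-trial: p = {p_post:.2f}  ({p_to_sigma(p_post):.2f} sigma)")
```

Under these assumptions a roughly 3.9σ local excess turns into a post-trial probability of about one in three; the real effective number of trials depends on the grid spacing and the angular resolution.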

This unbinned analysis takes the angular resolution and energy information of each event into account in the significance calculation. The resulting sensitivity and discovery potential are shown for all zenith angles in the figure.

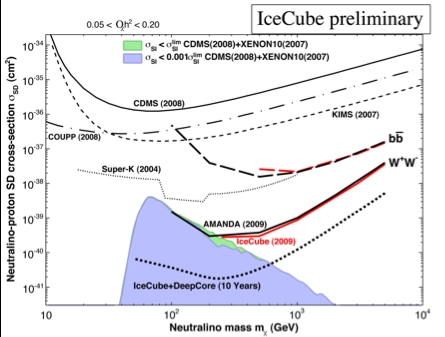

V. SEARCH FOR DARK MATTER

IceCube also searches for neutrinos produced by the annihilation of dark matter particles gravitationally trapped at the center of the Sun and of the Earth. For generic weakly interacting massive dark matter particles (WIMPs) with spin-independent interactions with ordinary matter, IceCube is competitive with direct detection experiments only if the WIMP mass is sufficiently large. For WIMPs with mostly spin-dependent interactions, on the other hand, IceCube has improved on the previous best limits obtained by the Super-Kamiokande experiment using the same method, and it improves on the best limits from direct detection experiments by two orders of magnitude. The IceCube limit, as well as a limit obtained with 7 years of AMANDA, is shown in Fig. 11. It rules out supersymmetric WIMP models not excluded by other experiments. The installation of the 6-string DeepCore shown in Fig. 1 will greatly enhance the sensitivity of IceCube to dark matter. The projected sensitivity in the range from 50 GeV to TeV energies is also shown in Fig. 11. DeepCore is an integral part of IceCube: it relies on its closely spaced strings for the detection of low energy events, and on the surrounding IceCube strings for a highly efficient veto against cosmic-ray muon backgrounds.

Fig. 9: The map shows the probability for a point source of high-energy neutrinos on the atmospheric neutrino background. The map was obtained by operating IceCube with 40 strings for half a year [7]. The “hottest spot” in the map represents an excess of 7 events. After taking into account trial factors, the probability for this excess to occur anywhere in the sky map is not significant. The background consists of 6796 neutrinos in the Northern hemisphere and 10,981 down-going muons rejected to the $10^{-5}$ level in the Southern hemisphere.

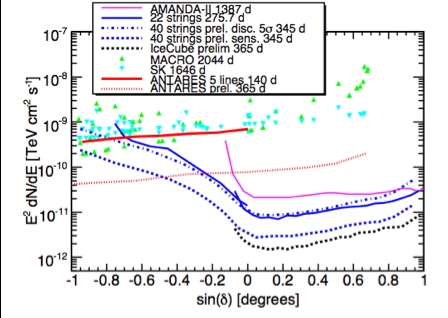

Fig. 10 Upper limits on $E^{-2}$-type astrophysical muon neutrino spectra for the newest result from ½ year of IC40 and for a number of earlier results obtained by IceCube and other experiments.


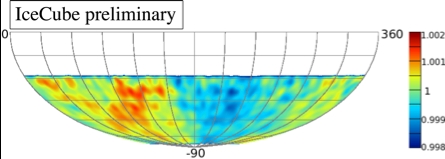

VI. COSMIC RAY MUONS AND HIGH ENERGY COSMIC RAYS

IceCube is a huge cosmic-ray muon detector and the first sizeable detector covering the Southern hemisphere. We are using samples of several billion down-going muons to study the enigmatic large- and small-scale anisotropies recently identified in the arrival directions of cosmic rays by Northern detectors, namely the Tibet array [55] and the Milagro array [56]. Fig. 12 shows relative deviations of up to 0.001 from the average of the Southern muon sky observed with the 22-string array [11]. A total of 4.3 billion events with a median energy of 14 TeV were used. IceCube data show that these anisotropies persist at energies in excess of 100 TeV, ruling out the Sun as their origin. Extending the measurement to the Southern hemisphere should help to decipher the origin of these unanticipated phenomena.
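As a rough illustration of what a relative deviation of 0.001 means, the following Python sketch computes the relative intensity of right-ascension bins within one declination band, using the band mean as the reference, together with the Poisson error on each deviation. Taking the band mean as the reference is a simplifying assumption, not necessarily the procedure of [11]. The second function inverts $\sigma = 1/\sqrt{N}$ to estimate how many events a bin needs before a given deviation reaches a chosen significance.

```python
import math


def relative_intensity(counts):
    """Deviation of each right-ascension bin from the band mean, (N_i - <N>) / <N>,
    together with the Poisson error sqrt(N_i) / <N> on that deviation."""
    mean = sum(counts) / len(counts)
    deviations = [(c - mean) / mean for c in counts]
    errors = [math.sqrt(c) / mean for c in counts]
    return deviations, errors


def events_per_bin_needed(deviation, n_sigma):
    """Events per bin for which `deviation` equals n_sigma Poisson errors:
    deviation = n_sigma / sqrt(N)  =>  N = (n_sigma / deviation) ** 2."""
    return (n_sigma / deviation) ** 2


dev, err = relative_intensity([999, 1001, 1000, 1000])
print(dev, err)
print(events_per_bin_needed(1e-3, 5.0))  # events per bin for a 5-sigma 0.001 deviation
```

A 0.001 deviation therefore needs of order $2.5 \times 10^{7}$ events per bin to stand out at 5σ, which is why samples of billions of muons are required.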

IceCube can detect neutrinos and cosmic-ray muons with energies ranging from 0.1 TeV to beyond 1 EeV.

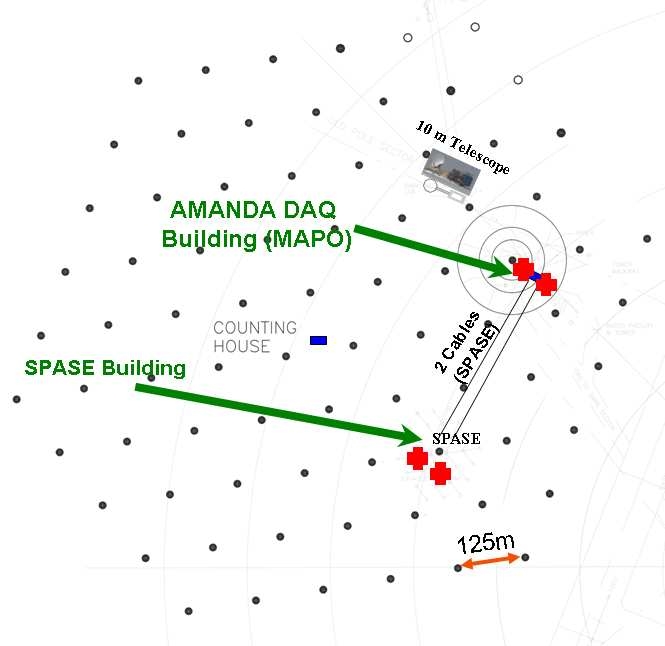

The surface detector IceTop consists of ice Cher-

enkov tank pairs. Each IceTop station is associated

with an IceCube string. With a station spacing of

125 m, it is efficient for air showers above energies

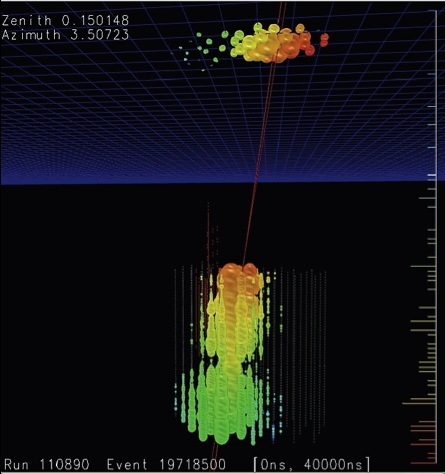

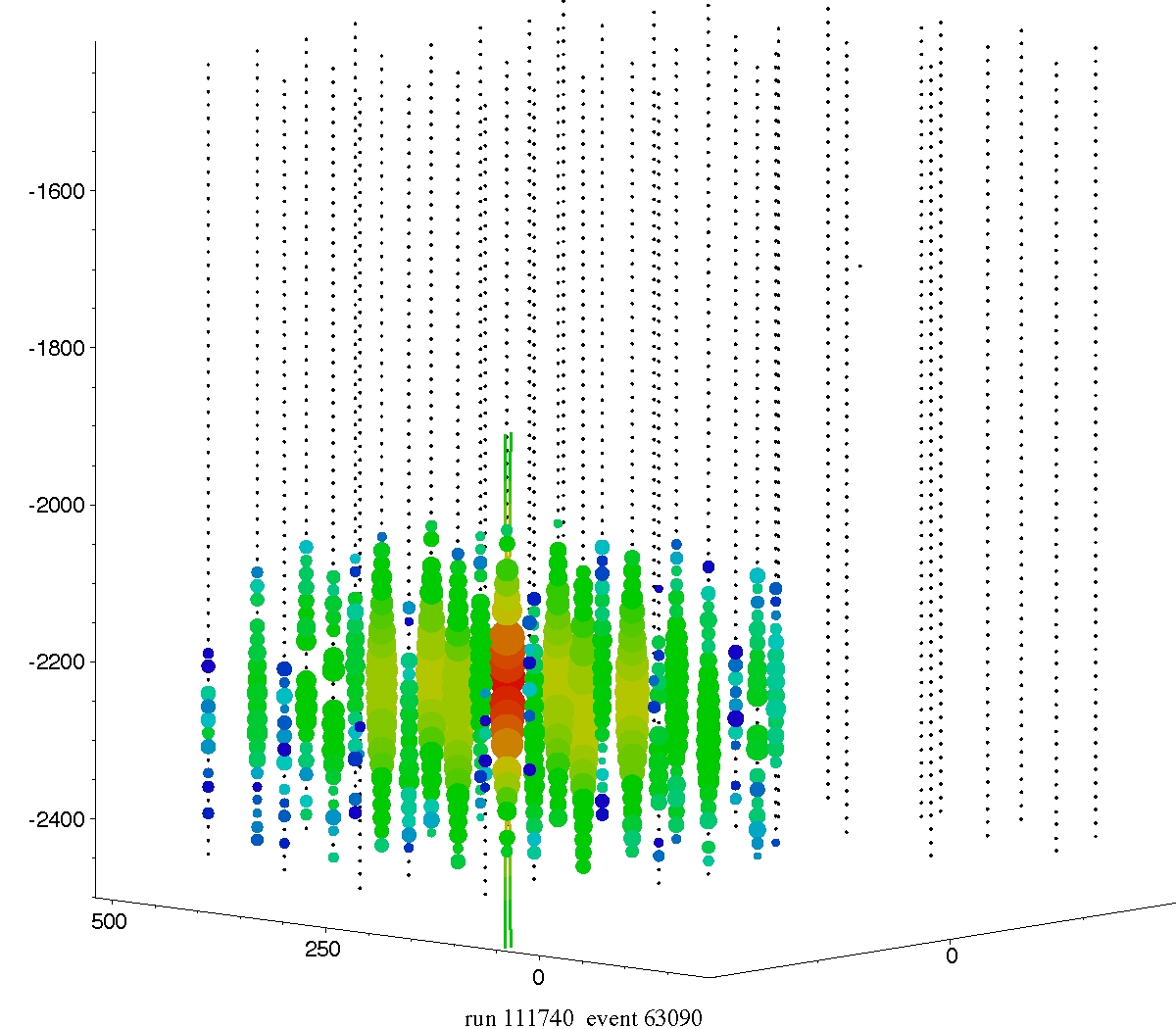

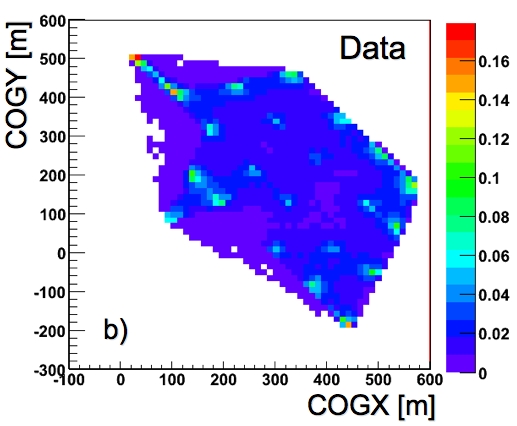

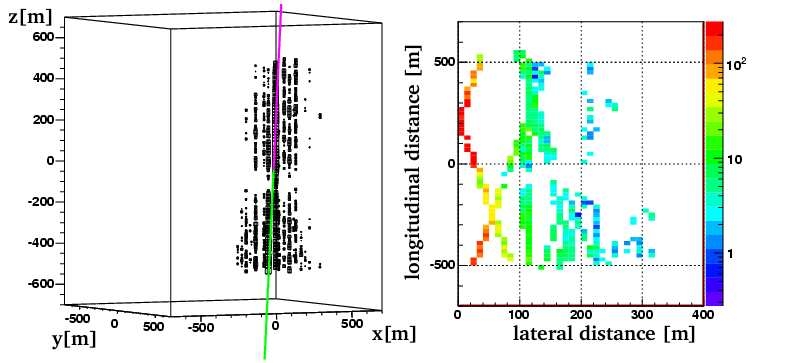

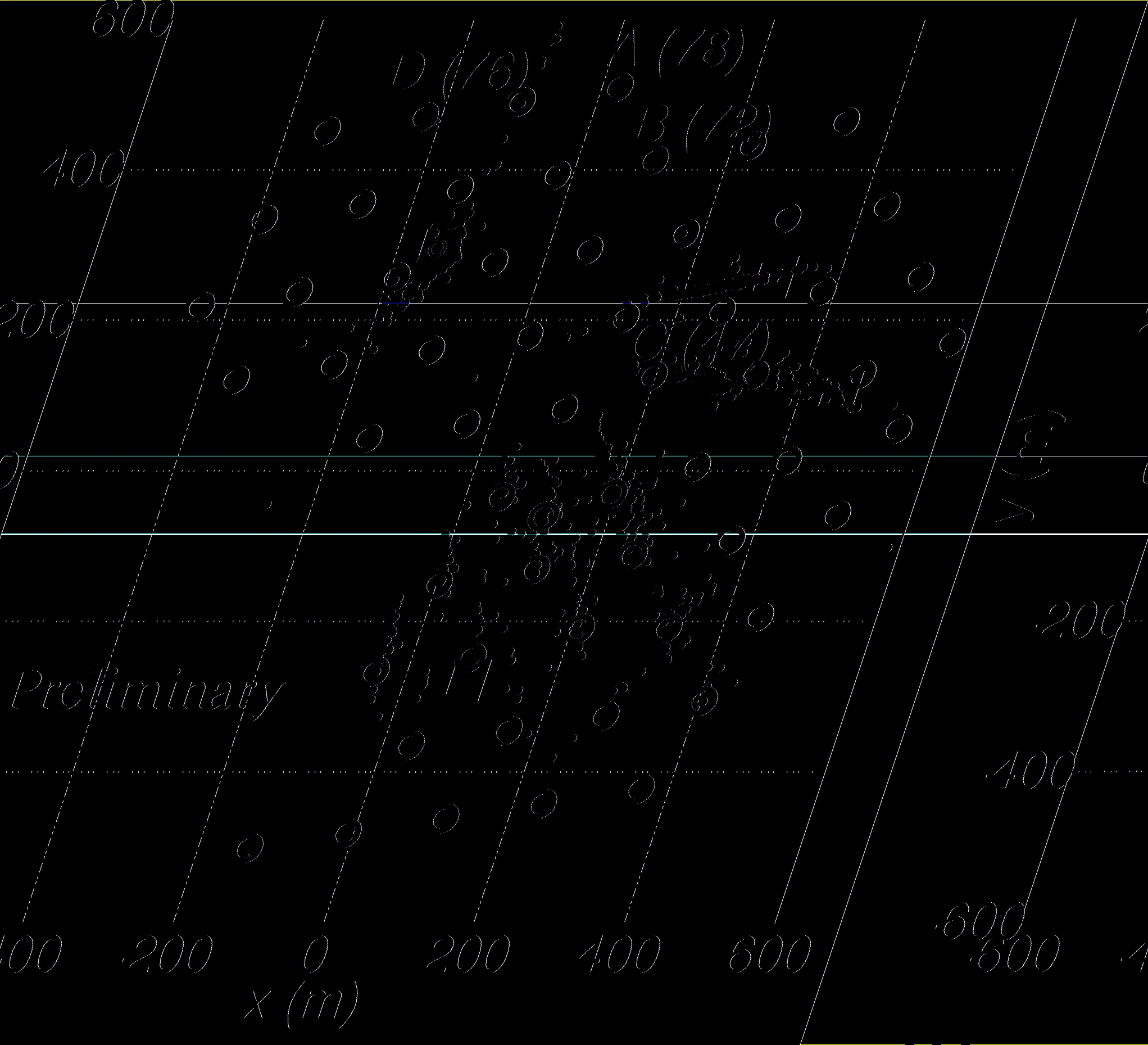

of 1 PeV. Figure 13 shows an event display of a

very high-energy (~EeV) air shower event. Hits are

recorded in all surface detector stations and a large

number of DOMs in the deep ice. Based on a pre-

liminary analysis some 2000 high-energy muons

would have reached the deep detector in this event if

the primary was a proton and more if it was a nu-

cleus. With 1 km

2

surface area, IceTop will acquire

a sufficient number of events in coincidence with the

in-ice detector to allow for cosmic ray measurements

up to 1 EeV. The directional and calorimetric meas-

urement of the high energy muon component with

the in-ice detector and the simultaneous measure-

ment of the electromagnetic particles at the surface

with IceTop will enable the investigation of the en-

ergy spectrum and the mass composition of cosmic

rays.

Events with energies above one PeV can deposit an enormous amount of light in the detector. Figure 14 shows an event generated by a flasher pulse from the array of 12 UV LEDs mounted on every IceCube sensor. The event produces an amount of light comparable to that of an electron cascade on the order of 1 PeV. Photons were recorded on strings at distances of up to 600 m from the flasher. Such events are somewhat brighter than previously expected because the deep ice below a depth of 2100 m is exceptionally clear: the scattering length is substantially larger than in average ice at the depth of AMANDA.

Fig. 13 A very high energy cosmic ray air shower observed both with the surface detector IceTop and with the in-ice detector string array.

Fig. 11 The red boxes show the upper limits at 90% confidence level on the spin-dependent interaction of dark matter particles with ordinary matter [18, 20]. The two lines represent the extreme cases where the neutrinos originate mostly from heavy quarks (top line) and from weak bosons (bottom line) produced in the annihilation of the dark matter particles. Also shown is the reach of the complete IceCube and its DeepCore extension after 5 years of observation of the Sun. The shaded area represents supersymmetric models not disfavored by direct searches for dark matter. Also shown are previous limits from direct experiments and from the Super-Kamiokande experiment.

Fig. 12 Skymap of the relative intensity in the arrival directions of 4.3 billion muons produced by cosmic-ray interactions with the atmosphere, with a median energy of 14 TeV; these events were reconstructed with an average angular resolution of 3 degrees. The skymap is displayed in equatorial coordinates.

Extremely high energy (EHE) events, above about 1 PeV, are observed near and above the horizon. At these energies the Earth becomes opaque to neutrinos, and the search strategy has to change. In an optimized analysis, the neutrino effective area reaches about 4000 m² for IC80 at 1 EeV. IC80 can therefore test optimistic models of the cosmogenic neutrino flux. With current data, IceCube is already accumulating an exposure that makes the detection of a cosmogenic neutrino event possible.
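Whether an effective area can test a flux model comes down to the expected event count $N = T\,\Omega \int A_\mathrm{eff}(E)\,\Phi(E)\,dE$. The Python sketch below evaluates this integral numerically on a logarithmic energy grid. The inputs in the usage example (an area held fixed at 4000 m² from 100 PeV to 10 EeV, an $E^{-2}$ flux with $E^2\Phi = 10^{-8}$ GeV cm⁻² s⁻¹ sr⁻¹, one year, and 2π sr) are hypothetical placeholders, not IC80 performance figures or a cosmogenic model from the text.

```python
import math


def expected_events(aeff_cm2, flux, e_min, e_max, livetime_s, solid_angle_sr, n_steps=2000):
    """Expected events N = T * Omega * integral(A_eff(E) * Phi(E) dE).

    aeff_cm2(E): effective area in cm^2; flux(E): differential flux in
    GeV^-1 cm^-2 s^-1 sr^-1; energies in GeV. The integral is evaluated in
    u = ln E (so dE = E du) with the trapezoid rule.
    """
    u_lo, u_hi = math.log(e_min), math.log(e_max)
    step = (u_hi - u_lo) / n_steps
    total = 0.0
    for i in range(n_steps + 1):
        e = math.exp(u_lo + i * step)
        weight = 0.5 if i in (0, n_steps) else 1.0  # trapezoid end points
        total += weight * aeff_cm2(e) * flux(e) * e
    return livetime_s * solid_angle_sr * total * step


# Hypothetical inputs for illustration only.
AEFF_CM2 = 4000.0 * 1e4   # 4000 m^2 expressed in cm^2, held constant
E2_FLUX = 1e-8            # E^2 * Phi in GeV cm^-2 s^-1 sr^-1
YEAR_S = 3.156e7          # one year in seconds

n = expected_events(lambda e: AEFF_CM2, lambda e: E2_FLUX / e**2,
                    1e8, 1e10, YEAR_S, 2 * math.pi)
print(f"expected events: {n:.2f}")
```

With these toy numbers the result is a little under one event per year; a realistic estimate needs the energy- and zenith-dependent effective area.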

IceCube construction is on schedule for completion in February 2011. The stable operation of the detector and the analysis of recent data allow a rapid increase in the sensitivity and the discovery potential of IceCube.

VII. ACKNOWLEDGEMENTS

We acknowledge the support from the following agencies: U.S. National Science Foundation-Office of Polar Programs, U.S. National Science Foundation-Physics Division, University of Wisconsin Alumni Research Foundation, U.S. Department of Energy, and National Energy Research Scientific Computing Center, the Louisiana Optical Network Initiative (LONI) grid computing resources; Swedish Research Council, Swedish Polar Research Secretariat, and Knut and Alice Wallenberg Foundation, Sweden; German Ministry for Education and Research (BMBF), Deutsche Forschungsgemeinschaft (DFG), Germany; Fund for Scientific Research (FNRS-FWO), Flanders Institute to encourage scientific and technological research in industry (IWT), Belgian Federal Science Policy Office (Belspo); the Netherlands Organisation for Scientific Research (NWO); M. Ribordy acknowledges the support of the SNF (Switzerland); A. Kappes and A. Groß acknowledge support by the EU Marie Curie OIF Program.

REFERENCES

[1] E. Waxman and J. Bahcall, Phys. Rev. D 59 (1998) 023002

[2] T. Gaisser, Cosmic Rays and Particle Physics, Cambridge University Press, 1991

[3] M. Ackermann et al., J. Geophys. Res. 111, D13203 (2006)

[4] J. Ahrens et al., Nucl. Inst. Meth. A 524, 169 (2004)

[5] P. Berghaus for the IceCube Collaboration, Proc. of the XV International Symposium on Very High Energy Cosmic Ray Interactions (ISVHECRI 2008), Paris, France; arXiv:0902.0021

[6] P. Berghaus et al., Direct Atmospheric Muon Energy Spectrum Measurement with IceCube (IceCube collaboration), Proc. of the 31st ICRC HE1.5; arXiv:0909.0679

[7] J. Dumm et al., Likelihood Point-Source Search with IceCube (IceCube collaboration), Proc. of the 31st ICRC OG2.5

[8] D. Chirkin et al., Measurement of the Atmospheric Neutrino Energy Spectrum with IceCube (IceCube collaboration), Proc. of the 31st ICRC HE2.2

[9] J. Kiryluk et al., First Search for Extraterrestrial Neutrino-Induced Cascades with IceCube (IceCube collaboration), Proc. of the 31st ICRC OG2.5; arXiv:0909.0989

[10] K. Mase et al., The Extremely High-Energy Neutrino Search with IceCube (IceCube collaboration), Proc. of the 31st ICRC HE1.4

[11] R. Abbasi, P. Desiati, J. C. Díaz Vélez et al., Large-Scale Cosmic-Ray Anisotropy with IceCube (IceCube collaboration), Proc. of the 31st ICRC SH3.2; arXiv:0907.0498

[12] K. Andeen et al., Composition in the Knee Region with SPASE-AMANDA (IceCube collaboration), Proc. of the 31st ICRC HE1.2

[13] C. Rott et al., Atmospheric Neutrino Oscillation Measurement with IceCube (IceCube collaboration), Proc. of the 31st ICRC HE2.3

[14] M. Baker et al., IceCube Time-Dependent Point-Source Analysis Using Multiwavelength Information (IceCube collaboration), Proc. of the 31st ICRC OG2.5

[15] J. Bazo Alba et al., Search for Neutrino Flares from Point Sources with IceCube (IceCube collaboration), to appear in Proc. of the 31st ICRC OG2.5

[16] M. Bissok et al., Sensor Development and Calibration for Acoustic Neutrino Detection in Ice (IceCube collaboration), Proc. of the 31st International Cosmic Ray Conference, Lodz, Poland (2009) HE2.4; arXiv:0907.3561

[17] D. Boersma et al., Moon-Shadow Observation by IceCube (IceCube collaboration), Proc. of the 31st ICRC OG2.5

Figure 14: A flasher event in IceCube. Such events, produced by LEDs built into the DOMs, can be used for calibration purposes.


[18] J. Braun and D. Hubert

et al.

, Searches for WIMP

Dark Matter from the Sun with AMANDA (IceCube

collaboration), Proc. of the 31

st

ICRC HE2.3; astro-

ph.HE/09061615

[19] M. D’Agostino

et al.

, Search for Atmospheric Neu-

trino-Induced Cascades (IceCube collaboration), Proc.

of the 31

st

ICRC HE2.2; ; astro-

ph.HE/arXiv:0910.0215

[20] M. Danninger and K. Han

et al.

, Search for the Ka-

luza-Klein Dark Matter with the AMANDA/IceCube

Detectors (IceCube collaboration), Proc. of the 31

st

ICRC HE2.3; astro-ph.HE/0906.3969

[21] F. Descamps

et al.

, Acoustic Detection of High-

Energy Neutrinos in Ice (IceCube collaboration), Proc.

of the 31

st

ICRC HE2.4

[22] M. Duvoort

et al.

, Search for GRB Neutrinos via a

(Stacked) Time Profile Analysis (IceCube collabor-

ation), Proc. of the 31

st

ICRC OG2.4

[23] S. Euler

et al.

, Implementation of an Active Veto

against Atmospheric Muons in IceCube DeepCore

(IceCube collaboration), Proc. of the 31

st

ICRC OG2.5

[24] T. Feusels

et al.

, Reconstruction of IceCube Coinci-

dent Events and Study of Composition-Sensitive Ob-

servables Using Both the Surface and Deep Detector

(IceCube collaboration), Proc. of the 31

st

ICRC HE1.3

[25] A. Francowiak

et al.

, Optical Follow-Up of High-

Energy Neutrinos Detected by IceCube (IceCube and

ROTSE collaborations), Proc. of the 31

st

ICRC OG2.5

[26] R. Franke

et al.

, Neutrino Triggered High-Energy

Gamma-Ray Follow-Up with IceCube (IceCube col-

laboration), Proc. of the 31

st

ICRC OG2.5

[27] L. Gerhardt

et al.

, Study of High p_T Muons in

IceCube (IceCube collaboration), Proc. of the 31

st

ICRC HE1.5; astro-ph.HE/arXiv 0909.0055

[28] D. Grant

et al.

, Fundamental Neutrino Measurements

with IceCube DeepCore (IceCube collaboration),

Proc. of the 31

st

ICRC HE2.2

[29] K. Hoshina

et al.

, Search for Diffuse High-Energy

Neutrinos with IceCube (IceCube collaboration), Proc.

of the 31

st

ICRC OG2.5

[30] W. Huelsnitz

et al.,

Search for Quantum Gravity with

IceCube and High-Energy Atmospheric Neutrinos

(IceCube collaboration), Proc. of the 31

st

ICRC HE2.3

[31] A. Ishihara

et al.

, Energy-Scale Calibration Using

Cosmic-Ray Induced Muon Bundles Measured by the

IceCube Detector with IceTop Coincident Signals

(IceCube collaboration), Proc. of the 31

st

ICRC HE1.5

[32] A. Kappes

et al.

, Searches for Neutrinos from GRBs

with the IceCube 22-String Detector and Sensitivity

Estimates for the Full Detector (IceCube collabor-

ation), Proc. of the 31

st

ICRC OG2.4

[33] F. Kislat

et al.

, A First All-Particle Cosmic-Ray En-

ergy Spectrum from IceTop (IceCube collaboration),

Proc. of the 31

st

ICRC HE1.2

[34] M. Kowarik

et al.

, Supernova Search with the

AMANDA / IceCube Neutrino Telescopes (IceCube

collaboration), Proc. of the 31

st

ICRC OG2.2

[35] D. Lennarz

et al.

, Search for High-Energetic Neutrinos

from Supernova Explosions with AMANDA (IceCube

collaboration), Proc. of the 31

st

ICRC OG2.5; astro-

ph.HE/09074621

[36] K. Meagher

et al.

, Search for Neutrinos from GRBs

with IceCube (IceCube collaboration), Proc. of the 31

st

ICRC OG2.4

[37] E. Middell

et al.

, Improved Reconstruction of Cas-

cade-Like Events (IceCube collaboration), Proc. of the

31

st

ICRC OG2.5

[38] C. Portello-Roucelle

et al.

, IceCube / AMANDA

Combined Analyses for the Search of Neutrino Sour-

ces at Low Energies (IceCube collaboration), Proc. of

the 31

st

ICRC OG2.5

[39] C. Rott

et al.

, Results and Prospects of Indirect

Searches fo Dark Matter with IceCube (IceCube col-

laboration), Proc. of the 31

st

ICRC HE2.3

[40] B. Ruzybayev

et al.

, Small Air Showers in IceCube

(IceCube collaboration), Proc. of the 31

st

ICRC HE1.1

[41] A. Schukraft and J. Hülß

et al.

, AMANDA 7-Year

Multipole Analysis (IceCube collaboration), Proc. of

the 31

st

ICRC OG2.5; astro-ph.HE/09063942

[42] S. Seo

et al.

, Search for High-Energy Tau Neutrinos in

IceCube (IceCube collaboration), Proc. of the 31

st

ICRC OG2.5

[43]

43

A. Silvestri

et al.

, Search for Ultra–High-Energy

Neutrinos with AMANDA (IceCube collaboration),

Proc. of the 31

st

ICRC OG2.5

[44] S. Tilav

et al.

, Atmospheric Variations as Observed by

IceCube (IceCube collaboration), Proc. of the 31

st

ICRC HE1.1

[45] C. Wiebusch

et al.

, Physics Capabilities of the

IceCube DeepCore Detector (IceCube collaboration),

Proc. of the 31

st

ICRC OG2.5; astro-ph.IM/09072263

[46] F. W. Stecker. Phys. Rev. D, 72(10):107301, 2005

[47] A. Mücke et al. Astropart. Phys., 18:593, 2003

[48] S. Razzaque, P. Meszaros, and E. Waxman. Phys.

Rev. D, 68(8):083001, 2003

[49] R. Engel, D. Seckel, and T. Stanev. Phys. Rev. D,

64(9):093010, 2001

[50] A. Achterberg

et al

. (IceCube collaboration),

Phys.Rev.D

79

102005, 2009

[51] A. Achterberg

et al

. (IceCube collaboration),, Phys.

Rev. D

76

042008 (2007);

[52] Ackerman

et al

. (IceCube collaboration), Astrophys.

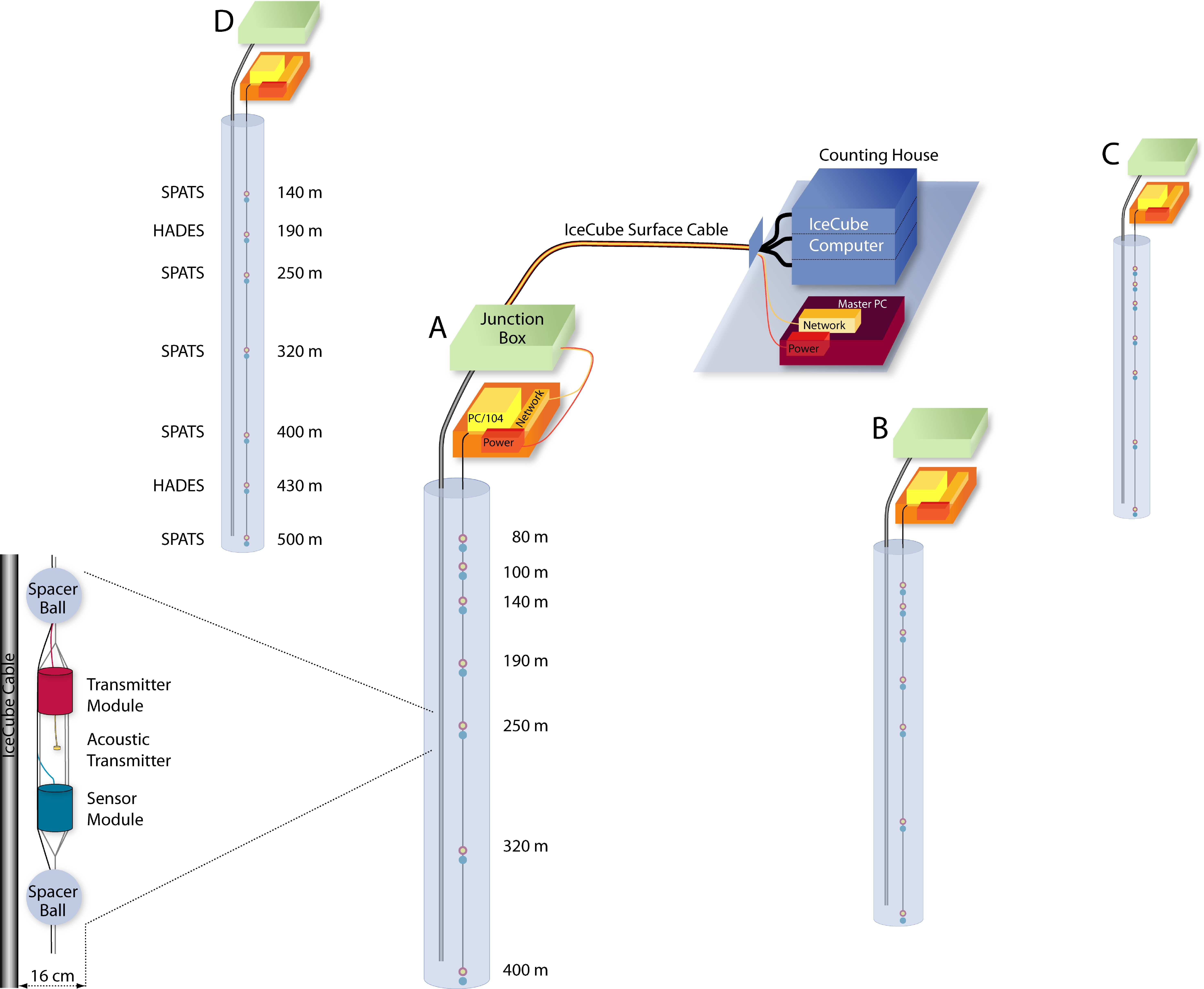

Jour.

675

2 1014-1024 (2008); astro-ph/07113022

[53] A. Achterberg,

et al

., Astrophys. Jour.

664

397-410

(2007); astro-ph/0702265v2

[54] A.V.Avrorin

et al.

, Astronomy Letters, Vol.35, No.10,

pp.651-662, 2009; arXiv:0909.5562

[55] M. Amenomori et al. [The Tibet AS-Gamma

Collaboration], Astrophys. J. 633, 1005 (2005)

arXiv:astroph/0502039

[56] A.A. Abdo et al., Phys. Rev. Lett. 101: 221101

(2008), arXiv:0801.3827


All-Sky Point-Source Search with 40 Strings of IceCube

Jon Dumm∗, Juan A. Aguilar∗, Mike Baker∗, Chad Finley∗, Teresa Montaruli∗, for the IceCube Collaboration†

∗Dept. of Physics, University of Wisconsin, Madison, WI, 53706, USA

†See the special section of these proceedings.

Abstract. During 2008-09, the IceCube Neutrino Observatory was operational with 40 strings of optical modules deployed in the ice. We describe the search for neutrino point sources based on a maximum likelihood analysis of the data collected in this configuration. This data sample provides the best sensitivity to high energy neutrino point sources to date. The field of view is extended into the down-going region, providing sensitivity over the entire sky. The 22-string result is discussed, along with improvements leading to updated angular resolution, effective area, and sensitivity. The improvement in performance as the number of strings increases is also shown.

Keywords: neutrino astronomy

I. INTRODUCTION

The primary goal of the IceCube Neutrino Observatory is the detection of high energy astrophysical neutrinos. Such an observation could reveal the origins of cosmic rays and offer insight into some of the most energetic phenomena in the Universe. In order to detect these neutrinos, IceCube will instrument a cubic kilometer of the clear Antarctic ice sheet underneath the geographic South Pole with an array of 5,160 Digital Optical Modules (DOMs) deployed on 86 strings at depths between 1.5 and 2.5 km. This includes six strings with smaller DOM spacing and higher quantum efficiency that make up DeepCore, which increases the sensitivity to low energy neutrinos (≲ 100 GeV). IceCube also includes a surface array (IceTop) for observing extensive air showers of cosmic rays. Construction began in the austral summer 2004–05 and is planned to finish in 2011. Each DOM consists of a 25 cm diameter Hamamatsu photomultiplier tube, electronics for waveform digitization, and a spherical, pressure-resistant glass housing. The DOMs detect Cherenkov photons induced by relativistic charged particles passing through the ice. In particular, the directions of muons (either from cosmic ray showers above the surface or from neutrino interactions within the ice or bedrock) can be well reconstructed from the track-like pattern and timing of hit DOMs.

The 22-string results presented in the discussion are from a traditional up-going search. In such a search, neutrino telescopes use the Earth as a filter against the large background of atmospheric muons, leaving only an irreducible background of atmospheric neutrinos below the horizon. These have a softer spectrum ($\sim E^{-3.6}$ above 100 GeV) than astrophysical neutrinos, which originate from the decays of particles produced by cosmic rays accelerated via the first-order Fermi mechanism and are thus expected to follow an $E^{-2}$ spectrum. This search extends the field of view above the horizon into the large background of atmospheric muons. In order to reduce this background, strict cuts on the energy of events need to be applied. This makes the search above the horizon primarily sensitive to extremely high energy (> PeV) sources.
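A short calculation on the flux alone shows why event energy separates the two populations. For a power law $dN/dE \propto E^{-\gamma}$ with $\gamma > 1$, the fraction of events above a cut $E_c$, relative to those above a threshold $E_0$, follows directly from the integral below. The values $E_0 = 100$ GeV and $E_c = 10$ TeV are chosen only for illustration, and the detector effective area, which rises with energy, is ignored.

$$
\frac{N(>E_c)}{N(>E_0)} = \frac{\int_{E_c}^{\infty} E^{-\gamma}\,dE}{\int_{E_0}^{\infty} E^{-\gamma}\,dE} = \left(\frac{E_c}{E_0}\right)^{1-\gamma}
$$

$$
\gamma = 3.6:\quad 100^{-2.6} = 10^{-5.2} \approx 6.3 \times 10^{-6}, \qquad \gamma = 2:\quad 100^{-1} = 10^{-2}
$$

A 10 TeV cut therefore keeps roughly $10^{3.2} \approx 1600$ times more of an $E^{-2}$ flux, relative to its content above 100 GeV, than of an $E^{-3.6}$ flux.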

II. METHODOLOGY

An unbinned maximum likelihood analysis, accounting for individual reconstructed event uncertainties and energy estimators, is used in IceCube point source analyses. A full description can be found in Braun et al. [1]. This method improves the sensitivity to astrophysical sources over directional clustering alone by leveraging the event energies to separate hard-spectrum signals from the softer spectrum of the atmospheric neutrino or muon background. For each tested direction in the sky, the best fit is found for the number of signal events $n_s$ over background and for the spectral index $\gamma$ of a power law describing the excess events. The likelihood ratio of the best-fit hypothesis to the null hypothesis ($n_s = 0$) forms the test statistic. The significance of the result is evaluated by performing the analysis on scrambled data sets, randomizing the events in right ascension but keeping all other event properties fixed. Uniform exposure in right ascension is ensured because the detector rotates completely each day, and its location at 90° south latitude gives a uniform background within each declination band. Events that are nearly vertical (declination < −85° or > 85°) are left out of the analysis, since scrambling in right ascension does not work in the polar regions.
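The likelihood described above can be sketched in a reduced form. The Python example below fits only $n_s$ for a fixed source position, using the mixture likelihood $\mathcal{L}(n_s) = \prod_i \left[\frac{n_s}{N} S_i + \left(1 - \frac{n_s}{N}\right) B_i\right]$ in the spirit of Braun et al. [1], but with several simplifying assumptions: the spectral index $\gamma$ and the energy PDFs are dropped, the signal PDF $S_i$ is a 2-D Gaussian in the small-angle approximation with per-event width $\sigma_i$, and the background PDF $B_i$ is taken as isotropic ($1/4\pi$) instead of the declination-dependent density measured from data. The test statistic is $2\log\lambda = 2[\log\mathcal{L}(\hat n_s) - \log\mathcal{L}(0)]$.

```python
import math


def angular_distance(ra1, dec1, ra2, dec2):
    """Great-circle distance in radians between two directions given in degrees."""
    ra1, dec1, ra2, dec2 = map(math.radians, (ra1, dec1, ra2, dec2))
    h = (math.sin((dec2 - dec1) / 2) ** 2
         + math.cos(dec1) * math.cos(dec2) * math.sin((ra2 - ra1) / 2) ** 2)
    return 2 * math.asin(min(1.0, math.sqrt(h)))  # haversine formula


def signal_pdf(r, sigma):
    """2-D Gaussian point spread function per steradian (small-angle approximation)."""
    return math.exp(-r * r / (2 * sigma * sigma)) / (2 * math.pi * sigma * sigma)


def fit_point_source(events, src_ra, src_dec):
    """Maximize log L(n_s) for events [(ra_deg, dec_deg, sigma_deg), ...].

    Returns (n_s_hat, TS) with TS = 2 * [log L(n_s_hat) - log L(0)].
    """
    bkg = 1.0 / (4 * math.pi)  # ASSUMPTION: isotropic background PDF per sr
    S = [signal_pdf(angular_distance(ra, dec, src_ra, src_dec), math.radians(sig))
         for ra, dec, sig in events]
    n_events = len(S)

    def grad(x):  # d(log L)/dx with x = n_s / N; decreasing because log L is concave
        return sum((s - bkg) / (x * s + (1 - x) * bkg) for s in S)

    if grad(0.0) <= 0:          # best fit sits at the boundary n_s = 0
        return 0.0, 0.0
    lo, hi = 0.0, 1.0 - 1e-9
    if grad(hi) > 0:            # best fit at (almost) every event being signal
        x = hi
    else:
        for _ in range(200):    # bisection on the derivative
            mid = 0.5 * (lo + hi)
            if grad(mid) > 0:
                lo = mid
            else:
                hi = mid
        x = 0.5 * (lo + hi)
    ts = 2 * sum(math.log((x * s + (1 - x) * bkg) / bkg) for s in S)
    return x * n_events, ts


# Five events clustered near a test source plus 90 events far away.
near = [(150.0 + dx, 10.0 + dy, 1.0)
        for dx, dy in [(0, 0), (0.3, 0), (0, -0.3), (-0.4, 0.2), (0.2, 0.4)]]
far = [(float(ra), -30.0, 1.0) for ra in range(0, 360, 4)]
print(fit_point_source(near + far, 150.0, 10.0))
```

Because $\log\mathcal{L}$ is concave in $n_s$, a bisection on its derivative is enough to locate the maximum; with five clustered events among 95, the fit returns $\hat n_s$ close to 5.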

Two point-source searches are performed. The first is an all-sky search, in which the maximum likelihood ratio is evaluated for each direction on a grid much finer than the angular resolution. The significance of any point on the grid is determined by the fraction of scrambled data sets containing at least one grid point with a log likelihood ratio higher than the one observed in the data. This fraction is the post-trial p-value for the all-sky search. Because the all-sky search includes a large number of effective trials, the second search is restricted to the directions of a priori selected sources of interest. The post-trial p-value for this search is again calculated by performing the same analysis on scrambled data sets.
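The scrambling procedure can be sketched as follows. This Python example draws new right ascensions uniformly while keeping declinations fixed, recomputes the maximum test statistic over the sky for each scrambled set, and reports the fraction that meets or exceeds the observed value. The function passed in as `max_ts` is where the full likelihood scan over the grid would go; the binned event count used in the usage example is a hypothetical toy stand-in, not the IceCube test statistic.

```python
import random


def scramble_ra(events, rng):
    """Copy of the events with right ascension drawn uniformly from [0, 360) deg;
    declination and every other event property are kept unchanged."""
    return [(rng.uniform(0.0, 360.0),) + tuple(ev[1:]) for ev in events]


def post_trial_p_value(events, max_ts, n_scrambles=1000, seed=1):
    """Fraction of RA-scrambled data sets whose sky-wide maximum test statistic
    is at least as large as the one observed in the real data."""
    rng = random.Random(seed)
    observed = max_ts(events)
    exceed = sum(max_ts(scramble_ra(events, rng)) >= observed
                 for _ in range(n_scrambles))
    return exceed / n_scrambles


def max_count_in_ra_bins(events, width_deg=10.0):
    """HYPOTHETICAL toy stand-in for the all-sky scan: the largest number of
    events falling in any right-ascension bin of the given width."""
    n_bins = int(360.0 / width_deg)
    counts = [0] * n_bins
    for ev in events:
        counts[int(ev[0] // width_deg) % n_bins] += 1
    return max(counts)


# (ra_deg, dec_deg) pairs: 30 events piled up at one right ascension plus 60 spread out.
clustered = [(100.5, 20.0)] * 30 + [(i * 6 + 0.5, 20.0) for i in range(60)]
print(post_trial_p_value(clustered, max_count_in_ra_bins, n_scrambles=200))
```

For the a priori source list the same loop applies, with `max_ts` returning the largest test statistic over the catalogue positions instead of over the whole grid.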

III. EVENT SELECTION

Forty strings of IceCube were operational from April 2008 to May 2009 with a ∼90% duty cycle after a good-run selection based on detector stability. The $\sim 3 \times 10^{10}$ triggered events per year are first reduced to $\sim 1 \times 10^{9}$ events using low-level likelihood reconstructions and energy estimators as part of an online filtering system on site. These filtered events are sent over satellite to a data center in the North for further processing, including higher-level likelihood reconstructions for better angular resolution. Applying the analysis-level cuts (described below) that optimize the sensitivity to point sources finally yields a sample of $\sim 3 \times 10^{4}$ events. Due to offline filtering constraints, 144 days of livetime were used to design the analysis strategy and finalize the event selection, keeping the time and right ascension of the events blinded. This represents about one-half of the final 40-string data sample. Because the northern and southern skies present very different challenges, two techniques are used to reduce the background due to cosmic ray muons.

For the northern sky, the Earth filters out atmospheric muons. Only neutrinos can penetrate all the way through the Earth and interact near the detector to create up-going muons. However, since down-going atmospheric muons trigger the detector at ∼1 kHz, even a small fraction of mis-reconstructed events contaminates the northern sky search. Events may be mis-reconstructed due to random noise or to light from muons of independent cosmic ray showers coincident in the same readout window of ±10 µs. Therefore, strict event selection is still required to reject mis-reconstructed down-going events. This selection is based on track-quality parameters (the reduced likelihood of the track fit and the directional width of the likelihood space around the best track fit [2]), a likelihood ratio between the best up-going and down-going track solutions, and a requirement that the event's set of hits can be split into two parts that both reconstruct as nearly up-going. Although the track-quality parameters have very little declination dependence, the last two parameters only work for selecting up-going neutrino candidates and remove down-going events. This event selection provides optimal sensitivity to sources of neutrinos in the TeV–PeV energy range.

In the southern sky, energy estimators were used to

separate the large number of atmospheric muons from a

hypothetical source of neutrinos with a harder spectrum.

After track-quality selections, similar but tighter than

for the up-going sample, a cut based on an energy

estimator is made until a fixed number of events per

steradian is achieved. Because only the highest energy

events pass the selection, sensitivity is primarily to

neutrino sources at PeV energies and above. Unlike

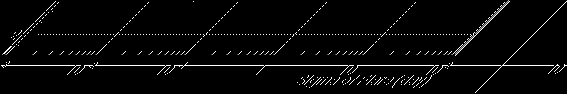

for the northern sky, which is a ∼ 90% pure sample

ν

/ GeV )

10

(E

log

2

3

4

5

6

7

8

9

(E/GeV)]

10

dP/d[log

0

0.1

0.2

0.3

0.4

0.5

0.6

0.7

0.8

Atmospheric (up-going)

-2

(up-going)

E

-2

(down-going)

E

-1.5

(down-going)

E

Fig. 1: Probability density (P) of neutrino energies at

final cut level for atmospheric and an E

−2

spectrum of

neutrinos averaged over the northern sky and E

−1.5

in

the southern sky.

of neutrino-induced muons, the event sample in the

southern sky is almost entirely well-reconstructed high

energy atmospheric muons and muon bundles.
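The southern-sky selection, which tightens an energy cut until a fixed number of events per steradian remains, can be sketched per declination band. In this Python example the band edges, the target density, and the rule of keeping the highest-ranked events in each band are illustrative assumptions; the solid angle of a band between declinations $\delta_1$ and $\delta_2$ is $2\pi(\sin\delta_2 - \sin\delta_1)$.

```python
import math


def band_solid_angle(dec_lo_deg, dec_hi_deg):
    """Solid angle in sr of the declination band [dec_lo, dec_hi]."""
    return 2 * math.pi * (math.sin(math.radians(dec_hi_deg))
                          - math.sin(math.radians(dec_lo_deg)))


def energy_thresholds(events, band_edges_deg, target_per_sr):
    """Per-band cut on an energy proxy leaving ~target_per_sr events per sr.

    events: list of (dec_deg, energy_proxy); events with a proxy at or above
    the returned threshold are kept (ties may keep a few extra events).
    """
    thresholds = []
    for lo, hi in zip(band_edges_deg[:-1], band_edges_deg[1:]):
        proxies = sorted((e for dec, e in events if lo <= dec < hi), reverse=True)
        n_keep = round(target_per_sr * band_solid_angle(lo, hi))
        if n_keep == 0 or not proxies:
            thresholds.append(math.inf)        # nothing to keep in this band
        elif n_keep >= len(proxies):
            thresholds.append(proxies[-1])     # band already below the target
        else:
            thresholds.append(proxies[n_keep - 1])
    return thresholds


# Toy band from -30 to 0 deg (solid angle pi sr) with 100 events of proxy 1..100.
toy = [(-15.0, float(e)) for e in range(1, 101)]
print(energy_thresholds(toy, [-30.0, 0.0], target_per_sr=10 / math.pi))
```

Fixing the density per steradian rather than a single global energy cut keeps the background rate flat in declination, which suits the declination-band background estimate used by the likelihood.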

IV. PERFORMANCE

The performance of the detector and the analysis

is characterized using a simulation of ν

µ

and ν¯

µ

. At-

mospheric muon background is simulated using COR-

SIKA [3]. Muon propagation through the Earth and

ice are done using MMC [4]. A detailed simulation of

the ice [5] propagates the Cherenkov photon signal to

each DOM. Finally, a simulation of the DOM, including

angular acceptance and electronics, yields an output

treated identically to data. For an E

−2

spectrum of

neutrinos the median angular difference between the

neutrino and the reconstructed direction of the muon in

the northern (southern) sky is 0.8

◦

(0.6

◦

). The different

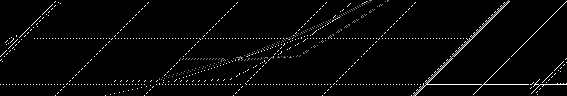

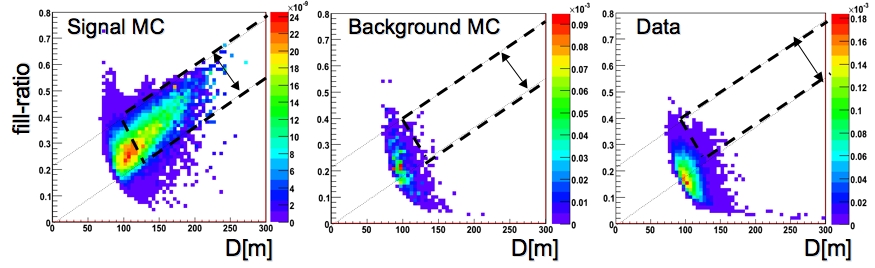

energy distributions in each hemisphere shown in Fig. 1

cause this effect, since the reconstruction performs better

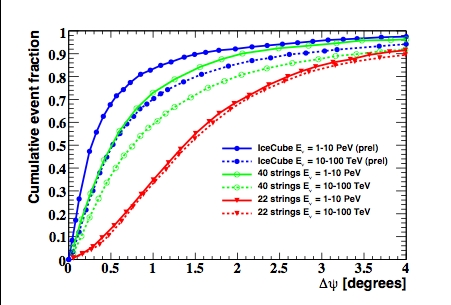

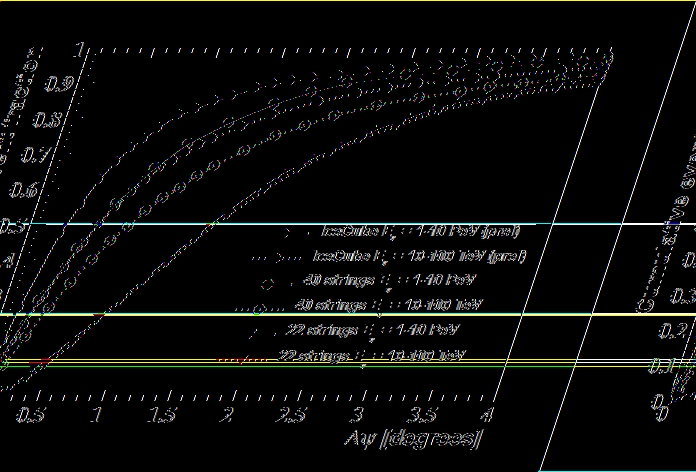

at higher energies. The cumulative point spread functions

for the 22-, 40-, and 80-string configurations of IceCube

are shown in Fig. 2 for two different ranges of energy.
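The median angular difference and the cumulative point spread function are simple statistics of simulated events. This Python sketch computes both from lists of true and reconstructed directions; the directions and radii in the usage example are made up for illustration.

```python
import math
from statistics import median


def angular_difference_deg(ra1, dec1, ra2, dec2):
    """Great-circle angle in degrees between two directions given in degrees."""
    ra1, dec1, ra2, dec2 = map(math.radians, (ra1, dec1, ra2, dec2))
    h = (math.sin((dec2 - dec1) / 2) ** 2
         + math.cos(dec1) * math.cos(dec2) * math.sin((ra2 - ra1) / 2) ** 2)
    return math.degrees(2 * math.asin(min(1.0, math.sqrt(h))))


def psf_summary(true_dirs, reco_dirs, radii_deg):
    """Median angular error and the cumulative fraction of events within each radius."""
    diffs = [angular_difference_deg(*t, *r) for t, r in zip(true_dirs, reco_dirs)]
    cumulative = [sum(d <= radius for d in diffs) / len(diffs) for radius in radii_deg]
    return median(diffs), cumulative


true_dirs = [(100.0, 10.0)] * 5
reco_dirs = [(100.0, 10.0 + d) for d in (0.2, 0.4, 0.8, 1.0, 2.0)]
print(psf_summary(true_dirs, reco_dirs, [0.5, 1.5, 3.0]))
```

Evaluating `cumulative` on a fine grid of radii gives curves of the kind shown in Fig. 2.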

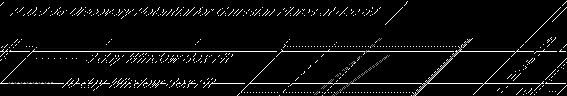

Fig. 3 shows the effective area to an equal-ratio flux of $\nu_\mu + \bar{\nu}_\mu$. Fig. 4 shows the 40-string sensitivity to an $E^{-2}$ spectrum of neutrinos for 330 days of livetime, compared with the 22-string configuration of IceCube as well as with the ANTARES sensitivity, which is primarily relevant for the southern sky. The expected 80-string performance is based on the same methodology and event selection for the up-going region as this work.

V. DISCUSSION

The previous season of IceCube data, recorded with the 22-string configuration, has already been the subject of point source searches [7]. That analysis included 5114 events, mostly atmospheric neutrinos with a contamination of about 5% atmospheric muons, collected during a livetime of about 276 days. No evidence was found for a signal, and the most significant location is at 153.4° r.a., 11.4° dec. Accounting for all trial factors, this is consistent with the null hypothesis at the 2.2σ level. The events in the most significant location did not show a clear time-dependent pattern, and these coordinates have been included in the catalogue of sources for the 40-string analysis.

Fig. 2: The point spread function of the 22-, 40-, and 80-string IceCube configurations in two energy bins. This is the cumulative distribution of the angular difference between the neutrino and the reconstructed muon track using simulated neutrinos. The large improvement between the 22- and 40-string point spread functions at high energies is due to an improvement in the reconstruction, which now uses charge information.

[Figure: effective area [m²] (log scale, $10^{-3}$ to $10^{4}$) for $\nu_\mu + \bar{\nu}_\mu$ versus $\log_{10}(E_\nu/\mathrm{GeV})$ (2 to 9) in six zenith ranges: (0°, 30°), (30°, 60°), (60°, 90°), (90°, 120°), (120°, 150°), (150°, 180°).]

Fig. 3: The IceCube 40-string solid-angle-averaged effective area to an equal-ratio flux of $\nu_\mu$ and $\bar{\nu}_\mu$, reconstructed within 2° of the true direction. The different shapes of each zenith band are due to a combination of event selection and how much of the Earth the neutrinos must travel through. Since the chance of a neutrino interacting increases with its energy, high energy neutrinos are absorbed in the Earth in the very up-going region. Only near the horizon do muons from > PeV neutrinos often reach IceCube. Above the horizon, low energy events are removed by cuts, and in the very down-going region effective area at high energies is lost due to insufficient target material.

[Figure: $E^2\,dN/dE$ [TeV cm⁻² s⁻¹] (log scale, $10^{-12}$ to $10^{-9}$) versus declination (−80° to 80°); curves: 22 strings 275.7 d; 40 strings prel. sens. 330 d; IceCube prelim 365 d; ANTARES prel. 365 d.]

Fig. 4: 40-string IceCube sensitivity for 330 days as a function of declination to a point source with differential flux $d\Phi/dE = \Phi_0 (E/\mathrm{TeV})^{-2}$. Specifically, $\Phi_0$ is the minimum source flux normalization (assuming an $E^{-2}$ spectrum) such that 90% of simulated trials result in a log likelihood ratio $\log\lambda$ greater than the median log likelihood ratio in background-only trials ($\log\lambda = 0$). Comparisons are also shown for the 22-string configuration and the expected performance of the 80-string configuration, as well as the ANTARES [6] sensitivity.
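The sensitivity definition in the Fig. 4 caption can be illustrated in a much simpler setting. The Python sketch below replaces the unbinned likelihood with a single Poisson counting bin, which is a deliberate simplification and not the method behind Fig. 4: it finds the smallest mean signal $\mu_s$ for which 90% of trials yield a count above the median of background-only trials, the counting analogue of $\log\lambda > 0$. The background means in the usage loop are arbitrary.

```python
import math


def poisson_cdf(k, mu):
    """P(n <= k) for a Poisson distribution with mean mu (direct summation)."""
    term = math.exp(-mu)
    total = term
    for i in range(1, k + 1):
        term *= mu / i
        total += term
    return total


def poisson_median(mu):
    """Smallest k with P(n <= k) >= 0.5."""
    k = 0
    while poisson_cdf(k, mu) < 0.5:
        k += 1
    return k


def counting_sensitivity(mu_bkg, power=0.9, tol=1e-6):
    """Smallest signal mean mu_s such that a fraction `power` of trials gives a
    count above the background-only median (counting analogue of log(lambda) > 0)."""
    k_med = poisson_median(mu_bkg)

    def prob_above_median(mu_s):
        return 1.0 - poisson_cdf(k_med, mu_bkg + mu_s)

    lo, hi = 0.0, 1.0
    while prob_above_median(hi) < power:   # bracket the solution
        hi *= 2.0
    while hi - lo > tol:                   # bisection; the probability rises with mu_s
        mid = 0.5 * (lo + hi)
        if prob_above_median(mid) >= power:
            hi = mid
        else:
            lo = mid
    return hi


for mu_b in (0.0, 1.0, 5.0):
    print(mu_b, round(counting_sensitivity(mu_b), 3))
```

With no background the median count is zero and the condition reduces to $P(n \ge 1) = 1 - e^{-\mu_s} \ge 0.9$, so $\mu_s = \ln 10 \approx 2.3$ events. Turning $\mu_s$ into a flux normalization $\Phi_0$ would further require dividing by the livetime times $\int A_\mathrm{eff}(E)\,(E/\mathrm{TeV})^{-2}\,dE$.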

Since the 22-string analysis, a number of improvements have been achieved. First, an additional analysis of the 22-string data optimized for $E^{-2}$ and harder spectra was performed down to −50° declination with a binned search [8]. These analyses are now unified into one all-sky search which uses the energy of the events and extends to −85° declination. Second, a new reconstruction that uses the charge observed in each DOM performs better, especially on high energy events. Third, an improved energy estimator, based on the photon density along the muon track, has better muon energy resolution.

With construction more than half complete, IceCube is already beginning to demonstrate its potential as an extraterrestrial neutrino observatory. The latest science run with 40 strings was the first detector configuration with one axis as long as that of the final array. Horizontal muon tracks reconstructed along this axis provide the first class of events of the same quality as those in the finished 80-string detector.

There are now 59 strings of IceCube deployed and taking data. Further development of reconstruction and analysis techniques, through a better understanding of the detector and of the depth-dependent properties of the ice, has continued to improve physics results. New techniques in the southern sky may include separating single muons induced by high-energy neutrinos from the muon bundles produced in cosmic-ray air showers. At lower energies, the identification of starting muon tracks from neutrinos interacting inside the detector will be aided by the addition of DeepCore [9].


REFERENCES

[1] J. Braun et al. Methods for point source analysis in high energy neutrino telescopes. Astropart. Phys. 29, 155, 2006.

[2] T. Neunhoffer. Astropart. Phys. 25, 220, 2006.

[3] D. Heck et al. CORSIKA: A Monte Carlo code to simulate extensive air showers. FZKA, Tech. Rep., 1998.

[4] D. Chirkin and W. Rhode. Preprint hep-ph/0407075, 2004.

[5] J. Lundberg et al. Nucl. Instrum. Meth. A581, 619, 2007.

[6] J. A. Aguilar et al. Expected discovery potential and sensitivity to neutrino point-like sources of the ANTARES neutrino telescope. In Proceedings of the 30th ICRC, Merida, 2007.

[7] R. Abbasi et al. (IceCube Collaboration). First Neutrino Point-Source Results from IceCube in the 22-String Configuration. Submitted, 2009, arXiv:0905.2253.

[8] R. Lauer and E. Bernardini. Extended Search for Point Sources of Neutrinos Below and Above the Horizon. In Proceedings of the 2nd Heidelberg Workshop, 2009, arXiv:0903.5434.

[9] C. Wiebusch et al. (IceCube Collaboration), these proceedings.


IceCube Time-Dependent Point Source Analysis Using Multiwavelength Information

M. Baker∗, J. A. Aguilar∗, J. Braun∗, J. Dumm∗, C. Finley∗, T. Montaruli∗, S. Odrowski†, E. Resconi† for the IceCube Collaboration‡

∗Dept. of Physics, University of Wisconsin, Madison, WI 53706, USA

†Max-Planck-Institut für Kernphysik, D-69177 Heidelberg, Germany

‡See special section of these proceedings

Abstract. To enhance IceCube's sensitivity to astrophysical objects, we have developed a dedicated search for neutrinos in coincidence with flares from blazars and high-energy binary systems detected in various photon wavebands. The analysis is based on a maximum likelihood method that includes the reconstructed position, the estimated energy and the arrival time of IceCube events. After a short summary of the phenomenological arguments motivating this approach, we present results from data collected with 22 IceCube strings in 2007-2008. First results for the 40-string IceCube configuration during 2008-2009 will be presented at the conference. We also report on plans to use long light curves and to extract from them a time-variable probability density function.

Keywords: Neutrino astronomy, Multiwavelength astronomy
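The abstract mentions extracting a time-variable probability density function from long light curves and using event arrival times in the likelihood. One simple way to build such a time term, assumed here purely for illustration, is to take the signal time PDF proportional to the part of a binned light curve above a chosen flux threshold and to compare it with a background PDF that is uniform over the data-taking period. The threshold, the binning and the function names are hypothetical, not the method adopted in this paper.

```python
import bisect


def light_curve_time_pdf(edges, fluxes, threshold):
    """Piecewise-constant signal time PDF from a binned light curve.

    edges: increasing bin edges (len(fluxes) + 1 values, e.g. in MJD).
    Only the flux above `threshold` contributes; the PDF integrates to 1.
    """
    excess = [max(f - threshold, 0.0) for f in fluxes]
    norm = sum(e * (edges[i + 1] - edges[i]) for i, e in enumerate(excess))
    if norm <= 0.0:
        raise ValueError("light curve never exceeds the threshold")
    density = [e / norm for e in excess]

    def pdf(t):
        # Zero outside the light curve; otherwise the density of the bin containing t.
        if t < edges[0] or t >= edges[-1]:
            return 0.0
        return density[bisect.bisect_right(edges, t) - 1]

    return pdf


def time_ratio(t, signal_pdf, t_start, t_stop):
    """Signal time PDF divided by a uniform background PDF 1/(t_stop - t_start)."""
    return signal_pdf(t) * (t_stop - t_start)
```

With this choice, an event arriving during a flare receives a time ratio above 1 and an event in a quiescent bin receives 0; the time ratio would multiply each event's space and energy ratio $S_i/B_i$ in the likelihood.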

I. INTRODUCTION

IceCube is a high-energy neutrino observatory currently under construction at the geographic South Pole. The full detector will be composed of 86 strings of 60 Digital Optical Modules (DOMs) each, deployed between 1500 and 2500 m below the glacier surface. A six-string Deep Core with higher quantum efficiency photomultipliers and closer DOM spacing in the lower detector will enhance sensitivity to low-energy neutrinos. Muons passing through the detector emit Čerenkov light, allowing reconstruction with ≲1° angular resolution in the full detector and about 1.5° (median) in the 22-string configuration. In this paper we describe the

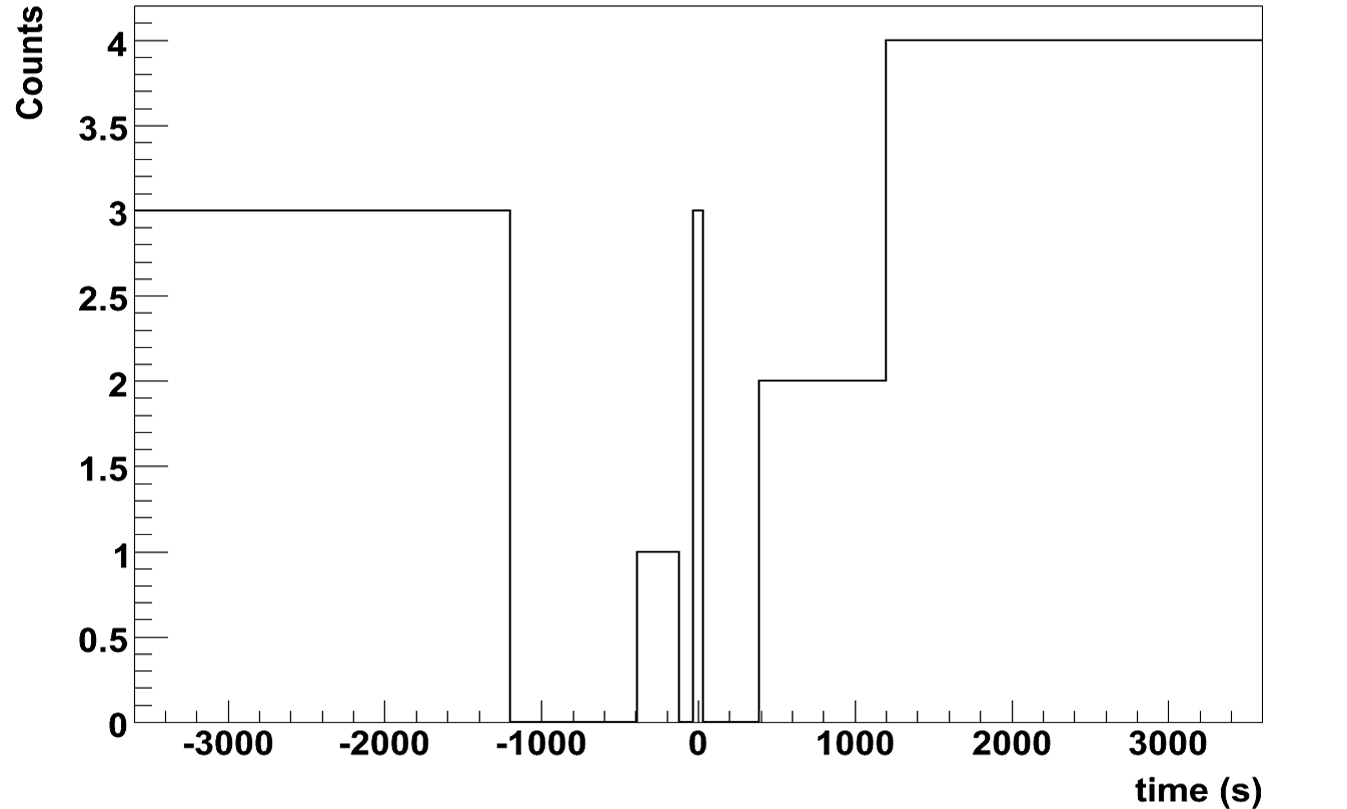

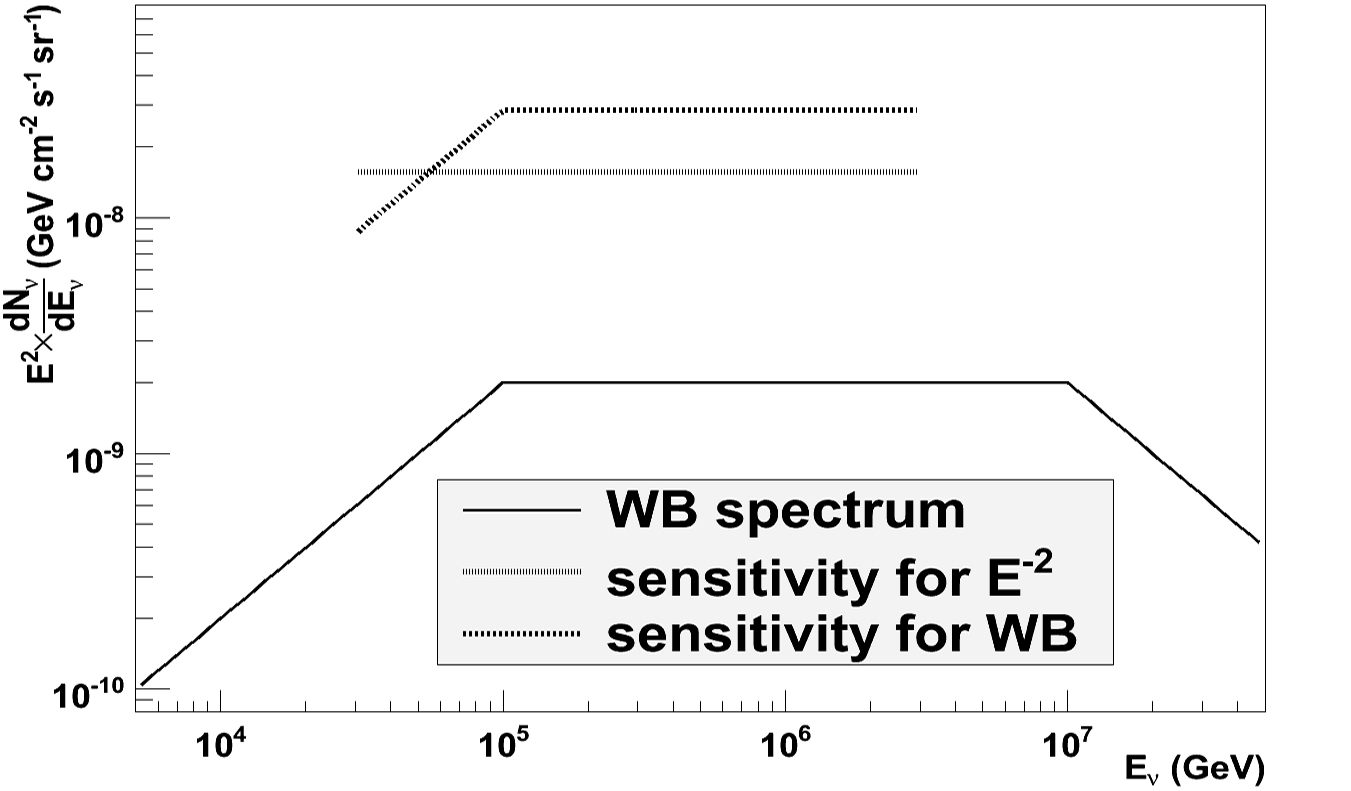

introduction of a time-dependent term to the standard