Searching for Quantum Gravity with High-energy Atmospheric Neutrinos and AMANDA-II

by

John Lawrence Kelley

A dissertation submitted in partial fulfillment of the

requirements for the degree of

Doctor of Philosophy

(Physics)

at the

University of Wisconsin – Madison

2008

© 2008 John Lawrence Kelley

All Rights Reserved

Searching for Quantum Gravity with High-energy Atmospheric Neutrinos and AMANDA-II

John Lawrence Kelley

Under the supervision of Professor Albrecht Karle

At the University of Wisconsin – Madison

The AMANDA-II detector, operating since 2000 in the deep ice at the geographic South Pole,

has accumulated a large sample of atmospheric muon neutrinos in the 100 GeV to 10 TeV energy

range. The zenith angle and energy distribution of these events can be used to search for various

phenomenological signatures of quantum gravity in the neutrino sector, such as violation of Lorentz

invariance (VLI) or quantum decoherence (QD). Analyzing a set of 5511 candidate neutrino events

collected during 1387 days of livetime from 2000 to 2006, we find no evidence for such effects and set

upper limits on VLI and QD parameters using a maximum likelihood method. Given the absence of

new flavor-changing physics, we use the same methodology to determine the conventional atmospheric

muon neutrino flux above 100 GeV.

Albrecht Karle (Adviser)


The collaborative nature of science is often one of its more enjoyable aspects, and I owe a debt

of gratitude to many people who helped make this work possible.

First, I offer my thanks to my adviser, Albrecht Karle, for his support and for the freedom to

pursue topics I found interesting (not to mention multiple opportunities to travel to the South Pole).

I would like to thank Gary Hill for numerous helpful discussions, especially about statistics, and for

always being willing to talk through any problem I might have. Francis Halzen sold me on IceCube

during my first visit to Madison, and I have learned from him never to forget the big picture.

Many thanks to Teresa Montaruli for her careful analysis and insightful questions, and to

Paolo Desiati for listening patiently and offering helpful advice. I thank Dan Hooper for suggesting

that I look into the phenomenon of quantum decoherence and thus starting me off on this analysis

in the first place. And I thank Kael Hanson for providing fantastic opportunities for involvement in

IceCube hardware and software development, and Bob Morse for a chance to explore radio detection

techniques.

I offer my thanks as well to my officemate and friend, Jim Braun, for a collaboration that

has made the past years much more enjoyable. The O(1000) pots of coffee we have shared were also

rather instrumental in fueling this work.

I would like to thank my parents, James and Lorraine, for their unflagging encouragement

through all of my career-related twists and turns. Finally, I offer my deepest gratitude to my partner,

Autumn, for her support, flexibility, and understanding — and for leaving our burrito- and sushi-filled

life in San Francisco to allow me to pursue this degree. May the adventures continue.


Contents

1 Introduction
2 Cosmic Rays and Atmospheric Neutrinos
2.1 Cosmic Rays
2.2 Atmospheric Neutrinos and Muons
2.2.1 Production
2.2.2 Energy Spectrum and Angular Distribution
2.3 Neutrino Oscillations
3 Quantum Gravity Phenomenology
3.1 Violation of Lorentz Invariance
3.2 Quantum Decoherence
4 Neutrino Detection
4.1 General Techniques
4.2 Čerenkov Radiation
4.3 Muon Energy Loss
4.4 Other Event Topologies
4.5 Background
5 The AMANDA-II Detector
5.1 Overview
5.2 Optical Properties of the Ice
5.3 Data Acquisition and Triggering
5.4 Calibration
6 Simulation and Data Selection
6.1 Simulation
6.2 Filtering and Track Reconstruction
6.3 Quality Cuts
6.3.1 Point-source Cuts
6.3.1.1 Likelihood Ratio
6.3.1.2 Smoothness
6.3.1.3 Paraboloid Error
6.3.1.4 Flariness and Stability Period
6.3.2 Purity Cuts
6.3.3 High-$N_{\rm ch}$ Excess and Additional Purity Cuts
6.4 Final Data Sample
6.5 Effective Area
6.6 A Note on Blindness
7 Analysis Methodology
7.1 Observables
7.2 Statistical Methods
7.2.1 Likelihood Ratio
7.2.2 Confidence Intervals
7.3 Incorporating Systematic Errors
7.4 Discussion
7.5 Complications
7.5.1 Computational Requirements
7.5.2 Zero Dimensions
7.6 Binning and Final Event Count
8 Systematic Errors
8.1 General Approach
8.2 Sources of Systematic Error
8.2.1 Atmospheric Neutrino Flux Normalization
8.2.2 Neutrino Interaction
8.2.3 Reconstruction Bias
8.2.4 Tau-neutrino-induced Muons
8.2.5 Background Contamination
8.2.6 Timing Residual Uncertainty
8.2.7 Muon Energy Loss
8.2.8 Primary Cosmic Ray Slope
8.2.9 Charmed Meson Contribution
8.2.10 Rock Density
8.2.11 Pion/Kaon Ratio
8.2.12 Optical Module Sensitivity and Ice
8.3 Final Analysis Parameters
9 Results
9.1 Final Zenith Angle and $N_{\rm ch}$ Distributions
9.2 Likelihood Ratio and Best-fit Point
9.3 Upper Limits on Quantum Gravity Parameters
9.3.1 Violation of Lorentz Invariance
9.3.2 Quantum Decoherence
9.4 Determination of the Atmospheric Neutrino Flux
9.4.1 Result Spectrum
9.4.2 Valid Energy Range of Result
9.4.3 Dependence on Flux Model
9.5 Comparison with Other Results
10 Conclusions and Outlook
10.1 Summary
10.2 Discussion
10.3 Outlook
10.3.1 IceCube
10.3.2 Sensitivity Using Atmospheric Neutrinos
10.3.3 Astrophysical Tests of Quantum Gravity
A Non-technical Summary
B Effective Area
C Reweighting of Cosmic Ray Simulation
C.1 Event Weighting (Single Power Law)
C.2 Livetime
C.3 Event Weighting (Hörandel)
D Effective Livetimes and their Applications
D.1 Formalism
D.1.1 Constant Event Weight
D.1.2 Variable Event Weights
D.2 Application 1: Cosmic Ray Simulation
D.3 Application 2: The Error on Zero
D.3.1 A Worst-case Scenario
D.3.2 Variable Event Weights
D.3.3 An Example
D.3.4 A Caveat


Chapter 1

Introduction

Our Universe is a violent place. Stars burn through their elemental fuel and explode. Matter spirals

to its doom around supermassive black holes at the center of galaxies. Space still glows in every

direction from the primordial explosion of the Big Bang.

Born from these inhospitable conditions are the neutrinos. Anywhere nuclear reactions or

particle collisions take place, neutrinos are likely to be produced — in the Big Bang, in stars, and

even in our own nuclear reactors. The neutrino, having no electric charge, interacts only via the

weak force, and thus normal matter is nearly transparent to it. Trillions of neutrinos pass through

our bodies every second, and we never notice.

Wolfgang Pauli postulated the existence of the neutrino in 1933 to solve a problem with missing

energy in radioactive beta decay [1]. Twenty years later, Reines and Cowan first detected neutrinos

by placing liquid scintillator targets next to the Hanford and Savannah River nuclear reactors [2].

Today, we have also detected neutrinos from our Sun ([3]; see also fig. 1.1), from nuclear decay deep

in the Earth [4], and even from a nearby supernova [5, 6]. Figure 1.2 shows the fluxes and energy

ranges spanned by known and hypothetical neutrino sources.

Cosmic rays are another product of the high-energy processes in the universe: high-energy protons and atomic nuclei accelerated to energies far beyond those of any particle accelerator on Earth. These cosmic rays bombard the Earth continuously, producing yet more neutrinos that rain down upon us from high in our atmosphere.

Given a large enough target, we can detect these high-energy atmospheric neutrinos. The

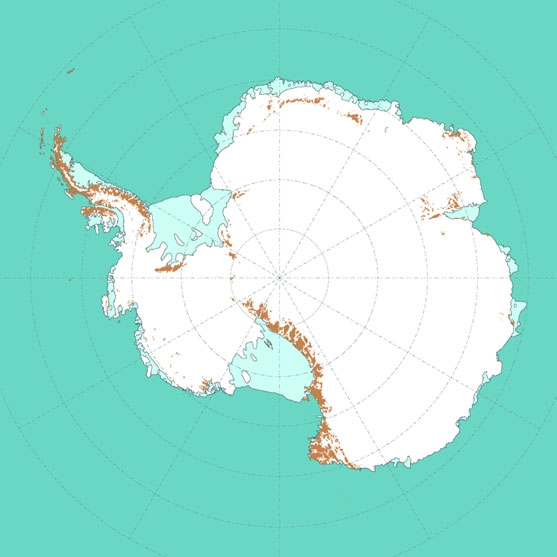

AMANDA-II neutrino detector employs the huge ice sheet at the South Pole as such a target, and

2

Figure 1.1: “Picture” of the Sun in neutrinos, as seen by the Super-Kamiokande neutrino

detector. Image credit: R. Svoboda and K. Gordan.

Figure 1.2: Neutrino fluxes as function of energy (multiplied by E

3

to enhance spectral

features) from various sources, including the cosmic neutrino background from the Big

Bang (CνB), supernovae neutrinos (SN ν), solar neutrinos, and atmospheric neutrinos

(from [7]).

3

uses sensitive light sensors deep in the ice to detect the light emitted by secondary particles produced

when a neutrino occasionally hits the ice or the bedrock. AMANDA-II accumulates such neutrinos at

the rate of about 16 per day, about four of which are sufficiently high quality to use for an analysis.

Why study these neutrinos? Nature provides a laboratory with energies far above what we can currently produce on Earth, and studying these high-energy neutrinos may reveal hints of surprising new physical effects. We know that our theories of gravity and quantum mechanics are mutually incompatible, but we have no established theory of quantum gravity to unify the two. At high enough energies, we may be able to probe effects of quantum gravity, and neutrinos may prove crucial to this effort.

In this work, we search the atmospheric neutrinos detected by AMANDA-II from 2000 to 2006 for evidence of quantum gravitational effects, using their reconstructed directions and approximate energies. We find no evidence for such effects, which leads us to set limits on the size of any violations of our existing theories. Finally, we determine the atmospheric neutrino flux as a function of energy, extending measurements by other neutrino experiments.


Chapter 2

Cosmic Rays and Atmospheric Neutrinos

2.1 Cosmic Rays

Cosmic rays are protons and heavier ionized nuclei with energies up to $10^{20}$ eV that constantly bombard the Earth’s atmosphere. Exactly where they come from and how they are accelerated to such incredible energies are both open questions. Nearly 100 years after Victor Hess’s balloon experiments in 1912 showed that cosmic rays come from outer space [8], we still do not know their sources. One of the main difficulties is that the magnetic field of the Galaxy scrambles any directional information that might point back to a source. Still, all but the highest-energy cosmic rays are thought to originate within our Galaxy, and the expanding shocks around supernova remnants are a likely acceleration site [9]. Figure 2.1 shows a composite image of the expanding shock wave around the Tycho supernova remnant.

The cosmic ray energy spectrum follows a power law, with differential flux approximately proportional to $E^{-2.7}$ [11]. Figure 2.2 shows measurements of the flux from both direct measurements (space- and balloon-based instruments) and indirect measurements (air shower arrays). Above about $10^{6}$ GeV, the spectrum steepens to approximately $dN/dE \propto E^{-3}$, a feature known as the knee. The exact mechanism for this transition is unknown, but one possibility is a rigidity-dependent cutoff of the spectrum as cosmic rays diffuse out of the Galaxy [12].
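To make the two spectral regimes concrete, the Python sketch below evaluates a toy broken power law using the indices and knee energy quoted above. It is illustrative only: the function name `cosmic_ray_flux` is hypothetical, the normalization is arbitrary, and the sharp break at exactly $10^6$ GeV is a simplifying assumption (the measured spectrum bends more gradually).

```python
def cosmic_ray_flux(energy_gev, norm=1.0, e_knee_gev=1e6,
                    index_below=2.7, index_above=3.0):
    """Toy all-particle differential flux dN/dE in arbitrary units.

    A power law E^-index_below up to the knee, then E^-index_above,
    with the two segments joined continuously at e_knee_gev.
    """
    if energy_gev <= 0:
        raise ValueError("energy must be positive")
    if energy_gev <= e_knee_gev:
        return norm * energy_gev ** (-index_below)
    # Above the knee: start from the flux at the knee and steepen.
    flux_at_knee = norm * e_knee_gev ** (-index_below)
    return flux_at_knee * (energy_gev / e_knee_gev) ** (-index_above)


# One decade in energy below the knee costs a factor 10^2.7 in flux;
# above the knee the same decade costs a factor 10^3.
print(cosmic_ray_flux(10.0) / cosmic_ray_flux(100.0))
print(cosmic_ray_flux(1e7) / cosmic_ray_flux(1e8))
```

Joining the segments at the knee keeps the flux continuous, so only the slope changes there.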

5

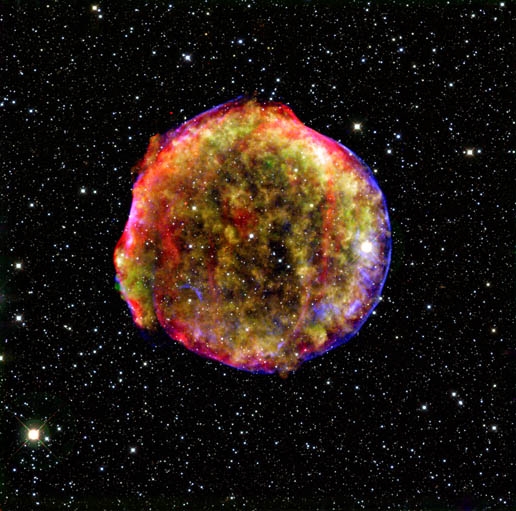

Figure 2.1:

X-ray and infrared multi-band image of Tycho’s supernova remnant

(SN 1572), a possible source of galactic cosmic ray acceleration [10]. Image credit:

MPIA/NASA/Calar Alto Observatory.

[Figure: “Energies and rates of the cosmic-ray particles”, $E^2\,dN/dE$ (GeV cm$^{-2}$ sr$^{-1}$ s$^{-1}$) vs. $E_{\rm kin}$ (GeV/particle) for protons only, all particles, electrons, positrons, and antiprotons; data from CAPRICE, BESS98, AMS, Ryan et al., Grigorov, JACEE, Akeno, Tien Shan, MSU, KASCADE, CASA-BLANCA, DICE, HEGRA, CasaMia, Tibet, AGASA, and HiRes.]

Figure 2.2: The cosmic ray energy spectrum as measured by various direct and indirect experiments, from [13]. The flux has been multiplied by $E^2$ to enhance features in the steep power-law spectrum.

2.2 Atmospheric Neutrinos and Muons

2.2.1 Production

As cosmic rays interact with air molecules in the atmosphere, a chain reaction of particle

production (and decay) creates an extensive air shower of electrons, positrons, pions, kaons, muons,

and neutrinos. Atmospheric neutrinos are produced through hadronic interactions generating charged

pions and kaons, which then decay into muons and muon neutrinos:

$$\pi^{+}\,(K^{+}) \to \mu^{+} + \nu_{\mu} \tag{2.1}$$

$$\pi^{-}\,(K^{-}) \to \mu^{-} + \bar{\nu}_{\mu} . \tag{2.2}$$

Some of the muons produced in the decay will also eventually decay via exchange of a $W^{\pm}$ boson, producing both electron and muon neutrinos:

$$\mu^{+} \to \bar{\nu}_{\mu} + e^{+} + \nu_{e} \tag{2.3}$$

$$\mu^{-} \to \nu_{\mu} + e^{-} + \bar{\nu}_{e} . \tag{2.4}$$

However, many of these atmospheric muons will survive to ground level and, depending on their

energy, can penetrate kilometers into the Earth before decaying. The process of atmospheric muon

and neutrino production is shown schematically in fig. 2.3.
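The flavor bookkeeping in eqs. 2.1 to 2.4 can be checked with a short Python sketch that follows each decay chain and counts the resulting neutrinos. This is a toy under stated assumptions: the table `DECAYS` and the function `final_neutrino_flavors` are hypothetical names introduced here, only the pion channel is listed, neutrinos and antineutrinos are lumped together, and energies are ignored. If every muon decays, each pion yields two muon-flavor neutrinos for each electron-flavor one; if the muon survives, as most do at high energies, only the muon neutrino from the meson decay remains.

```python
from collections import Counter

# Decay chains of eqs. 2.1-2.4, pion channel only.
DECAYS = {
    "pi+": ["mu+", "nu_mu"],
    "pi-": ["mu-", "nu_mu_bar"],
    "mu+": ["nu_mu_bar", "e+", "nu_e"],
    "mu-": ["nu_mu", "e-", "nu_e_bar"],
}


def final_neutrino_flavors(particle, muons_decay=True):
    """Count final-state neutrinos by flavor (nu and nu-bar combined)."""
    counts = Counter()
    for daughter in DECAYS.get(particle, []):
        if daughter.startswith("nu_"):
            counts[daughter.split("_")[1]] += 1        # "nu_mu_bar" -> "mu"
        elif daughter.startswith("mu") and muons_decay:
            counts += final_neutrino_flavors(daughter)  # follow the muon decay
    return counts


print(final_neutrino_flavors("pi+"))                     # every muon decays
print(final_neutrino_flavors("pi+", muons_decay=False))  # muon survives
```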

2.2.2 Energy Spectrum and Angular Distribution

Atmospheric muon neutrinos dominate over all other neutrino sources in the GeV to TeV

energy range. The flux of atmospheric electron neutrinos is over one order of magnitude smaller than

the flux of muon neutrinos at these energies [15]. While the flux of parent cosmic rays is isotropic, the kinematics of meson interaction and decay in the atmosphere alters the angular distribution of atmospheric $\nu_\mu$, making it a more complicated function of the zenith angle.

While elaborate three-dimensional calculations exist for the expected flux of atmospheric neu-

trinos [16, 17], an approximate analytic formulation is given by Gaisser [11]:

[Figure: schematic of a cosmic ray initiating a hadronic shower in the atmosphere, with meson decays producing muons and neutrinos and an accompanying electromagnetic shower.]

Figure 2.3: Atmospheric muon and neutrino production (from [14]).

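$$\frac{dN}{dE_\nu} = C\left[\frac{A_{\pi\nu}}{1 + B_{\pi\nu}\cos\theta^{*}\,E_\nu/\epsilon_\pi} + 0.635\,\frac{A_{K\nu}}{1 + B_{K\nu}\cos\theta^{*}\,E_\nu/\epsilon_K}\right], \tag{2.5}$$

where

$$A_{\pi\nu} = Z_{N\pi}\,\frac{(1 - r_\pi)^{\gamma}}{\gamma + 1} \tag{2.6}$$

and

$$B_{\pi\nu} = \frac{\gamma + 2}{\gamma + 1}\,\frac{1}{1 - r_\pi}\,\frac{\Lambda_\pi - \Lambda_N}{\Lambda_\pi \ln(\Lambda_\pi/\Lambda_N)} . \tag{2.7}$$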

Equivalent expressions hold for $A_{K\nu}$ and $B_{K\nu}$, and the factor 0.635 is the branching ratio for $K^{\pm} \to \mu^{\pm}\nu$. In the above, $\gamma$ is the integral spectral index (the differential spectrum falls as $E^{-(\gamma+1)}$, so $\gamma \approx 1.7$); $Z_{ij}$ is the spectrum-weighted moment of the integral cross section for the production of particle $j$ from particle $i$; $\Lambda_i$ is the atmospheric attenuation length of particle $i$; $\epsilon_i$ is the critical energy of particle $i$, at which the decay length equals the (vertical) interaction length; $r_i$ is the squared mass ratio $m_\mu^2/m_i^2$; and $\theta^{*}$ is not the zenith angle $\theta$ at the detector, but rather the zenith angle at the production height in the atmosphere.
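The Python sketch below evaluates the bracketed term of eq. 2.5, that is, the flux divided by the prefactor $C$ (which, in Gaisser’s treatment, also carries the power-law energy dependence of the primary spectrum). The numerical inputs are assumptions, not values given in this chapter: they are typical textbook numbers (for example, those tabulated in Gaisser’s *Cosmic Rays and Particle Physics*) and should be checked before any real use. The function names `a_and_b` and `gaisser_bracket` are hypothetical. The sketch shows the qualitative behavior encoded in eqs. 2.5 to 2.7: at fixed energy, more horizontal directions (smaller $\cos\theta^{*}$) give a larger flux, because their parent mesons travel through thinner air and are more likely to decay before interacting.

```python
import math

# Assumed inputs: typical textbook values, NOT given in this chapter.
GAMMA = 1.7                                           # integral spectral index
LAMBDA_N, LAMBDA_PI, LAMBDA_K = 120.0, 160.0, 180.0   # attenuation lengths, g/cm^2
EPS_PI, EPS_K = 115.0, 850.0                          # critical energies, GeV
Z_N_PI, Z_N_K = 0.079, 0.0118                         # spectrum-weighted moments
M_MU, M_PI, M_K = 0.105658, 0.139570, 0.493677        # charged masses, GeV
BR_K_MUNU = 0.635                                     # K -> mu nu branching ratio


def a_and_b(z_n_meson, r, lambda_meson):
    """A and B coefficients of eqs. 2.6 and 2.7 for one parent meson."""
    a = z_n_meson * (1 - r) ** GAMMA / (GAMMA + 1)
    b = ((GAMMA + 2) / (GAMMA + 1) / (1 - r)
         * (lambda_meson - LAMBDA_N)
         / (lambda_meson * math.log(lambda_meson / LAMBDA_N)))
    return a, b


def gaisser_bracket(e_nu_gev, cos_theta_star):
    """Bracketed term of eq. 2.5, i.e. dN/dE_nu divided by the prefactor C."""
    a_pi, b_pi = a_and_b(Z_N_PI, (M_MU / M_PI) ** 2, LAMBDA_PI)
    a_k, b_k = a_and_b(Z_N_K, (M_MU / M_K) ** 2, LAMBDA_K)
    x = cos_theta_star * e_nu_gev
    return (a_pi / (1 + b_pi * x / EPS_PI)
            + BR_K_MUNU * a_k / (1 + b_k * x / EPS_K))


for cos_ts in (1.0, 0.5, 0.1):
    print(cos_ts, gaisser_bracket(1000.0, cos_ts))
```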

The cosine of the production angle $\theta^{*}$ is roughly equal to that of the zenith angle for $\cos\theta \geq 0.5$. For more nearly horizontal directions, we use a parametrization of the relation that averages over muon production height [18],

$$\cos\theta^{*} = \sqrt{\frac{\cos^2\theta + p_1^2 + p_2(\cos\theta)^{p_3} + p_4(\cos\theta)^{p_5}}{1 + p_1^2 + p_2 + p_4}} \tag{2.8}$$

where the fit constants for our specific detector depth are given in table 2.1.

Table 2.1: Fit parameters for the $\cos\theta^{*}$ correction (eq. 2.8), from [18].

| Parameter | Value |
|---|---|
| $p_1$ | 0.102573 |
| $p_2$ | −0.068287 |
| $p_3$ | 0.958633 |
| $p_4$ | 0.0407253 |
| $p_5$ | 0.817285 |
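Eq. 2.8 with the constants of table 2.1 can be evaluated directly, as in the Python sketch below (the function name `cos_theta_star` is hypothetical). One assumption is ours and is flagged in the code: for up-going directions we apply the formula to $|\cos\theta|$, since $(\cos\theta)^{p_3}$ is not real for negative arguments and the relevant inclination at the far end of a chord through the Earth has the same magnitude. The sketch reproduces the behavior described above: $\cos\theta^{*} = 1$ for vertical events, $\cos\theta^{*} \approx \cos\theta$ near $\cos\theta = 0.5$, and a nonzero value of roughly 0.1 for exactly horizontal events, reflecting the curvature of the atmosphere.

```python
# Fit constants from table 2.1.
P1, P2, P3, P4, P5 = 0.102573, -0.068287, 0.958633, 0.0407253, 0.817285


def cos_theta_star(cos_theta):
    """Effective cosine of the zenith angle at the production height, eq. 2.8."""
    # Assumption: up-going directions are handled through |cos(theta)|.
    c = abs(cos_theta)
    numerator = c**2 + P1**2 + P2 * c**P3 + P4 * c**P5
    denominator = 1 + P1**2 + P2 + P4
    return (numerator / denominator) ** 0.5


for ct in (1.0, 0.5, 0.2, 0.0):
    print(ct, round(cos_theta_star(ct), 4))
```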

While significant uncertainties exist in some of the hadronic physics (especially production of $K^{\pm}$ and heavier mesons), eq. 2.5 is a useful parametrization of the flux in the energy range where neutrinos from muon decay can be neglected ($E_\nu$ of at least a few GeV, and higher for horizontal events).

[Figure: two panels comparing Barr et al. and Honda et al.; left, $\log_{10}(d\Phi/dE$ / GeV$^{-1}$ cm$^{-2}$ s$^{-1}$ sr$^{-1})$ vs. $\log_{10}(E_\nu$ / GeV$)$; right, the same quantity vs. $\cos\theta$.]

Figure 2.4: Predicted atmospheric neutrino flux as a function of energy and zenith angle, extended to high energies with the Gaisser parametrization. The flux vs. energy (left) is averaged over all angles, while the flux vs. zenith angle (right) is at 1 TeV.

We can also use eq. 2.5 to extend the detailed calculations of Barr et al. [16] and Honda et al.

[17] to energies of 1 TeV and above, by fitting the parameters in an overlapping energy region (below

700 GeV). We show the extended fluxes for each of these models in fig. 2.4 as a function of energy

and zenith angle.

2.3 Neutrino Oscillations

If neutrinos are massive, their mass eigenstates do not necessarily correspond to their flavor eigenstates. As we will show, this implies that neutrinos can change flavor as they propagate, and a $\nu_\mu$ produced in the atmosphere may appear as some other flavor by the time it reaches our detector.

In general, there exists a unitary transformation $U$ from the mass basis to the flavor basis. For oscillation between just two flavors, say $\nu_\mu$ and $\nu_\tau$, the transformation can be represented as a rotation matrix with one free parameter, the mixing angle $\theta_{\rm atm}$:

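$$\begin{pmatrix} \nu_\mu \\ \nu_\tau \end{pmatrix} = \begin{pmatrix} \cos\theta_{\rm atm} & \sin\theta_{\rm atm} \\ -\sin\theta_{\rm atm} & \cos\theta_{\rm atm} \end{pmatrix} \begin{pmatrix} \nu_1 \\ \nu_2 \end{pmatrix} . \tag{2.9}$$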

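For free particles propagating in a vacuum, the neutrino mass (energy) eigenstates evolve according to the equation

$$\begin{pmatrix} \nu_1(t) \\ \nu_2(t) \end{pmatrix} = \begin{pmatrix} e^{-iE_1 t} & 0 \\ 0 & e^{-iE_2 t} \end{pmatrix} \begin{pmatrix} \nu_1(t=0) \\ \nu_2(t=0) \end{pmatrix} . \tag{2.10}$$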

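Combining equations 2.9 and 2.10, and using the approximation that the mass of the neutrino is small compared to its momentum (so that $E_i \approx p + m_i^2/2p$), we find

$$\begin{pmatrix} \nu_\mu(t) \\ \nu_\tau(t) \end{pmatrix} = U_f(t) \begin{pmatrix} \nu_\mu(t=0) \\ \nu_\tau(t=0) \end{pmatrix} , \tag{2.11}$$

where the time-evolution matrix $U_f(t)$ in the flavor basis is given (dropping the common phase $e^{-ipt}$) by

$$U_f(t) = \begin{pmatrix} \cos^2\theta_{\rm atm}\, e^{-i m_1^2 t/2p} + \sin^2\theta_{\rm atm}\, e^{-i m_2^2 t/2p} & \cos\theta_{\rm atm}\sin\theta_{\rm atm}\left(e^{-i m_2^2 t/2p} - e^{-i m_1^2 t/2p}\right) \\ \cos\theta_{\rm atm}\sin\theta_{\rm atm}\left(e^{-i m_2^2 t/2p} - e^{-i m_1^2 t/2p}\right) & \sin^2\theta_{\rm atm}\, e^{-i m_1^2 t/2p} + \cos^2\theta_{\rm atm}\, e^{-i m_2^2 t/2p} \end{pmatrix} . \tag{2.12}$$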
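Eq. 2.12 is the product $U\,D(t)\,U^{T}$ built from eqs. 2.9 and 2.10. The Python sketch below constructs that product numerically for one illustrative set of inputs (the values of $\theta$, $\Delta m^2$, $p$, and $t$ are arbitrary choices in natural units, and the helper names `matmul2` and `flavor_evolution` are hypothetical). It then checks that the squared modulus of the $\nu_\mu \to \nu_\mu$ element matches the closed form $1 - \sin^2 2\theta\,\sin^2(\Delta m^2 t/4p)$, and that the matrix is unitary and symmetric.

```python
import cmath
import math


def matmul2(a, b):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def flavor_evolution(theta, m1_sq, m2_sq, t, p):
    """U_f(t) = U D(t) U^T in natural units, common phase exp(-ipt) dropped."""
    c, s = math.cos(theta), math.sin(theta)
    u = [[c, s], [-s, c]]        # eq. 2.9: flavor = U * mass
    u_inv = [[c, -s], [s, c]]    # U is a real rotation, so U^-1 = U^T
    d = [[cmath.exp(-1j * m1_sq * t / (2 * p)), 0],
         [0, cmath.exp(-1j * m2_sq * t / (2 * p))]]   # phases from eq. 2.10
    return matmul2(matmul2(u, d), u_inv)


theta, dm2, p, t = 0.6, 2.2e-21, 100.0, 6.459e22   # rad, GeV^2, GeV, GeV^-1
uf = flavor_evolution(theta, 0.0, dm2, t, p)
survival = abs(uf[0][0]) ** 2
closed_form = 1 - math.sin(2 * theta) ** 2 * math.sin(dm2 * t / (4 * p)) ** 2
print(survival, closed_form)
```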

By taking the squared modulus of the appropriate matrix element above, this evolution equation can easily be used to obtain the probability that a muon neutrino will oscillate into a tau neutrino. Conventionally, the propagation time is replaced by a propagation length $L$, and the momentum is approximated by the neutrino energy $E$, resulting in the following expression for the survival (non-oscillation) probability:

$$P_{\nu_\mu \to \nu_\mu} = 1 - \sin^2 2\theta_{\rm atm}\, \sin^2\!\left(\frac{\Delta m^2_{\rm atm}\, L}{4E}\right) , \tag{2.13}$$

where $\Delta m^2_{\rm atm}$ is the squared mass difference and $L$ is expressed in inverse energy units¹ (we continue this convention unless noted otherwise).

¹ $L\,(\mathrm{GeV}^{-1}) = L\,(\mathrm{m})/(\hbar c) = L\,(\mathrm{m}) \cdot 5.07 \times 10^{15}\ \mathrm{m}^{-1}\,\mathrm{GeV}^{-1}$.
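The unit conversion in the footnote and eq. 2.13 combine into a short calculation, sketched in Python below. The function name `numu_survival` is hypothetical; the default $\Delta m^2_{\rm atm} = 2.2 \times 10^{-3}$ eV² and maximal mixing are the best-fit values quoted later in this section, and the Earth-diameter baseline uses $R_{\rm Earth} = 6370$ km. The sketch locates the last oscillation minimum for an Earth-diameter baseline (where the phase equals $\pi/2$, at roughly 20 GeV) and shows the survival probability climbing toward unity at higher energies.

```python
import math

HBARC_INV = 5.07e15      # m^-1 GeV^-1, conversion factor from the footnote
EV2_TO_GEV2 = 1e-18      # 1 eV^2 = 1e-18 GeV^2


def numu_survival(energy_gev, baseline_m, dm2_ev2=2.2e-3, sin2_2theta=1.0):
    """Two-flavor nu_mu survival probability, eq. 2.13."""
    baseline_inv_gev = baseline_m * HBARC_INV          # L in GeV^-1
    phase = dm2_ev2 * EV2_TO_GEV2 * baseline_inv_gev / (4.0 * energy_gev)
    return 1.0 - sin2_2theta * math.sin(phase) ** 2


earth_diameter_m = 2 * 6370e3
# Last oscillation minimum: the phase dm2 * L / (4E) equals pi/2.
e_last_min = 2.2e-3 * EV2_TO_GEV2 * earth_diameter_m * HBARC_INV / (2 * math.pi)
for e in (e_last_min, 50.0, 100.0, 1000.0):
    print(f"E = {e:7.1f} GeV  P = {numu_survival(e, earth_diameter_m):.3f}")
```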

In practice, for atmospheric neutrinos the zenith angle of the neutrino relative to the detector serves as a proxy for the baseline $L$. Specifically, the baseline for a given zenith angle $\theta$ is given by

$$L = \sqrt{R_{\rm Earth}^2 \cos^2\theta + h_{\rm atm}\,(2R_{\rm Earth} + h_{\rm atm})} - R_{\rm Earth}\cos\theta \tag{2.14}$$

if the neutrino is produced at a height $h_{\rm atm}$ in the atmosphere, and where $\theta = 0$ corresponds to a vertically down-going neutrino. We assume that the Earth is spherical and set the radius $R_{\rm Earth} = 6370$ km, noting that the difference between the polar and equatorial radii is only about 0.3%. We use an average neutrino production height in the atmosphere of $\langle h_{\rm atm} \rangle = 20$ km [19]. We note that any correction for detector depth is smaller than the error from either of these approximations.
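Eq. 2.14 with the stated assumptions (spherical Earth of radius 6370 km, mean production height 20 km, detector depth neglected) can be sketched in Python as follows; the function name `baseline_km` is hypothetical. The limiting cases are a useful check: a vertically down-going neutrino travels only $h_{\rm atm}$, a vertically up-going one travels $2R_{\rm Earth} + h_{\rm atm}$, and a horizontal one travels $\sqrt{h_{\rm atm}(2R_{\rm Earth} + h_{\rm atm})}$, about 500 km.

```python
import math

R_EARTH_KM = 6370.0   # spherical Earth, as assumed in the text
H_ATM_KM = 20.0       # mean production height <h_atm>


def baseline_km(cos_zenith, r_earth=R_EARTH_KM, h_atm=H_ATM_KM):
    """Neutrino path length from production point to detector, eq. 2.14.

    cos_zenith = 1 is vertically down-going, -1 vertically up-going.
    Detector depth is neglected, as in the text.
    """
    return (math.sqrt(r_earth**2 * cos_zenith**2 + h_atm * (2 * r_earth + h_atm))
            - r_earth * cos_zenith)


for cz in (1.0, 0.0, -0.5, -1.0):
    print(f"cos(zenith) = {cz:+.1f}: L = {baseline_km(cz):8.1f} km")
```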

A description of oscillation among all three flavors can be obtained as above, except that the transformation matrix $U$ is a $3 \times 3$ matrix with four free parameters: three mixing angles $\theta_{12}$, $\theta_{13}$, and $\theta_{23}$, and a phase $\delta_{13}$ [20]. Fortunately, because the $\theta_{13}$ mixing angle is small and the “solar” mass splitting $\Delta m^2_{12}$ is much smaller than $\Delta m^2_{\rm atm}$, it suffices to consider a two-neutrino system in the atmospheric case.

Observations of atmospheric neutrinos by Super-Kamiokande [21], Soudan 2 [22], MACRO

[23], and other experiments have provided strong evidence for mass-induced atmospheric neutrino

oscillations. Observations of solar neutrinos by the Sudbury Neutrino Observatory (SNO) have also

shown that the neutrinos truly change flavor, rather than decay or disappear in some other way [24].

A global fit to oscillation data from Super-Kamiokande and K2K [25] results in best-fit atmospheric parameters of $\Delta m^2_{\rm atm} = 2.2 \times 10^{-3}$ eV$^2$ and $\sin^2 2\theta_{\rm atm} = 1$ [26]. Thus, from eq. 2.13, for Earth-diameter baselines the last oscillation minimum falls near 20 GeV, and above about 50 GeV the survival probability rises steadily toward unity, so conventional atmospheric neutrino oscillations fade away at higher energies. However, a number of phenomenological models of physics beyond the Standard Model predict flavor-changing effects at higher energies that can alter the zenith angle and energy spectrum of atmospheric muon neutrinos. We consider two of these in the next chapter: violation of Lorentz invariance and quantum decoherence.


Chapter 3

Quantum Gravity Phenomenology

Experimental searches for possible low-energy signatures of quantum gravity (QG) can provide a

valuable connection to a Planck-scale theory. Hints from loop quantum gravity [27], noncommutative

geometry [28], and string theory [29] that Lorentz invariance may be violated or spontaneously broken

have encouraged phenomenological developments and experimental searches for such effects [30, 31].

Space-time may also exhibit a “foamy” nature at the smallest length scales, inducing decoherence of

pure quantum states to mixed states during propagation through this chaotic background [32].

As we will discuss, the neutrino sector is a promising place to search for such phenomena.

Water-based or ice-based Čerenkov neutrino detectors such as BAIKAL [33], AMANDA-II [34],

ANTARES [35], and IceCube [36] have the potential to accumulate large samples of high-energy

atmospheric muon neutrinos. Analysis of these data could reveal unexpected signatures that arise

from QG phenomena such as violation of Lorentz invariance or quantum decoherence.

3.1 Violation of Lorentz Invariance

Many models of quantum gravity suggest that Lorentz symmetry may not be exact [31]. Even

if a QG theory is Lorentz symmetric, the symmetry may still be spontaneously broken in our Universe.

Atmospheric neutrinos, with energies above 100 GeV and masses less than 1 eV, have Lorentz gamma factors exceeding $10^{11}$ and provide a sensitive test of Lorentz symmetry.

Neutrino oscillations in particular are a sensitive testbed for such effects. Oscillations act as a

“quantum interferometer” by magnifying small differences in energy into large flavor changes as the

neutrinos propagate. In conventional oscillations, this energy shift results from the small differences


in mass between the eigenstates, but specific types of violation of Lorentz invariance (VLI) can also

result in energy shifts that can generate neutrino oscillations with different energy dependencies.

The Standard Model Extension (SME) provides an effective field-theoretic approach to VLI

[37]. The “minimal” SME adds all coordinate-independent renormalizable Lorentz- and CPT-violating

terms to the Standard Model Lagrangian. Even when restricted to first-order effects in the neutrino

sector, the SME results in numerous potentially observable effects [38, 39, 40]. To specify one particular model which leads to alternative oscillations at high energy, we consider only the Lorentz-violating Lagrangian term

$$\frac{1}{2}\, i\, (c_L)_{\mu\nu ab}\, \bar{L}_a \gamma^{\mu} \overleftrightarrow{D}^{\nu} L_b \tag{3.1}$$

with the VLI parametrized by the dimensionless coefficient $c_L$ [39]. $L_a$ and $L_b$ are left-handed lepton doublets with indices running over the generations $e$, $\mu$, and $\tau$, and $D_\nu$ is the covariant derivative, with $A \overleftrightarrow{D}_\nu B \equiv A D_\nu B - (D_\nu A) B$.

We restrict ourselves to rotationally invariant scenarios with only nonzero time components in $c_L$, and we consider only a two-flavor system. The eigenstates of the resulting $2 \times 2$ matrix $c_L^{TT}$ correspond to differing maximal attainable velocity (MAV) eigenstates. That is, eigenstates may have limiting velocities other than the speed of light and may be distinct from either the flavor or mass eigenstates. Any difference $\Delta c$ in the eigenvalues will result in neutrino oscillations. The above construction is equivalent to a modified dispersion relationship of the form

$$E^2 = p^2 c_a^2 + m^2 c_a^4 \tag{3.2}$$

where $c_a$ is the MAV for a particular eigenstate, and in general $c_a \neq c$ [41, 42]. Given that the mass is negligible, $E \approx p\,c_a$, so two MAV eigenstates with the same momentum differ in energy by $\Delta E \approx (\Delta c/c)\,E$, where $\Delta c/c = (c_{a1} - c_{a2})/c$ is the VLI parameter and $c$ is the canonical speed of light.

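The effective Hamiltonian $H_{\pm}$ representing the energy shifts from both mass-induced and VLI oscillations can be written [43]

$$H_{\pm} = \frac{\Delta m^2}{4E}\, U_\theta \begin{pmatrix} -1 & 0 \\ 0 & 1 \end{pmatrix} U_\theta^{\dagger} + \frac{\Delta c}{c}\,\frac{E}{2}\, U_\xi \begin{pmatrix} -1 & 0 \\ 0 & 1 \end{pmatrix} U_\xi^{\dagger} \tag{3.3}$$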

with two mixing angles θ (the standard atmospheric mixing angle) and ξ (a new VLI mixing angle).

The associated 2 × 2 mixing matrices are given by

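$$U_\theta = \begin{pmatrix} \cos\theta & \sin\theta \\ -\sin\theta & \cos\theta \end{pmatrix} \tag{3.4}$$

and

$$U_\xi = \begin{pmatrix} \cos\xi & \sin\xi\, e^{\pm i\eta} \\ -\sin\xi\, e^{\mp i\eta} & \cos\xi \end{pmatrix} \tag{3.5}$$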

with $\eta$ representing their relative phase. Solving the Liouville equation for the time evolution of the state density matrix $\rho$,

$$\dot{\rho} = -i\,[H_\pm, \rho] \tag{3.6}$$

results in the $\nu_\mu$ survival probability. This probability $P_{\nu_\mu \to \nu_\mu}$ is given by

$$P_{\nu_\mu \to \nu_\mu} = 1 - \sin^2 2\Theta\, \sin^2\!\left(\frac{\Delta m^2 L}{4E}\,\mathcal{R}\right), \tag{3.7}$$

where the combined effective mixing angle Θ can be written

$$\sin^2 2\Theta = \frac{1}{\mathcal{R}^2}\left(\sin^2 2\theta + R^2 \sin^2 2\xi + 2R \sin 2\theta \sin 2\xi \cos\eta\right), \tag{3.8}$$

the correction $\mathcal{R}$ to the oscillation wavelength is given by

$$\mathcal{R} = \sqrt{1 + R^2 + 2R\,(\cos 2\theta \cos 2\xi + \sin 2\theta \sin 2\xi \cos\eta)}, \tag{3.9}$$

and the ratio R between the VLI oscillation wavelength and mass-induced wavelength is

[Figure 3.1 plot: $P(\nu_\mu \to \nu_\mu)$ from 0 to 1 versus $\log_{10}(E_\nu/\mathrm{GeV})$ from 1 to 5.]

Figure 3.1: $\nu_\mu$ survival probability as a function of neutrino energy for maximal baselines ($L \approx 2R_\mathrm{Earth}$) given conventional oscillations (solid line), VLI (dotted line, with $n = 1$, $\sin 2\xi = 1$, and $\Delta\delta = 10^{-26}$), and QD effects (dashed line, with $n = 2$ and $D^* = 10^{-30}\ \mathrm{GeV}^{-1}$).

$$R = \frac{\Delta c}{c}\,\frac{E}{2}\,\frac{4E}{\Delta m^2} \tag{3.10}$$

for a muon neutrino of energy $E$ traveling over a baseline $L$. For simplicity, the phase $\eta$ is often set to 0 or $\pi/2$. For illustration, if we take both conventional and VLI mixing to be maximal ($\xi = \theta = \pi/4$) and set $\eta = 0$, so that $\mathcal{R} = 1 + R$, this reduces to

$$P_{\nu_\mu \to \nu_\mu}(\text{maximal}) = 1 - \sin^2\!\left(\frac{\Delta m^2 L}{4E} + \frac{\Delta c}{c}\,\frac{LE}{2}\right). \tag{3.11}$$

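Note the different energy dependence of the two effects: the mass-induced phase falls as $1/E$, while the VLI phase grows linearly with $E$, so VLI would appear as an additional $\nu_\mu$ deficit at high energy. The survival probability for maximal baselines as a function of neutrino energy is shown in fig. 3.1.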
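To make eqs. 3.7-3.11 concrete, the following Python sketch evaluates the two-flavor survival probability numerically and checks that the general expression collapses to eq. 3.11 for maximal mixing with $\eta = 0$. The baseline of 12742 km (about $2R_\mathrm{Earth}$), $\Delta m^2 = 2.4 \times 10^{-3}\ \mathrm{eV}^2$, and $\Delta c/c = 10^{-26}$ are illustrative assumptions rather than fitted values, and the function names are hypothetical. Conversion to natural units uses $\hbar c \approx 1.97327 \times 10^{-16}$ GeV·m.

```python
import math

# hbar*c = 1.97327e-16 GeV*m, so 1 km corresponds to 1e3 / 1.97327e-16 GeV^-1
KM_TO_INV_GEV = 1.0e3 / 1.97327e-16
EV2_TO_GEV2 = 1.0e-18


def vli_survival(E, L_km, dm2_ev2, theta, dc_over_c, xi, eta=0.0):
    """nu_mu survival probability with mass-induced plus VLI oscillations (eqs. 3.7-3.10).

    E in GeV, L_km in km, dm2_ev2 in eV^2; everything is converted to natural units.
    """
    L = L_km * KM_TO_INV_GEV
    dm2 = dm2_ev2 * EV2_TO_GEV2
    R = dc_over_c * (E / 2.0) * (4.0 * E / dm2)                          # eq. 3.10
    s2t, c2t = math.sin(2 * theta), math.cos(2 * theta)
    s2x, c2x = math.sin(2 * xi), math.cos(2 * xi)
    calR = math.sqrt(1 + R**2 + 2 * R * (c2t * c2x + s2t * s2x * math.cos(eta)))  # eq. 3.9
    sin2_2Theta = (s2t**2 + R**2 * s2x**2
                   + 2 * R * s2t * s2x * math.cos(eta)) / calR**2         # eq. 3.8
    return 1.0 - sin2_2Theta * math.sin(dm2 * L * calR / (4.0 * E)) ** 2  # eq. 3.7


def vli_survival_maximal(E, L_km, dm2_ev2, dc_over_c):
    """Closed form for theta = xi = pi/4 and eta = 0 (eq. 3.11)."""
    L = L_km * KM_TO_INV_GEV
    dm2 = dm2_ev2 * EV2_TO_GEV2
    return 1.0 - math.sin(dm2 * L / (4.0 * E) + dc_over_c * L * E / 2.0) ** 2


# Illustrative (assumed) inputs: vertical baseline, dm2 = 2.4e-3 eV^2, dc/c = 1e-26
for E in (100.0, 1000.0, 5000.0):
    general = vli_survival(E, 12742.0, 2.4e-3, math.pi / 4, 1e-26, math.pi / 4)
    maximal = vli_survival_maximal(E, 12742.0, 2.4e-3, 1e-26)
    print(f"E = {E:6.0f} GeV: P = {general:.6f} (eq. 3.7), {maximal:.6f} (eq. 3.11)")
```

Setting `dc_over_c = 0` recovers the familiar $1 - \sin^2 2\theta \sin^2(1.27\,\Delta m^2 L/E)$ form in the usual units of eV², km, and GeV.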

Several neutrino experiments have set upper limits on this manifestation of VLI, including MACRO [44], Super-Kamiokande [45], and a combined analysis of K2K and Super-Kamiokande data [43] ($\Delta c/c < 2.0 \times 10^{-27}$ at the 90% CL for maximal mixing). In previous work, AMANDA-II set a preliminary upper limit of $5.3 \times 10^{-27}$ using four years of data [46]. Other neutrino telescopes, such as ANTARES, are also expected to be sensitive to such effects (see e.g. [47]).

Given the specificity of this particular model of VLI, we wish to generalize the oscillation probability in eq. 3.7. We follow the approach in [47], which is to modify the VLI oscillation length $L \propto E^{-1}$ to other integral powers of the neutrino energy $E$. That is,

$$\frac{\Delta c}{c}\,\frac{LE}{2} \;\to\; \Delta\delta\,\frac{LE^n}{2}, \tag{3.12}$$

where $n \in [1, 3]$, and the generalized VLI term $\Delta\delta$ is in units of $\mathrm{GeV}^{-n+1}$. An $L \propto E^{-2}$ energy dependence ($n = 2$) has been proposed in the context of loop quantum gravity [48] and in the case of non-renormalizable VLI effects caused by the space-time foam [49]. Both the $L \propto E^{-1}$ ($n = 1$) and the $L \propto E^{-3}$ ($n = 3$) cases have been examined in the context of violations of the equivalence principle (VEP) [50, 51, 52]. We note that in general, Lorentz violation implies violation of the equivalence principle, so searches for either effect are related [31].
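Because the VLI phase in eq. 3.12 scales as $E^n$, the energy at which the extra $\nu_\mu$ deficit first becomes maximal (phase $= \pi/2$, with maximal mixing) is $E = (\pi/(\Delta\delta\, L))^{1/n}$. The Python sketch below computes this for a vertically up-going baseline, ignoring the mass-induced term. The $n = 1$ value $\Delta\delta = 10^{-26}$ matches fig. 3.1; the $n = 2$ and $n = 3$ values are hypothetical placeholders, and the function names are illustrative.

```python
import math

HBARC_GEV_M = 1.97327e-16        # hbar*c in GeV*m
EARTH_DIAMETER_M = 2 * 6.371e6    # L ~ 2 R_Earth for vertically up-going events


def vli_phase(delta, L_m, E, n):
    """Generalized VLI phase delta * L * E**n / 2 from eq. 3.12 (natural units, E in GeV)."""
    return delta * (L_m / HBARC_GEV_M) * E**n / 2.0


def first_maximum_energy(delta, L_m, n):
    """Energy (GeV) at which the VLI phase reaches pi/2, the first maximal nu_mu deficit."""
    return (math.pi / (delta * (L_m / HBARC_GEV_M))) ** (1.0 / n)


# n = 1 uses the fig. 3.1 value; the n = 2 and n = 3 deltas are hypothetical placeholders
for n, delta in [(1, 1e-26), (2, 1e-30), (3, 1e-34)]:
    E = first_maximum_energy(delta, EARTH_DIAMETER_M, n)
    print(f"n = {n}: first maximum at E ~ {E:.3g} GeV; "
          f"doubling E multiplies the phase by {2**n}")
```

The steeper the energy dependence, the more abruptly the deficit turns on once the phase becomes of order one, which is why a high-energy sample is well suited to the larger values of $n$.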

We also note that there is no reason other than simplicity to formulate the VLI oscillations

as two-flavor, as any full description must incorporate all three flavors, and we know nothing of the

size of the various eigenstate splittings and mixing angles. However, a two-flavor system is probably

not a bad approximation, because in the most general case, one splitting will likely appear first as

we increase the energy. Also, since we will search only for a deficit of muon neutrinos, the flavor into which the $\nu_\mu$ oscillate does not matter (it need not be $\nu_\tau$).

3.2 Quantum Decoherence

Another possible low-energy signature of QG is the evolution of pure states to mixed states via

interaction with the environment of space-time itself, or quantum decoherence. One heuristic picture

of this phenomenon is the production of virtual black hole pairs in a “foamy” spacetime, created

from the vacuum at scales near the Planck length [53]. Interactions with the virtual black holes may

not preserve certain quantum numbers like neutrino flavor, causing decoherence into a superposition

of flavors.

Quantum decoherence can be treated phenomenologically by describing the neutrino as an open quantum system that evolves thermodynamically. The time evolution of the density matrix $\rho$ is modified with a dissipative term $\not{\delta}H\rho$:

$$\dot{\rho} = -i\,[H, \rho] + \not{\delta}H\rho. \tag{3.13}$$

The dissipative term representing the losses in the open system is modeled via the technique of

Lindblad quantum dynamical semigroups [54]. Here we outline the approach in ref. [55], to which we

refer the reader for more detail. In this case, we have a set of self-adjoint environmental operators $A_j$, and eq. 3.13 becomes

$$\dot{\rho} = -i\,[H, \rho] + \frac{1}{2}\sum_j \left([A_j, \rho A_j] + [A_j \rho, A_j]\right). \tag{3.14}$$

The hermiticity of the $A_j$ ensures the monotonic increase of entropy, and in general, pure states will now evolve to mixed states. The irreversibility of this process implies CPT violation [56].
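The loss of purity described above can be seen in a toy calculation. The Python sketch below integrates eq. 3.14 with a fixed-step Runge-Kutta scheme for a single two-level system, using a hypothetical Hamiltonian $H = \sigma_x/2$ and one Hermitian environmental operator $A = \sqrt{0.2}\,\sigma_z$ (arbitrary choices in arbitrary units, not the neutrino parameters of this analysis). It tracks the purity $\mathrm{Tr}\,\rho^2$, which starts at 1 for a pure state and approaches 1/2 for a maximally mixed two-level state.

```python
import numpy as np

# Pauli matrices for a toy two-level system (assumed, for illustration only)
SX = np.array([[0, 1], [1, 0]], dtype=complex)
SZ = np.array([[1, 0], [0, -1]], dtype=complex)


def lindblad_rhs(rho, H, ops):
    """Right-hand side of eq. 3.14: -i[H, rho] + 1/2 sum_j ([A_j, rho A_j] + [A_j rho, A_j])."""
    drho = -1j * (H @ rho - rho @ H)
    for A in ops:
        comm1 = A @ rho @ A - rho @ A @ A      # [A, rho A]
        comm2 = A @ rho @ A - A @ A @ rho      # [A rho, A]
        drho = drho + 0.5 * (comm1 + comm2)
    return drho


def evolve(rho, H, ops, t_max, steps):
    """Fourth-order Runge-Kutta integration; returns final rho and purity history."""
    dt = t_max / steps
    purity = [np.trace(rho @ rho).real]
    for _ in range(steps):
        k1 = lindblad_rhs(rho, H, ops)
        k2 = lindblad_rhs(rho + 0.5 * dt * k1, H, ops)
        k3 = lindblad_rhs(rho + 0.5 * dt * k2, H, ops)
        k4 = lindblad_rhs(rho + dt * k3, H, ops)
        rho = rho + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
        purity.append(np.trace(rho @ rho).real)
    return rho, purity


H = 0.5 * SX                                   # hypothetical oscillation Hamiltonian
ops = [np.sqrt(0.2) * SZ]                      # one Hermitian environmental operator
rho0 = np.array([[1, 0], [0, 0]], dtype=complex)   # pure initial state
rho_final, purity = evolve(rho0, H, ops, t_max=40.0, steps=4000)
print(f"purity: {purity[0]:.3f} -> {purity[-1]:.3f}")
```

Because the environmental operator is Hermitian, the purity in this toy model never increases, mirroring the entropy argument in the text.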

To obtain specific predictions for the neutrino sector, there are again several approaches for

both two-flavor systems [57, 58] and three-flavor systems [55, 59]. Again, we follow the approach

in [55] for a three-flavor neutrino system including both decoherence and mass-induced oscillations.

The dissipative term in eq. 3.14 is expanded in the Gell-Mann basis $F_\mu$, $\mu \in [0, 8]$, such that

$$\frac{1}{2}\sum_j \left([A_j, \rho A_j] + [A_j \rho, A_j]\right) = \sum_{\mu,\nu} L_{\mu\nu}\, \rho_\mu F_\nu. \tag{3.15}$$

At this stage we must choose a form for the decoherence matrix $L_{\mu\nu}$, and we select the weak-coupling limit in which $L$ is diagonal, with $L_{00} = 0$ and $L_{ii} = -D_i$. The $D_i$ have units of inverse length (energy, in natural units), so $1/D_i$ sets the characteristic length scale over which decoherence effects occur. Solving this system for atmospheric neutrinos (where we neglect mass-induced oscillations other than $\nu_\mu \to \nu_\tau$) results in the $\nu_\mu$ survival probability [55]:

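$$\begin{aligned} P_{\nu_\mu \to \nu_\mu} = \frac{1}{3} + \frac{1}{2}\Bigg[ & \frac{1}{4}\, e^{-L D_3} (1 + \cos 2\theta)^2 + \frac{1}{12}\, e^{-L D_8} (1 - 3\cos 2\theta)^2 \\ & + e^{-\frac{L}{2}(D_6 + D_7)} \sin^2 2\theta \left\{ \cos\!\left(\frac{L}{2}\sqrt{\left(\frac{\Delta m^2}{E}\right)^2 - (D_6 - D_7)^2}\right) \right. \\ & \left. + \sin\!\left(\frac{L}{2}\sqrt{\left(\frac{\Delta m^2}{E}\right)^2 - (D_6 - D_7)^2}\right) \frac{D_6 - D_7}{\sqrt{\left(\frac{\Delta m^2}{E}\right)^2 - (D_6 - D_7)^2}} \right\} \Bigg]. \end{aligned} \tag{3.16}$$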

Note the limiting probability of $1/3$, representing full decoherence into an equal mixture of the three flavors. The $D_i$ not appearing in eq. 3.16 affect decoherence between other flavors, but not the $\nu_\mu$ survival probability.

We note that in eq. 3.16, we must impose the condition $\Delta m^2/E > |D_6 - D_7|$, but this is not an issue in the parameter space we explore in this analysis. If one wishes to ensure strong conditions such as complete positivity [57], there may be other inequalities that must be imposed (see e.g. the discussion in ref. [59]).
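Eq. 3.16 is straightforward to evaluate directly. The Python sketch below is a minimal transcription under stated assumptions: inputs are in GeV, km, and eV², and the $D_i$ are passed in already evaluated at the neutrino energy (in GeV). It enforces the condition $\Delta m^2/E > |D_6 - D_7|$, and it reduces to the standard two-flavor formula when all $D_i$ vanish. The usage example applies eq. 3.17 with $n = 2$ and $D^* = 10^{-30}\ \mathrm{GeV}^{-1}$ (the fig. 3.1 value); $\Delta m^2 = 2.4 \times 10^{-3}\ \mathrm{eV}^2$ is an assumed illustrative value and the function name is hypothetical.

```python
import math

HBARC_GEV_KM = 1.97327e-19   # hbar*c in GeV*km


def qd_survival(E, L_km, dm2_ev2, theta, D3, D6, D7, D8):
    """nu_mu survival probability with quantum decoherence, eq. 3.16 (natural units).

    E in GeV, L_km in km, dm2_ev2 in eV^2, D_i in GeV (already evaluated at energy E).
    """
    L = L_km / HBARC_GEV_KM          # baseline in GeV^-1
    dm2 = dm2_ev2 * 1.0e-18          # eV^2 -> GeV^2
    c2t = math.cos(2 * theta)
    s2t_sq = math.sin(2 * theta) ** 2
    dD = D6 - D7
    if dm2 / E <= abs(dD):
        raise ValueError("eq. 3.16 requires dm2/E > |D6 - D7|")
    w = math.sqrt((dm2 / E) ** 2 - dD ** 2)
    osc = math.cos(L * w / 2) + math.sin(L * w / 2) * dD / w
    bracket = (0.25 * math.exp(-L * D3) * (1 + c2t) ** 2
               + math.exp(-L * D8) * (1 - 3 * c2t) ** 2 / 12.0
               + math.exp(-L * (D6 + D7) / 2) * s2t_sq * osc)
    return 1.0 / 3.0 + 0.5 * bracket


E, L_km = 1.0e4, 12742.0          # 10 TeV, vertically up-going (L ~ 2 R_Earth)
D = 1.0e-30 * E**2                # eq. 3.17 with n = 2 and all D*_i equal
print(qd_survival(E, L_km, 2.4e-3, math.pi / 4, D, D, D, D))   # close to the 1/3 limit
```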

How the decoherence terms $D_i$ depend on energy is determined by the underlying microscopic model. As with the VLI effects, we choose a generalized phenomenological approach where we suppose the $D_i$ vary as some integral power of the energy,

$$D_i = D_i^* E^n, \quad n \in [1, 3], \tag{3.17}$$

where $E$ is the neutrino energy in GeV, and the units of the $D_i^*$ are $\mathrm{GeV}^{-n+1}$. The particularly interesting $E^2$ form is suggested by decoherence calculations in non-critical string theories involving recoiling D-brane geometries [60]. We show the $n = 2$ survival probability as a function of neutrino energy for maximal baselines in fig. 3.1.

An analysis of Super-Kamiokande data in a two-flavor framework has resulted in an upper limit at the 90% CL of $D^* < 9.0 \times 10^{-28}\ \mathrm{GeV}^{-1}$ for an $E^2$ model and all $D_i^*$ equal [61]. ANTARES has reported sensitivity to various two-flavor decoherence scenarios as well, using a more general formulation [58]. Analyses of Super-Kamiokande, KamLAND, and K2K data [62, 63] have also set strong limits on decoherence effects proportional to $E^0$ and $E^{-1}$. Because our higher energy range offers no advantage for such effects, we do not expect to be able to improve upon these limits, and we focus on effects with $n \geq 1$.

Unlike the VLI system, we have used a full three-flavor approach to the phenomenology of the

QD system. There is no theoretical justification for doing so in one but not the other, but for the

special case in which all decoherence parameters are equal, the choice is important. This is because

in a three-flavor system, the limiting survival probability is 1/3, compared to 1/2 in a two-flavor

system. Since heuristically the equality of decoherence parameters suggests that the interactions

with space-time are flavor-agnostic, we feel that using a three-flavor description is more apt.


Chapter 4

Neutrino Detection

4.1 General Techniques

A major obstacle to overcome in the detection of the neutrino is its small cross section: while

the neutrino-nucleon cross section rises with energy, at 1 TeV the interaction length is still 2.5 million

kilometers of water [64]. Thus, any potential detector must encompass an enormous volume to achieve

a reasonable event rate. Once an interaction does occur in or near the detector, we can detect the

resulting charged particles by means of their radiation. A (relatively) cost-effective approach is to

use natural bodies of water or transparent ice sheets as the target material, and then instrument this

volume with photomultiplier tubes. While originally proposed in 1960 by K. Greisen and F. Reines

[65, 66], large-scale detectors of this sort have only been in operation for the past decade or so.

Water or ice neutrino detectors typically consist of vertical cables (called “strings” or “lines”)

lowered either into deep water or into holes drilled in the ice. Photomultiplier tubes (PMTs) in

pressure housings are attached to the cables, which supply power and communications. A charged-current neutrino interaction with the surrounding matter produces a charged lepton via the process

$$\nu_l\,(\bar{\nu}_l) + q \to l^-\,(l^+) + q', \tag{4.1}$$

where $q$ is a valence or sea quark in the medium, and $q'$ is the quark required by charge conservation.

In the case of a muon neutrino, the resulting muon can travel a considerable distance within the

medium.

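[Figure 4.1 diagram: a $\mu^\pm$ travels a distance $\beta c t$ while its light travels $ct/n$, forming a cone at the angle $\theta_c$.]

Figure 4.1: Formation of a Čerenkov cone by a relativistic muon moving through a medium.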

4.2 Čerenkov Radiation

Because the relativistic muon produced in the neutrino interaction is traveling faster than the speed of light in the medium, it will radiate via the Čerenkov effect. A coherent “shock wave” of light forms at a characteristic angle $\theta_c$ depending on the index of refraction $n$ of the medium, specifically,

$$\cos\theta_c = \frac{1}{n\beta}, \tag{4.2}$$

where $\beta = v/c$ is the velocity of the particle in units of the vacuum speed of light. For ice, where $n \approx 1.33$, the Čerenkov angle is about 41° for relativistic particles ($\beta \approx 1$). A full treatment differentiates between the phase and group indices of refraction, but this is a small correction (see e.g. [67]). Figure 4.1 presents a geometric derivation of the simpler form shown in eq. 4.2.
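A quick numerical check of eq. 4.2 is sketched below in Python. It returns the Čerenkov angle for a given index and velocity, or `None` below threshold, and the total energy at which a muon reaches threshold ($\beta = 1/n$). The muon mass of 0.10566 GeV is the standard value; the function names are illustrative.

```python
import math

MUON_MASS_GEV = 0.10566   # muon rest mass


def cherenkov_angle_deg(n, beta=1.0):
    """Eq. 4.2: cos(theta_c) = 1/(n*beta). Returns None below threshold (beta <= 1/n)."""
    cos_theta = 1.0 / (n * beta)
    if cos_theta >= 1.0:
        return None
    return math.degrees(math.acos(cos_theta))


def threshold_energy_gev(n, mass_gev=MUON_MASS_GEV):
    """Total energy at which beta = 1/n, i.e. the Cherenkov threshold."""
    gamma = 1.0 / math.sqrt(1.0 - 1.0 / n**2)
    return gamma * mass_gev


print(cherenkov_angle_deg(1.33))          # about 41 degrees in ice
print(cherenkov_angle_deg(1.33, 0.70))    # None: below threshold
print(threshold_energy_gev(1.33))         # about 0.16 GeV for a muon
```

The threshold is far below the energies relevant here, which is why $\beta \approx 1$ is a safe approximation for the muons in this analysis.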

The number of Čerenkov photons emitted per unit track length as a function of wavelength $\lambda$ is given by the Frank-Tamm formula [68]

$$\frac{d^2N}{dx\,d\lambda} = \frac{2\pi\alpha}{\lambda^2}\left(1 - \frac{1}{\beta^2 n^2}\right), \tag{4.3}$$

where $\alpha$ is the fine-structure constant. Because of the $1/\lambda^2$ dependence, short-wavelength (high-frequency) photons dominate the emission, up to the ultraviolet cutoff imposed by the glass of the PMT pressure vessel (about 365 nm [69]). Between this cutoff and the wavelength above which the ice is no longer transparent (about 500 nm; see section 5.2), we expect an emission of about 200 photons per centimeter [70].
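If $n$ is treated as constant, integrating eq. 4.3 over wavelength gives $dN/dx = 2\pi\alpha\,(1/\lambda_\mathrm{min} - 1/\lambda_\mathrm{max})(1 - 1/(\beta^2 n^2))$. The Python sketch below evaluates this for the 365-500 nm window, assuming $n = 1.33$ and $\beta = 1$. With these idealized inputs it gives roughly 150 photons per centimeter, the same order of magnitude as the figure quoted above; it does not attempt to reproduce the exact inputs used in [70].

```python
import math

ALPHA = 1.0 / 137.036   # fine-structure constant


def photons_per_cm(lam_min_nm, lam_max_nm, n=1.33, beta=1.0):
    """Eq. 4.3 integrated over [lam_min, lam_max] for a constant refractive index n."""
    sin2_theta_c = 1.0 - 1.0 / (beta**2 * n**2)
    inv_nm = 1.0 / lam_min_nm - 1.0 / lam_max_nm     # result of integrating 1/lambda^2
    per_nm_of_track = 2.0 * math.pi * ALPHA * inv_nm * sin2_theta_c
    return per_nm_of_track * 1.0e7                   # 1 cm = 1e7 nm of track


print(f"{photons_per_cm(365.0, 500.0):.0f} photons per cm between 365 and 500 nm")
```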

4.3 Muon Energy Loss

Čerenkov radiation from the bare muon is not its dominant mode of energy loss. The rate of energy loss as a function of distance, $dE/dx$, can be parametrized as

$$-\frac{dE}{dx} = a(E) + b(E)\,E, \tag{4.4}$$

where $a(E)$ is the ionization energy loss given by the standard Bethe-Bloch formula (see e.g. [71]), and $b(E)\,E$ is the sum of losses by $e^+e^-$ pair production, bremsstrahlung, and photonuclear interactions. The energy losses from various contributions are shown in figure 4.2.
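If $a$ and $b$ in eq. 4.4 are treated as constants, the equation integrates in closed form: $E(x) = (E_0 + a/b)\,e^{-bx} - a/b$, with mean range $(1/b)\ln(1 + bE_0/a)$. Ionization dominates below the critical energy $a/b$ and the radiative processes above it. The Python sketch below uses $a = 0.24$ GeV/m and $b = 3.3 \times 10^{-4}\ \mathrm{m}^{-1}$, which are assumed round numbers of roughly the size often quoted for ice, not the parametrization of [72]. It also ignores the stochastic fluctuations discussed next.

```python
import math

# Assumed constant loss coefficients for ice (round placeholder values, not fitted)
A_ION = 0.24      # GeV per metre: ionization term a
B_RAD = 3.3e-4    # per metre: radiative term b (pair production, bremsstrahlung, photonuclear)


def mean_energy_after(E0, x, a=A_ION, b=B_RAD):
    """Mean muon energy (GeV) after x metres, solving -dE/dx = a + b*E with constant a, b."""
    return (E0 + a / b) * math.exp(-b * x) - a / b


def mean_range(E0, a=A_ION, b=B_RAD):
    """Distance (m) at which the continuous-loss solution reaches E = 0."""
    return math.log(1.0 + b * E0 / a) / b


print(f"critical energy a/b ~ {A_ION / B_RAD:.0f} GeV")
for E0 in (100.0, 1.0e3, 1.0e4):
    print(f"E0 = {E0:g} GeV: mean range ~ {mean_range(E0):.0f} m")
```

The logarithm in the range formula is why the muon range grows only slowly once the energy exceeds $a/b$: the radiative losses then scale with the energy itself.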

The ionization energy losses are continuous in nature, occurring smoothly along the muon

track. However, at high energies, the losses by bremsstrahlung, pair production, and photonuclear

interactions are not continuous but stochastic: rare events that result in large depositions of energy

via particle and photon creation. The particles produced are highly relativistic, and if charged, they

too will radiate via the Čerenkov effect. Furthermore, because they are kinematically constrained to the approximate direction of the muon, this emission will peak at the Čerenkov angle of the muon. The roughly conical Čerenkov emission of the bare muon is thus enhanced by light from the secondary particles produced in the energy losses described above [73].

4.4 Other Event Topologies

For charged-current $\nu_e$ and $\nu_\tau$ interactions, or neutral-current interactions of any flavor, the event topology is less track-like than the muon case described above, and is instead more spherical or “cascade-like.” For $\nu_e$ events, this is because of the short path length of the resulting electron or positron within the ice. For $\nu_\tau$ events (except for those of very high energy), the resulting $\tau$ lepton will decay immediately, in most cases resulting in a hadronic shower. However, 17% of the time [20], the $\tau$ will decay via

$$\tau^+ \to \mu^+ + \nu_\mu + \bar{\nu}_\tau, \qquad \tau^- \to \mu^- + \bar{\nu}_\mu + \nu_\tau, \tag{4.5}$$

possibly resulting in a detectable muon track (albeit of significantly lower energy than the original $\nu_\tau$). For a neutral-current event, there is no outgoing charged lepton, although there may be a hadronic shower from the collision.

[Figure 4.2 plot (from [72]): energy losses in GeV/(g/cm²) versus muon energy in GeV, for ionization (upper solid curve), bremsstrahlung (dashed), photonuclear (dotted), $e^+e^-$ pair production (dash-dotted), and decay (lower solid curve) losses in ice.]

Figure 4.2: Average muon energy loss in ice as a function of energy, from [72].

Because cascade-like and track-like events have very different signatures and strategies for

background rejection, one generally focuses on one or the other early in the analysis. We consider only $\nu_\mu$-induced muons in this analysis; other types of event will be removed by the data-filtering procedures which we describe in chapter 6.

4.5 Background

While we have described the means by which we might detect a neutrino-induced muon,

the background to such a search is formidable. Even with kilometers of overburden, high-energy atmospheric muon bundles dominate over neutrino events by a factor of about $10^6$. Selecting only “up-going” muons allows us to reject the large background of atmospheric muons, using the Earth as a filter to screen out everything but neutrinos (see fig. A.2). In practice, we must also use other observables indicating the quality of the muon directional reconstruction, in order to eliminate mis-reconstructed atmospheric muon events, a topic we will revisit in chapter 6.


Chapter 5

The AMANDA-II Detector

5.1 Overview

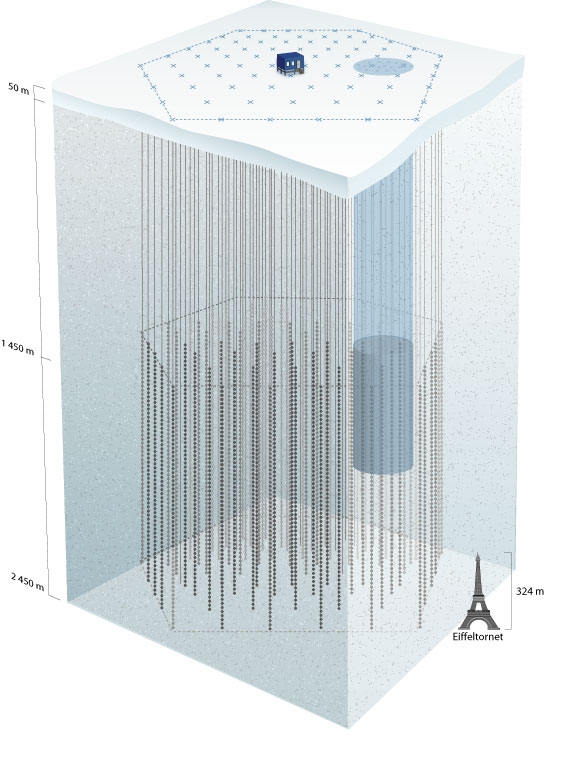

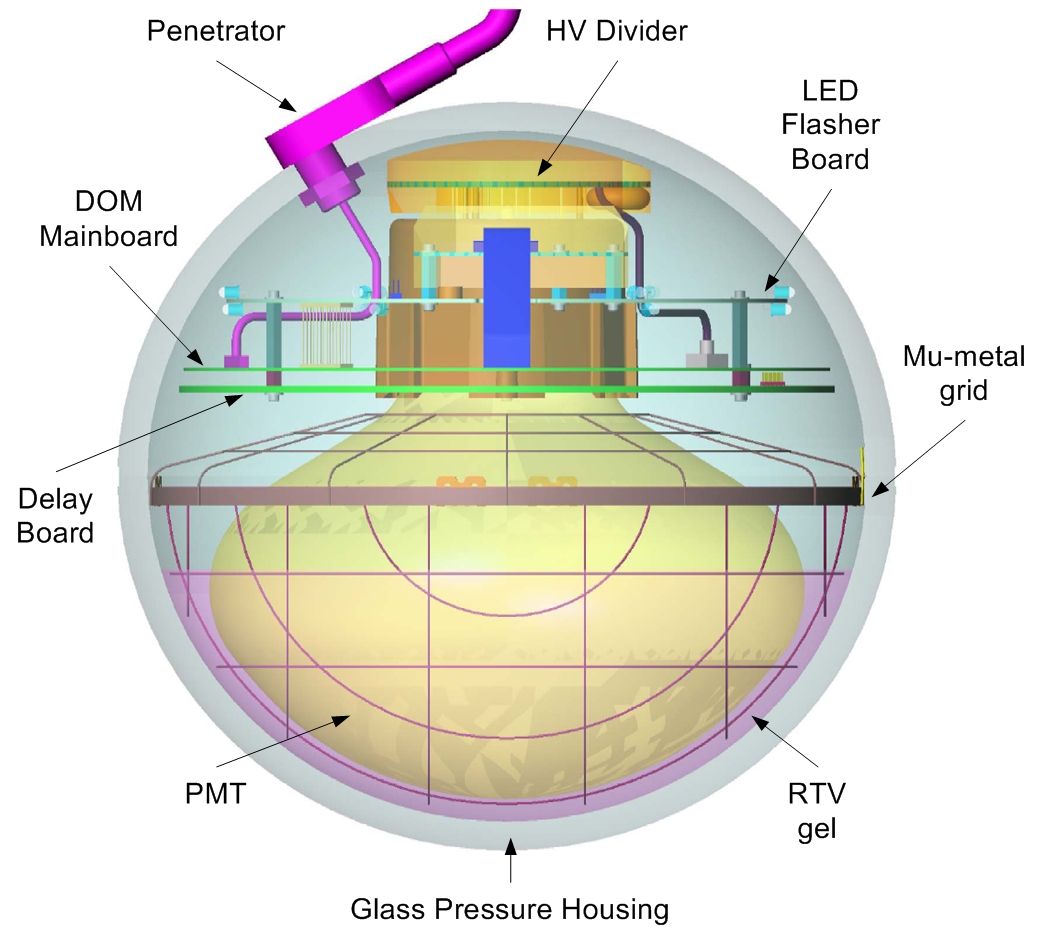

AMANDA, or the Antarctic Muon And Neutrino Detector Array, consists of 677 optical

modules (OMs) on 19 vertical cables or “strings” frozen into the deep, clear ice near the geographic

South Pole. Each OM consists of an 8” diameter Hamamatsu R5912-2 photomultiplier tube (PMT)

housed in a glass pressure sphere. The AMANDA-II phase of the detector commenced in the year

2000, after nine outer strings were added. Fig. 5.1 shows the geometry of the detector, as well as the

principal components of the OMs.

The bulk of the detector lies between 1550 and 2050 meters under the snow surface, where the

Antarctic ice sheet is extremely clear. The 19 strings are arranged roughly in three concentric cylin-

ders, the largest of which is approximately 200 meters in diameter. The OMs are connected to cables

which supply power and transmit PMT signals to the surface. Multiple cabling technologies are used:

coaxial, twisted-pair, and fiber optic. While most transmitted signals are analog, string 18 contains

prototype digital optical modules (DOMs) which digitize the PMT signal before transmission.

5.2 Optical Properties of the Ice

Far from being a homogeneous medium, the ice at the South Pole consists of roughly horizontal

layers of varying clarity. As the ice layers accumulated over geological time periods, varying amounts

of atmospheric dust were trapped during the deposition, depending on the climatological conditions

at the time. These “dust layers” strongly affect both the optical scattering and absorption lengths and must be taken into account for event reconstruction and simulation.

[Figure 5.1 diagram (reproduced from J. Ahrens et al., Nuclear Instruments and Methods in Physics Research A 524 (2004) 169–194): the AMANDA-B10 inner 10 strings and the full AMANDA-II array, with depth markers at 1000, 1150, 1500, 2000, and 2350 m and horizontal scales of 120 m and 200 m; an inset zooms in on one optical module (OM), showing the main cable, pressure housing, PMT, silicon gel, HV divider, and light diffuser ball.]

Figure 5.1: Diagram of the AMANDA-II detector with details of an optical module (from [74]). The Eiffel tower on the left illustrates the scale.

The scattering and absorption properties have been measured or inferred using a number of

in situ light sources [75], resulting in a comprehensive model of the ice properties known as the

“Millennium” ice model. Since the publication of that work, the effect of smearing between dust

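layers has been examined, resulting in an updated model of the ice known as “AHA.” The effective scattering length $\lambda_s^{\mathrm{eff}}$ in this model is defined as

$$\lambda_s^{\mathrm{eff}} = \frac{\lambda_s}{1 - \langle\cos\theta_s\rangle}, \tag{5.1}$$

where $\lambda_s$ is the geometric scattering length (the mean distance between scatters) and $\langle\cos\theta_s\rangle \approx 0.95$ is the average cosine of the scattering angle. The effective scattering length is shown along with the absorption length $\lambda_a$ in fig. 5.2. The effective scattering length is approximately 20 m, whereas the absorption length (at 400 nm) is about 110 m.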
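Eq. 5.1 can be motivated with a short calculation. If each scatter is independent and azimuthally symmetric with mean cosine $g = \langle\cos\theta_s\rangle$, the mean projection of the $k$-th step onto the initial direction is $\lambda_s g^k$, and summing the geometric series gives $\lambda_s/(1 - g)$. The Python sketch below checks this numerically and infers the geometric scattering length implied by $\lambda_s^{\mathrm{eff}} \approx 20$ m and $g \approx 0.95$ (about 1 m). The function names are illustrative.

```python
def effective_scattering_length(lambda_s, mean_cos):
    """Eq. 5.1: lambda_s_eff = lambda_s / (1 - <cos theta_s>)."""
    if not -1.0 <= mean_cos < 1.0:
        raise ValueError("<cos theta_s> must lie in [-1, 1)")
    return lambda_s / (1.0 - mean_cos)


def forward_penetration(lambda_s, mean_cos, n_scatters):
    """Mean distance travelled along the initial direction after n_scatters steps.

    Assumes steps of mean length lambda_s and independent, azimuthally symmetric
    scatters, so the k-th step has mean projection lambda_s * mean_cos**k.
    """
    return sum(lambda_s * mean_cos**k for k in range(n_scatters))


lambda_s = 20.0 * (1.0 - 0.95)          # geometric length implied by 20 m and g = 0.95
print(lambda_s)                          # about 1 m between scatters
print(effective_scattering_length(lambda_s, 0.95))
print(forward_penetration(lambda_s, 0.95, 500))   # approaches the effective length
```

In strongly forward-scattering ice, light therefore keeps its original direction for many individual scatters, which is why $\lambda_s^{\mathrm{eff}}$ rather than $\lambda_s$ is the relevant length for photon propagation.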

5.3 Data Acquisition and Triggering

Cables from the deep ice are routed to surface electronics housed in the Martin A. Pomerantz

Observatory (MAPO). PMT signals, broadened after transmission to the surface, are amplified in

Swedish amplifiers (SWAMPs). A prompt output from the SWAMPs is fed to a discriminator,

which in turn feeds the trigger logic and a time-to-digital converter (TDC). The TDC records the

leading and trailing edges where the signal crosses the discriminator level. Each edge pair forms a hit,

of which the TDC can store eight at a time. The difference between the edges is referred to as the

time-over-threshold, or TOT.

The main trigger requires 24 hit OMs within a sliding window of 2.5 µs. The hardware core

of the trigger logic is formed by the digital multiplicity adder-discriminator (DMADD). When the

trigger is satisfied, the trigger electronics open the gate to a peak-sensing analog-to-digital converter

(ADC) which is fed by a delayed signal from the SWAMPs. The ADC gate remains open for 9.8 µs,

and the peak amplitude during that window is assigned to all hits in that particular channel. A stop signal is fed to the TDC 10 µs after the trigger. The trigger is also sent to a GPS clock which

timestamps the event. Events are recorded to magnetic tape and then flown north during the austral

summer season.
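The majority trigger logic can be illustrated in software. The Python sketch below is not the DMADD hardware implementation; it is a hypothetical sliding-window check that fires when at least 24 distinct OMs have been hit within 2.5 µs. It assumes hits arrive as time-sorted `(time_ns, om_id)` pairs and that repeated hits on one OM count only once.

```python
from collections import deque


def multiplicity_trigger(hits, threshold=24, window_ns=2500.0):
    """Return the time (ns) of the first trigger, or None if the condition is never met.

    hits: iterable of (time_ns, om_id) pairs sorted by time. The trigger fires when at
    least `threshold` distinct OMs are hit within any window of length `window_ns`.
    """
    in_window = deque()   # (time, om) pairs currently inside the window
    counts = {}           # om_id -> number of its hits inside the window
    for t, om in hits:
        in_window.append((t, om))
        counts[om] = counts.get(om, 0) + 1
        # Drop hits older than window_ns relative to the newest hit
        while t - in_window[0][0] > window_ns:
            _, old_om = in_window.popleft()
            counts[old_om] -= 1
            if counts[old_om] == 0:
                del counts[old_om]
        if len(counts) >= threshold:
            return t
    return None


fast = [(100.0 * i, i) for i in range(24)]    # 24 OMs within 2.3 us
slow = [(110.0 * i, i) for i in range(24)]    # the same OMs spread over 2.53 us
print(multiplicity_trigger(fast), multiplicity_trigger(slow))
```

Counting distinct OMs with a dictionary keeps the check linear in the number of hits, since each hit enters and leaves the window exactly once.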


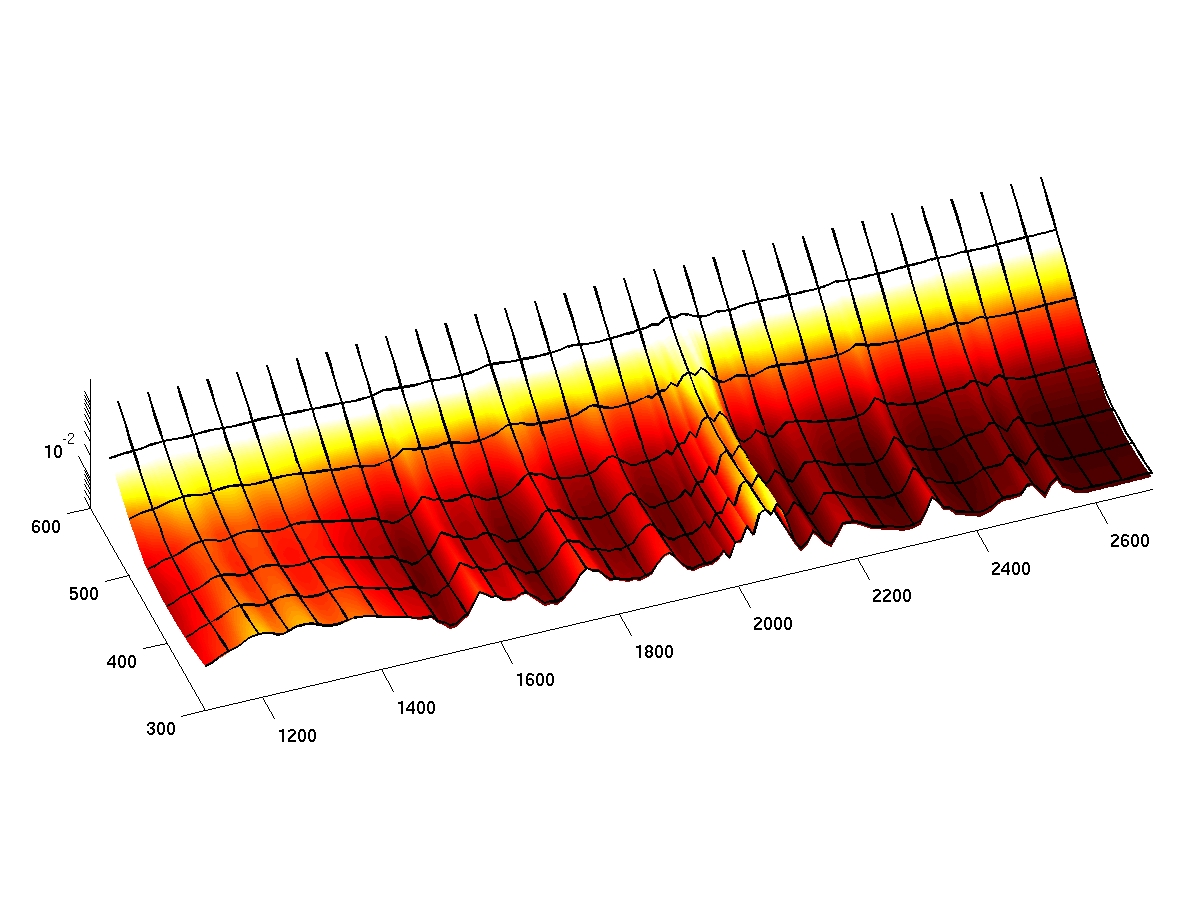

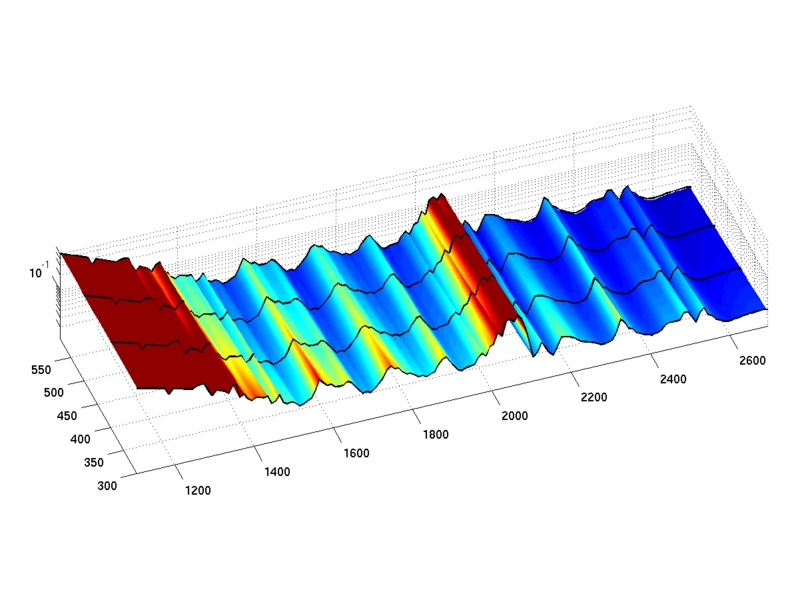

Figure 5.2: Inverse absorption and effective scattering lengths as a function of depth and

wavelength in the AHA ice model. Note the large dust peak at a depth of roughly 2050

m, with three smaller dust peaks at shallower depths.

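[Figure 5.3 block diagram: components shown include the SWAMP amplifier at the end of ~2 km of cable, the discriminator, the DMADD trigger, the TDC, the ADC with its gate, a 2 µs delay, a 10 µs delay for the TDC stop signal, and computer readout.]

Figure 5.3: Principal components of the AMANDA µ-DAQ (adapted from [67]).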

The data acquisition system (DAQ) described here is known as the muon DAQ, or µ-DAQ, and

operated through 2006. A parallel DAQ that also records waveform information, the TWR-DAQ,

has been fully operational since 2004. Only µ-DAQ data are used in this analysis. A simplified block diagram of the principal components is shown in fig. 5.3.

5.4 Calibration

Calibration of cable time delays and the corrections for dispersion are performed with in situ

laser sources. After calibration, the time resolution for the first 10 strings (those with coaxial or twisted-pair cables) is $\sigma_t \approx 5$ ns, and the time resolution for the optical fiber strings is $\sigma_t \approx 3.5$ ns [74].

The time delay calibration is cross-checked using down-going muon data.

The amplitude calibration uses single photoelectrons (SPEs) from low-energy down-going

muons as a “calibration source” of known charge. The uncalibrated amplitude distribution of SPEs

is fit as the sum of an exponential and a Gaussian distribution, with the peak of the Gaussian portion

representing one PE.


Chapter 6

Simulation and Data Selection

6.1 Simulation

In order to meaningfully compare our data with expectations from various signal hypothe-

ses, we must have a detailed Monte Carlo (MC) simulation of the atmospheric neutrinos and the

subsequent detector response. For the input atmospheric muon neutrino spectrum, we generate an

isotropic power-law flux with the nusim neutrino simulator [76] and then reweight the events to

standard flux predictions [16, 17]. As discussed in section 2.2.2, we have extended the predicted

fluxes to the TeV energy range via the neutrinoflux package, which fits the low-energy region

with the Gaisser parametrization [11] and then extrapolates above 700 GeV. We add standard oscil-

lations and/or non-standard flavor changes by weighting the events with the muon neutrino survival

probabilities in eqs. 2.13, 3.7, or 3.16.
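The reweighting step can be sketched as follows. Each event generated from a power-law spectrum receives the weight $\Phi_\mathrm{target}(E)/\Phi_\mathrm{gen}(E)$ multiplied by a $\nu_\mu$ survival probability, up to an overall normalization. In this Python sketch, the generation index ($E^{-2}$), the toy $E^{-3.7}$ target flux, the two-flavor survival probability with $\Delta m^2 = 2.4 \times 10^{-3}\ \mathrm{eV}^2$ and $\sin^2 2\theta = 1$, and the chord length $L \approx -2R_\mathrm{Earth}\cos\theta_\mathrm{zenith}$ are all assumptions standing in for nusim, the standard flux predictions [16, 17], and the full oscillation treatment. The function names are hypothetical.

```python
import math

R_EARTH_KM = 6371.0


def generated_spectrum(E):
    """Power-law spectrum assumed at generation time (E^-2 here, a placeholder)."""
    return E ** -2.0


def toy_target_flux(E, cos_zenith):
    """Placeholder for the standard atmospheric flux predictions: a simple E^-3.7 shape."""
    return E ** -3.7


def survival_standard(E, cos_zenith, dm2=2.4e-3, sin2_2theta=1.0):
    """Two-flavor vacuum nu_mu survival; L is the Earth chord for up-going events.

    Down-going events are given L = 0 here (production height ignored, an assumption).
    """
    L_km = -2.0 * R_EARTH_KM * cos_zenith if cos_zenith < 0 else 0.0
    return 1.0 - sin2_2theta * math.sin(1.267 * dm2 * L_km / E) ** 2


def event_weight(E, cos_zenith, flux=toy_target_flux, survival=survival_standard):
    """Relative weight of one simulated event, up to a global normalization."""
    return flux(E, cos_zenith) / generated_spectrum(E) * survival(E, cos_zenith)


print(event_weight(100.0, -1.0), event_weight(100.0, -0.2))
```

Passing a different `survival` function, for example an implementation of eq. 3.7 or eq. 3.16, is how non-standard flavor changes would enter the same weights without regenerating the simulation.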

Muon propagation and energy loss near and within the detector are simulated using mmc

[72]. Photon propagation through the ice, including scattering and absorption, is modeled with

photonics [77], incorporating the depth-dependent characteristic dust layers from the AHA ice

model (see section 5.2). The detector simulation amasim [78] records the photon hits, and then

identical filtering and reconstruction methods are performed on data and simulation. Cosmic-ray background rejection is verified at all but the highest quality levels by a parallel simulation chain fed with atmospheric muons from corsika [79]; at contamination levels of O(1%), which correspond to a rejection factor of $10^8$, the required computing time becomes prohibitive.

As we will discuss further in chapter 8, the absolute sensitivity of the OMs is one of the


larger systematic uncertainties. Determining the effect on our observables can only be achieved by

rescaling the sensitivity within amasim and re-running the detector simulation. We have generated

atmospheric neutrino simulation for 7 different optical module sensitivities, and for each set we reach

an effective livetime (see appendix D) of approximately 60 years.

6.2 Filtering and Track Reconstruction

Filtering the large amount of raw AMANDA-II data from trigger level to neutrino level is an

iterative procedure. First, known bad optical modules are removed, resulting in approximately 540

OMs for use in the analysis. Unstable or incomplete runs (“bad files”) are identified and excluded.

Hits caused by electrical crosstalk and isolated noise hits are also removed (“hit cleaning”).

The initial data volume is so large that only fast, first-guess algorithms can be run on all events.

These include the direct walk algorithm [74] and JAMS [80], both of which employ pattern-matching

algorithms to reconstruct muon track directions. If the reconstructed zenith angle is close to up-going (typically greater than 70° or 80°), the event is kept in the sample. This step is known as “level 1” or L1

filtering. “Level 2” and “level 3” filtering steps consist of more computationally intensive directional

reconstructions, along with another zenith angle cut using the more accurate results.

The best angular resolution is achieved by likelihood-based reconstructions utilizing the timing

information of the photon hits. The iterative unbiased likelihood (UL) reconstruction uses the timing

of the first hit in an OM, and maximizes the likelihood

$$L = \prod_i p(t_i \mid \vec{a}), \tag{6.1}$$

where ?a = (x, y, z, θ, φ) is the track hypothesis and t

i

is the timing residual for hit i. The timing

residual is the difference between the expected photon arrival time based only on geometry and the

actual arrival time, which in general is delayed by scattering in the ice. A parametrization of the

probability distribution function describing the time residuals of the first photon hits is given by the

Pandel function

1

[81], and this is convoluted with a Gaussian to include PMT jitter. A high-quality

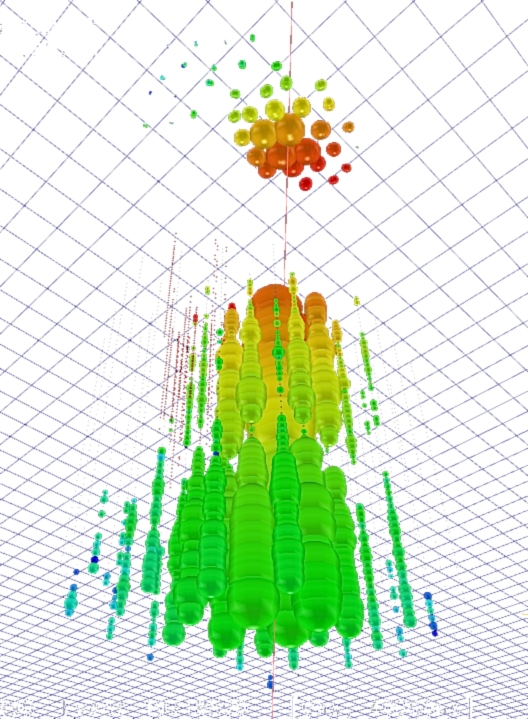

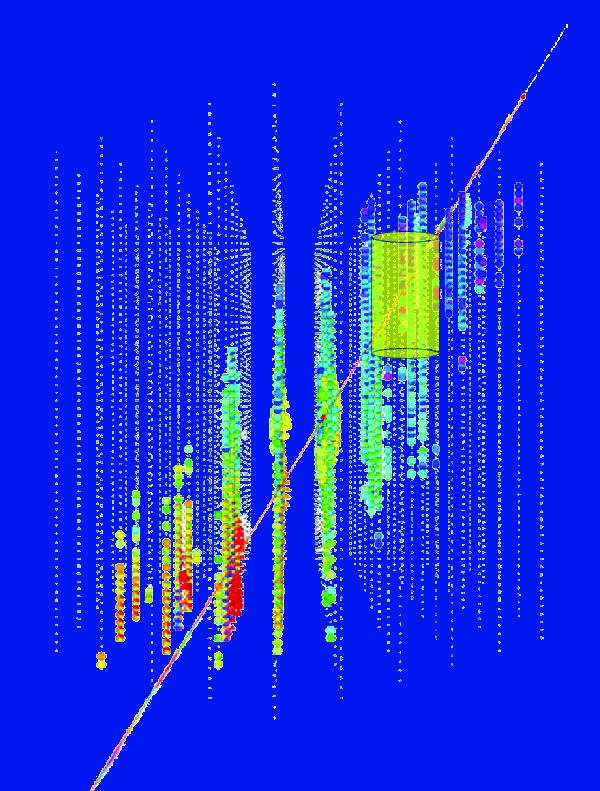

sample event is shown in fig. 6.1 along with the track from the UL reconstruction.
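The Pandel p.d.f. has a convenient closed form: after normalization it is a gamma distribution in the residual $t$ with shape $d/\lambda$ and rate $1/\tau + c_\mathrm{ice}/\lambda_a$, where $d$ is the distance from the track. The Python sketch below evaluates it and the log of eq. 6.1 for one track hypothesis. The parameter values ($\tau = 557$ ns, $\lambda = 33.3$ m, $\lambda_a = 98$ m) are the kind of values used for AMANDA ice but should be treated here as assumptions. The Gaussian convolution for PMT jitter is omitted, so non-positive residuals simply receive a small floor probability.

```python
import math

# Assumed Pandel parameters (placeholders of the kind used for AMANDA ice)
TAU_NS = 557.0
LAMBDA_M = 33.3
LAMBDA_A_M = 98.0
C_ICE_M_PER_NS = 0.2998 / 1.33     # speed of light in ice, taking n = 1.33


def pandel_pdf(t_res, d):
    """Normalized Pandel p.d.f. for time residual t_res (ns) at distance d (m).

    Written as a gamma distribution with shape d/lambda and rate 1/tau + c_ice/lambda_a.
    """
    if t_res <= 0.0:
        return 0.0
    k = d / LAMBDA_M
    rate = 1.0 / TAU_NS + C_ICE_M_PER_NS / LAMBDA_A_M
    log_p = k * math.log(rate) + (k - 1.0) * math.log(t_res) - rate * t_res - math.lgamma(k)
    return math.exp(log_p)


def log_likelihood(hits, floor=1e-12):
    """log of eq. 6.1 for one track hypothesis; hits are (residual_ns, distance_m) pairs."""
    return sum(math.log(max(pandel_pdf(t, d), floor)) for t, d in hits)


prompt = [(50.0, 50.0), (80.0, 40.0), (120.0, 60.0)]    # small residuals
delayed = [(3000.0, 50.0), (2500.0, 40.0), (4000.0, 60.0)]
print(log_likelihood(prompt), log_likelihood(delayed))
```

In a full reconstruction, the residuals and distances would be recomputed for each trial $\vec{a}$ and the log-likelihood maximized over the five track parameters.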

1

For this reason, the UL reconstruction is also commonly referred to as the “Pandel” reconstruction (even though

other reconstructions also use the Pandel p.d.f.). We use the terms interchangeably.

Figure 6.1: Sample AMANDA-II event from 2001, with number of OMs hit $N_\mathrm{ch} = 77$. Colors indicate the timing of the hits, with red being earliest. The sizes of the circles indicate the amplitude of the PMT signal. The line is the reconstructed track obtained from the unbiased likelihood or “Pandel” fit ($\sigma = 1.2^\circ$).

Because we are interested in rejecting atmospheric muons, it is useful to compare the UL

likelihood with a reconstruction using the prior hypothesis that the event is down-going. This

Bayesian likelihood (BL) reconstruction weights the likelihood with the prior probability density function (PDF) $P_\mu(\theta)$, a polynomial fit to the zenith-angle dependence of the muon flux at the depth of the AMANDA-II detector. The likelihood to maximize therefore becomes

$$L_\mathrm{Bayes} = P_\mu(\theta) \prod_i p(t_i \mid \vec{a}). \tag{6.2}$$

The log-likelihood ratio of the UL fit to the BL fit is then a test statistic which we can use as a

quality parameter.

6.3 Quality Cuts

After initial filtering and reconstruction (after level 3), the event sample is still dominated by mis-reconstructed atmospheric muons (down-going muons that are incorrectly reconstructed as up-going) rather than atmospheric neutrinos. In order to remove (or “cut”) these events, we use several variables that indicate the quality of the track reconstruction.

6.3.1 Point-source Cuts

As a starting point for these quality cuts, we use the criteria developed for the 2000-2004

AMANDA-II point source analysis [82]. These cuts are applicable to an atmospheric neutrino analysis

primarily because they are not optimized for a high-energy extraterrestrial neutrino flux, and so their

efficiency for lower-energy atmospheric neutrinos is still quite good. These cuts are shown in table

6.1.

6.3.1.1 Likelihood Ratio

As described in section 6.2, the log-likelihood ratio $\log(L_{\mathrm{UL}}/L_{\mathrm{BL}})$ compares the relative likelihood of the unbiased “Pandel” fit (which, because of the zenith-angle cuts, indicates that the track is up-going or close to it) and the Bayesian down-going fit. The larger this ratio, the less likely the event is to

be down-going. The cut threshold must depend on the zenith angle because this test becomes less powerful near the horizon, where the up-going and down-going tracks may be separated by only a small angle.

Table 6.1: Initial quality cuts, originally designed for the 2000-2004 point-source analysis. $x = \sin\delta_{\mathrm{UL}}$, where $\delta$ is the reconstructed declination, and $\Theta(\cdot)$ is the unit step function. Quality variables are described in the text.

| Description | Criterion (to keep) |
|---|---|
| Likelihood ratio | $\log(L_{\mathrm{UL}}/L_{\mathrm{BL}}) > 34 + 25\,[1 - \Theta(x - 0.15)]\,(x - 0.15)$ |
| Smoothness | $\lvert S_{\mathrm{Phit,UL}} \rvert < 0.36$ and $S_{\mathrm{Phit,UL}} \neq 0$ |
| Paraboloid error | $\sigma < 3.2^\circ - 4^\circ \cdot \Theta(x - 0.75) \cdot (x - 0.75)$ and $\sigma \neq 0$ |
| Flariness | $F_{\text{TOT-short}} + F_{\mathrm{B10}} + F_{\mathrm{B10}} < 10$ |
| Stability period | 2000: day ∈ [47, 309]; 2001: day ∈ [44, 293]; 2002: day ∈ [43, 323]; 2003: day ∈ [43, 315]; 2004: day ∈ [43, 309]; 2005-06: included in file selection |
| File size | 2000-04: runs/file > 6 |
| Bayesian bug fix | 2005-06: $\theta_{\mathrm{BL}} < 90^\circ$ |
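The event-level criteria in table 6.1 can be written as a simple predicate. This is a minimal sketch, assuming the quality variables have already been computed per event; the argument names are hypothetical, and `flariness_sum` stands for the summed flariness quantity in the table. The run-selection criteria (stability period, file size, and the 2005-06 Bayesian bug fix) are omitted.

```python
def step(u: float) -> float:
    """Unit step function Theta(u). Its value at u == 0 is irrelevant here,
    because every use multiplies it by u."""
    return 1.0 if u > 0.0 else 0.0


def llh_ratio_threshold(x: float) -> float:
    """Minimum log(L_UL / L_BL) to keep an event, with x = sin(delta_UL).
    Below x = 0.15 (near the horizon) the threshold is relaxed linearly."""
    return 34.0 + 25.0 * (1.0 - step(x - 0.15)) * (x - 0.15)


def max_paraboloid_sigma_deg(x: float) -> float:
    """Maximum paraboloid error [deg]; tightened linearly above x = 0.75."""
    return 3.2 - 4.0 * step(x - 0.75) * (x - 0.75)


def passes_point_source_cuts(x: float, llh_ratio: float, smoothness: float,
                             sigma_deg: float, flariness_sum: float) -> bool:
    """True if an event survives the quality cuts of table 6.1
    (hypothetical argument names; run-selection cuts not included)."""
    return (llh_ratio > llh_ratio_threshold(x)
            and abs(smoothness) < 0.36 and smoothness != 0.0
            and sigma_deg < max_paraboloid_sigma_deg(x) and sigma_deg != 0.0
            and flariness_sum < 10.0)
```

For example, at the horizon ($x = 0$) the likelihood-ratio threshold drops from 34 to 30.25, while for nearly vertical tracks ($x = 1$) the paraboloid cut tightens from 3.2° to 2.2°.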

6.3.1.2 Smoothness

The smoothness of a track hypothesis is a topological parameter varying from -1 to 1 that

measures the evenness of the hits along the track. Positive values of the smoothness indicate hits

concentrated at the beginning of the track, while negative values indicate hits concentrated at the

end. A small absolute value of the smoothness indicates that the hits are more evenly distributed, supporting the fit hypothesis.
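The sign convention above can be checked with a sketch of a commonly used generic smoothness definition, assumed here for illustration; it is not the $P_{\mathrm{hit}}$ variant used in this analysis. Hits are projected onto the track and sorted, and for the $j$-th of $N$ hits $S_j = (j-1)/(N-1) - l_j/l_N$, where $l_j$ is the distance along the track from the first hit. The smoothness is the $S_j$ of largest magnitude.

```python
def smoothness(projected_positions):
    """Generic track smoothness (assumed definition, not the Phit variant).

    projected_positions: hit positions projected onto the track [m].
    Returns the S_j of largest magnitude, in [-1, 1]. Positive values mean
    hits bunch toward the start of the track, negative toward the end.
    """
    pos = sorted(projected_positions)
    n = len(pos)
    if n < 2:
        return 0.0  # undefined for fewer than two hits
    length = pos[-1] - pos[0]
    if length <= 0.0:
        return 0.0
    best = 0.0
    for j, p in enumerate(pos):  # j is zero-based, so j plays the role of (j - 1)
        s_j = j / (n - 1) - (p - pos[0]) / length
        if abs(s_j) > abs(best):
            best = s_j
    return best


even = smoothness([0.0, 10.0, 20.0, 30.0])      # evenly spaced hits
early = smoothness([0.0, 1.0, 2.0, 100.0])     # hits bunched at the start
late = smoothness([0.0, 98.0, 99.0, 100.0])    # hits bunched at the end
```

Evenly spaced hits give a smoothness of essentially zero, while bunching at the start or end of the track drives the value toward $+1$ or $-1$.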

The particular implementation of the smoothness calculation that we use, the $P_{\mathrm{hit}}$ smoothness or $S_{\mathrm{Phit}}$, only considers direct (unscattered) hits within a 50 m cylinder around the UL track. It then compares the number of hits in the cylinder to the number expected for a minimum-ionizing muon (see ref. [80] for more detail). In table 6.1, we also explicitly exclude events with exactly zero smoothness; in an early implementation, this value indicated that there were no direct hits within the cylinder.

6.3.1.3 Paraboloid Error

The paraboloid error, or angular resolution parameter, is an estimate of the 1σ error on the